This is a Plain English Papers summary of a research paper called Repairing Catastrophic-Neglect in Text-to-Image Diffusion Models via Attention-Guided Feature Enhancement. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

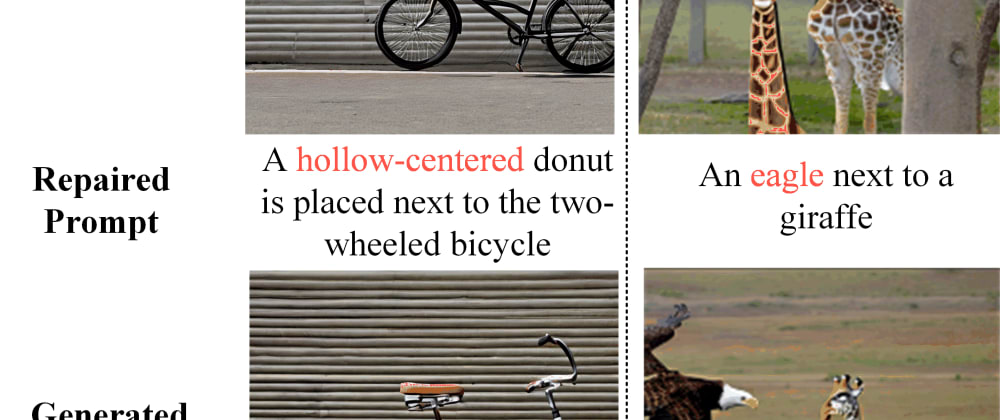

- This paper explores techniques to address the issue of "catastrophic-neglect" in text-to-image diffusion models, which occurs when the model struggles to generate images that accurately reflect the provided text.

- The researchers propose an "Attention-Guided Feature Enhancement" (AGFE) approach to improve the alignment between the generated images and the input text.

- The AGFE method aims to enhance the relevant visual features in the generated images based on the attention mechanism, helping the model better capture the semantic relationships between the text and the desired image.

Plain English Explanation

The paper focuses on a problem in text-to-image AI models, where the generated images may not always accurately reflect the provided text. This issue is known as "catastrophic-neglect," and it can be frustrating for users who expect the AI to generate images that closely match their textual descriptions.

To address this problem, the researchers developed a new technique called "Attention-Guided Feature Enhancement" (AGFE). The key idea behind AGFE is to use the attention mechanism, which helps the AI model understand the relationships between different parts of the text, to enhance the relevant visual features in the generated images.

Imagine you ask the AI to generate an image of a "cute, fluffy dog." The attention mechanism would identify the important elements of the text, like "cute," "fluffy," and "dog," and use that information to ensure the generated image has the appropriate visual characteristics, such as a soft, furry appearance and a canine shape. This helps the AI model create images that are more closely aligned with the textual description, reducing the issue of "catastrophic-neglect."

By improving the relationship between the text and the generated images, the AGFE approach can make text-to-image AI models more useful and user-friendly, allowing them to better translate our ideas and descriptions into visual representations.

Technical Explanation

The paper introduces an "Attention-Guided Feature Enhancement" (AGFE) approach to address the problem of "catastrophic-neglect" in text-to-image diffusion models. Catastrophic-neglect is a well-known issue in these models, where the generated images may fail to accurately reflect the provided text description.

The core idea behind AGFE is to leverage the attention mechanism, which helps the model understand the semantic relationships between different parts of the text, to enhance the relevant visual features in the generated images. This builds on previous work on using attention to improve text-to-image generation and personalization.

The AGFE method works by first extracting visual features from the generated image and text features from the input text. It then uses the attention weights to identify the most relevant text features and selectively enhances the corresponding visual features. This "object-attribute binding" approach has been explored in other text-to-image generation research.

The researchers evaluate the AGFE approach on several text-to-image benchmarks and find that it outperforms existing methods in terms of improving the alignment between the generated images and the input text descriptions. The work builds on a growing body of research on enhancing text-to-image generation capabilities.

Critical Analysis

The paper presents a promising approach to addressing the "catastrophic-neglect" issue in text-to-image diffusion models. However, the researchers acknowledge that their method has some limitations:

- The AGFE approach is designed to work with specific text-to-image diffusion models and may not be easily transferable to other architectures or modalities.

- The performance improvements, while significant, are still limited, and there is room for further refinement and optimization of the attention-guided feature enhancement.

- The researchers only evaluate the method on standard benchmarks and do not explore real-world applications or user studies to assess the practical impact of their approach.

Additionally, it would be valuable to see further exploration of the underlying mechanisms and biases in text-to-image models that contribute to the "catastrophic-neglect" problem. Understanding these issues more deeply could lead to more robust and generalizable solutions.

Overall, the AGFE approach represents an important step forward in improving the alignment between text and generated images, but continued research and innovation will be necessary to fully address the challenges in this rapidly evolving field.

Conclusion

This paper presents a novel "Attention-Guided Feature Enhancement" (AGFE) technique to address the issue of "catastrophic-neglect" in text-to-image diffusion models. The AGFE method leverages the attention mechanism to selectively enhance the relevant visual features in the generated images, improving the alignment between the text descriptions and the resulting images.

The researchers demonstrate the effectiveness of AGFE through experiments on several text-to-image benchmarks, showing that it outperforms existing approaches. While the method has some limitations, it represents an important advancement in the ongoing efforts to enhance the capabilities and real-world applicability of text-to-image generation systems.

As the field of text-to-image AI continues to evolve, the AGFE approach and similar attention-guided techniques may play a crucial role in developing more robust and user-friendly models that can reliably translate our ideas and descriptions into compelling visual representations.

If you enjoyed this summary, consider subscribing to the AImodels.fyi newsletter or following me on Twitter for more AI and machine learning content.

Top comments (0)