This is a Plain English Papers summary of a research paper called The Geometry of Categorical and Hierarchical Concepts in Large Language Models. If you like these kinds of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

• This paper explores the geometry of categorical and hierarchical concepts in large language models, which are AI systems trained on vast amounts of text data to understand and generate human language.

• The researchers investigate how these models represent and organize different types of concepts, including broad categories like "animal" and more specific subcategories like "dog" and "cat."

• They use techniques from topology and geometry to analyze the structure of these conceptual representations, and the relationships between them, in the models' internal representation spaces.

Plain English Explanation

Large language models like GPT-3 and BERT have shown remarkable abilities to understand and generate human language. However, how these models internally represent and organize different concepts, from broad categories to specific examples, is not well understood.

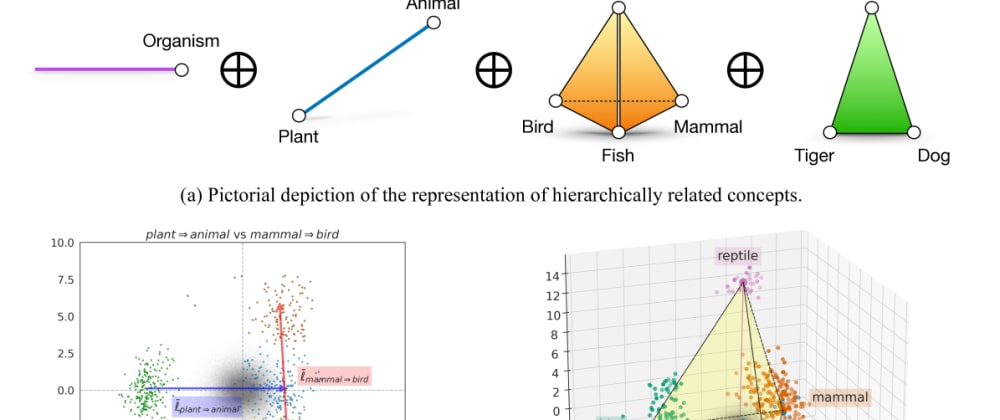

This research paper dives into the geometric and topological properties of how these models represent and structure conceptual knowledge. The researchers find that broader categorical concepts like "animal" tend to occupy larger, more diffuse regions in the models' internal representation spaces. Meanwhile, more specific concepts like "dog" and "cat" are represented by tighter, more concentrated clusters.

Interestingly, the researchers also observe clear hierarchical relationships between these concepts, where subcategories like "dog" and "cat" are embedded within the broader "animal" concept. This mirrors the way humans organize knowledge into taxonomies and ontologies.

Using mathematical techniques such as manifold learning and persistent homology, the researchers extract and visualize these conceptual structures within the models. This offers insight into how large language models represent meaning in a hierarchical, structured way.

Technical Explanation

The paper begins by establishing that large language models, despite their impressive linguistic capabilities, have an internal representational structure that is not well understood. The researchers hypothesize that these models may possess rich geometric and topological properties that organize conceptual knowledge in a hierarchical fashion.

To investigate this, the authors use a variety of techniques from topology and geometry. First, they leverage manifold learning algorithms to extract low-dimensional manifold structures from the high-dimensional vector representations of concepts within the models. This reveals that broader categorical concepts occupy larger, more diffuse regions, while specific subcategories form tighter, more concentrated clusters.
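The "diffuse versus concentrated" contrast can be made concrete with a small measurement. The sketch below is not the authors' pipeline and it is not manifold learning; it only computes the mean distance to the centroid for synthetic Gaussian clusters. The cluster centers, the dimension, the noise scale, and the helper names `centroid`, `spread`, and `sample_cluster` are all assumptions made for illustration, not values or functions from the paper.

```python
import math
import random


def centroid(points):
    """Coordinate-wise mean of a list of equal-length vectors."""
    dim = len(points[0])
    return [sum(p[i] for p in points) / len(points) for i in range(dim)]


def spread(points):
    """Mean Euclidean distance from each point to the centroid."""
    c = centroid(points)
    return sum(math.dist(p, c) for p in points) / len(points)


def sample_cluster(center, scale, n, rng):
    """Draw n synthetic vectors from an isotropic Gaussian around center."""
    return [[rng.gauss(mu, scale) for mu in center] for _ in range(n)]


rng = random.Random(0)  # fixed seed so the run is repeatable
dim = 8                 # toy dimension, far smaller than a real model's

# Hypothetical subcategory centers, offset along one arbitrary axis.
dog_center = [0.0] * dim
cat_center = [4.0] + [0.0] * (dim - 1)

dog = sample_cluster(dog_center, 0.5, 200, rng)
cat = sample_cluster(cat_center, 0.5, 200, rng)
animal = dog + cat  # the broad category is the union of its subcategories

for name, pts in [("dog", dog), ("cat", cat), ("animal", animal)]:
    print(f"{name:>6}: spread = {spread(pts):.2f}")
```

With real data, `dog`, `cat`, and `animal` would hold representation vectors taken from a model rather than random samples. The union of two separated clusters necessarily has a larger spread than either cluster alone, which is the geometric picture this paragraph describes.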

Next, the researchers apply persistent homology, a technique from algebraic topology, to uncover the hierarchical relationships between these conceptual representations. They find clear topological structures that mirror human taxonomic knowledge, with subcategories nesting within broader categories.
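To show what persistent homology can reveal about nesting, here is a minimal sketch of its simplest case: 0-dimensional persistence, which tracks when separate connected components merge as a distance threshold grows. This is equivalent to single-linkage clustering. The summary does not say how the authors configured their analysis, so the toy 2D points, the group labels in the comments, and the function name `h0_death_times` are assumptions for illustration only.

```python
import itertools
import math


def h0_death_times(points):
    """Merge distances of 0-dimensional persistent homology.

    Every point starts as its own component at scale 0. A component
    "dies" when the growing distance threshold first connects it to
    another component (Kruskal's algorithm on the complete graph).
    """
    parent = list(range(len(points)))

    def find(i):
        # Union-find root lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in itertools.combinations(range(len(points)), 2)
    )
    deaths = []
    for dist, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:  # two components merge, so one bar ends here
            parent[ri] = rj
            deaths.append(dist)
    return deaths  # ascending; the last surviving component never dies


# Hand-placed toy points: two nearby groups and one distant group.
toy_points = [
    (0.0, 0.0), (0.1, 0.0), (0.0, 0.1),     # "dog"-like group
    (1.0, 0.0), (1.1, 0.0), (1.0, 0.1),     # "cat"-like group
    (10.0, 0.0), (10.1, 0.0), (10.0, 0.1),  # unrelated "plant"-like group
]

deaths = h0_death_times(toy_points)
print([round(d, 2) for d in deaths])
```

The merge distances fall into three scales: small ones inside each tight group, a medium one where the two nearby groups join, and a large one where the distant group joins. Gaps between those scales are the kind of signal that would indicate subcategories nesting inside a broader category.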

The paper also explores how these geometric and topological properties relate to the models' ability to reason about and manipulate concepts in downstream tasks. The authors provide visualizations and quantitative analyses to support their findings.

Critical Analysis

The researchers present a compelling and rigorous analysis of the geometric and topological properties underlying the conceptual representations in large language models. By leveraging advanced mathematical techniques, they are able to uncover structural insights that were previously hidden within these complex systems.

One potential limitation of the study is its reliance on a small number of language models (the paper reports experiments on open-weight models such as Gemma) and a limited set of conceptual categories. It would be valuable to extend the analysis to a broader range of models and concept types to test how well the findings generalize.

Additionally, while the paper provides evidence for the hierarchical organization of concepts, it does not fully address the question of how this structure emerges during the training process. Further research could explore the developmental dynamics that lead to the formation of these conceptual geometries.

Overall, this study makes an important contribution to our understanding of how large language models represent and organize knowledge. The insights could have significant implications for fields like commonsense reasoning, semantic parsing, and knowledge extraction from these powerful AI systems.

Conclusion

This paper presents a novel investigation into the geometric and topological structure of conceptual representations in large language models. The researchers find that broader categorical concepts occupy larger, more diffuse regions, while specific subcategories form tighter, more concentrated clusters. Importantly, they also uncover clear hierarchical relationships between these conceptual representations, mirroring the way humans organize knowledge.

By leveraging advanced mathematical techniques, the authors are able to shed light on the complex inner workings of these powerful AI systems. The insights gained could have significant implications for our understanding of how large language models represent and reason about meaning, with potential applications in areas like commonsense reasoning, knowledge extraction, and semantic parsing.
