Data structures are an essential part of computer science and programming. They allow us to organise and store data efficiently, making it easier and faster to access and manipulate. One such data structure is the AVL tree, which is a self-balancing binary search tree. In this article, we will discuss AVL trees in detail, including their properties, balancing factor, rotation, implementation, time complexity, space complexity, advantages, disadvantages, when AVL trees might [not] be the best choice for your data.

What is an AVL Tree?

An AVL tree is a binary search tree that is balanced. This means that the heights of the two subtrees of any node differ by at most one. In other words, an AVL tree is a binary search tree in which the difference between the heights of the left and right subtrees of any node is at most one. AVL trees were invented by Georgy Adelson-Velsky and Evgenii Landis in 1962 and were the first dynamically balanced trees to be proposed.

Properties of AVL Trees

AVL trees have the following properties:

The height of the tree is at most O(log n), where n is the number of nodes in the tree.

For every node in the tree, the heights of the left and right subtrees differ by at most one.

The left and right subtrees of every node are themselves AVL trees.

Balancing Factor

In an AVL tree, the balancing factor of a node is the difference between the height of its left subtree and the height of its right subtree. Mathematically, it can be represented as:

balanceFactor = height(leftSubtree) - height(rightSubtree)

The balancing factor can be either -1, 0, or 1. If the balancing factor of a node is -1, it means that the node is left-heavy. If the balancing factor is 0, it means that the node is balanced. If the balancing factor is 1, it means that the node is right-heavy.

The AVL tree uses the balancing factor to maintain a balanced tree structure. Whenever a new node is added to the tree, or an existing node is removed, the AVL tree performs rotation operations to ensure that the balancing factor of every node in the tree is either -1, 0, or 1. This ensures that the height of the tree remains logarithmic in proportion to the number of nodes in the tree.

Rotations

In an AVL tree, there are two types of rotation operations that can be performed to balance the tree

Left Rotation

A left rotation is performed when a node becomes right-heavy. It involves moving the node to its left child’s position, and the left child becoming the new root of the subtree. This operation ensures that the left child’s height is increased by one, and the right child’s height is decreased by one.

Right Rotation

A right rotation is performed when a node becomes left-heavy. It involves moving the node to its right child’s position, and the right child becoming the new root of the subtree. This operation ensures that the right child’s height is increased by one, and the left child’s height is decreased by one.

These two operations can be used in combination to perform double rotations, which are needed in some cases to balance the tree. There are two types of double rotations:

Left-Right Rotation

This is performed when a node becomes left-heavy, and its left child becomes right-heavy. It involves first performing a left rotation on the left child, and then a right rotation on the original node.

Right-Left Rotation

This is performed when a node becomes right-heavy, and its right child becomes left-heavy. It involves first performing a right rotation on the right child, and then a left rotation on the original node.

By performing these rotation operations, an AVL tree maintains a balanced structure, ensuring that the height of the tree remains logarithmic in proportion to the number of nodes in the tree.

Implementation

AVL trees can be implemented in various programming languages. The implementation typically consists of the following operations:

Insertion

This operation inserts a new node into the tree while maintaining the balance of the tree.

Algorithm

Create a new node with the given value to be inserted.

If the tree is empty, make the new node the root of the tree.

If the tree is not empty, perform a binary search to find the appropriate position for the new node in the tree.

Insert the new node at the appropriate position as in a regular binary search tree.

Starting from the newly inserted node, move up the tree towards the root, updating the height of each node and checking the balancing factor of each node.

If the balancing factor of any node becomes greater than 1 or less than -1, perform the appropriate rotation(s) to balance the tree.

Continue moving up the tree until the root node is reached, and the tree is balanced.

Pseudocode

function insert(node, value):

if node is null:

create a new node with value

return the new node

if value < node.value:

node.left = insert(node.left, value)

else if value > node.value:

node.right = insert(node.right, value)

else:

the value already exists in the tree, return the node

// update height of the current node

node.height = 1 + max(height(node.left), height(node.right))

// check the balancing factor of the current node

balance = getBalance(node)

// perform rotations to balance the tree if necessary

if balance > 1 and value < node.left.value:

return rightRotate(node)

if balance < -1 and value > node.right.value:

return leftRotate(node)

if balance > 1 and value > node.left.value:

node.left = leftRotate(node.left)

return rightRotate(node)

if balance < -1 and value < node.right.value:

node.right = rightRotate(node.right)

return leftRotate(node)

// return the (unchanged) node pointer

return node

In this pseudocode, getBalance() is a helper function that returns the balancing factor of a given node. leftRotate() and rightRotate() are functions that perform left and right rotations, respectively.

Deletion

This operation removes a node from the tree while maintaining the balance of the tree.

Algorithm

Perform a binary search to find the node to be deleted.

If the node is a leaf node or has only one child, delete the node and replace it with its child (if any). If the node has two children, proceed to step 3.

Find the node’s in-order successor (the smallest node in its right subtree) or in-order predecessor (the largest node in its left subtree).

Replace the node to be deleted with its in-order successor or predecessor.

Recursively delete the in-order successor or predecessor from its new location.

Starting from the parent of the deleted node, move up the tree towards the root, updating the height of each node and checking the balancing factor of each node.

If the balancing factor of any node becomes greater than 1 or less than -1, perform the appropriate rotation(s) to balance the tree.

Continue moving up the tree until the root node is reached, and the tree is balanced.

Pseudocode

function delete(node, value):

if node is null:

the value does not exist in the tree, return null

if value < node.value:

node.left = delete(node.left, value)

else if value > node.value:

node.right = delete(node.right, value)

else:

// node is the one to be deleted

if node.left is null or node.right is null:

if node.left is null:

temp = node.right

else:

temp = node.left

if temp is null:

// node is a leaf node

temp = node

node = null

else:

// node has only one child

node = temp

free(temp)

else:

// node has two children

temp = minValueNode(node.right)

node.value = temp.value

node.right = delete(node.right, temp.value)

if node is null:

return node

// update height of the current node

node.height = 1 + max(height(node.left), height(node.right))

// check the balancing factor of the current node

balance = getBalance(node)

// perform rotations to balance the tree if necessary

if balance > 1 and getBalance(node.left) >= 0:

return rightRotate(node)

if balance > 1 and getBalance(node.left) < 0:

node.left = leftRotate(node.left)

return rightRotate(node)

if balance < -1 and getBalance(node.right) <= 0:

return leftRotate(node)

if balance < -1 and getBalance(node.right) > 0:

node.right = rightRotate(node.right)

return leftRotate(node)

// return the (possibly) updated node pointer

return node

In this pseudocode, minValueNode() is a helper function that returns the node with the minimum value in a given subtree. getBalance() is a helper function that returns the balancing factor of a given node. leftRotate() and rightRotate() are functions that perform left and right rotations, respectively.

Searching

This operation searches for a node with a given key in the tree.

Algorithm

Start at the root node.

If the root node is null, return null (the value is not in the tree).

If the root node’s value matches the target value, return the root node.

If the target value is less than the root node’s value, recursively search the left subtree.

If the target value is greater than the root node’s value, recursively search the right subtree.

Pseudocode

function search(node, value):

if node is null or node.value is equal to value:

return node

else if value is less than node.value:

return search(node.left, value)

else:

return search(node.right, value)

In this pseudocode, node is the root of the subtree to search, and value is the target value. If the target value is found, the algorithm returns the node containing that value. Otherwise, it returns null.

The implementation of AVL trees is complex and requires careful attention to detail. The balance of the tree must be maintained during every operation, and rotations must be performed when necessary to balance the tree.

AVL Tree Implementation Using Java With Operations And Traversals

class Node {

int key, height;

Node left, right;

Node(int d) {

key = d;

height = 1;

}

}

class AVLTree {

Node root;

int height(Node N) {

if (N == null)

return 0;

return N.height;

}

int max(int a, int b) {

return (a > b) ? a : b;

}

Node rightRotate(Node y) {

Node x = y.left;

Node T2 = x.right;

x.right = y;

y.left = T2;

y.height = max(height(y.left), height(y.right)) + 1;

x.height = max(height(x.left), height(x.right)) + 1;

return x;

}

Node leftRotate(Node x) {

Node y = x.right;

Node T2 = y.left;

y.left = x;

x.right = T2;

x.height = max(height(x.left), height(x.right)) + 1;

y.height = max(height(y.left), height(y.right)) + 1;

return y;

}

int getBalance(Node N) {

if (N == null)

return 0;

return height(N.left) - height(N.right);

}

Node minValueNode(Node node) {

Node current = node;

while (current.left != null)

current = current.left;

return current;

}

Node insert(Node node, int key) {

if (node == null)

return (new Node(key));

if (key < node.key)

node.left = insert(node.left, key);

else if (key > node.key)

node.right = insert(node.right, key);

else

return node;

node.height = 1 + max(height(node.left), height(node.right));

int balance = getBalance(node);

if (balance > 1 && key < node.left.key)

return rightRotate(node);

if (balance < -1 && key > node.right.key)

return leftRotate(node);

if (balance > 1 && key > node.left.key) {

node.left = leftRotate(node.left);

return rightRotate(node);

}

if (balance < -1 && key < node.right.key) {

node.right = rightRotate(node.right);

return leftRotate(node);

}

return node;

}

Node deleteNode(Node root, int key) {

if (root == null)

return root;

if (key < root.key)

root.left = deleteNode(root.left, key);

else if (key > root.key)

root.right = deleteNode(root.right, key);

else {

if ((root.left == null) || (root.right == null)) {

Node temp = null;

if (temp == root.left)

temp = root.right;

else

temp = root.left;

if (temp == null) {

temp = root;

root = null;

} else

root = temp;

} else {

Node temp = minValueNode(root.right);

root.key = temp.key;

root.right = deleteNode(root.right, temp.key);

}

}

if (root == null)

return root;

root.height = max(height(root.left), height(root.right)) + 1;

int balance = getBalance(root);

if (balance > 1 && getBalance(root.left) >= 0)

return rightRotate(root);

if (balance > 1 && getBalance(root.left) < 0) {

root.left = leftRotate(root.left);

return rightRotate(root);

}

if (balance < -1 && getBalance(root.right) <= 0)

return leftRotate(root);

if (balance < -1 && getBalance(root.right) > 0) {

root.right = rightRotate(root.right);

return leftRotate(root);

}

return root;

}

Node search(Node node, int key) {

if (node == null || node.key == key)

return node;

if (node.key > key)

return search(node.left, key);

return search(node.right, key);

}

void preOrder(Node node) {

if (node != null) {

System.out.print(node.key + " ");

preOrder(node.left);

preOrder(node.right);

}

}

void inOrder(Node node) {

if (node != null) {

inOrder(node.left);

System.out.print(node.key + " ");

inOrder(node.right);

}

}

void postOrder(Node node) {

if (node != null) {

postOrder(node.left);

postOrder(node.right);

System.out.print(node.key + " ");

}

}

public static void main(String[] args) {

AVLTree tree = new AVLTree();

tree.root = tree.insert(tree.root, 10);

tree.root = tree.insert(tree.root, 20);

tree.root = tree.insert(tree.root, 30);

tree.root = tree.insert(tree.root, 40);

tree.root = tree.insert(tree.root, 50);

tree.root = tree.insert(tree.root, 25);

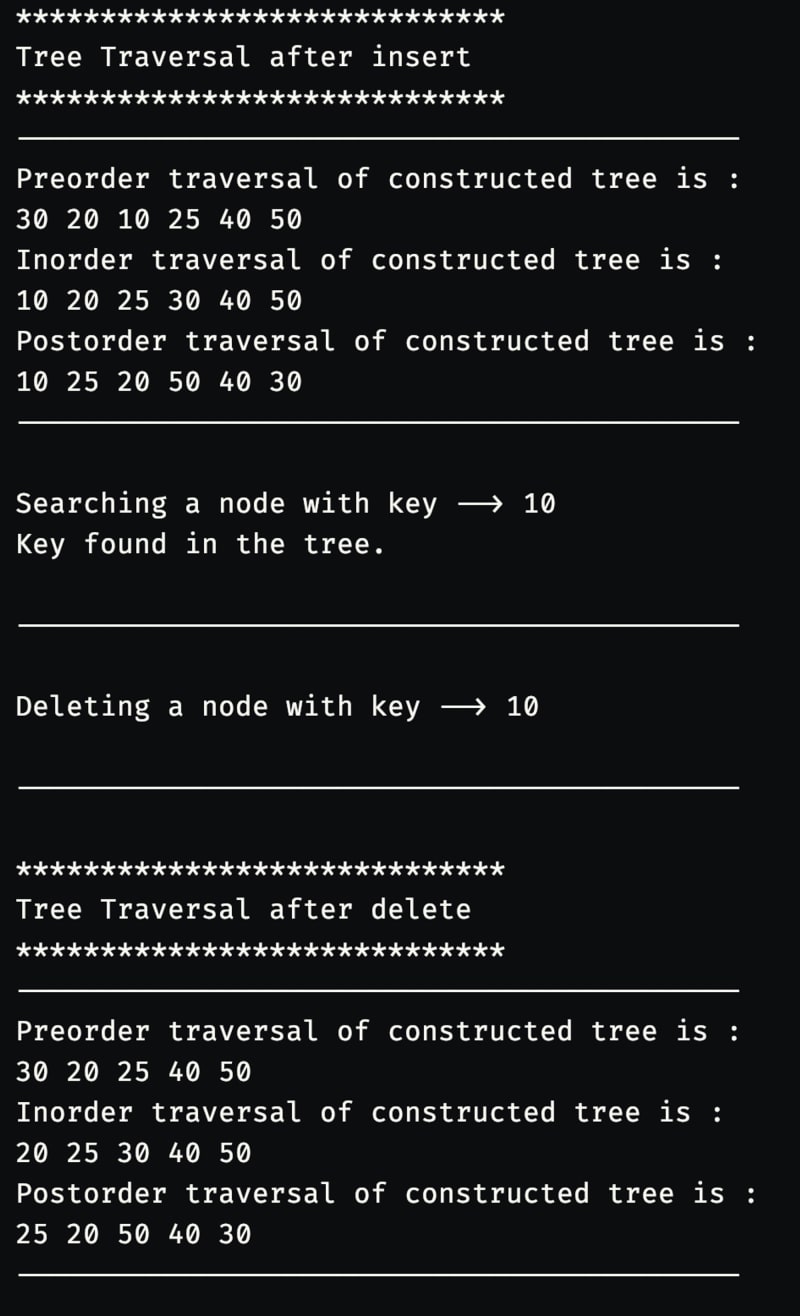

System.out.println("*****************************");

System.out.println("Tree Traversal after insert");

System.out.println("*****************************");

System.out.println("-------------------------------------------");

System.out.println("Preorder traversal of constructed tree is : ");

tree.preOrder(tree.root);

System.out.println("\nInorder traversal of constructed tree is : ");

tree.inOrder(tree.root);

System.out.println("\nPostorder traversal of constructed tree is : ");

tree.postOrder(tree.root);

System.out.println("\n-------------------------------------------\n");

System.out.println("Searching a node with key --> " + 10);

Node searchResult = tree.search(tree.root, 10);

if (searchResult != null)

System.out.println("Key found in the tree.");

else

System.out.println("Key not found in the tree.");

System.out.println("\n-------------------------------------------\n");

System.out.println("Deleting a node with key --> " + 10);

tree.deleteNode(tree.root, 10);

System.out.println("\n-------------------------------------------\n");

System.out.println("*****************************");

System.out.println("Tree Traversal after delete");

System.out.println("*****************************");

System.out.println("-------------------------------------------");

System.out.println("Preorder traversal of constructed tree is : ");

tree.preOrder(tree.root);

System.out.println("\nInorder traversal of constructed tree is : ");

tree.inOrder(tree.root);

System.out.println("\nPostorder traversal of constructed tree is : ");

tree.postOrder(tree.root);

System.out.println("\n-------------------------------------------\n");

}

}

Time Complexity

The time complexity of AVL trees for basic operations is as follows:

Search

The time complexity of search operation in an AVL tree is O(log n), where n is the number of nodes in the tree.

Insert

The time complexity of insert operation in an AVL tree is O(log n), where n is the number of nodes in the tree. In the worst case, when the AVL tree needs to be rebalanced, the time complexity is O(log n) as well.

Delete

The time complexity of delete operation in an AVL tree is O(log n), where n is the number of nodes in the tree. In the worst case, when the AVL tree needs to be rebalanced, the time complexity is O(log n) as well.

Traversal

The time complexity of tree traversal in an AVL tree is O(log n), where n is the number of nodes in the tree.

Overall, the time complexity of basic operations in AVL trees is efficient and comparable to other self-balancing trees like Red-Black trees.

Space Complexity

The space complexity of AVL trees is O(n), where n is the number of nodes in the tree. This is because each node in an AVL tree contains data, pointers to its left and right children, and a balance factor. The balance factor is a single bit of information that indicates whether the tree is balanced or not. Therefore, the space required for each node is constant.

In addition to the space required for each node, AVL trees also require space for pointers to the root node and any temporary variables used during operations like insert or delete. However, the space required for these variables is negligible compared to the space required for the nodes themselves.

Overall, the space complexity of AVL trees is proportional to the number of nodes in the tree, making them a space-efficient data structure. However, it’s important to note that AVL trees can have a higher memory overhead than simpler data structures like linked lists or arrays.

Advantages

Efficient operations

AVL trees have a guaranteed logarithmic time complexity for operations such as insert, delete and search, making them efficient for large datasets.

Balanced structure

AVL trees maintain a balanced structure, ensuring that the tree height is minimised and the operations are efficient.

Self-balancing

AVL trees are self-balancing, which means that after an operation is performed, the tree is automatically rebalanced, eliminating the need for manual rebalancing

Disadvantages

Space overhead

AVL trees require additional space to store the balance factors, which can add significant overhead for large datasets.

Complex implementation

Implementing AVL trees can be complex, requiring careful consideration of balance factors and rotation operations.

Slower insertion

While AVL trees have efficient search and deletion operations, insertion can be slower due to the need for rebalancing.

When AVL Trees Might Be The Best Choice For Your Data

AVL trees are a good choice when the dataset is large and efficient search, delete and insert operations are needed. Here are some specific situations when choosing AVL trees would be appropriate

Large datasets

AVL trees are optimised for large datasets and offer efficient search, delete and insert operations with logarithmic time complexity. Therefore, they are a good choice when dealing with large datasets.

Real-time applications

AVL trees are self-balancing, which means that they are ideal for applications that require real-time updates. For example, if you are building a real-time stock market application, AVL trees would be a good choice to track the price changes of stocks.

Applications with frequent updates

AVL trees are also good for applications that require frequent updates. They automatically balance themselves, making it easier to maintain the efficiency of the tree.

Maintaining sorted data

AVL trees maintain sorted data. If your application requires the data to be sorted, AVL trees can be an excellent choice.

In summary, AVL trees are a good choice when the dataset is large and efficient search, delete and insert operations are needed, or when the application requires real-time updates or frequent updates. They are also a good choice when maintaining sorted data is essential.

When AVL Trees Might Not Be The Best Choice For Your Data

While AVL trees have many advantages, they may not be the best choice in all situations. Here are some specific situations when you may want to consider other data structures

Small datasets

AVL trees require additional space to store balance factors and have a more complex implementation than simpler data structures such as linked lists or arrays. If you have a small dataset, the overhead of an AVL tree may not be worth the benefits.

Static datasets

AVL trees are optimised for datasets that are frequently updated. If your dataset is static (i.e., it doesn’t change often), there is no need for a self-balancing data structure like an AVL tree.

Limited memory

AVL trees can have a higher memory overhead than simpler data structures. If you are working with limited memory, you may want to consider a data structure that uses less memory, such as a binary search tree.

Simple implementation

If your application requires a simple implementation, AVL trees may not be the best choice. AVL trees require careful consideration of balance factors and rotation operations, which can be more complex than other data structures.

In summary, AVL trees may not be the best choice for small datasets, static datasets, limited memory, or when a simple implementation is required. In these cases, other data structures may be more appropriate.

Conclusion

AVL trees are an important data structure in computer science and programming. They are self-balancing binary search trees that guarantee a balanced height, ensuring efficient search, insertion, and deletion operations. While the implementation of AVL trees is complex, the advantages of using them outweigh the effort required to implement them.

Top comments (0)