Machine Learning (ML) is playing a key role in a wide range of critical applications.

Recently, I was tasked with designing a biometric identification system that integrates with real-time camera streaming. The task had several constraints that required innovative solutions. For instance, the system had to not only detect faces but also recognize them almost instantly so that further action could be taken quickly.

A camera application triggers the detection and recognition workflow. The application, a local console app for Ubuntu and Raspbian written in Golang, is installed on a device connected to the camera.

On first launch, the app is configured from a JSON file that specifies the local camera ID and the camera reader type.
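
A minimal sketch of loading and validating such a config, written in Python for illustration (the actual camera app is written in Golang). The JSON keys `local_camera_id` and `camera_reader_type` and the set of supported reader types are hypothetical; the article only says that the file holds a camera ID and a reader type.

```python
import json
from dataclasses import dataclass

# Hypothetical reader types; the article does not list the supported values.
SUPPORTED_READERS = {"usb", "rtsp", "picamera"}


@dataclass
class CameraConfig:
    local_camera_id: str
    camera_reader_type: str


def load_config(raw: str) -> CameraConfig:
    """Parse and validate the first-launch config (key names are assumptions)."""
    data = json.loads(raw)
    cfg = CameraConfig(
        local_camera_id=str(data["local_camera_id"]),
        camera_reader_type=str(data["camera_reader_type"]).lower(),
    )
    if cfg.camera_reader_type not in SUPPORTED_READERS:
        raise ValueError(f"unsupported camera reader type: {cfg.camera_reader_type}")
    return cfg


example = '{"local_camera_id": "entrance-01", "camera_reader_type": "RTSP"}'
config = load_config(example)
print(config)
```

Validating at startup means a typo in the reader type fails immediately instead of surfacing later as a camera that never produces frames.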

For face detection, we experimented with several approaches and found that a Caffe face detection model and TensorFlow object detection models gave the best results. In addition, both can be loaded through the OpenCV library.
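
Both model types can be loaded with OpenCV's `dnn` module (`cv2.dnn.readNetFromCaffe` or `cv2.dnn.readNetFromTensorflow`), and `net.forward()` returns the detections. Assuming an SSD-style detector such as OpenCV's ResNet-10 Caffe face model, whose output has shape (1, 1, N, 7) with rows `[image_id, class_id, confidence, x1, y1, x2, y2]` in normalized coordinates, the rows (`detections[0, 0]`) can be turned into pixel boxes as sketched below. The function name and the 0.5 default threshold are illustrative choices, not values from the article.

```python
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


def _to_pixels(value: float, size: int) -> int:
    # Scale a normalized coordinate and clamp it to the frame.
    return max(0, min(size - 1, int(value * size)))


def parse_ssd_detections(rows: Sequence[Sequence[float]], width: int, height: int,
                         min_confidence: float = 0.5) -> List[Tuple[Box, float]]:
    """Convert SSD rows [image_id, class_id, confidence, x1, y1, x2, y2]
    into pixel boxes, dropping low-confidence and degenerate detections."""
    faces = []
    for row in rows:
        confidence = float(row[2])
        if confidence < min_confidence:
            continue
        x1, y1 = _to_pixels(row[3], width), _to_pixels(row[4], height)
        x2, y2 = _to_pixels(row[5], width), _to_pixels(row[6], height)
        if x2 > x1 and y2 > y1:
            faces.append(((x1, y1, x2, y2), confidence))
    return faces


# Two fake rows for a 640x480 frame: one confident face, one weak detection.
rows = [[0, 1, 0.98, 0.25, 0.25, 0.5, 0.75], [0, 1, 0.30, 0.1, 0.1, 0.2, 0.2]]
faces = parse_ssd_detections(rows, 640, 480)
print(faces)
```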

We don’t use Dlib for face detection. Recognition goes through a separate API that uses dlib behind the scenes to calculate feature vectors and compare them with the references. We don’t call this API for every video frame: if a bounding box hasn’t moved too fast between frames, we assume it belongs to the same person.
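
The "same person if the box didn't move too fast" rule can be sketched as a simple per-face track that only requests recognition when a new face appears. The movement limit (half the previous box's width) and the `FaceTrack` helper are assumptions for illustration; the article does not say how movement is measured.

```python
import math
from typing import Optional, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2)


def center(box: Box) -> Tuple[float, float]:
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, (y1 + y2) / 2)


def is_same_face(prev: Box, curr: Box, max_shift_ratio: float = 0.5) -> bool:
    """Same person if the box center moved less than max_shift_ratio
    of the previous box's width (hypothetical heuristic)."""
    (px, py), (cx, cy) = center(prev), center(curr)
    width = prev[2] - prev[0]
    return math.hypot(cx - px, cy - py) < max_shift_ratio * width


class FaceTrack:
    """Hypothetical helper that caches the identity of the face being tracked,
    so the recognition API is called only for new faces."""

    def __init__(self) -> None:
        self.box: Optional[Box] = None
        self.person_id: Optional[str] = None

    def needs_recognition(self, box: Box) -> bool:
        same = self.box is not None and is_same_face(self.box, box)
        self.box = box
        if not same:
            self.person_id = None  # a new face: forget the cached identity
        return self.person_id is None


track = FaceTrack()
first = track.needs_recognition((100, 100, 200, 200))  # new face
track.person_id = "p1"                                 # result from the recognition API
second = track.needs_recognition((110, 105, 210, 205))  # small move: reuse "p1"
third = track.needs_recognition((400, 100, 500, 200))   # jump: recognize again
print(first, second, third)
```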

When a face is captured, the image is cropped and sent to the backend as an HTTP form-data request. The backend API saves the image to the local file system, and a record is created in the Detection Log along with a personID.
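
A stdlib-only sketch of how the cropped image could be packaged as a `multipart/form-data` request (RFC 7578). The endpoint URL and the form field names (`camera_id`, `image`) are hypothetical; the request is only built here, not sent.

```python
import urllib.request
import uuid
from typing import Dict, Optional, Tuple


def encode_multipart(fields: Dict[str, str], file_field: str, filename: str,
                     file_bytes: bytes, content_type: str = "image/jpeg",
                     boundary: Optional[str] = None) -> Tuple[bytes, str]:
    """Build a multipart/form-data body with text fields and one file part.
    Returns (body, Content-Type header value)."""
    boundary = boundary or uuid.uuid4().hex
    parts = []
    for name, value in fields.items():
        parts.append((f"--{boundary}\r\n"
                      f'Content-Disposition: form-data; name="{name}"\r\n\r\n'
                      f"{value}\r\n").encode())
    parts.append((f"--{boundary}\r\n"
                  f'Content-Disposition: form-data; name="{file_field}"; filename="{filename}"\r\n'
                  f"Content-Type: {content_type}\r\n\r\n").encode()
                 + file_bytes + b"\r\n")
    parts.append(f"--{boundary}--\r\n".encode())
    return b"".join(parts), f"multipart/form-data; boundary={boundary}"


# Fake JPEG bytes stand in for the cropped face image.
body, ctype = encode_multipart({"camera_id": "entrance-01"}, "image", "face.jpg",
                               b"\xff\xd8\xff\xe0fake-jpeg")
req = urllib.request.Request("http://backend.example/api/detections",  # hypothetical URL
                             data=body, headers={"Content-Type": ctype}, method="POST")
# urllib.request.urlopen(req) would send it to the backend.
```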

A background worker in the app’s backend picks up every record tagged “classified=false”. Using Dlib, the worker calculates a 128-dimensional descriptor vector of the face’s features. This descriptor is compared against the descriptors of multiple reference images per person stored in the database: the worker computes the Euclidean distance between the live-stream descriptor and each reference descriptor, and the closest reference identifies the candidate match.
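
The matching step reduces to a nearest-neighbour search by Euclidean distance. A minimal sketch, assuming the reference descriptors are already loaded into a dict keyed by personID (with face_recognition, descriptors come from `face_recognition.face_encodings()`, and `face_recognition.face_distance()` computes the same distances). The helper name is illustrative, and short 3-element vectors stand in for dlib's 128-dimensional descriptors in the usage example.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def best_match(descriptor: Vector,
               references: Dict[str, List[Vector]]) -> Tuple[Optional[str], float]:
    """Return (person_id, distance) of the closest reference descriptor.
    A person may have several references; the smallest distance wins."""
    best_id, best_dist = None, math.inf
    for person_id, vectors in references.items():
        for ref in vectors:
            d = math.dist(descriptor, ref)  # Euclidean distance
            if d < best_dist:
                best_id, best_dist = person_id, d
    return best_id, best_dist


references = {
    "alice": [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]],  # two reference images
    "bob": [[5.0, 5.0, 5.0]],
}
print(best_match([0.9, 0.0, 0.0], references))
```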

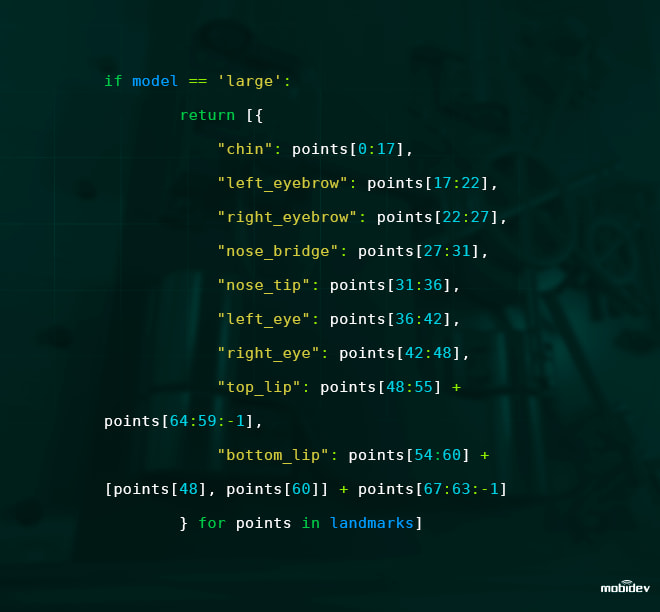

The code relies on dlib’s predefined facial landmark point indices, where each index corresponds to a point on a specific facial feature.
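
For reference, dlib's 68-point landmark model (`shape_predictor_68_face_landmarks.dat`) is commonly grouped into the index ranges below, following the iBUG 300-W layout in which "right" and "left" refer to the subject's own sides. The dictionary and helper are illustrative and may not match the exact grouping shown in the figure.

```python
from typing import Dict, Tuple

# End-exclusive index ranges of the 68-point layout (common convention).
LANDMARK_REGIONS: Dict[str, Tuple[int, int]] = {
    "jaw": (0, 17),
    "right_eyebrow": (17, 22),
    "left_eyebrow": (22, 27),
    "nose": (27, 36),
    "right_eye": (36, 42),
    "left_eye": (42, 48),
    "mouth": (48, 68),
}


def region_of(index: int) -> str:
    """Map a landmark index to the facial feature it belongs to."""
    for name, (start, end) in LANDMARK_REGIONS.items():
        if start <= index < end:
            return name
    raise ValueError(f"landmark index out of range: {index}")


print(region_of(30), region_of(40))
```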

To get started, you can use face_recognition, a Python wrapper around dlib: https://github.com/ageitgey/face_recognition

The repository also includes an example of how to compare faces: https://github.com/ageitgey/face_recognition/blob/master/examples/web_service_example.py

A worker decides whether an image shows a known or an unknown individual based on the Euclidean distance between facial descriptors. If the distance to the closest reference is less than 0.6, the background worker assigns that person’s personID, marks the record as classified, and enters it into the Detection Log. If the distance is greater than 0.6, a new record is created: it is marked as unknown, and a new personID is entered into the Detection Log.
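
A sketch of this classification rule, assuming a Detection Log record is a dict with `descriptor` and `classified` fields and that unknown people get a generated ID with an `unknown-` prefix (both hypothetical). The 0.6 cut-off is the value from the article, which is also face_recognition's default `tolerance` in `compare_faces()`.

```python
import math
import uuid
from typing import Dict, List, Sequence

MATCH_THRESHOLD = 0.6  # distance cut-off from the article


def classify(record: dict, references: Dict[str, List[Sequence[float]]],
             threshold: float = MATCH_THRESHOLD) -> dict:
    """Assign a personID to an unclassified record (hypothetical schema)."""
    best_id, best_dist = None, math.inf
    for person_id, vectors in references.items():
        for ref in vectors:
            d = math.dist(record["descriptor"], ref)
            if d < best_dist:
                best_id, best_dist = person_id, d
    if best_id is not None and best_dist < threshold:
        record["person_id"] = best_id
        record["unknown"] = False
    else:
        # No reference close enough: register the face under a new, unknown ID.
        record["person_id"] = "unknown-" + uuid.uuid4().hex[:8]
        record["unknown"] = True
    record["classified"] = True
    return record


references = {"emp-001": [[0.0] * 128]}
known = classify({"descriptor": [0.01] * 128, "classified": False}, references)     # distance ~0.11
stranger = classify({"descriptor": [0.1] * 128, "classified": False}, references)  # distance ~1.13
print(known["person_id"], stranger["person_id"])
```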

Images of unidentified people are sent as notifications to the responsible manager. We chose chatbot messenger notifications and found that a simple alert chatbot could be implemented within 2 to 5 days.

We created two chatbots, one with Microsoft Bot Framework and the other with the Python-based Errbot. With the chatbots in place, security personnel or other staff can manually grant remote access to unknown individuals on a case-by-case basis.

Captured images and their corresponding records are managed through an Admin Panel that acts as a portal to the database of stored photos and IDs. The Admin Panel and the database are set up before the biometric identification system goes live, but images and IDs of previously unidentified people can still be added to the database later through the Admin Panel.

NOTES:

The app’s backend is written in Golang and uses MongoDB collections to store employee data; its API is RESTful. Users can test the system on regular workstations before deploying it.

As unidentified images and IDs are added, the database grows. Our use case employed a 200-entry database, but because the app works in real time and recognition must be near-instant, scaling quickly becomes a concern. If an organization adds cameras or builds a database with 10,000 or more entries, real-time analysis and recognition may start to lag. We solved this with parallelization: a load balancer distributes work across several web workers, so the database can be split into chunks that are searched simultaneously, keeping match searches fast.
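
The chunk-and-merge idea can be sketched in-process: split the reference entries into chunks, search each chunk independently, and keep the global minimum distance. A thread pool is used here only to illustrate the pattern; CPython threads do not speed up pure-Python arithmetic because of the GIL, so a real deployment would hand chunks to separate processes or to web workers behind a load balancer, as described above. The helper names and the default of 4 workers are assumptions.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Optional, Sequence, Tuple

Entry = Tuple[str, Sequence[float]]  # (person_id, reference descriptor)
Match = Tuple[Optional[str], float]


def search_chunk(descriptor: Sequence[float], chunk: List[Entry]) -> Match:
    """Serial nearest-neighbour search over one chunk."""
    best: Match = (None, math.inf)
    for person_id, ref in chunk:
        d = math.dist(descriptor, ref)
        if d < best[1]:
            best = (person_id, d)
    return best


def parallel_best_match(descriptor: Sequence[float], entries: List[Entry],
                        workers: int = 4) -> Match:
    """Split the database into `workers` chunks, search them concurrently,
    and merge by taking the smallest distance."""
    size = max(1, math.ceil(len(entries) / workers))
    chunks = [entries[i:i + size] for i in range(0, len(entries), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(lambda chunk: search_chunk(descriptor, chunk), chunks)
        return min(results, key=lambda r: r[1], default=(None, math.inf))


entries = [(f"p{i}", [float(i)] * 4) for i in range(100)]
print(parallel_best_match([42.2] * 4, entries))
```

Because each chunk returns only its local best match, the merge step is a single `min` over a handful of results, so adding workers does not add coordination cost.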

Anti-spoofing measures must adapt to bad actors who might try to gain entry using fake facial images. Our team has put enhanced security measures and anti-spoofing features in place to counteract fraudulent access attempts.

While this case study is focused on facial recognition, the underlying technology can be used for a range of objects. Object recognition models can be trained to identify any other object once a dataset has been created.
