This is a reblog of the original post at Java Advent Calendar 2020, see original post.

A bit of a background

Many of you know my first post on this topic, Two years in the life of AI, ML, DL and Java. That was back in 2018/2019, when I had just started my journey, which still continues, and I have more to share in this update (I hope you will like it). At that time we had a good number of resources, and the divide between Java and non-Java resources was not large enough to be worth discussing. Since then things have changed, so let’s see what the landscape looks like.

I’m hoping that I’m able to share richer aspects of my journey, views, opinions, observations and learnings this time around as compared to my previous summary post.

I do admit that this is only a summary post and I intend to write more specific and focussed posts like the ones I did in 2019 (see the following section) — I have earmarked a couple of topics and I will flesh them out as soon as I’m happy with my selections.

2019: year of blogs

Looking at my activities from 2019 towards 2020, I can say I was more focussed on writing blogs and creating a lot of hands-on solutions, which I shared via my projects on GitHub and blogs on Medium. Here is a list of all of them:

- August 2019: How to build Graal-enabled JDK8 on CircleCI?

- August 2019: How to do Deep Learning for Java?

- September 2019: Running your JuPyTeR notebooks on the cloud

- October 2019: Running Apache Zeppelin on the cloud

- November 2019: Applying NLP in Java, all from the command-line

- November 2019: Exploring NLP concepts using Apache OpenNLP

- December 2019: Exploring NLP concepts using Apache OpenNLP inside a Java-enabled Jupyter notebook

2020: year of presentations

In 2020, by contrast, I didn’t write much from a blogging point of view. This is officially my first proper post of the year (with the exception of one in August 2020, which you will find below), as I was quite busy preparing for talks, presentations, online meetings and discussions. I kicked off the year with my talk at the Grakn Cosmos 2020 conference in London, UK. Let me list them here so you can take a look at them in your own time:

- February 2020: Naturally, getting productive, my journey with Grakn and Graql at Grakn Cosmos 2020 conference

- July 2020: “nn” things every Java Developer should know about AI/ML/DL at JOnConf 2020 conference

- August 2020: From backend development to machine learning on Abhishek Talks (YouTube channel) meetup

- August 2020: An Interview by Neural Magic: Machine Learning Engineer Spotlight (blog post)

- October 2020: Profiling Text Data at the NLP Zurich meetup

- October 2020: Tribuo: an introduction to a Java ML Library at MakeITWeek 2020 conference

- October 2020: “nn” things every Java Developer should know about AI/ML/DL at Oracle Groundbreakers Tour – APAC 2020 conference

You can find slides (and videos of some) of the above presentations under the links provided.

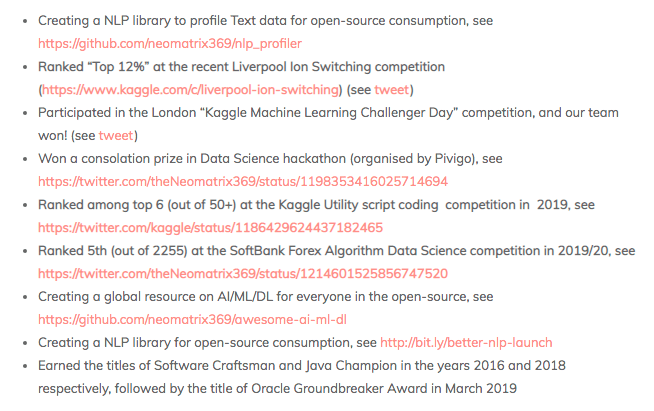

Other achievements and activities this year

As to my journey, I have also spent a good part of this year learning through online AI/ML competitions; see this and this post to learn more. You can also find all of my datasets and notebooks for further study. The launch of a Python-based NLP library called NLP Profiler was another important feat for me this year.

What’s happening in this space?

There is a lot of hype in this space and, as Suyash Joshi rightly said during a conversation some time back: “It’s easy to get sucked into hype and shiny new stuff I fell a lot for that and then get overwhelmed”. I think you will agree that many of us can relate to this situation. Also, many of the things we are seeing look nice and shiny but may NOT be fully ready for the real world yet; I covered similar arguments in a post in August.

A few entities are openly raising concerns about the not-so-bright sides of AI, such as:

- the statistical methods used are from the last century and may not be suited to modern-day data (led by Nassim Taleb’s school of thought)

- many modern-day open-source tools and industry methods used to arrive at solutions are broken or inadequate

- we are not able to explain our predictions and findings (the black-box issue: explainability and interpretability)

- our AI solutions lack causality, intent and reasoning (similar to the previous point)

These concerns come from individuals, groups and companies who are building their own solutions (mostly closed-source). They have noticed the above drawbacks and are working to overcome them, while also making the rest of the world aware of them.

Where is Java on the map?

Java has been contributing to the AI space for some time now, going all the way back to the Big Data buzz, which then transformed into the new buzz around AI, Machine Learning and Deep Learning. Look closely at the categories and sub-categories of resources here:

- Apache Spark [1] | [2]

- Apache Hadoop

- Deeplearning4J

- and many other such tools, libraries, frameworks, and products

and now more recently

- grCuda and graalPython (from the folks at Oracle Labs behind all the GraalVM magic)

- DeepNetts

- Tribuo

and more under these resources

- Java: AI/ML/DL

- NLP Java

- Tools and libraries (Java/JVM)

- some intriguing GitHub resources: AI or Artificial Intelligence | ML or Machine Learning | DL or Deep Learning | RL or Reinforcement Learning | NLP or Natural Language Processing

[2] Oracle Labs and Apache Spark

You can already see various implementations using Java and other JVM languages. They have simply gone unnoticed unless we made an attempt to look for them.

If you have been following the progress of GraalVM and Truffle (see GitHub) in this space, boosting server-side, desktop and cloud-based systems in terms of performance, footprint and language interop, then you will, just like me, see that Java’s presence and position are going from strength to strength.

(grCuda and graalPython are two such examples: see AI/ML/DL related resources on Awesome Graal and also at the appendix sections of the talk slides for more resources — see section above for talks)

Some highlights in pictures

Recommended Resources

If I had to pick from the many resources I have shared in this post so far, I would look at these topics (in no particular order, and not an exhaustive list; it is still a lot of topics to learn and know about):

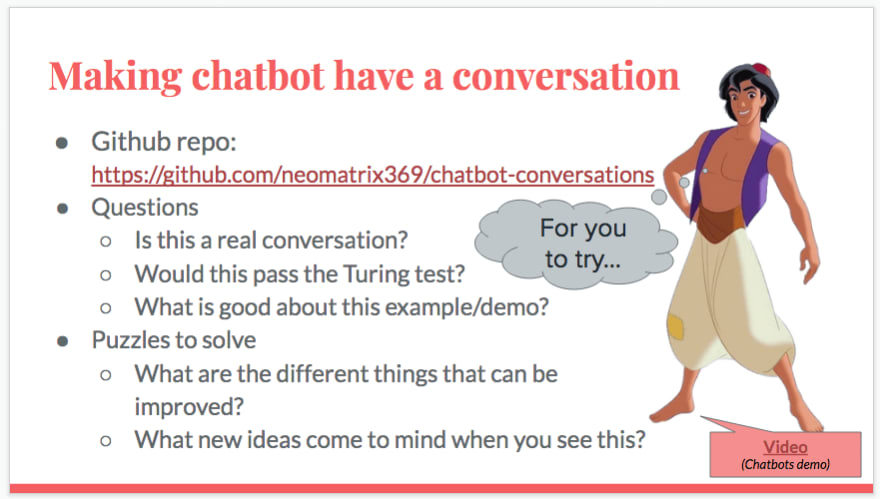

Bayesian | Performance in Python | Made With ML | Virgilio | Visualisation | TVM: Compiler stack for CPUs, GPUs, etc… | Hyperparameter tuning | Normalising (and Standardising) a distribution | Things to Know | grCuda | Running compiled and optimised AI Models in the Cloud | Neural Magic: Model Pruning and Sparsification | Train and Infer models via a native-image | Genetic Algorithms by Eyal | DeepNetts: Deep Learning in Java by Zoran | NLP | NLP with Java | Graphs | ML on Code/Program/Source Code | Tribuo: A Java-based ML library | Chatbots talking to each other | Testing

My apologies, as YMMV: I’m working on these topics and slowly gaining knowledge and experience, hence my urge to share them immediately with the rest of the community. I’ll admit a few of these may still be a work in progress, but I hope we can all learn them together. I’ll share more as I make progress with them over the days, weeks and months into 2021.

Conclusions

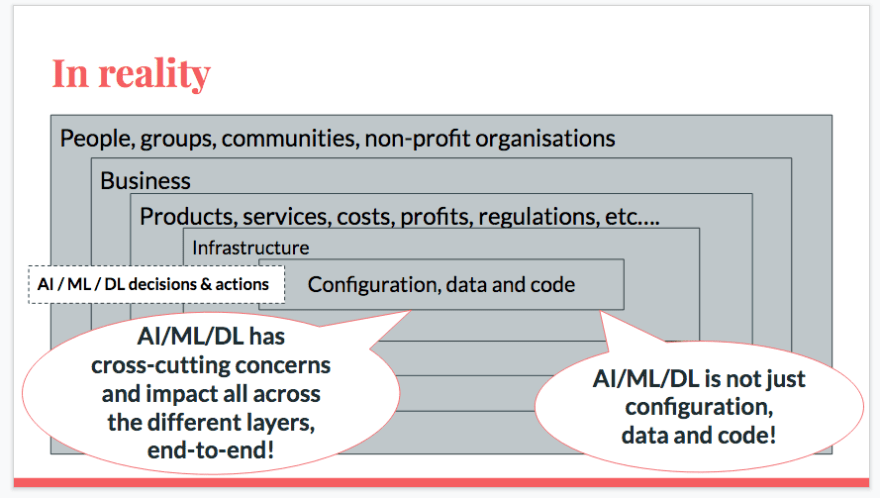

We are in a heterogeneous space. AI/ML/DL ideas and innovations go through various forms and shapes on the way from creation to deployment and, depending on the comfort zones and end-use cases involved, get implemented using a multitude of technologies. It would not be correct or fair to say that only one technology is being used.

We are seeing R&D, rapid prototyping, MVPs, PoCs and the like being done in languages such as Python, R, Matlab and Julia, while more serious contenders are chosen to encapsulate the original work when it comes to robust, reliable, scalable, production-ready solutions. Java and other JVM languages, which can provide such stability, are often considered for both desktop and cloud implementations.

Wearing my Oracle Groundbreaker Ambassador hat: I recommend taking advantage of the free cloud credits from Oracle (also see this post). The VMs are super fast and easy to set up (see my posts above).

What next?

The journey continues, the road may be long, but it’s full of learnings — that certainly helps us grow. Many times we learn at the turning points while the milestones keep changing. Let’s continue learning and improving ourselves.

Please do share feedback if you are curious to explore deeper aspects of what you read above. As you may understand, the topic is wide and deep, and one post cannot do full justice to such rich topics (plus many of them are moving targets).
