In this tutorial, we'll build an OCR app in Node.js using the Google Vision API.

An OCR (optical character recognition) app performs text recognition on an image, so it can be used to extract the text that the image contains.

## Getting started with the Google Vision API

To get started with the Google Vision API, visit the link below:

https://cloud.google.com/vision/docs/setup

Follow the instructions there to set up the Google Vision API and obtain your GOOGLE_APPLICATION_CREDENTIALS, a JSON file that contains your service account keys. The file is downloaded to your computer once you're done with the setup. It is essential: the app we are about to build can't authenticate with the API without it.
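Before going further, it can help to confirm that the downloaded key file is where you think it is. The following is only a sketch: checkCredentialsFile is a hypothetical helper, not part of any Google library, and the list of expected fields (type, client_email, private_key) is an assumption about what a service account key normally contains.

```javascript
const fs = require('fs');

// Hypothetical helper (not part of the Google client library): checks that a
// path points to a readable JSON file that looks like a service account key.
function checkCredentialsFile(filePath) {
  if (!filePath) {
    return { ok: false, reason: 'GOOGLE_APPLICATION_CREDENTIALS is not set' };
  }
  if (!fs.existsSync(filePath)) {
    return { ok: false, reason: `File not found: ${filePath}` };
  }
  let key;
  try {
    key = JSON.parse(fs.readFileSync(filePath, 'utf8'));
  } catch (error) {
    return { ok: false, reason: 'File is not valid JSON' };
  }
  if (key === null || typeof key !== 'object') {
    return { ok: false, reason: 'File does not contain a JSON object' };
  }
  // Assumption: a service account key carries at least these fields.
  const missing = ['type', 'client_email', 'private_key'].filter((field) => !(field in key));
  if (missing.length > 0) {
    return { ok: false, reason: `Missing fields: ${missing.join(', ')}` };
  }
  return { ok: true, reason: 'Credentials file looks usable' };
}

console.log(checkCredentialsFile(process.env.GOOGLE_APPLICATION_CREDENTIALS));
```

This only checks the shape of the file. Whether the key is actually accepted is decided by Google when the first request is made.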

## Using the Node.js client library

To use the Node.js client library, visit the link below to get started.

https://cloud.google.com/vision/docs/quickstart-client-libraries

The page shows how to use the Google Vision API in your favorite programming language. Now that we've seen what's on the page, we can go straight to implementing it in our code.

Create a directory called ocrGoogle and open it in your favorite code editor.

Run

npm init -y

to create a package.json file. Then run

npm install --save @google-cloud/vision

to install the Google Cloud Vision client library. Create a resources folder, download the wakeupcat.jpg image into it, then create an index.js file and fill it with the following code:

    process.env.GOOGLE_APPLICATION_CREDENTIALS = 'C:/Users/lenovo/Documents/readText-f042075d9787.json'

    async function quickstart() {
      // Imports the Google Cloud client library
      const vision = require('@google-cloud/vision');

      // Creates a client
      const client = new vision.ImageAnnotatorClient();

      // Performs label detection on the image file
      const [result] = await client.labelDetection('./resources/wakeupcat.jpg');
      const labels = result.labelAnnotations;
      console.log('Labels:');
      labels.forEach(label => console.log(label.description));
    }

    quickstart()

In the first line, we set the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of the JSON file we downloaded earlier. The asynchronous function quickstart creates an ImageAnnotatorClient and calls its labelDetection method on the image, then in the last line we invoke the function.

Run

node index.js

to process the image. This should print the image's labels to the console.
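Each entry in labelAnnotations carries more than a description; it also has a confidence score. As a rough sketch, the labels could be filtered and formatted like this. The sampleResult object is hand-written for illustration and is not real API output, and formatLabels is a hypothetical helper.

```javascript
// Hand-written sample shaped like a labelDetection result; the values are
// illustrative, not real API output.
const sampleResult = {
  labelAnnotations: [
    { description: 'Cat', score: 0.98 },
    { description: 'Whiskers', score: 0.95 },
    { description: 'Text', score: 0.61 },
  ],
};

// Hypothetical helper: keep labels at or above a confidence threshold and
// format each one as "description (percent)".
function formatLabels(result, minScore = 0) {
  const labels = result.labelAnnotations || [];
  return labels
    .filter((label) => label.score >= minScore)
    .map((label) => `${label.description} (${Math.round(label.score * 100)}%)`);
}

console.log(formatLabels(sampleResult, 0.9));
```

With a real response, you would pass the result returned by client.labelDetection instead of sampleResult.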

That works, but we want text detection rather than label detection, so go ahead and update index.js as follows:

    // Imports the Google Cloud client library
    const vision = require('@google-cloud/vision');

    process.env.GOOGLE_APPLICATION_CREDENTIALS = 'C:/Users/lenovo/Documents/readText-f042075d9787.json'

    async function quickstart() {
      try {
        // Creates a client
        const client = new vision.ImageAnnotatorClient();

        // Performs text detection on the local file
        const [result] = await client.textDetection('./resources/wakeupcat.jpg');
        const detections = result.textAnnotations;
        const [ text, ...others ] = detections
        console.log(`Text: ${ text.description }`);
      } catch (error) {
        console.log(error)
      }
    }

    quickstart()

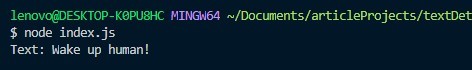

The logic above returns the text on the image. It looks almost identical to the previous version, with two changes:

- We now use the client.textDetection method instead of client.labelDetection.

- We destructure the detections array into two parts, text and others. The text variable contains the complete text from the image.

Now, running

```shell
node index.js
```

returns the text on the image.
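To make the destructuring concrete: the first entry of textAnnotations holds the whole text, and the remaining entries are the individual words. The sketch below uses a hand-written sampleDetections array (illustrative only, not what the API returns for wakeupcat.jpg) and a hypothetical splitDetections helper that also copes with an image containing no text.

```javascript
// Hand-written sample shaped like result.textAnnotations; illustrative only.
const sampleDetections = [
  { description: 'WAKE UP\nHUMAN' },
  { description: 'WAKE' },
  { description: 'UP' },
  { description: 'HUMAN' },
];

// Hypothetical helper: split the annotations into the full text and the
// individual words, tolerating an empty or missing array.
function splitDetections(detections) {
  const [text, ...others] = detections || [];
  return {
    fullText: text ? text.description : '',
    words: others.map((item) => item.description),
  };
}

console.log(splitDetections(sampleDetections));
console.log(splitDetections([]));
```

The guard matters because text is undefined when nothing is detected, and reading text.description would then throw.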

## Installing and using Express.js

We need to install Express.js to create a server and an API route that sends requests to the Google Vision API.

```shell
npm install express --save
```

Now we can update index.js to:

    const express = require('express');
    // Imports the Google Cloud client library
    const vision = require('@google-cloud/vision');

    const app = express();
    const port = 3000

    process.env.GOOGLE_APPLICATION_CREDENTIALS = 'C:/Users/lenovo/Documents/readText-f042075d9787.json'

    app.use(express.json())

    async function quickstart(req, res) {
      try {
        // Creates a client
        const client = new vision.ImageAnnotatorClient();

        // Performs text detection on the local file
        const [result] = await client.textDetection('./resources/wakeupcat.jpg');
        const detections = result.textAnnotations;
        const [ text, ...others ] = detections
        console.log(`Text: ${ text.description }`);
        res.send(`Text: ${ text.description }`)
      } catch (error) {
        console.log(error)
        // Send an error response so the request does not hang
        res.status(500).send('Text detection failed')
      }
    }

    app.get('/detectText', (req, res) => {
      res.send('welcome to the homepage')
    })

    app.post('/detectText', quickstart)

    // Listen on port
    app.listen(port, () => {
      console.log(`app is listening on ${port}`)
    })

Open Insomnia and make a POST request to http://localhost:3000/detectText. The text on the image is sent back as the response.

## Image upload with multer

This app would be no fun if it only worked with one image, or if we had to edit the image path in the backend every time. We want to upload any image to the route for processing, and for that we use an npm package called multer. Multer is middleware that handles multipart/form-data, which lets us send images to a route.

npm install multer --save

To configure multer, create a file called multerLogic.js and add the following code:

    const multer = require('multer')
    const path = require('path')

    const storage = multer.diskStorage({
      destination: function (req, file, cb) {
        cb(null, path.join(process.cwd(), 'resources'))
      },
      filename: function (req, file, cb) {
        cb(null, file.fieldname + '-' + Date.now() + path.extname(file.originalname))
      }
    })

    const upload = multer( { storage: storage, fileFilter } ).single('image')

    // Accepts only files whose extension and MIME type match jpg, jpeg, or png
    function fileFilter(req, file, cb) {
      const fileType = /jpg|jpeg|png/;
      const extname = fileType.test(path.extname(file.originalname).toLowerCase())
      const mimeType = fileType.test(file.mimetype)
      if (mimeType && extname) {
        return cb(null, true)
      } else {
        cb('Error: images only')
      }
    }

    // Runs the upload and resolves with req.file. On failure it answers the
    // request and rejects, so the caller never continues without a file.
    const checkError = (req, res, next) => {
      return new Promise((resolve, reject) => {
        upload(req, res, (err) => {
          if (err) {
            res.send(err)
            return reject(err)
          }
          if (req.file === undefined) {
            res.send('no file selected')
            return reject(new Error('no file selected'))
          }
          resolve(req.file)
        })
      })
    }

    module.exports = {
      checkError
    }

Let's take a minute to understand the logic above. This is all multer logic: it is what lets us send an image to the detectText route. We specify a storage configuration with two properties:

- destination: specifies where the uploaded file will be stored.

- filename: lets us rename the file before storing it. Here we build the new name by concatenating the field name (the name of the form field, image in our case), the current timestamp, and the extension of the original file.
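The naming rule can be tried out on its own. This is a small sketch in which buildFilename is a hypothetical helper that mirrors the filename callback; the timestamp is passed in so that the result is predictable.

```javascript
const path = require('path');

// Hypothetical helper mirroring the filename callback: the field name, a
// timestamp, and the original file's extension.
function buildFilename(fieldname, originalname, timestamp = Date.now()) {
  return `${fieldname}-${timestamp}${path.extname(originalname)}`;
}

console.log(buildFilename('image', 'receipt.png', 1700000000000));
// image-1700000000000.png
```

Because the timestamp changes on every upload, two files with the same original name are unlikely to overwrite each other.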

We create a variable upload by calling multer with an object containing storage and fileFilter, followed by .single('image') so that it accepts a single file from the image field. After that, we define the fileFilter function, which checks the file type (here we allow the png, jpg, and jpeg file types).
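One detail worth knowing: the pattern /jpg|jpeg|png/ is unanchored, so it matches anywhere in the string and would also accept an extension such as .jpgx. A slightly stricter check might look like the sketch below. The anchored patterns and the isAllowedImage helper are assumptions of this example, not the tutorial's code.

```javascript
const path = require('path');

// Stricter variant (an assumption, not the tutorial's exact regex): anchored
// patterns so that only whole extensions and whole MIME types match.
const allowedExtensions = /^\.(jpg|jpeg|png)$/;
const allowedMimeTypes = /^image\/(jpeg|png)$/;

// Hypothetical helper: true only when both the extension and the MIME type
// are on the allow list.
function isAllowedImage(originalname, mimetype) {
  const extension = path.extname(originalname).toLowerCase();
  return allowedExtensions.test(extension) && allowedMimeTypes.test(mimetype);
}

console.log(isAllowedImage('cat.JPG', 'image/jpeg')); // true
console.log(isAllowedImage('notes.txt', 'text/plain')); // false
console.log(isAllowedImage('cat.jpgx', 'image/jpeg')); // false
```

Note that both the extension and the MIME type come from the client, so neither check proves that the file really is an image.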

Next, we create a function checkError that runs the upload and checks for errors. It returns a promise that resolves with req.file if the upload succeeds; otherwise it sends an error message back to the client. Finally, we export checkError. That was quite the explanation, so let's move on with our code.
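The core of checkError is turning a callback-style function into a promise. The sketch below shows that pattern on its own, with a hypothetical fakeUpload standing in for multer's upload function. Unlike the tutorial's version, it never touches res; it only rejects on failure and leaves the response to the caller.

```javascript
// Hypothetical stand-in for multer's upload(req, res, callback): it either
// reports an error or attaches a file object to req.
function fakeUpload(req, res, callback) {
  if (req.simulateError) {
    return callback(new Error('images only'));
  }
  req.file = req.incomingFile; // undefined when nothing was attached
  callback(null);
}

// Wrap the callback API in a promise that settles exactly once.
function uploadAsPromise(upload, req, res) {
  return new Promise((resolve, reject) => {
    upload(req, res, (err) => {
      if (err) return reject(err);
      if (req.file === undefined) return reject(new Error('no file selected'));
      resolve(req.file);
    });
  });
}

uploadAsPromise(fakeUpload, { incomingFile: { path: 'resources/image-1.png' } }, {})
  .then((file) => console.log('uploaded:', file.path));

uploadAsPromise(fakeUpload, {}, {})
  .catch((error) => console.log('rejected:', error.message));
```

Returning from each branch is the important part: it guarantees that resolve is never reached after a failure.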

To use checkError, we require it in index.js as follows:

const { checkError } = require('./multerLogic')

Then edit the quickstart function as follows:

    async function quickstart(req, res) {
      try {
        // Creates a client
        const client = new vision.ImageAnnotatorClient();
        const imageDesc = await checkError(req, res)
        console.log(imageDesc)

        // Acknowledge the upload so the request does not hang
        res.send('upload received')

        // Performs text detection on the local file
        // const [result] = await client.textDetection('');
        // const detections = result.textAnnotations;
        // const [ text, ...others ] = detections
        // console.log(`Text: ${ text.description }`);
        // res.send(`Text: ${ text.description }`)
      } catch (error) {
        console.log(error)
      }
    }

We call the checkError function (which returns a promise), assign the resolved req.file to imageDesc, and print imageDesc to the console. Make a POST request with Insomnia, attaching an image in a multipart form field named image, and the uploaded file's details should be printed to the console.
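For orientation, the object multer puts on req.file when using disk storage has roughly the shape shown below. The field names follow multer's disk storage, but every value in sampleFile is made up for illustration, and summarizeUpload is a hypothetical helper.

```javascript
// Illustrative req.file object. The field names follow multer's disk storage,
// but every value here is made up.
const sampleFile = {
  fieldname: 'image',
  originalname: 'receipt.png',
  encoding: '7bit',
  mimetype: 'image/png',
  destination: '/path/to/ocrGoogle/resources',
  filename: 'image-1700000000000.png',
  path: '/path/to/ocrGoogle/resources/image-1700000000000.png',
  size: 48213,
};

// Hypothetical helper: a one-line summary of an uploaded file.
function summarizeUpload(file) {
  const sizeInKb = (file.size / 1024).toFixed(1);
  return `${file.originalname} -> ${file.path} (${file.mimetype}, ${sizeInKb} KB)`;
}

console.log(summarizeUpload(sampleFile));
```

The path property is the one that matters for the next step, since it is what gets passed to textDetection.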

Now that image upload is up and running, it's time to update our code to work with the uploaded image. Edit the quickstart function as follows:

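    async function quickstart(req, res) {
      try {
        // Creates a client
        const client = new vision.ImageAnnotatorClient();
        const imageDesc = await checkError(req, res)
        console.log(imageDesc)

        // Performs text detection on the uploaded file
        const [result] = await client.textDetection(imageDesc.path);
        const detections = result.textAnnotations;
        const [ text, ...others ] = detections
        res.send(`Text: ${ text.description }`)
      } catch (error) {
        console.log(error)
        // Respond only if checkError has not already answered the request
        if (!res.headersSent) {
          res.status(500).send('Text detection failed')
        }
      }
    }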

Finally, make a POST request to our route using Insomnia, attaching an image in the image field, and the response should contain the text detected in that image.
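If you would rather send the request from a script than from Insomnia, something along these lines may work. It is a sketch that assumes Node.js 18 or later (for the global fetch, FormData, and Blob) and a server running on port 3000. buildUploadForm and detectText are hypothetical helpers, and the bytes used in the demo are not a real image.

```javascript
const fs = require('fs');
const path = require('path');

// Build the multipart body. The field name 'image' matches upload.single('image').
function buildUploadForm(bytes, filename, mimeType) {
  const form = new FormData();
  form.append('image', new Blob([bytes], { type: mimeType }), filename);
  return form;
}

// Hypothetical helper: POST an image file to the tutorial's route and return
// the response body as text. Assumes the server is listening on port 3000.
async function detectText(filePath, mimeType = 'image/jpeg') {
  const form = buildUploadForm(fs.readFileSync(filePath), path.basename(filePath), mimeType);
  const response = await fetch('http://localhost:3000/detectText', {
    method: 'POST',
    body: form,
  });
  return response.text();
}

// Inspect a form without sending anything.
const demoForm = buildUploadForm(Buffer.from('not a real image'), 'demo.png', 'image/png');
console.log(demoForm.get('image').name, demoForm.get('image').type);
```

With the server running, calling detectText('./resources/wakeupcat.jpg').then(console.log) would send the image and print whatever the route responds with.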

This tutorial is a very simple example of what can be built using the Google Vision API. The GitHub repo can be found here, and for a more robust version, visit this repo.

Please follow me on Twitter @oviecodes. Thanks, and have a wonderful day.
