Originally published at pbhadani.com

In this blog post, I will show how to use Terraform to create a Google Kubernetes Engine (GKE) cluster.

Goal

Use Terraform to create a GKE cluster with the Workload Identity feature enabled.

Prerequisites

This post assumes the following:

- We already have a GCP project and a GCS bucket (which we will use to store the Terraform state file).
- The Google Kubernetes Engine API is enabled in the GCP project.
- Google Cloud SDK (gcloud), kubectl and Terraform are set up on your workstation. If they are not, refer to my previous blogs: Getting started with Terraform and Getting started with Google Cloud SDK.
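If the Kubernetes Engine API is not enabled yet, it can be enabled in the Cloud Console or from Terraform itself. The following is a minimal sketch, assuming it lives in its own file next to gke.tf (for example a hypothetical apis.tf), reuses the google-beta.gb3 provider alias defined in Step 2, and that the credentials Terraform runs with are allowed to enable services:

```hcl
# Enable the Kubernetes Engine API in the project.
# Assumption: the Terraform credentials may enable services in var.project_id.
resource "google_project_service" "container" {
  provider = google-beta.gb3
  project  = var.project_id
  service  = "container.googleapis.com"

  # Leave the API enabled when this configuration is destroyed.
  disable_on_destroy = false
}
```

If you go this route, the cluster resource would also need depends_on = [google_project_service.container], so that Terraform enables the API before it tries to create the cluster.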

Source Code

Full code is available on GitHub.

Note: This is not a production-ready codebase.

Create a GKE Cluster

Step 1: Create a Unix directory for the Terraform project.

```shell
mkdir ~/terraform-gke-example
cd ~/terraform-gke-example
```

Step 2: Create a Terraform configuration file that defines the Google provider and the GKE cluster.

```shell
vi gke.tf
```

This file has the following content:

```hcl
# Specify the GCP Provider
provider "google-beta" {
  project = var.project_id
  region  = var.region
  version = "~> 3.10"
  alias   = "gb3"
}

# Create a GKE cluster
resource "google_container_cluster" "my_k8s_cluster" {
  provider           = google-beta.gb3
  name               = "my-k8s-cluster"
  location           = var.region
  initial_node_count = 1

  # Empty username and password disable basic authentication.
  master_auth {
    username = ""
    password = ""
  }

  # Enable Workload Identity
  workload_identity_config {
    identity_namespace = "${var.project_id}.svc.id.goog"
  }

  node_config {
    machine_type = var.machine_type
    oauth_scopes = [
      "https://www.googleapis.com/auth/logging.write",
      "https://www.googleapis.com/auth/monitoring",
    ]

    metadata = {
      "disable-legacy-endpoints" = "true"
    }

    # Run the GKE metadata server on the nodes (required for Workload Identity).
    workload_metadata_config {
      node_metadata = "GKE_METADATA_SERVER"
    }

    labels = { # Update: Replace with desired labels
      "environment" = "test"
      "team"        = "devops"
    }
  }
}
```

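Note: Together, the workload_identity_config and workload_metadata_config blocks enable Workload Identity. The first sets the cluster's identity namespace (<project_id>.svc.id.goog), and the second runs the GKE metadata server on the nodes so that pods obtain their credentials through it.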
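With Workload Identity enabled, a pod gets Google Cloud permissions by running as a Kubernetes service account (KSA) that is allowed to impersonate a Google service account (GSA). The GSA side of that link could be expressed in Terraform roughly as follows. This is a sketch, not part of the original code: the account ID my-app-gsa and the KSA default/my-app-ksa are hypothetical names used only for illustration.

```hcl
# Hypothetical Google service account (GSA) that workloads will act as.
resource "google_service_account" "app" {
  provider     = google-beta.gb3
  account_id   = "my-app-gsa"
  display_name = "GSA used through Workload Identity"
}

# Let the hypothetical Kubernetes service account default/my-app-ksa
# impersonate the GSA above.
resource "google_service_account_iam_member" "app_workload_identity" {
  provider           = google-beta.gb3
  service_account_id = google_service_account.app.name
  role               = "roles/iam.workloadIdentityUser"
  member             = "serviceAccount:${var.project_id}.svc.id.goog[default/my-app-ksa]"

  # The identity namespace only exists once the cluster with
  # workload_identity_config has been created.
  depends_on = [google_container_cluster.my_k8s_cluster]
}
```

The KSA itself then needs the annotation iam.gke.io/gcp-service-account set to the GSA's email address (my-app-gsa@<project_id>.iam.gserviceaccount.com in this example), which is applied on the Kubernetes side, for example with kubectl, rather than in this configuration.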

Step 3: Define the Terraform variables.

```shell
vi variables.tf
```

This file looks like this:

```hcl
variable "project_id" {
  description = "Google Project ID."
  type        = string
}

variable "region" {
  description = "Google Cloud region"
  type        = string
  default     = "europe-west2"
}

variable "machine_type" {
  description = "Google VM Instance type."
  type        = string
}
```

Note: We define a default value for region, so if no value is supplied for this variable, Terraform uses europe-west2. The other two variables have no default, so values for them must be supplied.
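The default can be overridden without editing variables.tf. For example, adding a region line to terraform.tfvars (the file created in Step 4) makes Terraform use that value instead; europe-west1 below is only an example value:

```hcl
# terraform.tfvars
project_id   = ""              # Put GCP Project ID.
machine_type = "n1-standard-1" # Put the desired VM Instance type.
region       = "europe-west1"  # Example override of the europe-west2 default.
```

A value passed on the command line, such as terraform plan -var="region=europe-west1", takes precedence over both the default and terraform.tfvars. If you change the region, use the same value for --region in the gcloud container clusters get-credentials command in Step 10.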

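Step 4: Create a tfvars file.

```shell
vi terraform.tfvars
```

```hcl
project_id   = ""              # Put your GCP project ID here.
machine_type = "n1-standard-1" # Put the desired VM instance type here.
```

Terraform automatically loads terraform.tfvars from the working directory, so no extra flag is needed to use it.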

Step 5: Configure the remote state backend.

```shell
vi backend.tf
```

```hcl
terraform {
  backend "gcs" {
    # GCS bucket that stores the Terraform state file.
    bucket = "my-tfstate-bucket"

    # Update to the desired prefix. The prefix should be unique for each
    # Terraform project that shares the same state bucket.
    prefix = "gke-cluster"
  }
}
```
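Terraform does not allow variables inside a backend block, so the bucket name above is written literally. One way to keep it out of backend.tf is Terraform's partial backend configuration: leave the gcs block empty and supply the settings when initializing. A sketch of the empty block:

```hcl
# backend.tf: the gcs settings are intentionally omitted here
# (partial configuration) and supplied at "terraform init" time.
terraform {
  backend "gcs" {}
}
```

The bucket and prefix lines would then move into a separate file, for example a hypothetical gcs-backend.hcl containing bucket = "my-tfstate-bucket" and prefix = "gke-cluster", which is passed with terraform init -backend-config=gcs-backend.hcl.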

Step 6: The file structure looks like this:

```
cntekio:~ terraform-gke-example$ tree
.
├── backend.tf
├── gke.tf
├── terraform.tfvars
└── variables.tf

0 directories, 4 files
```

Step 7: Now, initialize the Terraform project.

```shell
terraform init
```

Output

```
Initializing the backend...

Successfully configured the backend "gcs"! Terraform will automatically
use this backend unless the backend configuration changes.

Initializing provider plugins...
- Checking for available provider plugins...
- Downloading plugin for provider "google-beta" (terraform-providers/google-beta) 3.10.0...

Terraform has been successfully initialized!

You may now begin working with Terraform. Try running "terraform plan" to see
any changes that are required for your infrastructure. All Terraform commands
should now work.

If you ever set or change modules or backend configuration for Terraform,
rerun this command to reinitialize your working directory. If you forget, other
commands will detect it and remind you to do so if necessary.
```

Note: terraform init configures the backend and downloads the required provider plugins.
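terraform init picks the provider release that matches the version constraint, which this post sets with version = "~> 3.10" inside the provider block in gke.tf. From Terraform 0.13 onwards, the version argument in a provider block is deprecated and the constraint is normally declared in a required_providers block instead. A sketch, assuming Terraform 0.13 or newer and a hypothetical versions.tf file (the provider block in gke.tf would then drop its version line):

```hcl
terraform {
  # Assumption: Terraform 0.13+ (source addresses in required_providers).
  required_version = ">= 0.13"

  required_providers {
    google-beta = {
      source = "hashicorp/google-beta"
      # Same constraint as in gke.tf: 3.10 or newer, but below 4.0.
      version = "~> 3.10"
    }
  }
}
```

Keeping the provider on 3.x matters for this configuration: the 4.x provider releases replaced identity_namespace and node_metadata with workload_pool and mode, so gke.tf would need changes before upgrading.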

Step 8: Let's see the execution plan.

```shell
terraform plan --out 1.plan
```

Output

```
Refreshing Terraform state in-memory prior to plan...
The refreshed state will be used to calculate this plan, but will not be
persisted to local or remote state storage.

------------------------------------------------------------------------

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  + create

Terraform will perform the following actions:

  # google_container_cluster.my_k8s_cluster will be created
  + resource "google_container_cluster" "my_k8s_cluster" {
      + additional_zones = (known after apply)
      + cluster_ipv4_cidr = (known after apply)
      + default_max_pods_per_node = (known after apply)
      + enable_binary_authorization = false
      + enable_intranode_visibility = false
      + enable_kubernetes_alpha = false
      + enable_legacy_abac = false
      + enable_shielded_nodes = false
      + enable_tpu = false
      + endpoint = (known after apply)
      + id = (known after apply)
      + initial_node_count = 1
      + instance_group_urls = (known after apply)
      + label_fingerprint = (known after apply)
      + location = "europe-west2"
      + logging_service = "logging.googleapis.com/kubernetes"
      + master_version = (known after apply)
      + monitoring_service = "monitoring.googleapis.com/kubernetes"
      + name = "my-k8s-cluster"
      + network = "default"
      + node_locations = (known after apply)
      + node_version = (known after apply)
      + operation = (known after apply)
      + project = (known after apply)
      + region = (known after apply)
      + services_ipv4_cidr = (known after apply)
      + subnetwork = (known after apply)
      + tpu_ipv4_cidr_block = (known after apply)
      + zone = (known after apply)

      + addons_config {
          + cloudrun_config {
              + disabled = (known after apply)
            }

          + horizontal_pod_autoscaling {
              + disabled = (known after apply)
            }

          + http_load_balancing {
              + disabled = (known after apply)
            }

          + istio_config {
              + auth = (known after apply)
              + disabled = (known after apply)
            }

          + kubernetes_dashboard {
              + disabled = (known after apply)
            }

          + network_policy_config {
              + disabled = (known after apply)
            }
        }

      + authenticator_groups_config {
          + security_group = (known after apply)
        }

      + cluster_autoscaling {
          + autoscaling_profile = (known after apply)
          + enabled = (known after apply)

          + auto_provisioning_defaults {
              + oauth_scopes = (known after apply)
              + service_account = (known after apply)
            }

          + resource_limits {
              + maximum = (known after apply)
              + minimum = (known after apply)
              + resource_type = (known after apply)
            }
        }

      + database_encryption {
          + key_name = (known after apply)
          + state = (known after apply)
        }

      + master_auth {
          + client_certificate = (known after apply)
          + client_key = (sensitive value)
          + cluster_ca_certificate = (known after apply)

          + client_certificate_config {
              + issue_client_certificate = (known after apply)
            }
        }

      + network_policy {
          + enabled = (known after apply)
          + provider = (known after apply)
        }

      + node_config {
          + disk_size_gb = (known after apply)
          + disk_type = (known after apply)
          + guest_accelerator = (known after apply)
          + image_type = (known after apply)
          + labels = {
              + "environment" = "test"
              + "team" = "devops"
            }
          + local_ssd_count = (known after apply)
          + machine_type = "n1-standard-1"
          + metadata = {
              + "disable-legacy-endpoints" = "true"
            }
          + oauth_scopes = [
              + "https://www.googleapis.com/auth/logging.write",
              + "https://www.googleapis.com/auth/monitoring",
            ]
          + preemptible = false
          + service_account = (known after apply)
          + taint = (known after apply)

          + shielded_instance_config {
              + enable_integrity_monitoring = (known after apply)
              + enable_secure_boot = (known after apply)
            }

          + workload_metadata_config {
              + node_metadata = "GKE_METADATA_SERVER"
            }
        }

      + node_pool {
          + initial_node_count = (known after apply)
          + instance_group_urls = (known after apply)
          + max_pods_per_node = (known after apply)
          + name = (known after apply)
          + name_prefix = (known after apply)
          + node_count = (known after apply)
          + node_locations = (known after apply)
          + version = (known after apply)

          + autoscaling {
              + max_node_count = (known after apply)
              + min_node_count = (known after apply)
            }

          + management {
              + auto_repair = (known after apply)
              + auto_upgrade = (known after apply)
            }

          + node_config {
              + boot_disk_kms_key = (known after apply)
              + disk_size_gb = (known after apply)
              + disk_type = (known after apply)
              + guest_accelerator = (known after apply)
              + image_type = (known after apply)
              + labels = (known after apply)
              + local_ssd_count = (known after apply)
              + machine_type = (known after apply)
              + metadata = (known after apply)
              + min_cpu_platform = (known after apply)
              + oauth_scopes = (known after apply)
              + preemptible = (known after apply)
              + service_account = (known after apply)
              + tags = (known after apply)
              + taint = (known after apply)

              + sandbox_config {
                  + sandbox_type = (known after apply)
                }

              + shielded_instance_config {
                  + enable_integrity_monitoring = (known after apply)
                  + enable_secure_boot = (known after apply)
                }

              + workload_metadata_config {
                  + node_metadata = (known after apply)
                }
            }

          + upgrade_settings {
              + max_surge = (known after apply)
              + max_unavailable = (known after apply)
            }
        }

      + release_channel {
          + channel = (known after apply)
        }

      + workload_identity_config {
          + identity_namespace = "workshop-demo-adqw12.svc.id.goog"
        }
    }

Plan: 1 to add, 0 to change, 0 to destroy.

------------------------------------------------------------------------

This plan was saved to: 1.plan

To perform exactly these actions, run the following command to apply:
    terraform apply "1.plan"
```

Note: The terraform plan output shows that this code is going to create a GKE cluster.

The --out 1.plan flag tells Terraform to save the plan to a file, so that the apply in the next step performs exactly the actions shown here.

Step 9: The execution plan looks good, so let's go ahead and apply it.

```shell
terraform apply 1.plan
```

Output

```
google_container_cluster.my_k8s_cluster: Creating...
google_container_cluster.my_k8s_cluster: Still creating... [10s elapsed]
google_container_cluster.my_k8s_cluster: Still creating... [1m10s elapsed]
google_container_cluster.my_k8s_cluster: Still creating... [2m10s elapsed]
google_container_cluster.my_k8s_cluster: Still creating... [3m20s elapsed]
google_container_cluster.my_k8s_cluster: Creation complete after 3m23s [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.
```

Note: Creating a GKE cluster can take 4-7 minutes.
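Because location is set to a region, this is a regional cluster, and initial_node_count applies per zone: the default node pool gets one node in each zone of the region (the destroy output in Step 13 lists instance groups in europe-west2-a, europe-west2-b and europe-west2-c, so three nodes in total). For a cheaper test cluster, one option is to pin the nodes to fewer zones with node_locations. A sketch of the changed lines in gke.tf, assuming europe-west2-a is the zone you want:

```hcl
resource "google_container_cluster" "my_k8s_cluster" {
  provider = google-beta.gb3
  name     = "my-k8s-cluster"
  location = var.region

  # Assumption: run the nodes in a single zone of the region.
  # initial_node_count is per zone, so this yields one node instead of three.
  node_locations     = ["europe-west2-a"]
  initial_node_count = 1

  # The master_auth, workload_identity_config and node_config blocks
  # from gke.tf stay unchanged.
}
```

The zones in node_locations must belong to the cluster's region. Alternatively, setting location to a zone creates a zonal cluster, in which case get-credentials takes --zone instead of --region.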

Step 10: Let's connect to the GKE cluster.

```shell
gcloud container clusters get-credentials my-k8s-cluster --region europe-west2 --project workshop-demo-adqw12
```

Output

```
Fetching cluster endpoint and auth data.
kubeconfig entry generated for my-k8s-cluster.
```
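The cluster name and region in this command are typed by hand. As an optional convenience, they could be exposed as Terraform outputs, for example in a hypothetical outputs.tf that is not part of the original code; after the next terraform apply, terraform output lists them so they can be copied into the gcloud command:

```hcl
# Cluster name, for gcloud container clusters get-credentials.
output "cluster_name" {
  value = google_container_cluster.my_k8s_cluster.name
}

# Cluster location (a region here), for the --region flag.
output "cluster_location" {
  value = google_container_cluster.my_k8s_cluster.location
}
```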

Step 11: Now, run the kubectl version command to check the server version.

```shell
kubectl version
```

Output

```
Client Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.10-dispatcher", GitCommit:"f5757a1dee5a89cc5e29cd7159076648bf21a02b", GitTreeState:"clean", BuildDate:"2020-02-06T03:31:35Z", GoVersion:"go1.12.12b4", Compiler:"gc", Platform:"darwin/amd64"}
Server Version: version.Info{Major:"1", Minor:"14+", GitVersion:"v1.14.10-gke.17", GitCommit:"bdceba0734835c6cb1acbd1c447caf17d8613b44", GitTreeState:"clean", BuildDate:"2020-01-17T23:10:13Z", GoVersion:"go1.12.12b4", Compiler:"gc", Platform:"linux/amd64"}
```

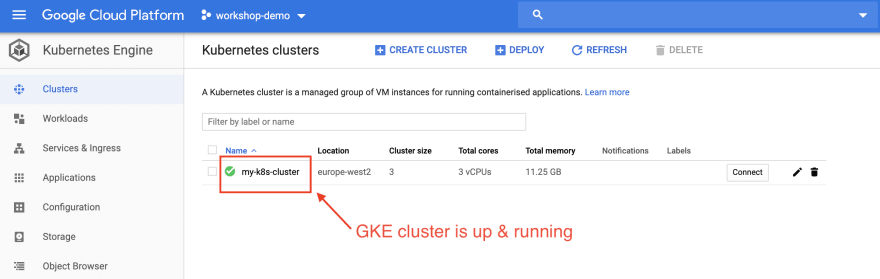

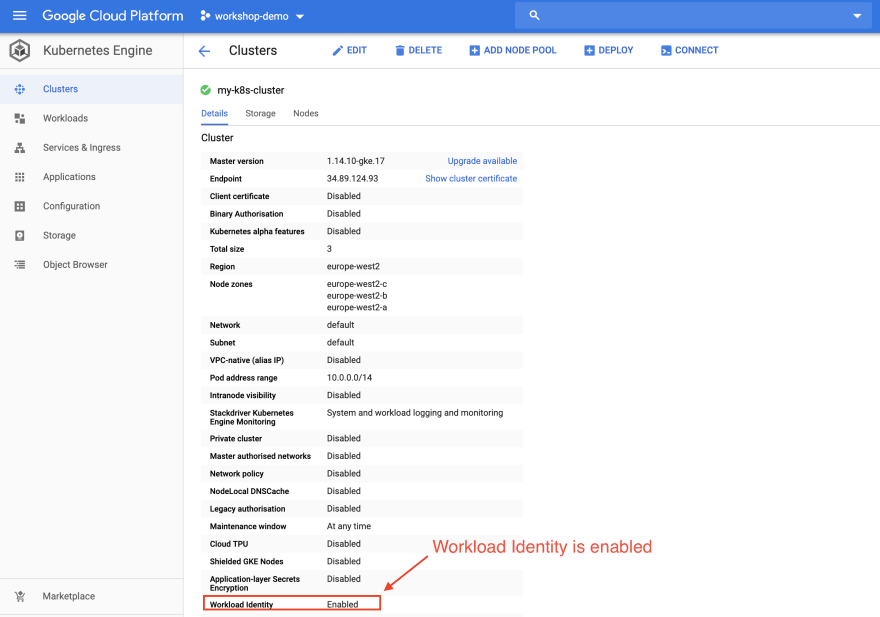

Step 12: Now, we will move to Google Cloud Platform Console to view GKE cluster properties.

Step 13: Once we no longer need this infrastructure, we can clean it up to reduce costs.

```shell
terraform destroy
```

Output

```
google_container_cluster.my_k8s_cluster: Refreshing state... [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster]

An execution plan has been generated and is shown below.
Resource actions are indicated with the following symbols:
  - destroy

Terraform will perform the following actions:

  # google_container_cluster.my_k8s_cluster will be destroyed
  - resource "google_container_cluster" "my_k8s_cluster" {
      - additional_zones = [] -> null
      - cluster_ipv4_cidr = "10.0.0.0/14" -> null
      - default_max_pods_per_node = 110 -> null
      - enable_binary_authorization = false -> null
      - enable_intranode_visibility = false -> null
      - enable_kubernetes_alpha = false -> null
      - enable_legacy_abac = false -> null
      - enable_shielded_nodes = false -> null
      - enable_tpu = false -> null
      - endpoint = "34.89.124.93" -> null
      - id = "projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster" -> null
      - initial_node_count = 1 -> null
      - instance_group_urls = [
          - "https://www.googleapis.com/compute/beta/projects/workshop-demo-adqw12/zones/europe-west2-c/instanceGroups/gke-my-k8s-cluster-default-pool-7dc4a362-grp",
          - "https://www.googleapis.com/compute/beta/projects/workshop-demo-adqw12/zones/europe-west2-b/instanceGroups/gke-my-k8s-cluster-default-pool-0538b086-grp",
          - "https://www.googleapis.com/compute/beta/projects/workshop-demo-adqw12/zones/europe-west2-a/instanceGroups/gke-my-k8s-cluster-default-pool-858715ad-grp",
        ] -> null
      - label_fingerprint = "a9dc16a7" -> null
      - location = "europe-west2" -> null
      - logging_service = "logging.googleapis.com/kubernetes" -> null
      - master_version = "1.14.10-gke.17" -> null
      - monitoring_service = "monitoring.googleapis.com/kubernetes" -> null
      - name = "my-k8s-cluster" -> null
      - network = "projects/workshop-demo-adqw12/global/networks/default" -> null
      - node_locations = [
          - "europe-west2-a",
          - "europe-west2-b",
          - "europe-west2-c",
        ] -> null
      - node_version = "1.14.10-gke.17" -> null
      - project = "workshop-demo-adqw12" -> null
      - resource_labels = {} -> null
      - services_ipv4_cidr = "10.3.240.0/20" -> null
      - subnetwork = "projects/workshop-demo-adqw12/regions/europe-west2/subnetworks/default" -> null

      - addons_config {
          - network_policy_config {
              - disabled = true -> null
            }
        }

      - cluster_autoscaling {
          - autoscaling_profile = "BALANCED" -> null
          - enabled = false -> null
        }

      - database_encryption {
          - state = "DECRYPTED" -> null
        }

      - master_auth {
          - cluster_ca_certificate = "LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURERENDQWZTZ0F3SUJBZ0lSQVBmNzJzWRGZhd25uQUYvZz09Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K" -> null

          - client_certificate_config {
              - issue_client_certificate = false -> null
            }
        }

      - network_policy {
          - enabled = false -> null
          - provider = "PROVIDER_UNSPECIFIED" -> null
        }

      - node_config {
          - disk_size_gb = 100 -> null
          - disk_type = "pd-standard" -> null
          - guest_accelerator = [] -> null
          - image_type = "COS" -> null
          - labels = {
              - "environment" = "test"
              - "team" = "devops"
            } -> null
          - local_ssd_count = 0 -> null
          - machine_type = "n1-standard-1" -> null
          - metadata = {
              - "disable-legacy-endpoints" = "true"
            } -> null
          - oauth_scopes = [
              - "https://www.googleapis.com/auth/logging.write",
              - "https://www.googleapis.com/auth/monitoring",
            ] -> null
          - preemptible = false -> null
          - service_account = "default" -> null
          - tags = [] -> null
          - taint = [] -> null

          - shielded_instance_config {
              - enable_integrity_monitoring = true -> null
              - enable_secure_boot = false -> null
            }

          - workload_metadata_config {
              - node_metadata = "GKE_METADATA_SERVER" -> null
            }
        }

      - node_pool {
          - initial_node_count = 1 -> null
          - instance_group_urls = [
              - "https://www.googleapis.com/compute/v1/projects/workshop-demo-adqw12/zones/europe-west2-c/instanceGroupManagers/gke-my-k8s-cluster-default-pool-7dc4a362-grp",
              - "https://www.googleapis.com/compute/v1/projects/workshop-demo-adqw12/zones/europe-west2-b/instanceGroupManagers/gke-my-k8s-cluster-default-pool-0538b086-grp",
              - "https://www.googleapis.com/compute/v1/projects/workshop-demo-adqw12/zones/europe-west2-a/instanceGroupManagers/gke-my-k8s-cluster-default-pool-858715ad-grp",
            ] -> null
          - max_pods_per_node = 0 -> null
          - name = "default-pool" -> null
          - node_count = 1 -> null
          - node_locations = [
              - "europe-west2-a",
              - "europe-west2-b",
              - "europe-west2-c",
            ] -> null
          - version = "1.14.10-gke.17" -> null

          - management {
              - auto_repair = false -> null
              - auto_upgrade = true -> null
            }

          - node_config {
              - disk_size_gb = 100 -> null
              - disk_type = "pd-standard" -> null
              - guest_accelerator = [] -> null
              - image_type = "COS" -> null
              - labels = {
                  - "environment" = "test"
                  - "team" = "devops"
                } -> null
              - local_ssd_count = 0 -> null
              - machine_type = "n1-standard-1" -> null
              - metadata = {
                  - "disable-legacy-endpoints" = "true"
                } -> null
              - oauth_scopes = [
                  - "https://www.googleapis.com/auth/logging.write",
                  - "https://www.googleapis.com/auth/monitoring",
                ] -> null
              - preemptible = false -> null
              - service_account = "default" -> null
              - tags = [] -> null
              - taint = [] -> null

              - shielded_instance_config {
                  - enable_integrity_monitoring = true -> null
                  - enable_secure_boot = false -> null
                }

              - workload_metadata_config {
                  - node_metadata = "GKE_METADATA_SERVER" -> null
                }
            }
        }

      - release_channel {
          - channel = "UNSPECIFIED" -> null
        }

      - workload_identity_config {
          - identity_namespace = "workshop-demo-adqw12.svc.id.goog" -> null
        }
    }

Plan: 0 to add, 0 to change, 1 to destroy.

Do you really want to destroy all resources?
  Terraform will destroy all your managed infrastructure, as shown above.
  There is no undo. Only 'yes' will be accepted to confirm.

  Enter a value: yes

google_container_cluster.my_k8s_cluster: Destroying... [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster]
google_container_cluster.my_k8s_cluster: Still destroying... [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster, 10s elapsed]
google_container_cluster.my_k8s_cluster: Still destroying... [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster, 1m10s elapsed]
google_container_cluster.my_k8s_cluster: Still destroying... [id=projects/workshop-demo-adqw12/locations/europe-west2/clusters/my-k8s-cluster, 2m40s elapsed]
google_container_cluster.my_k8s_cluster: Destruction complete after 2m41s

Destroy complete! Resources: 1 destroyed.
```

I hope this blog helps you build a GKE cluster with Terraform.

If you have feedback or questions, please reach out to me on LinkedIn or Twitter.
