“A series of blog posts, where I present my thoughts on various subjects.”

I’ve recently been watching MIT’s lecture series on AGI (Artificial General Intelligence). The course’s motto is “Our goal is to take an engineering approach to explore possible paths toward building human-level intelligence for a better world”. I’ll attempt to summarize the content discussed in these lectures. In this post, I’ll go over the idea of general intelligence. We’ll also discuss modern AI systems and their limitations.

To start off, let’s differentiate AGI from AI. AI systems are systems that can perform certain tasks without being explicitly programmed or hard-coded to do so, i.e. a certain level of “learning” is involved. AGI systems are intelligent systems whose learning capabilities are as flexible as a human’s. Let’s take an example to show the difference. In 2016, AlphaGo (an AI system) beat Lee Sedol (18-time world champion) at the game of Go. Go is an unbelievably complex game: each of the 361 points on the board can be empty, black, or white, which gives up to 3^361 board configurations (roughly 10^172), far more than the estimated number of atoms in the observable universe. It’s safe to say that there’s no practical way to hand-write “rules” or brute-force algorithms that “solve” this game. Harnessing the power of neural networks and reinforcement learning is what made it possible for AlphaGo to beat humans at Go.
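To get a feel for that number, here is a quick back-of-the-envelope check in Python. It is only a rough sketch: the figure of about 10^80 atoms in the observable universe is a commonly cited estimate that I'm assuming here, not something from the lectures, and 3^361 is an upper bound because many of those configurations are not legal Go positions.

```python
# Upper bound on Go board configurations: each of the 19 x 19 = 361
# points is empty, black, or white, giving 3**361 arrangements.
# Many of these are not legal positions, so this is only an upper bound.
BOARD_POINTS = 19 * 19
upper_bound = 3 ** BOARD_POINTS

# Assumption: ~10**80 atoms in the observable universe
# (a rough, commonly cited estimate).
ATOMS_IN_UNIVERSE = 10 ** 80

# Number of decimal digits tells us the order of magnitude.
digits = len(str(upper_bound))

# Order of magnitude of (configurations / atoms).
ratio_exponent = len(str(upper_bound // ATOMS_IN_UNIVERSE)) - 1

print(f"3^361 has {digits} digits (about 10^{digits - 1})")
print(f"That is roughly 10^{ratio_exponent} times the atom estimate")
```

Python's integers have arbitrary precision, so the exact value can be computed directly without overflow.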

“Does this mean AlphaGo has surpassed human intelligence?”

Not exactly. Intelligence is the ability to gain and apply knowledge and skills, and it is more often measured by how quickly someone (or something) can learn new tasks than by their skill in a particular domain. Hence AlphaGo, even with its extraordinary skill at Go, isn’t equal to human intelligence, as all it knows is how to play Go (think of how it can’t even do a Google search). We call systems like these Narrow AI. Narrow AI is AI that is built to perform a single task, like recognizing faces or playing chess. All AI systems today are Narrow AI systems.

AGI is still a dream. It’s quite difficult to quantify how close we are to achieving AGI. Take, ‘for instance’ when in 2014,

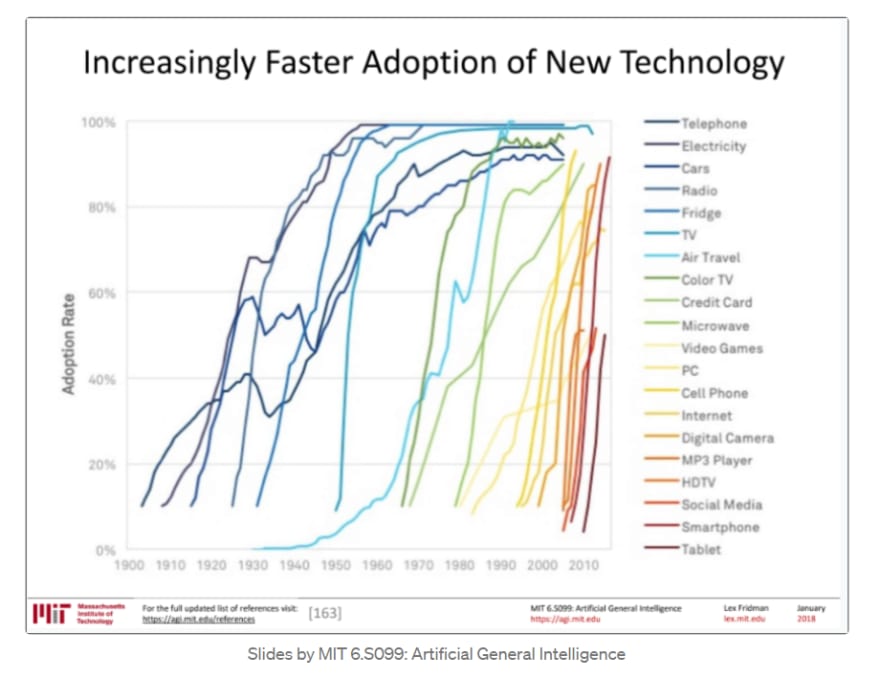

Nick Bostrom in his book — Superintelligence, mentioned how beating the world champion in Go would take about a decade. However, this was done in 2016, about 8 years early. This is in fact what I love about Machine Learning. The stochasticity doesn’t just lie in the algorithms but also in the speed with which the field is moving ahead. There’s been a rise in the number of breakthroughs and innovations post-2010 and this number will continue to increase thanks to Kurzweil’s Law of Accelerating Returns, Moore’s Law, and increasingly faster adoption of new technology.

Some flaws in our current AI systems include:

- Transfer learning: Unable to transfer learned representations to most related domains

- Requires lots of data: Inefficient at learning from data, i.e. needs a large number of examples to learn

- Requires supervised data: Real-world data is costly to annotate

- Not fully automated: Needs hyperparameter tuning (neural networks are very sensitive to choices such as learning rate, loss function, mini-batch size, optimizer, etc.)

- Reward function: Difficult to define a good reward function (Reinforcement Learning)

- Transparency: Neural networks are mostly black boxes, even with tools that visualize various aspects of their operation

- Edge cases: Deep Learning handles edge cases poorly, i.e. inputs that look unlike the data it was trained on
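The hyperparameter point is easy to see even without a neural network. Below is a toy sketch of my own (not from the lectures): plain gradient descent on the one-variable function f(w) = w^2, where the function name `gradient_descent` and the chosen learning rates are just illustrative assumptions. The only thing that changes between runs is the learning rate.

```python
def gradient_descent(learning_rate, steps=50, start=1.0):
    """Minimize f(w) = w**2 with plain gradient descent; return the final w."""
    w = start
    for _ in range(steps):
        grad = 2.0 * w              # derivative of w**2
        w -= learning_rate * grad   # standard gradient descent update
    return w


# Same problem, same number of steps, only the learning rate differs.
for lr in (0.01, 0.1, 1.1):
    print(f"learning_rate={lr}: final w = {gradient_descent(lr):.3e}")
```

Each update multiplies w by (1 - 2 * learning_rate), so a small rate creeps slowly toward the minimum at 0, a moderate rate gets there quickly, and a rate above 1 makes w grow in magnitude at every step. Real networks are far messier, but the same kind of sensitivity shows up there too.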

Overall, the picture painted is one of hope, promise, and excitement. The distant future holds many surprises, and we can’t speculate enough.

If you liked this article, feel free to connect with me. Any constructive feedback is welcome. You can reach out to me on Twitter, LinkedIn, or in the comments.
