Breaking stuff on purpose, primarily in the production environment, is one of the mantras of Chaos Engineering. But when you present your plan to your engineering manager or product owner, you often meet some resistance. Their concerns are valid: What if the breakage is irreversible? What will happen to the end users? Will our support ticket system get busy? This blog post will help you get started with a Chaos Engineering practice in your organization.

What is Chaos Engineering?

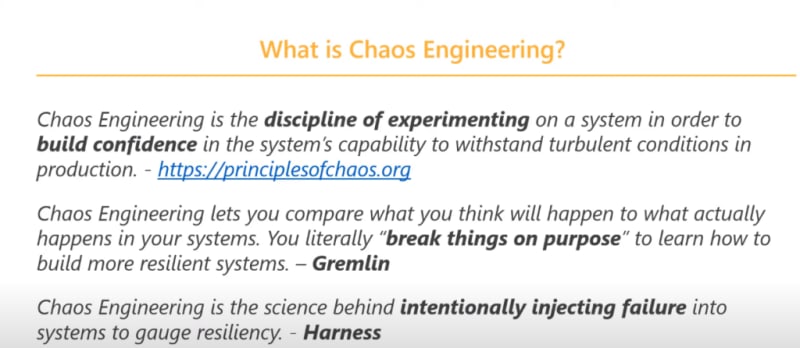

There are various definitions available from industry leaders about chaos engineering. Here is my slide from one of my videos :

Getting Started

The goal of chaos experiments is to learn how our system behaves during catastrophic failures in production and how resilient it is, which gives us an opportunity to optimize it and fix the issues.

Here is how you can begin your chaos engineering practice.

Get a buy-in from your manager

The first step is to get approval from your manager to carry out the experiments. Ideally, chaos experiments are carried out in production, but I suggest you take baby steps: you can perform chaos experiments in any valid environment. If the production environment is not an option, I recommend running the experiments in a non-production (or staging) environment.

Explain the value that chaos experiments bring. To name a few benefits:

- Identifying failures and bottlenecks

- Resilience validation

- Scaling validation

Understand the Architecture

Systems fail all the time. Before running your chaos experiments, thoroughly understand your system's architecture. Hold a working session with your developers, architects, and SREs to brainstorm the application architecture and understand the upstream and downstream components, dependencies, timeline, game day schedule, and more. This will help you understand where your system could fail.

Write Hypothesis

Start writing a list of hypotheses. A hypothesis (/hīˈpäTHəsəs/) is a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation.

For example: given a Kubernetes deployment, deleting one pod should not increase the service response time under typical load.

Another example: the load balancer must route requests only to healthy, running nodes.
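A hypothesis like the pod-deletion one can be written down as data plus a check. Here is a minimal Python sketch, not a prescribed format: the `Hypothesis` class, the 10% tolerance, and the sample response times are all assumptions made for illustration.

```python
from dataclasses import dataclass
from statistics import median
from typing import Sequence


@dataclass
class Hypothesis:
    """A steady-state hypothesis (hypothetical structure for illustration)."""
    description: str
    baseline_ms: Sequence[float]  # response times sampled before the fault
    tolerance: float = 0.10       # assumed: allow up to a 10% rise in the median

    def holds(self, observed_ms: Sequence[float]) -> bool:
        """Return True if the median response time stayed within tolerance."""
        limit = median(self.baseline_ms) * (1 + self.tolerance)
        return median(observed_ms) <= limit


pod_delete = Hypothesis(
    description="Deleting one pod does not increase response time under typical load",
    baseline_ms=[120, 125, 118, 130, 122],  # made-up sample values
)

# Response times sampled while one pod was deleted (made-up values).
print(pod_delete.holds([121, 126, 124, 129, 123]))
```

Whichever way the check comes out, treat the result as something to learn from rather than a grade.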

There is no right or wrong while writing a hypothesis. It is an iterative process.

Our objective is NOT to make our hypothesis PASS or FAIL. Each hypothesis will give us an opportunity to understand our system.

Minimize the Blast Radius

Always start small. Reduce the impact on end users while running chaos experiments by minimizing the blast radius. For example, instead of deleting a deployment in the Kubernetes cluster, delete individual pods and validate the resiliency. If you do delete a deployment, make sure GitOps is in effect so that the GitOps flow recreates the deployment automatically.

Another example: instead of shutting down all the nodes in the cluster, shut down 50% of the running nodes; instead of taking an entire region offline, shut down a single zone.

Once the chaos process has matured and your team is comfortable, you can slowly increase the blast radius.
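One way to keep the blast radius explicit is to make it a parameter. The sketch below assumes you already have a list of node names from your own tooling; the `pick_targets` helper and the node names are hypothetical, and the function only selects targets, it does not shut anything down.

```python
import math
import random
from typing import List, Optional, Sequence


def pick_targets(nodes: Sequence[str], fraction: float,
                 seed: Optional[int] = None) -> List[str]:
    """Return a random subset of nodes sized to the blast-radius fraction."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    if not nodes:
        return []
    # Round down, but always target at least one node.
    count = max(1, math.floor(len(nodes) * fraction))
    return random.Random(seed).sample(list(nodes), count)


nodes = ["node-a", "node-b", "node-c", "node-d"]  # hypothetical node names
targets = pick_targets(nodes, fraction=0.5, seed=42)
print(targets)  # two of the four nodes
```

Starting with a small `fraction` and raising it between game days mirrors the gradual increase described above.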

Plan for a Game Day

Plan ahead and always have a Plan B. Notify all your stakeholders at least one week in advance and start a unified communication channel in Slack (or your company's collaboration platform) to post regular updates. I suggest having your developers, DevOps, or SRE team on hand when you run your first experiment.

Run your First Experiment

No one is perfect. It is okay if you are having trouble running your first experiment. Post an update promptly and inform all the stakeholders.

If you are able to run your first experiment successfully, here is my virtual pat on the back.

Believe me, running the first chaos experiment is like riding a high-thrill roller coaster. If things go south, make sure you are able to halt the experiment and revert the infrastructure with the help of your DevOps or SRE team.

During the experiment, monitor your observability dashboard and watch the vitals such as response time, disk utilization, passed / failed transactions, health checks, and more.
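The decision to halt can be made less subjective by agreeing on thresholds before the game day. This is a rough sketch under stated assumptions: the `should_halt` function, the 500 ms p95 limit, and the 5% error-rate limit are illustrative values, not recommendations, and the inputs would come from whatever observability tool you use.

```python
import math
from typing import Sequence


def p95(values: Sequence[float]) -> float:
    """95th percentile using the nearest-rank method."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(0.95 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def should_halt(response_ms: Sequence[float], failed: int, total: int,
                p95_limit_ms: float = 500.0,
                max_error_rate: float = 0.05) -> bool:
    """Return True if the observed vitals breach the agreed thresholds."""
    error_rate = failed / total if total else 0.0
    too_slow = bool(response_ms) and p95(response_ms) > p95_limit_ms
    return too_slow or error_rate > max_error_rate


# Made-up samples: 18 fast requests, 2 slow ones, no failed transactions.
samples = [100.0] * 18 + [900.0] * 2
print(should_halt(samples, failed=0, total=20))
```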

Analysis

Once the experiment is done, log all your observations in a spreadsheet, analyze them, and define a verdict for each hypothesis. Again, there is no pass or fail; it is a learning exercise.
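If you would rather generate the spreadsheet than type it by hand, a CSV file opens in any spreadsheet tool. The column names and the sample rows below are assumptions for illustration; adapt them to what your team actually records.

```python
import csv
import io
from typing import Dict, Iterable

# Hypothetical columns for an observation log.
FIELDS = ["experiment", "hypothesis", "observation", "verdict"]


def to_csv(rows: Iterable[Dict[str, str]]) -> str:
    """Render observation rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up observations from a game day.
observations = [
    {
        "experiment": "delete one pod",
        "hypothesis": "response time does not increase",
        "observation": "median response time rose briefly then recovered",
        "verdict": "held",
    },
    {
        "experiment": "shut down one zone",
        "hypothesis": "load balancer routes only to healthy nodes",
        "observation": "some requests reached a stopped node",
        "verdict": "did not hold",
    },
]

report = to_csv(observations)
print(report)
```

To save the log, write `report` to a file such as `observations.csv` (the file name is only an example).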

Brainstorm

Schedule a meeting with your developers, architects, and DevOps / SRE team to discuss your verdicts. This will help the team understand the verdicts and fix the issues you discovered. Once the issues are addressed, you can rerun the experiments.

If you find that the system is resilient, you can try increasing the blast radius and rerun the experiments.

Next Steps

After running several game days, you will have learned about team dynamics, system performance, and more. The next step is to embed chaos experiments into your developer workflow so that they run automatically, which will give your team more confidence.

Happy Chaos'ing!
