Rockset is a database used for real-time search and analytics on streaming data. In scenarios involving analytics on massive data streams, we’re often asked the maximum throughput and lowest data latency Rockset can achieve and how it stacks up to other databases. To find out, we decided to test the streaming ingestion performance of Rockset’s next generation cloud architecture and compare it to open-source search engine Elasticsearch, a popular sink for Apache Kafka.

For this benchmark, we evaluated Rockset and Elasticsearch ingestion performance on throughput and data latency. Throughput measures the rate at which data is processed, impacting the database's ability to efficiently support high-velocity data streams. Data latency, on the other hand, refers to the amount of time it takes to ingest and index the data and make it available for querying, affecting the ability of a database to provide up-to-date results. We examine latency at the 95th and 99th percentile, given that both databases are used for production applications and require predictable performance.

We found that Rockset beat Elasticsearch on both throughput and end-to-end latency at the 99th percentile. Rockset achieved up to 4x higher throughput and 2.5x lower latency than Elasticsearch for streaming data ingestion.

In this blog, we’ll walk through the benchmark framework, configuration and results. We’ll also delve under the hood of the two databases to better understand why their performance differs when it comes to search and analytics on high-velocity data streams.

Why measure streaming data ingestion?

Streaming data is on the rise with over 80% of Fortune 100 companies using Apache Kafka. Many industries including gaming, internet and financial services are mature in their adoption of event streaming platforms and have already graduated from data streams to torrents. This makes it crucial to understand the scale at which eventually consistent databases Rockset and Elasticsearch can ingest and index data for real-time search and analytics.

To unlock streaming data for real-time use cases including personalization, anomaly detection and logistics tracking, organizations pair an event streaming platform such as Confluent Cloud, Apache Kafka or Amazon Kinesis with a downstream database. There are several advantages to using a database like Rockset or Elasticsearch, including:

- Incorporating historical and real-time streaming data for search and analytics

- Supporting transformations and rollups at time of ingest

- Ideal when data model is in flux

- Ideal when query patterns require specific indexing strategies

Furthermore, many search and analytics applications are latency sensitive, leaving only a small window of time to take action. This is the benefit of databases that were designed with streaming in mind: they can efficiently process incoming events as they arrive rather than falling back on slow batch processing.

Now, let’s jump into the benchmark so you can have an understanding of the streaming ingest performance you can achieve on Rockset and Elasticsearch.

Using RockBench to measure throughput and latency

We evaluated the streaming ingest performance of Rockset and Elasticsearch on RockBench, a benchmark that measures the peak throughput and end-to-end latency of databases.

RockBench has two components: a data generator and a metrics evaluator. The data generator writes events every second to the database; the metrics evaluator measures the throughput and the end-to-end latency, that is, the time from when an event is generated until it is queryable.

Multiple instances of the benchmark connect to the database under test.

The data generator produces documents of 1.25 KB each, and each document represents a single event. At this size, 8,000 writes per second is equivalent to 10 MB/s.
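The conversion between write rate and throughput can be checked with a few lines of Python. This is only a sketch: it assumes the 8,000 writes are a per-second rate and that 1 MB is 1,000 KB, and the function names are illustrative rather than part of RockBench.

```python
DOC_SIZE_KB = 1.25  # document size stated in the post


def throughput_mb_per_s(writes_per_second: float, doc_size_kb: float = DOC_SIZE_KB) -> float:
    """Convert a document write rate into MB/s (assumes 1 MB = 1,000 KB)."""
    return writes_per_second * doc_size_kb / 1_000


def writes_per_second_for(target_mb_per_s: float, doc_size_kb: float = DOC_SIZE_KB) -> float:
    """Inverse conversion: documents per second needed to reach a target MB/s."""
    return target_mb_per_s * 1_000 / doc_size_kb


if __name__ == "__main__":
    print(throughput_mb_per_s(8_000))   # 10.0
    print(writes_per_second_for(10))    # 8000.0
```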

Peak throughput is the highest throughput at which the database can keep up without an ever-growing backlog. For this benchmark, we increased the ingest rate in increments of 10 MB/s until the database could no longer sustainably keep up over a period of 45 minutes. We took the peak throughput to be the highest 10 MB/s increment that the database could still sustain.
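The stepping procedure described above can be sketched as a simple loop. The `can_sustain` callback is hypothetical: it stands in for running the load at a given rate for the 45-minute window and reporting whether the backlog stayed bounded. This is not RockBench code, and the `max_rate` cap is an assumption added only to keep the loop finite.

```python
from typing import Callable

STEP_MB_PER_S = 10  # increment used in the post


def find_peak_throughput(can_sustain: Callable[[int], bool], max_rate: int = 1_000) -> int:
    """Return the highest rate (MB/s), in 10 MB/s steps, that is still sustained.

    `can_sustain(rate)` is a hypothetical callback that runs the workload at
    `rate` MB/s for the observation window and returns True if the database
    kept up without an ever-growing backlog.
    """
    peak = 0
    rate = STEP_MB_PER_S
    while rate <= max_rate and can_sustain(rate):
        peak = rate            # this increment was sustained
        rate += STEP_MB_PER_S  # try the next increment
    return peak


if __name__ == "__main__":
    # Toy stand-in for a system that keeps up until 90 MB/s.
    print(find_peak_throughput(lambda rate: rate <= 90))  # 90
```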

Each document has 60 fields containing nested objects and arrays to mirror semi-structured events in real life scenarios. The documents also contain several fields that are used to calculate the end-to-end latency:

- _id: The unique identifier of the document

- _event_time: Reflects the clock time of the generator machine

- generator_identifier: 64-bit random number

The _event_time of that document is then subtracted from the current time of the machine to arrive at the data latency of the document. This measurement also includes round-trip latency—the time required to run the query and get results from the database back to the client. This metric is published to a Prometheus server and the p50, p95 and p99 latencies are calculated across all evaluators.
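As a rough illustration of the latency calculation, the sketch below subtracts a document's `_event_time` from the evaluator's clock and computes percentiles over the samples. It assumes timestamps in seconds and uses a plain nearest-rank percentile; in the benchmark the percentiles are calculated from metrics published to Prometheus, so this is a stand-in for the idea rather than the actual pipeline.

```python
import math
import time
from typing import List, Optional


def data_latency_seconds(event_time: float, now: Optional[float] = None) -> float:
    """Latency of one document: evaluator clock minus the document's _event_time.

    Assumes both values are Unix timestamps in seconds.
    """
    if now is None:
        now = time.time()
    return now - event_time


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list of latency samples."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


if __name__ == "__main__":
    latencies = [float(i) for i in range(1, 101)]  # toy samples, not benchmark data
    for pct in (50, 95, 99):
        print(pct, percentile(latencies, pct))
```

Because the measurement relies on two clocks (generator and evaluator), clock skew between machines would show up directly in the reported latency.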

In this performance evaluation, the data generator inserts new documents to the database and does not update any existing documents.

RockBench Configuration & Results

To compare the scalability of ingest and indexing performance in Rockset and Elasticsearch, we used two configurations with different compute and memory allocations. We selected the Elasticsearch Elastic Cloud cluster configuration that most closely matches the CPU and memory allocations of the Rockset virtual instances. Both configurations made use of Intel Ice Lake processors.

Table of the Rockset and Elasticsearch configurations used in the benchmark.

The data generators and data latency evaluators for Rockset and Elasticsearch were run in their respective clouds and the US West 2 regions for regional compatibility. We selected Elastic Elasticsearch on Azure as it is a cloud that offers Intel Ice Lake processors. The data generator used Rockset’s write API and Elasticsearch’s bulk API to write new documents to the databases.

We ran the Elasticsearch benchmark on the Elastic Elasticsearch managed service, version 8.7.0, the newest stable version, with 32 primary shards, a single replica and a single availability zone. We tested several refresh intervals to tune for better performance and landed on a refresh interval of 1 second, which also happens to be the default setting in Elasticsearch. We settled on 32 primary shards after evaluating performance using 64 and 32 shards, following the Elastic guidance that shard sizes range from 10 GB to 50 GB. We ensured that the shards were equally distributed across all of the nodes and that rebalancing was disabled.
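For readers who want to reproduce a similar setup, the settings described above map onto standard Elasticsearch index and cluster settings. The sketch below only builds the request bodies as Python dictionaries. Reading "a single replica" as `number_of_replicas: 1` and disabling rebalancing through `cluster.routing.rebalance.enable` are assumptions about how the configuration was applied, not details confirmed by the benchmark.

```python
import json

# Body for creating the index (assumed mapping of the described configuration).
index_settings = {
    "settings": {
        "index": {
            "number_of_shards": 32,     # 32 primary shards
            "number_of_replicas": 1,    # assumption: "a single replica"
            "refresh_interval": "1s",   # also the Elasticsearch default
        }
    }
}

# Body for the cluster settings API; one way to disable shard rebalancing.
cluster_settings = {
    "persistent": {
        "cluster.routing.rebalance.enable": "none"
    }
}

if __name__ == "__main__":
    print(json.dumps(index_settings, indent=2))
    print(json.dumps(cluster_settings, indent=2))
```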

As Rockset is a SaaS service, all cluster operations including shards, replicas and indexes are handled by Rockset. You can expect to see similar performance on standard edition Rockset to what was achieved on the RockBench benchmark.

We ran the benchmark using batch sizes of 50 and 500 documents per write request to showcase how well the databases can handle higher write rates. We chose batch sizes of 50 and 500 documents as they mimic the load typically found in incrementally updating streams and high volume data streams.
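To make the batch sizes concrete, here is a minimal sketch of grouping documents into fixed-size batches and serializing one batch in the newline-delimited format that Elasticsearch's bulk API expects (an action line followed by the document, with a trailing newline). The index name and the toy documents are placeholders, and this is not the RockBench generator itself.

```python
import json
from typing import Dict, Iterable, Iterator, List


def batches(docs: Iterable[Dict], batch_size: int) -> Iterator[List[Dict]]:
    """Group documents into lists of at most `batch_size` items."""
    batch: List[Dict] = []
    for doc in docs:
        batch.append(doc)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:  # emit the final, possibly smaller, batch
        yield batch


def bulk_body(batch: List[Dict], index_name: str) -> str:
    """Serialize a batch as NDJSON: one action line, then one source line, per document."""
    lines = []
    for doc in batch:
        lines.append(json.dumps({"index": {"_index": index_name}}))
        lines.append(json.dumps(doc))
    return "\n".join(lines) + "\n"  # the bulk format requires a trailing newline


if __name__ == "__main__":
    docs = [{"n": i} for i in range(120)]  # toy documents
    for batch in batches(docs, 50):
        body = bulk_body(batch, "rockbench")  # "rockbench" is a placeholder index name
        print(len(batch), len(body.splitlines()))
```

At 8,000 documents per second (10 MB/s), a batch size of 50 works out to 160 write requests per second, while a batch size of 500 needs only 16, which is why the two sizes exercise the databases quite differently.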

Throughput: Rockset sees up to 4x higher throughput than Elasticsearch

Peak throughput is the highest throughput at which the database can keep up without an ever-growing backlog. The results with a batch size of 50 showcase that Rockset achieves up to 4x higher throughput than Elasticsearch.

Table of the peak throughput and p95 latency of Elasticsearch and Rockset. Databases were evaluated using vCPU 64 and vCPU 128 instances and a batch size of 50.

Table of the peak throughput and p95 latency of Elasticsearch and Rockset. Databases were evaluated using vCPU 64 and vCPU 128 instances and a batch size of 500.

With a batch size of 500, Rockset achieves up to 1.6x higher throughput than Elasticsearch.

Graph of the peak throughput of Elasticsearch and Rockset using batches of 50 and 500. Databases were evaluated on 64 and 128 vCPU instances. Higher throughput indicates better performance.

One observation from the performance benchmark is that Elasticsearch handles larger batch sizes better than smaller batch sizes. The Elastic documentation recommends using bulk requests as they achieve better performance than single-document index requests. In comparison to Elasticsearch, Rockset sees better throughput performance with smaller batch sizes as it’s designed to process incrementally updating streams.

We also observe that the peak throughput scales linearly as the amount of resources increases on Rockset and Elasticsearch. Rockset consistently beats the throughput of Elasticsearch on RockBench, making it better suited to workloads with high write rates.

Data Latency: Rockset sees up to 2.5x lower data latency than Elasticsearch

We compare Rockset and Elasticsearch end-to-end latency at the highest possible throughput that each system achieved. To measure the data latency, we start with a dataset size of 1 TB and measure the average data latency over a period of 45 minutes at the peak throughput.

With a batch size of 50, the maximum throughput is 90 MB/s in Rockset and 50 MB/s in Elasticsearch. With a batch size of 500, the maximum throughput is 110 MB/s in Rockset and 80 MB/s in Elasticsearch.

Table of the 50th, 95th and 99th percentile data latencies on batch sizes of 50 and 500 in Rockset and Elasticsearch. Data latencies are recorded for 128 vCPU instances.

At the 95th and 99th percentiles, Rockset delivers lower data latency than Elasticsearch at the peak throughput. You can also see that the spread between p50 and p99 data latency is tighter on Rockset than on Elasticsearch.

Graph of the data latency at 50th, 95th and 99th percentiles at the peak throughput rate of Rockset and Elasticsearch. Shows the results of a batch of 500 on 128 vCPU instances. Lower data latency indicates better performance.

Rockset was able to achieve up to 2.5x lower latency than Elasticsearch for streaming data ingestion.

How did we do it?: Rockset gains due to cloud-native efficiency

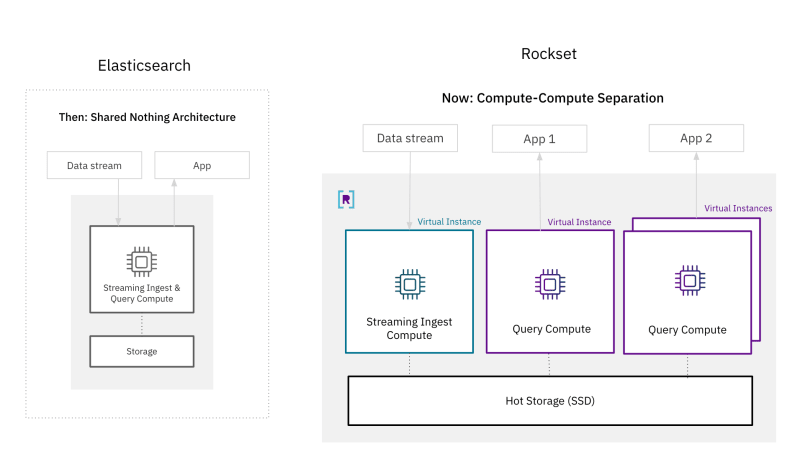

There have been open questions as to whether it is possible for a database to achieve both isolation and real-time performance. The de facto architecture for real-time database systems, including Elasticsearch, is a shared nothing architecture where compute and storage resources are tightly coupled for better performance. With these results, we show that it is possible for a disaggregated cloud architecture to support search and analytics on high-velocity streaming data.

One of the tenets of a cloud-native architecture is resource decoupling, made famous by compute-storage separation, which offers better scalability and efficiency. You no longer need to overprovision resources for peak capacity as you can scale up and down on demand. And, you can provision the exact amount of storage and compute needed for your application.

The knock against decoupled architectures is that they have traded off performance for isolation. In a shared nothing architecture, the tight coupling of resources underpins performance; data ingestion and query processing use the same compute units to ensure that the most recently generated data is available for querying. Storage and compute are also colocated in the same nodes for faster data access and improved query performance.

While tightly coupled architectures made sense in the past, they are no longer necessary due to advances in cloud architectures. Rockset’s compute-storage and compute-compute separation for real-time search and analytics lead the way by isolating streaming ingest compute, query compute and hot storage from each other. Rockset is able to ensure queries access the most recent writes by replicating the in-memory state across virtual instances, a cluster of compute and memory resources, making the architecture well-suited to latency sensitive scenarios. Furthermore, Rockset creates an elastic hot storage tier that is a shared resource for multiple applications.

Diagrams of a (a) shared nothing architecture like Elasticsearch and (b) a compute-compute separation architecture introduced by Rockset.

With compute-compute separation, Rockset achieves better ingest performance than Elasticsearch because it only has to process incoming data once. In Elasticsearch, which has a primary-backup model for replication, every replica needs to expend compute on indexing and compacting newly generated writes. With compute-compute separation, only a single virtual instance does the indexing and compaction before transferring the newly written data to other instances for application serving. The efficiency gains from only needing to process incoming writes once are why Rockset recorded up to 4x higher throughput and 2.5x lower end-to-end latency than Elasticsearch on RockBench.

In Summary: Rockset achieves up to 4x higher throughput and 2.5x lower latency

In this blog, we have walked through the performance evaluation of Rockset and Elasticsearch for high-velocity data streams and come to the following conclusions:

Throughput: Rockset supports higher throughput than Elasticsearch, writing incoming streaming data up to 4x faster. We came to this conclusion by measuring the peak throughput, or the rate at which data latency starts monotonically increasing, on different batch sizes and configurations.

Latency: Rockset consistently delivers lower data latencies than Elasticsearch at the 95th and 99th percentiles, making Rockset well suited for latency-sensitive application workloads. Rockset provides up to 2.5x lower end-to-end latency than Elasticsearch.

Cost/Complexity: We compared Rockset and Elasticsearch streaming ingest performance on hardware resources, using similar allocations of CPU and memory. We also found that Rockset offers the best value. For a similar price point, you not only get better performance on Rockset but can also do away with managing clusters, shards, nodes and indexes. This greatly simplifies operations so your team can focus on building production-grade applications.

We ran this performance benchmark on Rockset’s next generation cloud architecture with compute-compute separation. We were able to show that, even with the isolation of streaming ingestion compute, query compute and storage, Rockset was still able to achieve better performance than Elasticsearch.

You can evaluate Rockset for your own real-time search and analytics workload by starting a free trial with $300 in credits. We have built-in connectors to Confluent Cloud, Kafka and Kinesis along with a host of OLTP databases to make it easy for you to get started.
