I wondered whether there is an easy way to identify faces in images and label them with the names of the recognized people. I don't know much about AI or face recognition, but hey, let's just try it out. My goal is to let the model identify me in a picture (or maybe later in a stream, but that is just a lot of pictures ;)).

Starting point

I am very new to this topic, so I started googling how I can identify myself in a video stream. After a little research, these are the resulting steps:

- Generate a custom trained model that recognizes my face

- Grab the images and identify me in them

I know that there are many more substeps, but this is enough for now. So let's start by generating the custom model.

The Setup

First, I want to describe the setup. As input, I use some pictures of me taken with the shitty camera on my laptop 😉. To train the model, I use Python and the library face_recognition.

I try to avoid dependency hell, so I also use Docker containers to generate the model and run the identification. This is not the main focus of this article, but you can get the project on my GitHub (more on that below).

Train the Library to identify me

First, I use an image of myself. Not a fancy one, but for testing it's okay. It was taken in the morning or late evening, but that doesn't matter.

For now, I will use it as the reference to identify myself in other pictures.

The code looks like this:

    import os

    import face_recognition
    import numpy as np
    from PIL import Image, ImageDraw


    # Function to get the images from the folder
    def load_images_from_folder(folder):
        images = []
        for filename in os.listdir(folder):
            img_path = os.path.join(folder, filename)
            if os.path.isfile(img_path):
                images.append(face_recognition.load_image_file(img_path))
        return images


    # Function to train the model
    def train_face_recognition_model(images_folder):
        # Load the reference image from the given folder
        sascha_image = face_recognition.load_image_file(os.path.join(images_folder, "sascha.jpg"))

        # face_encodings returns one encoding per detected face; use the first one
        encodings = face_recognition.face_encodings(sascha_image)
        if not encodings:
            raise ValueError("No face found in the reference image")
        sascha_face_encoding = encodings[0]

        # Both lists share the same index: face_encodings[i] belongs to labels[i]
        face_encodings = [sascha_face_encoding]
        labels = ["Sascha"]
        return face_encodings, labels

This takes the image called "sascha.jpg" and extracts the encoding (the measurements) of my face so it can be recognized in other pictures. Next, I create a label list with the same length, so that the same index in both lists belongs to the same person. When I add another image, for example of my son, I can put it there too.

Next, we want to identify me in other images. For that, I created the following code. Unfortunately, I am not a Python developer, so I had to do some trial and error. To my wife, it probably looked like this:

But anyway, this is the result:
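To make the "same index" idea concrete, here is a minimal sketch that keeps encodings and labels aligned. It uses short made-up vectors instead of real encodings (the library returns 128 numbers per face), and the `KnownFaces` class with its method names is an assumption for illustration, not part of face_recognition:

```python
from dataclasses import dataclass, field


@dataclass
class KnownFaces:
    """Keeps encodings and labels in two lists that share the same index."""
    encodings: list = field(default_factory=list)
    labels: list = field(default_factory=list)

    def add(self, encoding, label):
        # Append to both lists together so index i always refers to one person
        self.encodings.append(list(encoding))
        self.labels.append(label)

    def label_at(self, index):
        return self.labels[index]


known = KnownFaces()
known.add([0.1, 0.2, 0.3], "Sascha")  # toy vector, not a real encoding
known.add([0.9, 0.8, 0.7], "Son")     # hypothetical second person

print(known.label_at(1))
```

Because both lists only grow through `add`, they cannot get out of step, which is what the index-based label lookup relies on.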

    # The folder with the known images
    images_folder = 'images'
    target_image_path = 'test/image_1.jpg'

    # Do the training
    known_encodings, labels = train_face_recognition_model(images_folder)

    # Load the target image and find the faces in it
    target_image = face_recognition.load_image_file(target_image_path)
    face_locations = face_recognition.face_locations(target_image)
    face_encodings = face_recognition.face_encodings(target_image, face_locations)

    # Open the image for drawing
    pil_image = Image.open(target_image_path)
    draw = ImageDraw.Draw(pil_image)

    # Compare each detected face with the known faces
    for (top, right, bottom, left), face_encoding in zip(face_locations, face_encodings):
        matches = face_recognition.compare_faces(known_encodings, face_encoding)
        face_distances = face_recognition.face_distance(known_encodings, face_encoding)

        # The closest known face decides the name, but only if it counts as a match
        name = "Unknown"
        best_match_index = np.argmin(face_distances)
        if matches[best_match_index]:
            name = labels[best_match_index]

        draw.rectangle([left, top, right, bottom], outline="green", width=2)
        draw.text(xy=(left, top), text=name)

    # Save or display the modified image
    # pil_image.show()
    pil_image.save("test/image_1_ex.jpg")

    # Collect the trained model (the known encodings and their labels)
    model_data = {'face_encodings': known_encodings, 'labels': labels}
    # face_recognition has no save function; model_data could be persisted with pickle, for example

    print("Finished training the model.", labels)
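
The script builds `model_data` but never writes it to disk. Here is a minimal sketch of how the dictionary could be saved and loaded again with Python's standard `pickle` module. The helper names `save_model` and `load_model` and the toy data are assumptions for illustration; they are not part of face_recognition:

```python
import os
import pickle
import tempfile


def save_model(model_data, path):
    # Write the dictionary with encodings and labels to disk
    with open(path, "wb") as f:
        pickle.dump(model_data, f)


def load_model(path):
    # Only unpickle files you created yourself; pickle can execute code on load
    with open(path, "rb") as f:
        return pickle.load(f)


# Toy data standing in for the known encodings and labels
model_data = {"face_encodings": [[0.1, 0.2, 0.3]], "labels": ["Sascha"]}

model_path = os.path.join(tempfile.gettempdir(), "face_recognition_model.pkl")
save_model(model_data, model_path)
restored = load_model(model_path)
print(restored["labels"])
```

With a saved file like this, the encodings would not have to be recomputed from the images on every run.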

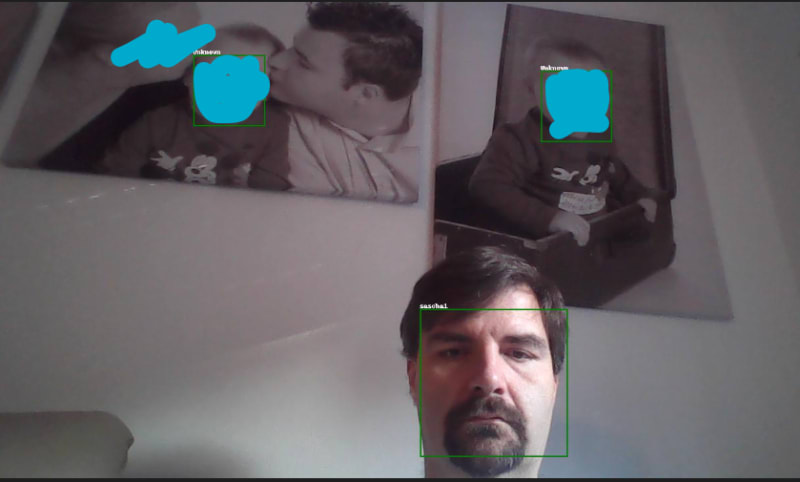

This piece of code opens the target image and finds all faces in it. After that, it iterates over each detected face and compares it with the known faces (in our example, just me). It draws a green bounding box around each detected face and, when there is a match, uses the index of the best match to look up the person's name in the label list. I tested it with this code, and the resulting image looks like this:

That's nice, because it identified me even without a frontal view. But what about identifying my face in a picture that contains more than just my face? This is the result:

I masked the other faces, but it does not identify me. Yeah, it's difficult, because there are two variants of me: first the completely shaved one, and then the partially shaved one. The reference picture is not a shaved variant of me 😃, so the library needs another source to compare against. This means more pictures of myself.
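The matching step can be sketched without the library. As far as I understand it, `compare_faces` checks whether the Euclidean distance between two encodings is at most a tolerance (0.6 by default), and the closest known face then provides the label. The function names and the two-number toy vectors below are assumptions for illustration:

```python
import math

TOLERANCE = 0.6  # default tolerance of face_recognition.compare_faces


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def best_label(known_encodings, labels, candidate, tolerance=TOLERANCE):
    """Return the label of the closest known encoding, or "Unknown"."""
    if not known_encodings:
        return "Unknown"
    distances = [euclidean_distance(k, candidate) for k in known_encodings]
    # Index of the smallest distance, the same role np.argmin plays in the script
    best_index = min(range(len(distances)), key=distances.__getitem__)
    if distances[best_index] <= tolerance:
        return labels[best_index]
    return "Unknown"


known = [[0.0, 0.0], [1.0, 1.0]]  # toy vectors, not real encodings
labels = ["Sascha", "Son"]

print(best_label(known, labels, [0.1, 0.1]))
print(best_label(known, labels, [5.0, 5.0]))
```

The first candidate is close to the first known vector and gets its label; the second is far from everything and stays "Unknown".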
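Why more reference pictures help can be sketched like this: if every person may have several reference encodings, a face only has to be close to one of them. The sketch is plain Python with toy vectors; the `closest_person` function and the data layout are assumptions, not the code from this project:

```python
import math
from collections import defaultdict


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def closest_person(references, candidate, tolerance=0.6):
    """references: list of (person, encoding) pairs, several per person allowed."""
    best = defaultdict(lambda: math.inf)
    for person, encoding in references:
        # Keep only the smallest distance seen for each person
        best[person] = min(best[person], euclidean_distance(encoding, candidate))
    if not best:
        return "Unknown"
    person = min(best, key=best.get)
    return person if best[person] <= tolerance else "Unknown"


references = [
    ("Sascha", [0.0, 0.0]),  # e.g. the original reference picture
    ("Sascha", [2.0, 2.0]),  # e.g. a shaved variant
    ("Son", [5.0, 5.0]),
]

print(closest_person(references, [2.1, 2.0]))
```

Without the second "Sascha" entry, the same candidate would be too far from every reference and would come back as "Unknown".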

Rewrite the code

Now it is time to rewrite the code so that it uses more than one image as the comparison source. I adjusted the training method to read all files in the folder and put each filename into the list as a label. The resulting code looks like this:

    def train_face_recognition_model(images_folder):
        # Load every image in the folder and collect one encoding per image
        face_encodings = []
        labels = []
        for filename in os.listdir(images_folder):
            img_path = os.path.join(images_folder, filename)
            if os.path.isfile(img_path):
                image = face_recognition.load_image_file(img_path)
                encodings = face_recognition.face_encodings(image)
                if not encodings:
                    # Skip images in which no face was detected
                    continue
                face_encodings.append(encodings[0])
                labels.append(os.path.splitext(filename)[0])  # Use the filename as the label
        return face_encodings, labels

Now the filename is used as the label. This is very useful for debugging, because you can see which source image was used for the match. I ran the script again, and the result looks like this:

Whoa, that looks nice: it also identifies other faces, and it identifies my face correctly with the given input. I am happy with the result.
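If several files belong to the same person, the debug label can still be mapped back to a name. A small sketch, assuming a naming convention like `<person>_<variant>.jpg` (this convention and both helper functions are my assumption, the project itself only uses the bare filename):

```python
import os


def label_from_filename(filename):
    # "sascha_shaved.jpg" -> "sascha_shaved"
    return os.path.splitext(os.path.basename(filename))[0]


def person_from_label(label, separator="_"):
    # Assumed naming convention: "<person>_<variant>", e.g. "sascha_shaved"
    return label.split(separator, 1)[0]


for filename in ["sascha.jpg", "sascha_shaved.jpg", "images/son_beach.png"]:
    label = label_from_filename(filename)
    print(label, "->", person_from_label(label))
```

This keeps the full filename for debugging while the part before the separator could be drawn as the name.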

Conclusion

I am not an AI developer or data analyst, but I think this example shows how easy it can be to identify faces within an image. Sure, you could now integrate it into webcam streams (my security cams, for example), but that is not the main topic of this blog post. In conclusion, I can say that this library is very useful for identifying faces. It has some problems identifying variants of a face, but for small examples it is very helpful.

Additional note

Yes! I can smile but not in the early morning 😉

The Source

The working source code of this project, with example pictures, is available at my GitHub:

[GitHub - SBajonczak/face_recognizer: This project takes known pictures and tries to identify them in other pictures](https://github.com/SBajonczak/face_recognizer?ref=blog.bajonczak.com)
