In this writing, we are going to compare current Open Source and Paid Large Language Models, ChatGPT and SerpApi for a technical task that requires a precise and fast solution. We will make a comparison by extracting different parts of the visible text from Google Local Results.

For more in-depth information on what Google Local Results look like, and how they might be served in a structured order, you can visit SerpApi's Google Local Results API Documentation.

For visual confirmation, here is how the feature looks like this:

Prompt to Test out

Here is the structure of the prompt we will be testing throughout different models:

Example 1:

Lineified text of Google Local Result

Classifications of different parts of the text.

Example 2:

...

Example in Question:

Lineified text of Google Local Result

Follow the rules:

- Rule 1

- Rule 2

classification:

This prompt consists of examples to tighten and empower the classification process, some rules for LLM not to generate unwanted words, and to point to the example in question, and the example in question. The prompt ends with a classification to complete, which will hopefully be followed by other classifications.

Below is the example prompt engineered to do text classification of Google Local Results. I have deducted the phone numbers for privacy purposes. But know that I have used the actual phone numbers when prompting to eliminate bias in phone numbers being picked up by only consisting of zeros.

Example 1:

Houndstooth Coffee 4.6(824) · $$ · Coffee shop 401 Congress Ave. #100c · In Frost Bank Tower Closed ⋅ Opens 7AM Cozy hangout for carefully sourced brews

title: Houndstooth Coffee

rating: 4.6

number of reviews: 824

expensiveness: $$

type: Coffee shop

address: 401 Congress Ave. #100c · In Frost Bank Tower

hours information: Opens 7AM

description: Cozy hangout for carefully sourced brews

service options: -

phone number: -

years in business: -

Example 2:

A.D.A. Auto Repair Center 4.8(26) · Auto repair shop 30+ years in business · Nicosia · 00 000000 Closes soon ⋅ 3PM "I strongly recommend this repair shop."

title: A.D.A. Auto Repair Center

rating: 4.8

number of reviews: 26

expensiveness: -

type: Auto repair shop

address: Nicosia

hours information: Closes soon ⋅ 3PM

years in business: 30+ years in business

description: "I strongly recommend this repair shop."

phone number: 00 000000

years in business: -

Example 3:

A to M MARKET 5.0(2) · General store Nicosia · Near Macro Süpermarket Open ⋅ Closes 2AM In-store shopping

title: A to M MARKET

rating: 5.0

number of reviews: 2

expensiveness: -

type: General store

address: Nicosia · Near Macro Süpermarket

hours information: Open ⋅ Closes 2AM

description: -

service options: In-store shopping

phone number: -

years in business: -

Example 4:

Expeditionary 4x4 5.0(1) · Auto parts store (000) 000-0000 Open 24 hours

title: Expeditionary 4x4

rating: 5.0

number of reviews: 1

expensiveness: -

type: Auto parts store

address: -

hours information: Open 24 hours

description: -

service options: -

phone number: (000) 000-0000

years in business: -

Example 5:

Hibbett Sports 4.2(51) · Sporting goods store 4.2 (51) Independence, KS Closed ⋅ Opens 10 AM · (000) 000-0000 Closed ⋅ Opens 10 AM Closed Athletic shoes & activewear

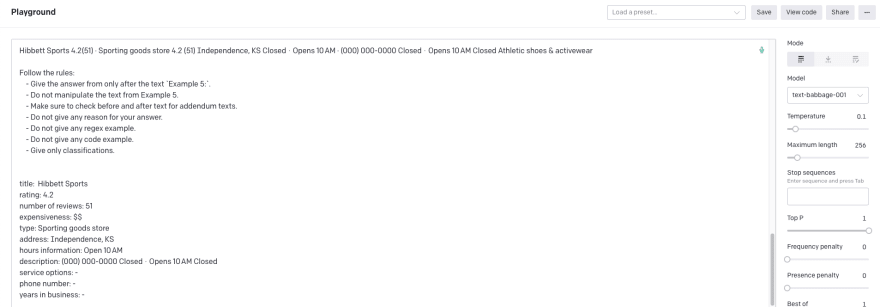

Follow the rules:

- Give the answer from only after the text `Example 5:`.

- Do not manipulate the text from Example 5.

- Make sure to check before and after text for addendum texts.

- Do not give any reason for your answer.

- Do not give any regex example.

- Do not give any code example.

- Give only classifications.

title:

Open Source LLM Models

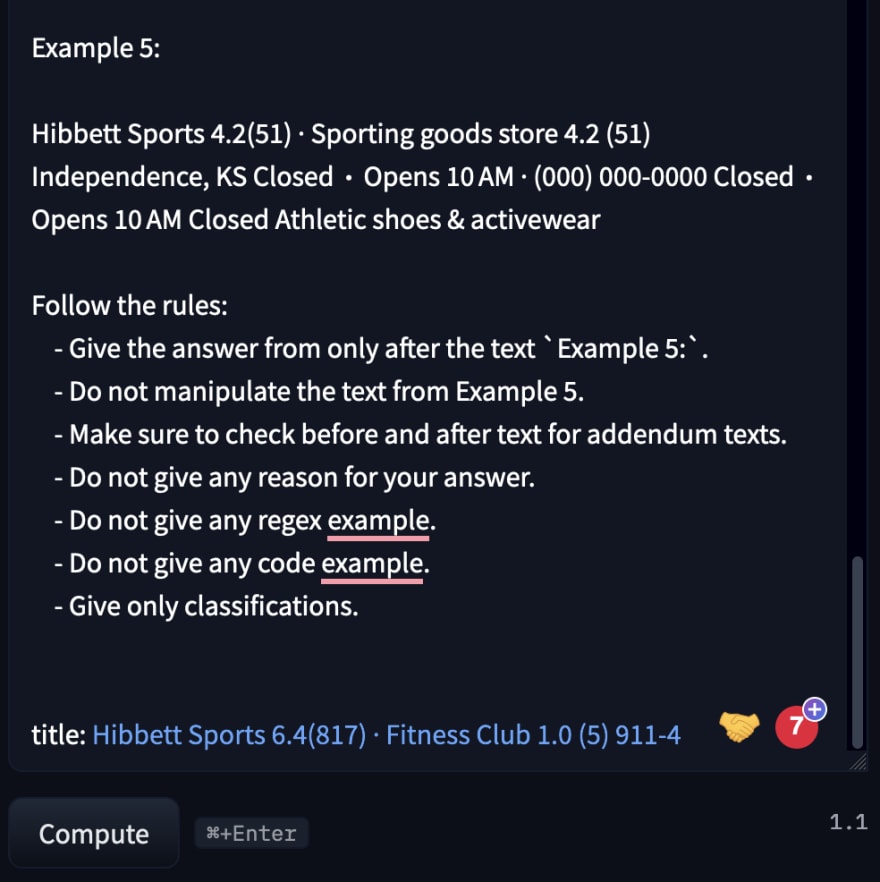

GPT-2

Context: GPT-2 has 124M trainable parameters.

Answer:

title: Hibbett Sports 6.4(817) · Fitness Club 1.0 (5) 911-4

Conclusion: The answer is flawed and contains extra and made-up information. Cannot be used for parsing that requires multiple classifications from the getgo.

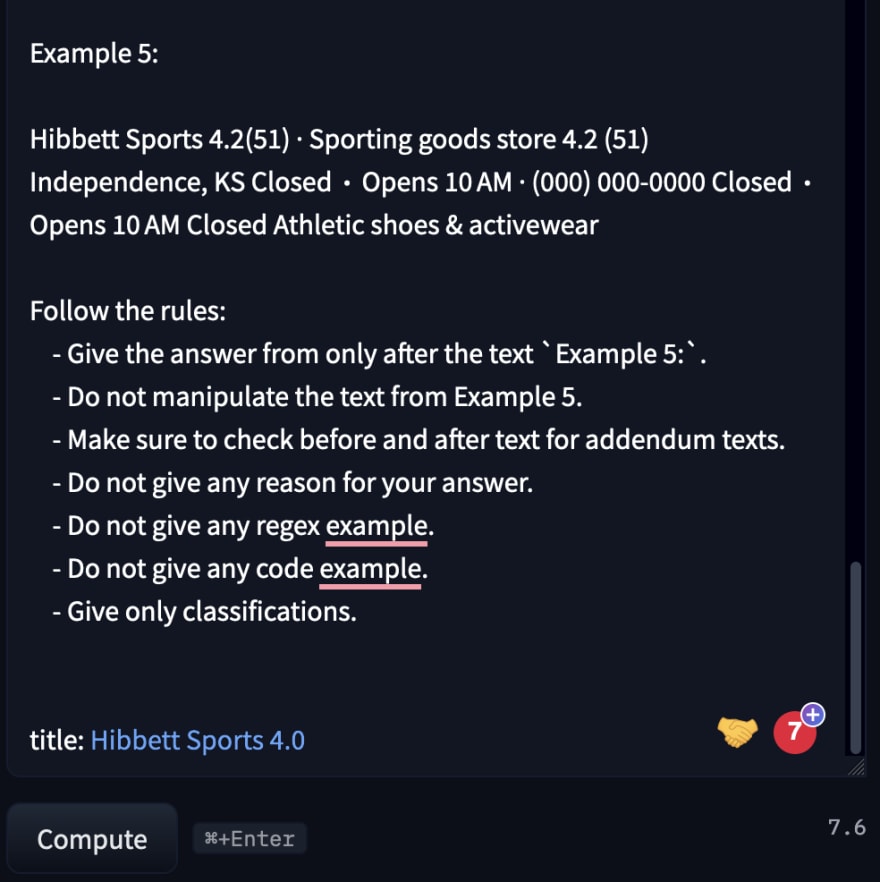

GPT-2 Large

Context: GPT-2 Large has 774M trainable parameters.

Answer:

title: Hibbett Sports 4.3

Conclusion: The answer is flawed and contains extra and made-up information. Cannot be used for parsing that requires multiple classifications from the getgo.

GPT-2 XL

Context: GPT-2 Large has 1.5B trainable parameters.

Answer:

title: Hibbett Sports 4.0

Conclusion: The answer is flawed and contains extra and made-up information. Cannot be used for parsing that requires multiple classifications from the getgo.

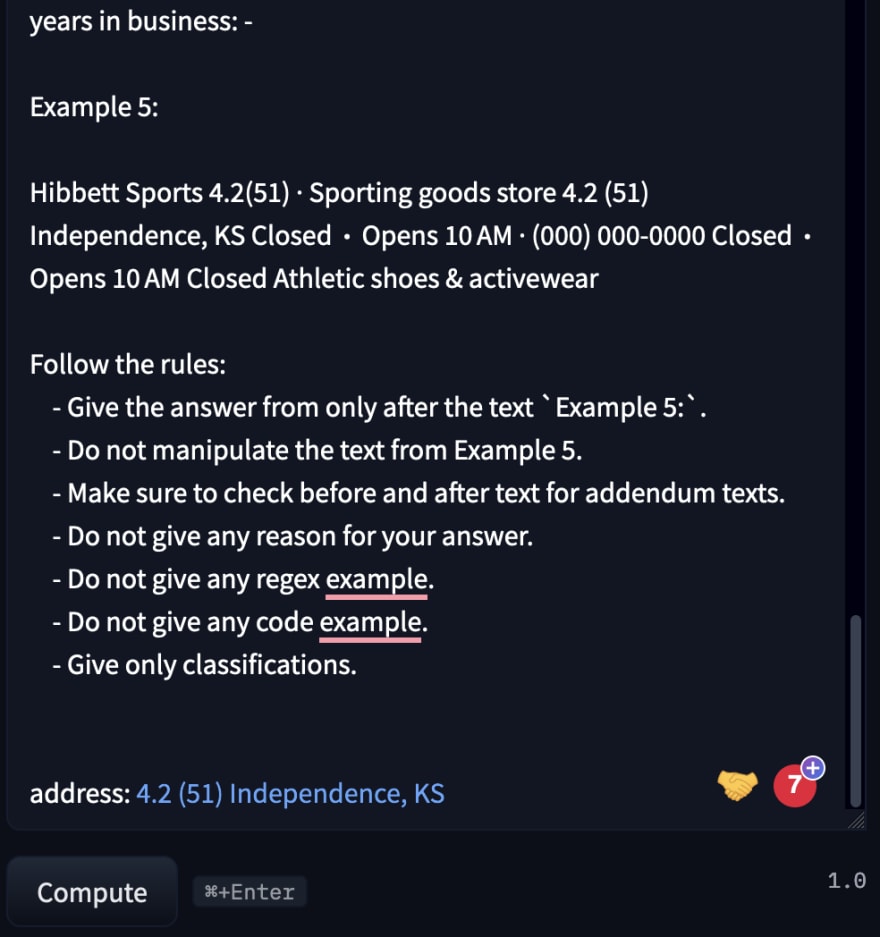

GPT-J 6B

Context: GPT-J 6B has 6B trainable parameters.

Answer:

title: Hibbett Sports

GPT-J 6B is not producing anything other than the requested classification. So I had to ask multiple classes to be sure:

Second Answer:

address: 4.2 (51) Independence, KS

Conclusion: Although the model provided the correct answer for the title, it wasn't able to classify the address. Not suitable for multiple classifications out of the box.

I must confess I was surprised by the speed at which the answer was returned. With proper finetuning, this model has the potential to be used for technical purposes for a relatively cheap price, and fast response times.

BLOOM

Context: Bloom has 176B trainable parameters. This model is a behemoth when it comes to open-source models. It has support for 46 languages and 13 programming languages. A switch between a greedy mode for technical purposes, and a sampling mode for creative purposes.

Answer:

title: Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: -

type: Sporting goods store

address: Independence, KS

hours information: Closed ⋅ Opens 10 AM

description: Athletic shoes & activewear

service options: -

phone number: (000) 000-0000

years in business: Closed ⋅ Opens 10 AM

years in business: -

Conclusion: All the answers are correct. This is definitely suitable for technical tasks of engineering.

I must add my further observations about BLOOM. The downside is that it is big in size. As of now, around 330 GB. You also need at least 16 GB of RAM and some real free time in your hand to test this behemoth.

They were kind enough to set up a managed endpoint for testing purposes. But testing for specific purposes of technical nature will most likely take a hard manual prompt creation and a big decision to set it up.

Another thing I have noticed about BLOOM is that if it creates noisy data at the end, you can always use multiple prompts. Somehow this prompt lead the model to show always the right answer on the first line of generated text.

Also, I have tested cross-language prompts to see how it behaves. Sometimes it gives good results for English examples but Arabic classification. But it would be wise to use the same language prompts for the same language classifications just to be on the safe side.

I have to admit though, I am still surprised this is an open-source model. It is truly a behemoth at the standards of the industry.

Paid Models

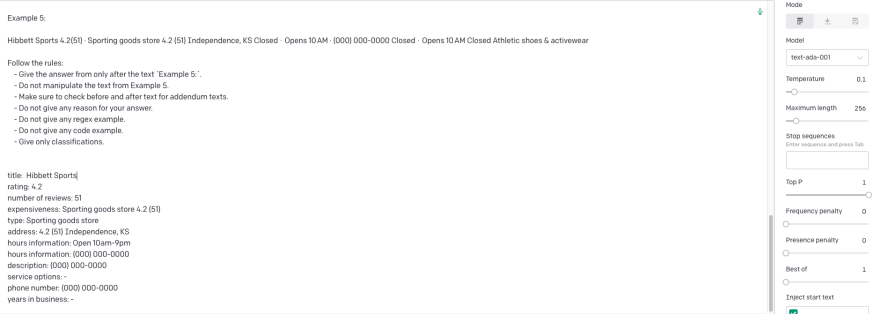

GPT-3 Text-Ada-001

Context: This model is the lowest fee text model of OpenAI, and costs $0.0004 for 1000 tokens. Tokens are recognized parts of strings and letters by models. This model accepts up to 2048 tokens. The prompt we provided is 784 tokens (cost is affected by response token size as well).

Answer:

title: Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: Sporting goods store 4.2 (51)

type: Sporting goods store

address: 4.2 (51) Independence, KS

hours information: Open 10am-9pm

hours information: (000) 000-0000

description: (000) 000-0000

service options: -

phone number: (000) 000-0000

years in business: -

Conclusion: This model, even though the generation temperature is quite low (good for consistent classification and minimum made-up text), has failed to produce a healthy classification. I am not sure if finetuning would put it in shape.

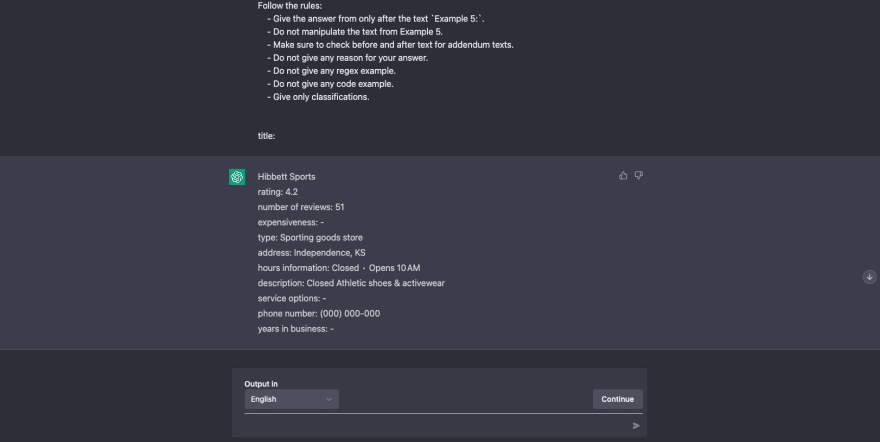

GPT-3 Text-Babbage-001

Context: This model is a low-fee text model that is better than Text-Ada-001 of OpenAI, and costs $0.0.0005 for 1000 tokens. This model also accepts up to 2048 tokens.

Answer:

title: Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: $$

type: Sporting goods store

address: Independence, KS

hours information: Open 10 AM

description: (000) 000-0000 Closed ⋅ Opens 10 AM Closed

service options: -

phone number: -

years in business: -

Conclusion: This model missed many parts of the text that is crucial. However, a finetune of this model has the potential to be a good candidate for multiple classifications. However, finetuning costs $0.0006 for 1000 tokens, and using the finetuned version costs $0.0024. This is not really feasible considering the next paid model's base cost is lower than this.

GPT-3 Curie

Context: This model is a text model that is better than Text-Babbage-001 of OpenAI, and costs $0.0.0020 for 1000 tokens. This model also accepts up to 2048 tokens. This model carries the name of one of the most bada** scientists in history, the undisputed star of the Flying Universities.

Answer:

title: Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: -

type: Sporting goods store

address: Independence, KS

hours information: Closed ⋅ Opens 10 AM

description: Athletic shoes & activewear

service options: -

phone number: (000) 000-0000

years in business: -

Conclusion: All correct. Without a doubt, this is one of the good candidates for industrial scale. The downside is the token size. You have to carry the context and rules at each call, costing you more. The price for this model is $0.0020 per 100 tokens which is kind of limiting for big scales. Also, the concurrency at OpenAI is 3000 requests per minute for paying users. This is another limitation since the limit of tokens is also increasing the number of requests you must make concurrently.

I would suggest using this model for testing what you already have in place for it. Its precision could help improve your existing solution and provide feedback of its state.

ChatGPT

Context: I don't think this model even needs an introduction. Possibilities using this model seem quite endless. However, it is not open for commercial use now, and we don't really know what the pricing or concurrency limits will be for certain.

title:

Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: -

type: Sporting goods store

address: Independence, KS

hours information: Closed ⋅ Opens 10 AM

description: Closed Athletic shoes & activewear

service options: -

phone number: (000) 000-000

years in business: -

Conclusion: It can do basic classification tasks with ease. Moreover, being able to carry out the conversation brings the possibility of using the contextual prompt to be reused with ease. I would have only one concern for this model though, and that is persistence. It would be good to have a temperature option for ChatGPT as well. Since definite pricing and limitations weren't announced, I cannot say something certain about its industrial use.

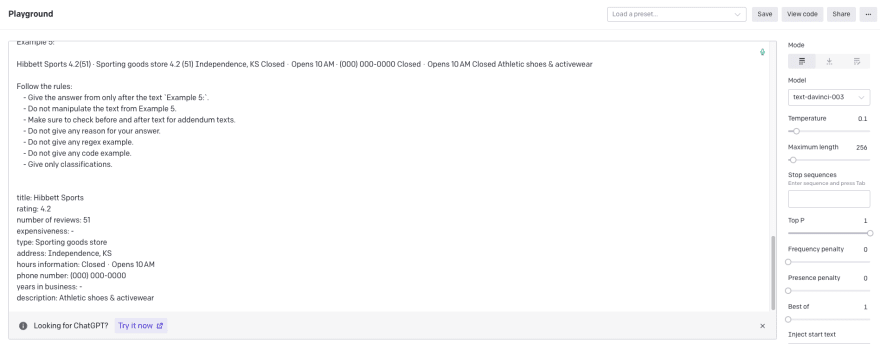

GPT-3 Text-Da-Vinci-003

Context: This is the best text model of OpenAI so far, and costs $0.0.0200 for 1000 tokens. Time will tell if naming it DaVinci was a good choice or if they should've waited for a superior model for that name. But for sure this is the most advanced text model out there. Its precision in understanding the prompt is absolutely beating the 4000 token limit. For many technical tasks, you don't even need finetuning.

Answer:

title: Hibbett Sports

rating: 4.2

number of reviews: 51

expensiveness: -

type: Sporting goods store

address: Independence, KS

hours information: Closed ⋅ Opens 10 AM

phone number: (000) 000-0000

years in business: -

description: Athletic shoes & activewear

Conclusion: This is without a doubt a killer product. However, when you need to correlate between different parts of the HTML to gather deeper info about the feature you want to extract, the 4000 token limit is playing a major blockage.

Another blockage is the price. Google allows 20 local results per page. This means the estimated cost for one page of visible elements will be around $0.16 per page. Now, this is just the price for parsing the features. You may add a proxy and server maintenance to it. When you scale this number, an in-house solution using Text-Da-Vinci-003 only becomes viable when you have a derivative product using the Google Local Results feature that is more valuable than the raw data. This is only possible if the solution is cutting down the maintenance fee, and the error rates are significantly less to a point where you don't have to hire new staff to take care of it.

But I have to point out that this is a very suitable model for testing existing models at this time. Its semantic power in understanding the rules and order in the generation is very suitable for testing the efficiency of technical tasks at this point.

SerpApi Google Local Results Parser

Context: SerpApi is a real-time API to access Google search results. It handles proxies, solves captchas, and parses all rich structured data for you. So any kind of preprocessing and parsing you have to do with an LLM model is actually handled by SerpApi. Apart from that, SerpApi can easily parse data invisible to the eye as well like Place ID or GPS coordinates.

Answer:

{

"position": 1,

"title": "Hibbett Sports",

"place_id": "5028461961943251508",

"place_id_search": "https://serpapi.com/search.json?device=desktop&engine=google&gl=us&google_domain=google.com&hl=en&ludocid=5028461961943251508&q=Sports+Shop&tbm=lcl",

"lsig": "AB86z5USlyxNPnHhPC2QbT2VYbMc",

"rating": 4.2,

"reviews_original": "(51)",

"reviews": 51,

"type": "Sporting goods store",

"address": "Independence, KS",

"phone": "(000) 000-0000",

"hours": "Open ⋅ Closes 8 PM",

"description": "Athletic shoes & activewear",

"thumbnail": "https://serpapi.com/searches/63d036577bea270d77944e2d/images/581525d33cb22a3571e8ea251d12f5f818ab21466a5be2cfc24aa6e2784524e7.jpeg",

"gps_coordinates": {

"latitude": 37.2243156,

"longitude": -95.7405395

}

},

Conclusion: SerpApi's biggest power comes from its dedication to its mission. Because it has a team dedicated to fixing errors and adding new features, you are getting the most out of what you expect. Moreover, not worrying about proxies, server maintenance, and many other things is another bonus. It beats the pricing point by a good margin, and it is fast. You can see the Pricing Page to make a comparison in pricing, and API Status Page to get more information on speed. It also guarantees up to 20% of your plan searches per hour as a throughput. You can also Register to Claim Free Credits.

All of this information is enough to see that some technical tasks are still yet not feasible to achieve by LLMs.

Final Thoughts

I can see that the companies that have a tradition of implementing AI have a better advantage in the future. the capabilities of such tools as shown above are truly impressive. Considering these are just baby steps of AI, one has to be ready for the point at which AI can beat traditional methods. This is only possible via an existing culture around AI.

If we go from an example, in my previous blog posts, I have parsed the inline version of Google Local Results with a CNN structured Hybrid AI and compared the results. That was a definite win in the long run in terms of AI. But considering there is a dedicated page for local results, and it is completely parsable via traditional methods, it nullifies the advantages of AI until it is faster and cheaper. I know that in the future, prices will be more accessible for the industry, and the speed will be much more efficient. It will make the entire process more interesting and efficient.

I am grateful to the reader for their time and attention. The contents of this writing only contain a portion of my observations. In my opinion, some fields, especially fields requiring technical precision, are not viable for LLMs at this point.

Top comments (0)