AWS: EKS Pod Identities — a replacement for IRSA? Simplifying IAM access management

Another very interesting new feature announced at the latest re:Invent is EKS Pod Identities: a new way to manage Pod access to AWS resources.

The current state: IAM Roles for Service Accounts

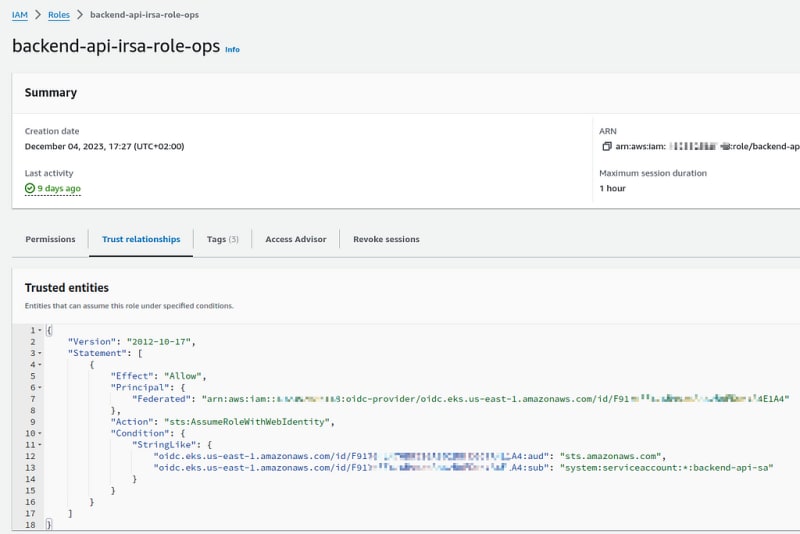

Until now, we used the IAM Roles for Service Accounts (IRSA) model. To give a Pod access to, for example, S3, we had to create an IAM Role with the appropriate IAM Policy, configure its Trust Policy so that the role could be assumed (via AssumeRoleWithWebIdentity) only from the appropriate cluster, and then create a Kubernetes ServiceAccount with the ARN of this role in its annotations.
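To show why the Trust Policy was the fragile part, here is a minimal Python sketch that builds an IRSA trust policy as a dict. The cluster's OIDC issuer appears three times (in the Federated principal ARN and in both condition keys), so a typo in any of them breaks the role. The account ID and issuer ID are hypothetical placeholders; the namespace and ServiceAccount names are the ones used later in this post.

```python
import json


def irsa_trust_policy(account_id: str, oidc_issuer: str, namespace: str, service_account: str) -> dict:
    """Build an IRSA trust policy for one ServiceAccount in one cluster.

    oidc_issuer is the cluster's OIDC issuer URL without the https:// prefix,
    e.g. "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLE" (hypothetical value).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # The principal is the cluster-specific OIDC provider registered in IAM
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_issuer}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                # The same issuer string must be repeated in both condition keys
                "Condition": {
                    "StringEquals": {
                        f"{oidc_issuer}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{oidc_issuer}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


if __name__ == "__main__":
    policy = irsa_trust_policy(
        account_id="111122223333",  # hypothetical account ID
        oidc_issuer="oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",  # hypothetical
        namespace="ops-iam-test-ns",
        service_account="ops-iam-test-sa",
    )
    print(json.dumps(policy, indent=2))
```

Every value in this policy is specific to one account, one cluster and one ServiceAccount, which is exactly what made it easy to get wrong.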

This scheme had a few error-prone steps:

- the most common problem, which I have run into many times: errors in the Trust Policy, where the cluster's OIDC provider had to be specified

- errors in the ServiceAccount itself, where it was easy to make a mistake in the role ARN
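The second of these failure points can at least be caught before deploying. Below is a minimal sketch, assuming the ServiceAccount manifest has already been parsed into a Python dict: it checks that the IRSA annotation `eks.amazonaws.com/role-arn` holds a well-formed IAM role ARN. The regular expression is a simplified assumption (standard `aws` partition only, optional role path) and cannot tell whether the role actually exists.

```python
import re

# Annotation key that IRSA reads from a Kubernetes ServiceAccount
IRSA_ANNOTATION = "eks.amazonaws.com/role-arn"

# Simplified pattern: arn:aws:iam::<12-digit account>:role/<optional path/><role name>
ROLE_ARN_RE = re.compile(r"^arn:aws:iam::\d{12}:role/(?:[\w+=,.@-]+/)*[\w+=,.@-]{1,64}$")


def check_irsa_annotation(service_account: dict) -> list[str]:
    """Return a list of problems found in an IRSA ServiceAccount manifest (empty if none)."""
    problems = []
    metadata = service_account.get("metadata") or {}
    annotations = metadata.get("annotations") or {}
    arn = annotations.get(IRSA_ANNOTATION)
    if arn is None:
        problems.append(f"missing annotation {IRSA_ANNOTATION}")
    elif not ROLE_ARN_RE.match(arn):
        problems.append(f"malformed role ARN: {arn!r}")
    return problems
```

For example, an ARN with a single colon after `iam` (`arn:aws:iam:111122223333:role/...`) is reported as malformed, while `arn:aws:iam::111122223333:role/s3-reader` passes.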

See AWS: EKS, OpenID Connect, and ServiceAccounts.

The f(ea)uture state: EKS Pod Identities

Now, EKS Pod Identities lets us create an IAM Role once, without tying it to a specific cluster, and attach this role to Pods (again, via a ServiceAccount) directly with the AWS CLI, the AWS Console, or the AWS API (Terraform, CDK, etc.).

What it looks like:

- add a new controller to EKS: the Amazon EKS Pod Identity Agent add-on

- create an IAM Role whose Trust Policy now uses Principal: pods.eks.amazonaws.com

- connect this role directly to the desired ServiceAccount from the AWS CLI, the AWS Console, or the AWS API

Let’s try it!

Creating an IAM Role

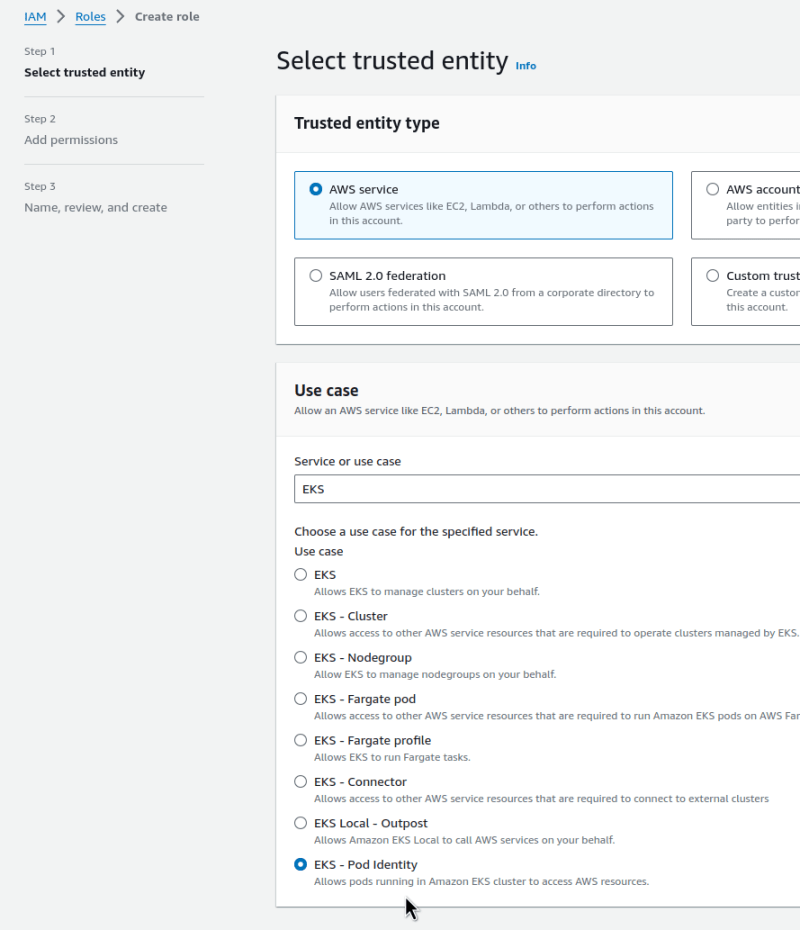

Go to IAM and create a role. In Trusted entity type, select EKS and then the new use case, EKS - Pod Identity:

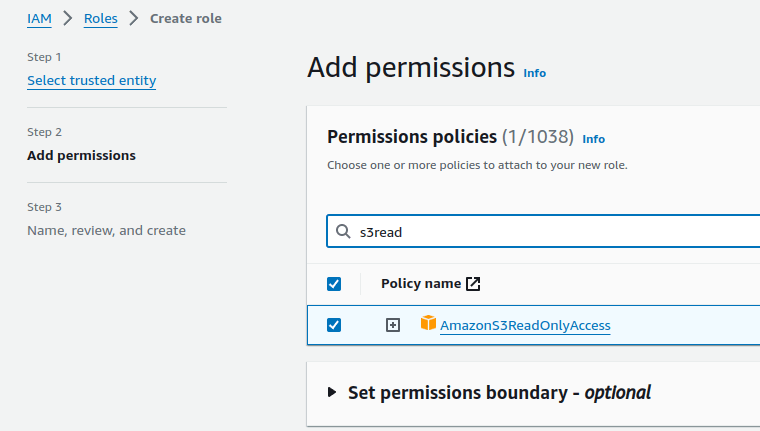

In Permissions, let’s attach the existing AWS managed policy AmazonS3ReadOnlyAccess:

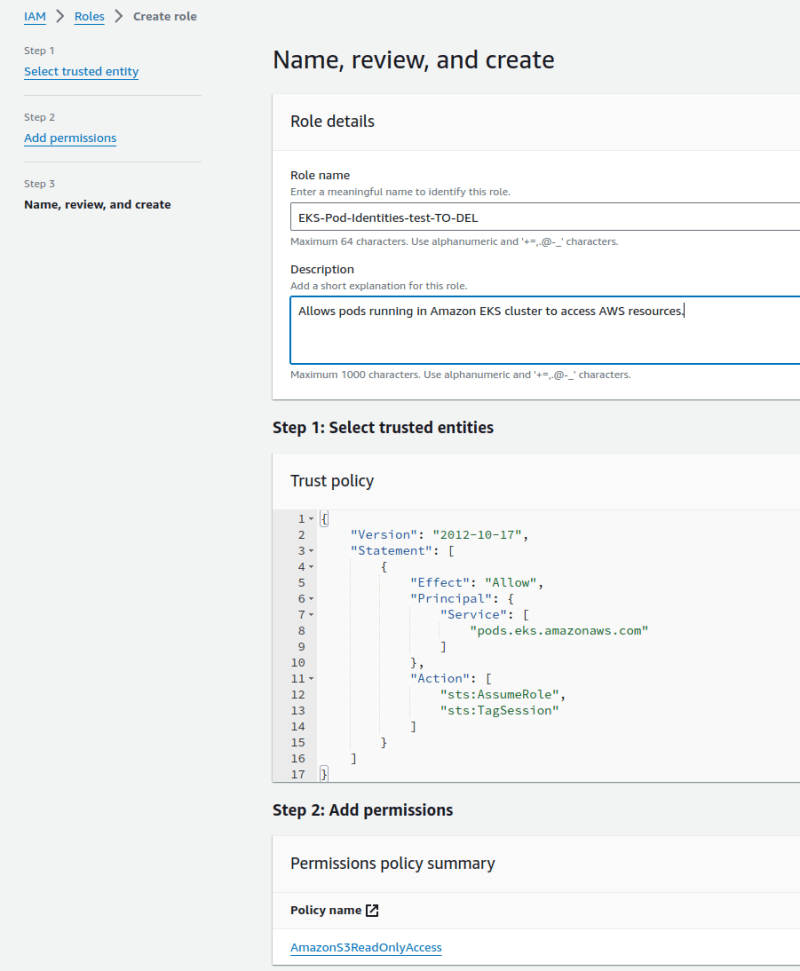

Set the name of the role, and here we can see the new Trust Policy:

And let’s compare it with a Trust Policy for the IRSA role:
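For a text version of the comparison, here is a Python sketch of a trust policy equivalent to what the console generates for the EKS - Pod Identity use case (the console may also add a `Sid`). The helper `cluster_specific_values` is hypothetical: it only scans the policy for markers that would tie it to one cluster, such as an OIDC provider or a `system:serviceaccount:` subject.

```python
import json

# Trust policy for an EKS Pod Identity role: the only principal is the EKS Pods service.
# sts:TagSession is included because EKS attaches session tags when it assumes the role.
POD_IDENTITY_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "pods.eks.amazonaws.com"},
            "Action": ["sts:AssumeRole", "sts:TagSession"],
        }
    ],
}


def cluster_specific_values(policy: dict) -> list[str]:
    """Hypothetical helper: return which cluster-specific markers appear anywhere in the policy."""
    text = json.dumps(policy)
    markers = ["oidc-provider/", "oidc.eks.", "system:serviceaccount:"]
    return [m for m in markers if m in text]


if __name__ == "__main__":
    print(json.dumps(POD_IDENTITY_TRUST_POLICY, indent=2))
    print(cluster_specific_values(POD_IDENTITY_TRUST_POLICY))  # prints []
```

Nothing in it names an account, a cluster, a namespace or a ServiceAccount; that binding now lives in the Pod Identity association instead.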

It’s much simpler, which means there’s less chance for errors, and it’s generally easier to manage. In addition, we are no longer tied to the cluster's OIDC provider.

By the way, with EKS Pod Identities we can also use role session tags.
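One use of these session tags is attribute-based access control: a single permissions policy can scope access per namespace. A minimal Python sketch that builds such a policy, assuming the `kubernetes-namespace` session tag that EKS Pod Identity attaches (verify the exact tag names in the AWS documentation); the bucket name is hypothetical.

```python
import json


def namespace_scoped_s3_policy(bucket: str) -> dict:
    """Allow reading only objects under a prefix named after the Pod's Kubernetes namespace.

    ${aws:PrincipalTag/kubernetes-namespace} is an IAM policy variable, resolved at request
    time from the session tag (assumed here to be set by EKS Pod Identity).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:GetObject",
                # Doubled braces in the f-string produce a literal ${...} in the output
                "Resource": f"arn:aws:s3:::{bucket}/${{aws:PrincipalTag/kubernetes-namespace}}/*",
            }
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a hypothetical bucket name
    print(json.dumps(namespace_scoped_s3_policy("example-bucket"), indent=2))
```

With a policy like this, the same role could be associated with ServiceAccounts in several namespaces, and a Pod in ops-iam-test-ns would only be able to read objects under example-bucket/ops-iam-test-ns/.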

Okay, let’s move on.

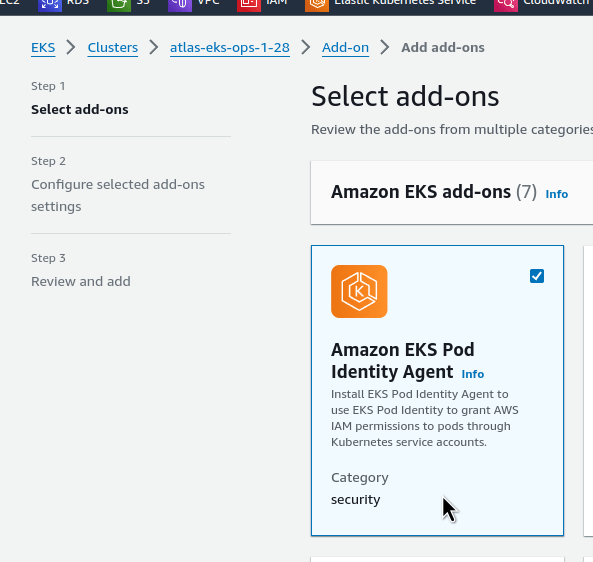

Amazon EKS Pod Identity Agent add-on

Go to the cluster and install the new Amazon EKS Pod Identity Agent add-on:

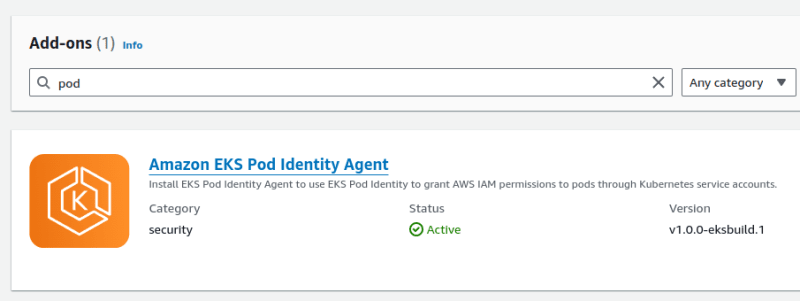

Wait a minute and it’s ready:

The Pods of this controller:

    $ kubectl -n kube-system get pod | grep pod
    eks-pod-identity-agent-d7448   1/1   Running   0   91s
    eks-pod-identity-agent-m46px   1/1   Running   0   91s
    eks-pod-identity-agent-nd2xn   1/1   Running   0   91s

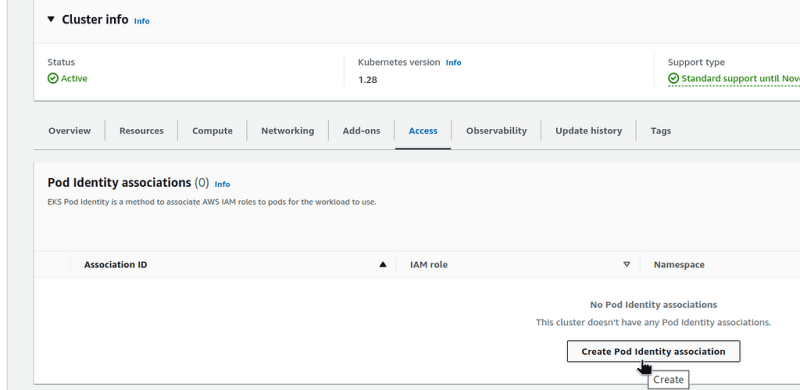

Connecting the IAM Role to a ServiceAccount

Go to the Access tab and click Create Pod Identity Association:

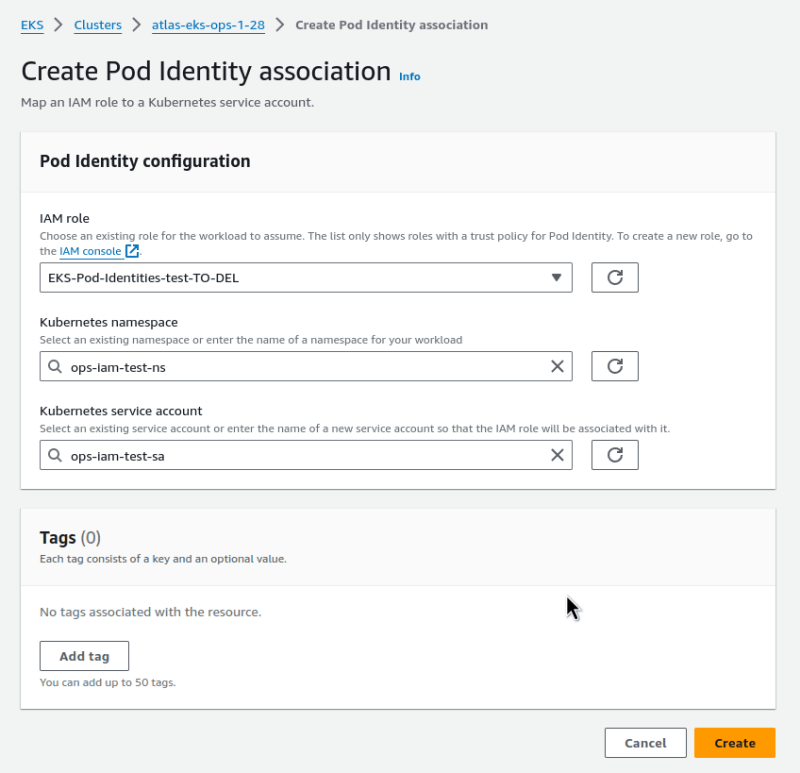

Select the Role you created above.

Next, set the namespace: either choose one from the list of existing namespaces or enter a new one.

The same goes for the ServiceAccount name: you can choose an existing SA or enter a new name:
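The same association can be created through the AWS API, which is what a deployment pipeline would do instead of using the console. Below is a minimal Python sketch using boto3's EKS client method `create_pod_identity_association` (the AWS CLI equivalent is `aws eks create-pod-identity-association`). The helper names, cluster name and account ID are hypothetical; boto3 is imported lazily so the request-building part runs without it.

```python
def build_association_request(cluster: str, namespace: str, service_account: str, role_arn: str) -> dict:
    """Keyword arguments for the EKS CreatePodIdentityAssociation API call."""
    return {
        "clusterName": cluster,
        "namespace": namespace,
        "serviceAccount": service_account,
        "roleArn": role_arn,
    }


def create_association(request: dict) -> str:
    """Create the association and return its ID (requires boto3 and AWS credentials)."""
    import boto3  # imported here so the rest of the module works without boto3

    eks = boto3.client("eks")
    response = eks.create_pod_identity_association(**request)
    return response["association"]["associationId"]


if __name__ == "__main__":
    req = build_association_request(
        cluster="my-cluster",  # hypothetical cluster name
        namespace="ops-iam-test-ns",
        service_account="ops-iam-test-sa",
        role_arn="arn:aws:iam::111122223333:role/EKS-Pod-Identities-test-TO-DEL",  # hypothetical account ID
    )
    print(req)
    # create_association(req)  # uncomment to call the real API
```

As in the console, the ServiceAccount does not have to exist yet: the association only records the namespace and name.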

Creating a Pod and a ServiceAccount

Create a manifest for the new resources:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: ops-iam-test-ns
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: ops-iam-test-sa
      namespace: ops-iam-test-ns
    ---
    apiVersion: v1
    kind: Pod
    metadata:
      name: ops-iam-test-pod
      namespace: ops-iam-test-ns
    spec:
      containers:
        - name: aws-cli
          image: amazon/aws-cli:latest
          command: ['sleep', '36000']
      restartPolicy: Never
      serviceAccountName: ops-iam-test-sa

Deploy them:

    $ kubectl apply -f iam-sa.yaml
    namespace/ops-iam-test-ns created
    serviceaccount/ops-iam-test-sa created
    pod/ops-iam-test-pod created

And check access to S3:

    $ kubectl -n ops-iam-test-ns exec -ti ops-iam-test-pod -- bash
    bash-4.2# aws s3 ls
    2023-02-01 11:29:34 amplify-staging-112927-deployment
    2023-02-02 15:40:56 amplify-dev-174045-deployment
    ...

However, if we try an operation that the attached policy does not allow, for example, an EKS call, we get an AccessDeniedException (HTTP 403):

    bash-4.2# aws eks list-clusters
    An error occurred (AccessDeniedException) when calling the ListClusters operation: User: arn:aws:sts::492***148:assumed-role/EKS-Pod-Identities-test-TO-DEL/eks-atlas-eks--ops-iam-te-cc662c4d-6c87-44b0-99ab-58c1dd6aa60f is not authorized to perform: eks:ListClusters on resource: arn:aws:eks:us-east-1:492***148:cluster/*
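The error also shows which identity the Pod is using: an STS assumed-role session of the role created above. When reading such errors, a small Python sketch that splits an assumed-role ARN into account, role name and session name can help. The function name is hypothetical, and the account ID and session name in the usage example are made up, since the real ones are masked above.

```python
from typing import NamedTuple


class AssumedRole(NamedTuple):
    account: str
    role_name: str
    session_name: str


def parse_assumed_role_arn(arn: str) -> AssumedRole:
    """Split arn:aws:sts::<account>:assumed-role/<role-name>/<session-name> into its parts."""
    prefix, _, resource = arn.partition(":assumed-role/")
    parts = prefix.split(":")  # expected: ["arn", "aws", "sts", "", "<account>"]
    if not resource or len(parts) != 5 or parts[2] != "sts":
        raise ValueError(f"not an assumed-role ARN: {arn}")
    role_name, _, session_name = resource.partition("/")
    return AssumedRole(parts[4], role_name, session_name)


if __name__ == "__main__":
    print(parse_assumed_role_arn(
        "arn:aws:sts::111122223333:assumed-role/EKS-Pod-Identities-test-TO-DEL/eks-session-1"
    ))
```

The role name confirms that the Pod really received the role from the Pod Identity association, so the 403 comes from the role's permissions policy, not from a missing association.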

Problems?

At the moment, I see one potential issue: not a problem as such, but something worth keeping in mind. Previously, we configured access at the service level; with EKS Pod Identities, it is done at the cluster management level.

For example, I have a service, the Backend API. It has its own repository with a terraform directory in which the necessary IAM roles are created.

Next to it is a helm directory with a ServiceAccount manifest, into which the ARN of this IAM role is passed through variables.

And that's it: I (or rather, the CI/CD pipeline that performs the deployment) need access only to IAM, plus access to the EKS cluster itself to create the Ingress, Deployment, and ServiceAccount.

Now, however, we'll also have to think about granting access to EKS at the AWS level, because an additional AWS API call is needed to create the Pod Identity Association.

By the way, the Terraform AWS provider has a new resource for this: aws_eks_pod_identity_association.
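In practice, this means the deployment role needs an IAM policy for the EKS API in addition to its Kubernetes permissions. Below is a minimal Python sketch that builds such a policy, assuming the pipeline must be allowed `eks:CreatePodIdentityAssociation` on the cluster and `iam:PassRole` on the role it associates. Both the exact set of required actions and the resource scoping are assumptions to verify against the EKS documentation; the function name and ARNs are hypothetical.

```python
import json


def pipeline_policy(cluster_arn: str, workload_role_arn: str) -> dict:
    """Hypothetical minimal policy letting a CI/CD role create Pod Identity associations.

    Assumption: creating an association needs eks:CreatePodIdentityAssociation on the
    cluster and iam:PassRole on the role being associated.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "CreatePodIdentityAssociation",
                "Effect": "Allow",
                "Action": "eks:CreatePodIdentityAssociation",
                "Resource": cluster_arn,
            },
            {
                "Sid": "PassWorkloadRole",
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": workload_role_arn,
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(pipeline_policy(
        "arn:aws:eks:us-east-1:111122223333:cluster/my-cluster",  # hypothetical cluster ARN
        "arn:aws:iam::111122223333:role/backend-api",  # hypothetical workload role ARN
    ), indent=2))
```

Scoping `iam:PassRole` to the specific workload role keeps the pipeline from attaching arbitrary roles to Pods.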

Still, it looks really cool, and it can make managing EKS and IAM a lot easier.

EKS Pod Identity restrictions

It is worth reading the documentation: the EKS Pod Identity restrictions section says that EKS Pod Identities are available only on Linux EC2 worker nodes:

EKS Pod Identities are available on the following:

- Amazon EKS cluster versions listed in the previous topic EKS Pod Identity cluster versions.

- Worker nodes in the cluster that are Linux Amazon EC2 instances.

Originally published at RTFM: Linux, DevOps, and system administration.
