In the GitHub Actions: deploying Dev/Prod environments with Terraform blog I’ve already described how we can implement CI/CD for Terraform with GitHub Actions, but there is one significant drawback to that solution: there is no way to review changes before applying them with terraform apply.

GitHub Actions has the ability to use Reviewing deployments with Required reviewers, but this feature is only available on GitHub Enterprise.

So how can we solve this problem?

Of course, we can use solutions like Atlantis or Gaia, but when you have only a few Terraform repositories on a project, such solutions are overkill.

Alternatively, we can continue using GitHub Actions from hashicorp/setup-terraform, but do a terraform plan when creating a Pull Request, and put its output in the PR comments. Then, before confirming Merge, we can see what changes will be applied and execute terraform apply after the feature/fix branch is merged into master.

However, this is not an ideal solution either: time may pass between creating the Pull Request (when terraform plan runs) and merging it (when terraform apply runs). If the infrastructure changes in the meantime, the Plan we reviewed is no longer relevant. So, we have to deal with this as well.

So, what will we be doing today?

- create Terraform test code
- create two GitHub Actions Workflows:
  - test on Pull Request created
  - deploy on Pull Request merged
- and look at options for how to solve the problem with the outdated Terraform Plan

Preparation

First, we will create a Terraform project, then prepare a GitHub repository.

Terraform — test resources

In a test repository, create a terraform directory, and add a terraform.tf file in there:

terraform {
  backend "s3" {
    bucket  = "tf-state-backend-atlas-test"
    key     = "atlas-test.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}

terraform {
  required_version = "~> 1.5"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.14"
    }
  }
}

provider "aws" {
  region = "us-east-1"

  default_tags {
    tags = {
      component   = "devops"
      created-by  = "terraform"
      environment = "test"
      project     = "atlas-test"
    }
  }
}

For the test, we’ll create an S3 bucket — add main.tf:

resource "aws_s3_bucket" "example" {
  bucket = "atlas-test-bucket-ololo"

  tags = {
    Name = "atlas-test-bucket-ololo"
  }
}

Error: creating S3 Bucket (atlas-test-bucket): operation error S3: CreateBucket — AuthorizationHeaderMalformed

Jumping ahead a bit, and a bit off-topic: when I was doing terraform apply, I caught an error:

Error: creating S3 Bucket (atlas-test-bucket): operation error S3: CreateBucket, https response error StatusCode: 400, api error AuthorizationHeaderMalformed: The authorization header is malformed; the region ‘us-east-1’ is wrong; expecting ‘us-west-2’

The text is a bit misleading: why is it complaining about regions? In the provider "aws" we have explicitly set us-east-1, and the IAM Role is also fine. Where does us-west-2 come from?

If we enable debug logging with export TF_LOG=debug, we see that the response really does report us-west-2:

http.response.header.server=AmazonS3 http.response.header.x_amz_bucket_region=us-west-2

The cause is that, as we know, bucket names must be globally unique across all AWS accounts and Regions, and the name atlas-test-bucket was already taken. If you add the suffix “ololo”, everything works as it should:

http.response.header.server=AmazonS3 http.response.header.x_amz_bucket_region=us-east-1

It seems that a bucket with the same name already existed in us-west-2 in someone else's account, so S3 reported that bucket's region instead of a clear “name already taken” error.

Okay, let’s move on.

Run cd terraform/ && terraform fmt && terraform init && terraform plan:

Everything is ready here, we can move on to the GitHub Actions.

GitHub Actions — repository environment variables

To launch Terraform in GitHub Actions, we need one variable in which we will pass an AWS IAM Role, which will allow GitHub to perform actions in our AWS account — see Configuring OpenID Connect in Amazon Web Services.

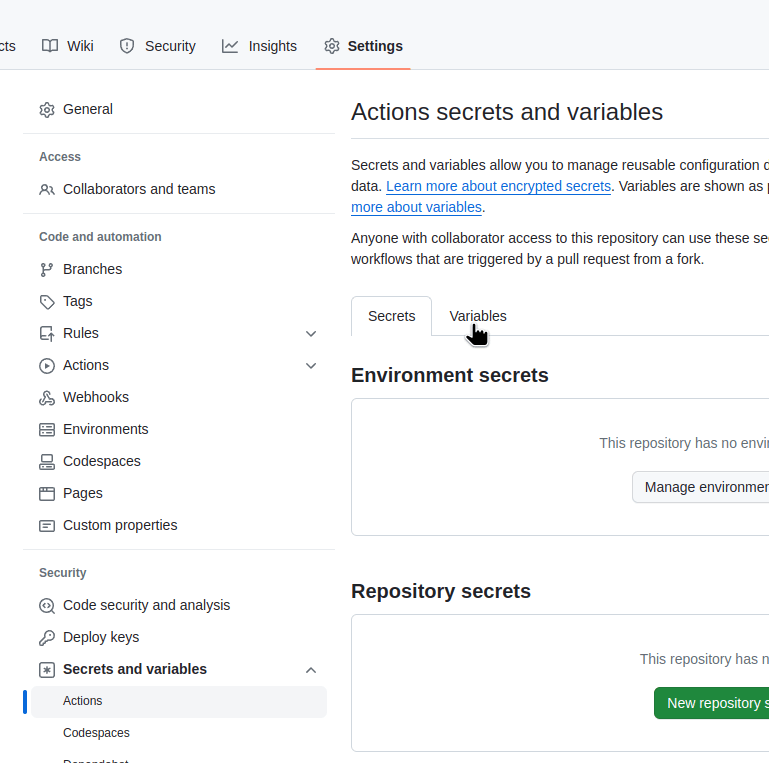

Go to Settings > Actions secrets and variables, go to Variables:

Add a new variable TF_AWS_ROLE to the Repository variables; it will be used in the aws-actions/configure-aws-credentials Action:

And basically, that’s all we need, now we can start creating our GitHub Actions Workflow.

GitHub Actions — creating Workflows

In order for Actions to run our Terraform code, we need to:

- check out the code on the GitHub Runner which will run the flow: use actions/checkout

- perform authentication in AWS: use aws-actions/configure-aws-credentials; it will execute AssumeRole with the role from the TF_AWS_ROLE variable and will create environment variables with AWS keys and AWS_SESSION_TOKEN

- run terraform init and others: use hashicorp/setup-terraform, which will add Terraform itself

We can use the same approach with separate Actions for each step, as described in Creating a test-on-push Workflow, but for the sake of clarity and simplicity, I'll describe everything directly in the workflow file.

Workflow with Terraform Validate and Terraform Plan

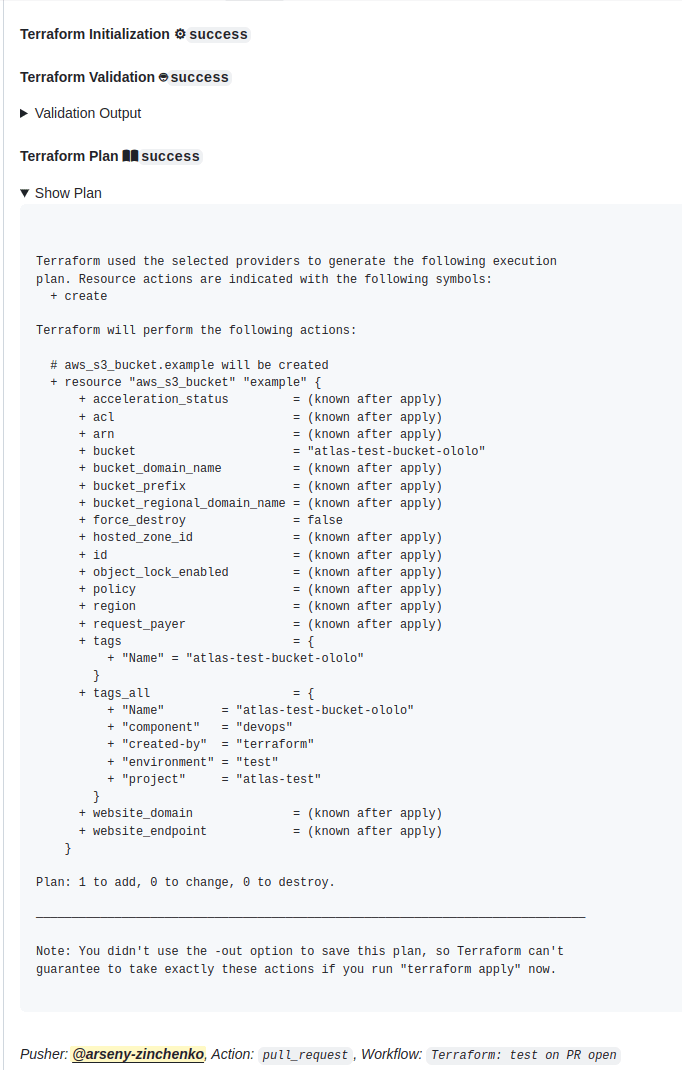

First, let’s create a Workflow that will be run when a PR is created and will perform checks with terraform validate, tflint, etc., and then will execute terraform plan, the result of which will be added to the comments of the Pull Request that triggered this workflow.

In the root of the repository, create a directory .github/workflows, and terraform-test-on-pr.yml file in it:

name: "Terraform: test on PR open"

# set other jobs with the same 'group' in a queue
concurrency:
  group: deploy-test
  cancel-in-progress: false

on:
  # can be run manually
  workflow_dispatch:
  # run on pull-requests
  pull_request:
    types: [opened, synchronize, reopened, ready_for_review]
    # only if changes were in the 'terraform' directory
    paths:
      - terraform/**

permissions:
  # create OpenID Connect (OIDC) ID token
  id-token: write
  # allow read repository's content by steps
  contents: read
  # allow adding comments in a Pull Request
  pull-requests: write

jobs:
  terraform:
    name: "Test: Terraform"
    runs-on: ubuntu-latest
    # to avoid using GitHub Runners time
    timeout-minutes: 10
    defaults:
      run:
        # run all steps in the 'terraform' directory
        working-directory: ./terraform
    steps:
      # get repository's content
      - name: "Setup: checkout"
        uses: actions/checkout@v4

      # setup 'env.AWS_*' variables to run Terraform
      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: ${{ vars.TF_AWS_ROLE }}
          role-session-name: github-actions-terraform
          role-duration-seconds: 900
          aws-region: us-east-1

      # terraform formatting check
      - name: "Test: Terraform fmt"
        id: fmt
        run: terraform fmt -check -no-color
        # do not throw error, just warn
        continue-on-error: true

      # use TFLint to check the code
      - name: "Setup: TFLint"
        uses: terraform-linters/setup-tflint@v3
        with:
          tflint_version: v0.48.0

      - name: "Test: Terraform linter"
        run: tflint -f compact
        shell: bash

      # use official Action
      - name: "Setup: Terraform"
        uses: hashicorp/setup-terraform@v3

      # get modules, configure backend
      - name: "Test: Terraform Init"
        id: init
        run: terraform init -no-color

      # verify whether a configuration is syntactically valid
      - name: "Test: Terraform Validate"
        id: validate
        run: terraform validate -no-color

      # create a Plan to see what will be changed
      - name: "Test: Terraform Plan"
        id: plan
        run: terraform plan -no-color

In the concurrency block, we prohibit the simultaneous launch of several workflows, see Using concurrency. Also, we will need this later when we do a Workflow with terraform apply.

For Terraform commands, we set the -no-color parameter so that later in the comments to the Pull Request there will be no extra characters:

Add .gitignore:

**/.terraform/*

See the full example in Terraform.gitignore.

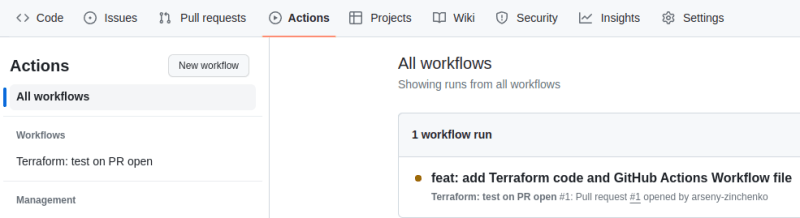

Commit all the changes and push them to the repository. If the changes were made in a separate branch, merge them to master so that GitHub Actions “sees” the new Workflow file, and we have a running Workflow:

Adding Terraform Plan to comments to a Pull request

Next, let’s use actions/github-script, which can add comments to our PR via the GitHub API.

And for the comment, we will use outputs from our steps.plan.

Add another step:

...
      # generate comment to the Pull Request using 'steps.plan.outputs'
      - name: "Misc: Post Terraform summary to PR"
        uses: actions/github-script@v6
        if: github.event_name == 'pull_request'
        env:
          PLAN: "${{ steps.plan.outputs.stdout }}"
        with:
          github-token: ${{ secrets.GITHUB_TOKEN }}
          script: |
            const output = `#### Terraform Format and Style 🖌\`${{ steps.fmt.outcome }}\`
            #### Terraform Initialization ⚙️\`${{ steps.init.outcome }}\`
            #### Terraform Validation 🤖\`${{ steps.validate.outcome }}\`
            <details><summary>Validation Output</summary>

            \`\`\`\n
            ${{ steps.validate.outputs.stdout }}
            \`\`\`

            </details>

            #### Terraform Plan 📖\`${{ steps.plan.outcome }}\`

            <details><summary>Show Plan</summary>

            \`\`\`\n
            ${process.env.PLAN}
            \`\`\`

            </details>

            *Pusher: @${{ github.actor }}, Action: \`${{ github.event_name }}\`, Workflow: \`${{ github.workflow }}\`*`;

            github.rest.issues.createComment({
              issue_number: context.issue.number,
              owner: context.repo.owner,
              repo: context.repo.repo,
              body: output
            })

Here:

- execute the step if github.event_name == 'pull_request'
- create an environment variable PLAN with the content from steps.plan.outputs.stdout
- run actions/github-script:
  - pass GITHUB_TOKEN for authentication (uses permissions.pull-requests: write)
  - pass the script argument, in which:
    - create the const output, where we generate the text for the comment
    - call the github.rest.issues.createComment function, which:
      - in the body passes the value of const output
      - passes values from the GitHub Actions context, which is generated from the webhook payload; see context.ts and Webhook payload object for pull_request

I was a little surprised that github.rest.issues.createComment is called: why issues, when we're dealing with a Pull Request?

But in the Sending requests to the GitHub API documentation we can see that “issues and PRs are treated as one concept by the Octokit client”.

And in the client’s own documentation:

You can use the REST API to create comments on issues and pull requests. Every pull request is an issue, but not every issue is a pull request.

If necessary, you can get all the data from the contexts as follows:

...
      - name: Dump Job Context
        env:
          JOB_CONTEXT: ${{toJson(github)}}
        run: echo "$JOB_CONTEXT"

      - name: View context attributes
        uses: actions/github-script@v7
        with:
          script: console.log(context)
...

Okay.

Commit the changes, push them, and wait for the job to execute (remember that our trigger is changes in the terraform directory):

And we have a new comment in Pull Request:

Another thing you can do here is update an existing comment instead of creating a new one in the Pull Request.

To do so, you can use the github.rest.issues.listComments function to find all the comments with comment.body.includes('Terraform Format and Style'), and if one is found, run github.rest.issues.updateComment, and if not, run github.rest.issues.createComment as above:

...
      - name: "Misc: Post Terraform summary to PR"
        uses: actions/github-script@v6
        if: github.event_name == 'pull_request'
        env:
          PLAN: "${{ steps.plan.outputs.stdout }}"
        with:
          github-token: ${{ secrets.GITHUB_TOKEN }}
          script: |
            // 1. Retrieve existing bot comments for the PR
            const { data: comments } = await github.rest.issues.listComments({
              owner: context.repo.owner,
              repo: context.repo.repo,
              issue_number: context.issue.number,
            })
            const botComment = comments.find(comment => {
              return comment.user.type === 'Bot' && comment.body.includes('Terraform Format and Style')
            })

            // 2. Prepare format of the comment
            const output = `#### Terraform Format and Style 🖌\`${{ steps.fmt.outcome }}\`
            #### Terraform Initialization ⚙️\`${{ steps.init.outcome }}\`
            #### Terraform Validation 🤖\`${{ steps.validate.outcome }}\`
            <details><summary>Validation Output</summary>

            \`\`\`\n
            ${{ steps.validate.outputs.stdout }}
            \`\`\`

            </details>

            #### Terraform Plan 📖\`${{ steps.plan.outcome }}\`

            <details><summary>Show Plan</summary>

            \`\`\`\n
            ${process.env.PLAN}
            \`\`\`

            </details>

            *Pusher: @${{ github.actor }}, Action: \`${{ github.event_name }}\`, Workflow: \`${{ github.workflow }}\`*`;

            // 3. If we have a comment, update it, otherwise create a new one
            if (botComment) {
              github.rest.issues.updateComment({
                owner: context.repo.owner,
                repo: context.repo.repo,
                comment_id: botComment.id,
                body: output
              })
            } else {
              github.rest.issues.createComment({
                issue_number: context.issue.number,
                owner: context.repo.owner,
                repo: context.repo.repo,
                body: output
              })
            }

Although it’s up to you, because it may be more informative to have a history of plans.

Okay.

Now we have a GitHub Actions Workflow that performs Terraform checks and posts the Plan result in the comment of the Pull Request.

The only other thing to keep in mind is that a Pull Request comment is limited to 65536 characters, and some Plans may not fit.
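One way to deal with that limit is to trim the plan text before building the comment. Below is a minimal sketch of such a helper that could be pasted into the script block. The function name fitPlanToComment and the 60000-character budget are my own assumptions (the budget just leaves some room for the headers of the comment), not something provided by GitHub or Terraform. It keeps the tail of the output, because Terraform prints the "Plan: X to add, ..." summary near the end.

```javascript
// Hypothetical helper: trims plan text so the comment stays under a size budget.
// MAX_PLAN_CHARS is an assumed safe budget, not an official GitHub constant.
const MAX_PLAN_CHARS = 60000;

function fitPlanToComment(plan, maxChars = MAX_PLAN_CHARS) {
  if (plan.length <= maxChars) {
    return plan; // short enough, nothing to do
  }
  const notice = '... plan truncated, see the workflow run logs for the full output ...\n';
  // How many characters of the original plan we can still keep after the notice.
  const keep = Math.max(0, maxChars - notice.length);
  // Keep the END of the plan, where the change summary is printed.
  return notice + plan.slice(plan.length - keep);
}
```

In the step above it would then be used as ${fitPlanToComment(process.env.PLAN)} instead of ${process.env.PLAN}.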

The next thing we need to do is add a Workflow that will execute Terraform Apply when the PR is merged.

Workflow with Terraform Apply

Create file terraform-deploy-on-pr-merge.yml:

name: "Terraform: Apply on push to master"

# set other jobs with the same 'group' in a queue
concurrency:
  group: deploy-test
  cancel-in-progress: false

on:
  # run on PR merge, e.g. 'push' changes to the 'master'
  push:
    branches:
      - master
    # only if changes were in the 'terraform' directory
    paths:
      - terraform/**

permissions:
  # create OpenID Connect (OIDC) ID token
  id-token: write
  # allow read repository's content by steps
  contents: read

jobs:
  deploy:
    name: "Deploy: Terraform"
    runs-on: ubuntu-latest
    # to avoid using GitHub Runners time
    timeout-minutes: 30
    defaults:
      run:
        # run all steps in the 'terraform' directory
        working-directory: ./terraform
    steps:
      # get repository's content
      - name: "Setup: checkout"
        uses: actions/checkout@v4

      # setup 'env.AWS_*' variables to run Terraform
      - name: "Setup: Configure AWS credentials"
        uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: ${{ vars.TF_AWS_ROLE }}
          role-session-name: github-actions-terraform
          role-duration-seconds: 900
          aws-region: us-east-1

      # use TFLint to check the code
      - name: "Setup: TFLint"
        uses: terraform-linters/setup-tflint@v3
        with:
          tflint_version: v0.48.0

      - name: "Test: Terraform lint"
        run: tflint -f compact
        shell: bash

      # use official Action
      - name: "Setup: Terraform"
        uses: hashicorp/setup-terraform@v3

      # get modules, configure backend
      - name: "Setup: Terraform Init"
        id: init
        run: terraform init -no-color

      # verify whether a configuration is syntactically valid
      - name: "Test: Terraform Validate"
        id: validate
        run: terraform validate -no-color

      # create a Plan to use with the 'apply'
      - name: "Deploy: Terraform Plan"
        id: plan
        run: terraform plan -no-color -out tf.plan

      # deploy changes using Plan's file
      - name: "Deploy: Terraform Apply"
        id: apply
        run: terraform apply -no-color tf.plan

Here everything is almost the same as in the previous workflow, only the trigger will be push to the master, and the result of terraform plan is saved to the file tf.plan, and then we can execute terraform apply with that file.

Push it to the repository, merge to the master branch, and we have a completed apply:

Okay. Looks good?

But there is a problem.

What if? Outdated Terraform Plan

And the problem is that between the time when we saw the result of terraform plan in the comments to the PR, and the time when it is merged and executed, some resources may change - either someone will run the deploy job from another PR, or someone will deploy their own changes from their own machine (we still allow this).

And then the Plan that we saw in the results of the Terraform Test workflow will no longer be relevant.

The second issue is that terraform apply does not execute the Plan that we reviewed in the PR comments. Instead, it applies a new Plan that is generated during the execution of the deploy workflow.

So what can we do to prevent this from happening?

Terraform: “Saved plan is stale”

As a solution, we can save the results of terraform plan to a file, and then pass the same file to terraform apply.

Then, if the Terraform State has changed since the Plan was created, terraform apply with that plan file will fail, see Manual State Pull/Push.

How can we check how it works (i.e. how it fails)?

- deploy our bucket
- add a tag
- run terraform plan -out test.plan
- add another tag
- run terraform apply
- and then run terraform apply once again, but from the test.plan file, to simulate the changes that occurred between the launch of our workflows

So we have a bucket:

resource "aws_s3_bucket" "example" {
  bucket = "${var.project_name}-bucket-ololo"

  tags = {
    Name = "${var.project_name}-bucket"
  }
}

Deploy it with terraform apply:

$ terraform apply

...

aws_s3_bucket.example: Creating...

aws_s3_bucket.example: Creation complete after 3s [id=atlas-test-bucket-ololo]

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.

Add a tag:

resource "aws_s3_bucket" "example" {
  bucket = "${var.project_name}-bucket-ololo"

  tags = {
    Name   = "${var.project_name}-bucket"
    NewTag = "NewTag"
  }
}

Run terraform plan, save the result to a file:

$ terraform plan -out=test.plan

...

Plan: 0 to add, 1 to change, 0 to destroy.

Saved the plan to: test.plan

Add another tag:

resource "aws_s3_bucket" "example" {
  bucket = "${var.project_name}-bucket-ololo"

  tags = {
    Name    = "${var.project_name}-bucket"
    NewTag  = "NewTag"
    NewTag2 = "NewTag2"
  }
}

Deploy without test.plan:

$ terraform apply

...

aws_s3_bucket.example: Modifying... [id=atlas-test-bucket-ololo]

aws_s3_bucket.example: Modifications complete after 3s [id=atlas-test-bucket-ololo]

Apply complete! Resources: 0 added, 1 changed, 0 destroyed.

And now let’s try to deploy from the file:

$ terraform apply test.plan

Acquiring state lock. This may take a few moments...

╷

│ Error: Saved plan is stale

│

│ The given plan file can no longer be applied because the state was changed by another operation after the plan was created.

Great — our deployment failed.
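To make the mechanics of that check easier to picture, here is a toy model in JavaScript. It is only my simplified understanding, not Terraform's actual implementation: as far as I know, a state has a lineage and a serial number that grows with every write, and a saved plan remembers which state snapshot it was computed against. The function names createPlan and applyPlan and the object shapes are made up for the illustration.

```javascript
// Toy model only: real Terraform keeps much richer data in state and plan files.
function createPlan(state, changes) {
  // Remember which state snapshot the plan was computed against.
  return { lineage: state.lineage, serial: state.serial, changes };
}

function applyPlan(state, plan) {
  // Refuse a plan that was made for a different (older) state snapshot.
  if (plan.lineage !== state.lineage || plan.serial !== state.serial) {
    throw new Error('Saved plan is stale');
  }
  // Applying writes a new state snapshot, which bumps the serial.
  return {
    ...state,
    serial: state.serial + 1,
    tags: { ...state.tags, ...plan.changes },
  };
}

// The same sequence as in the manual test above:
const state0 = { lineage: 'abc', serial: 1, tags: { Name: 'atlas-test-bucket' } };
const savedPlan = createPlan(state0, { NewTag: 'NewTag' });           // plan -out test.plan
const state1 = applyPlan(
  state0,
  createPlan(state0, { NewTag: 'NewTag', NewTag2: 'NewTag2' })        // plain apply
);

try {
  applyPlan(state1, savedPlan);                                       // apply test.plan
} catch (err) {
  console.log(err.message);
}
```

The last call fails because savedPlan still points at serial 1, while the plain apply has already moved the state forward.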

GitHub Actions and Artifacts: transferring a file from Terraform Plan

Now let’s try to implement a mechanism for transferring a plan file between workflows. First, let’s save it to artifact.

Note: here you also need to consider security, because the plan may contain confidential data. On the other hand, if someone got unauthorized access to your CI, you’re already in trouble.

Save Plan output as an Artifact

So what do we need to do?

- when creating a Pull Request, run terraform plan -out name.tfplan
- save the name.tfplan file as an artifact
- when the Pull Request is merged, download this name.tfplan to the GitHub Runner
- and execute terraform apply name.tfplan

Looks simple enough? Well, no.

Let’s start with uploading the artifact after the test — here, everything is really simple.

To use an artifact from a particular PR when executing terraform apply, add the PR number to the file name.

Update the terraform-test-on-pr.yml file:

...
      # create a Plan to see what will be changed
      # save it to the file with a PR number
      - name: "Test: Terraform Plan"
        id: plan
        run: terraform plan -no-color -out env-test-${{ github.event.pull_request.number }}.tfplan

      # save as an artifact to this workflow run
      - name: Upload Terraform Plan
        uses: actions/upload-artifact@v4
        with:
          name: env-test-${{ github.event.pull_request.number }}.tfplan
          path: "terraform/env-test-${{ github.event.pull_request.number }}.tfplan"
          # throw an error if we can't find the Plan's file
          if-no-files-found: error
          # replace if an existing one is found
          overwrite: true
...

(in the path set the terraform/ directory, because “upload-artifact action does not use the working-directory setting”, see No files were found with the provided path: build. No artifacts will be uploaded.)
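One detail to watch with this naming scheme: the workflow can also be started manually with workflow_dispatch, and in that case github.event.pull_request.number is empty, so the file would be called env-test-.tfplan. A small sketch of the naming logic with a guard for that case is below. The helper planArtifactName is hypothetical and exists only to illustrate the rule; in the real workflow the name is built by the ${{ }} expression.

```javascript
// Hypothetical helper mirroring the artifact naming scheme from the workflow.
function planArtifactName(prNumber, env = 'test') {
  const n = Number(prNumber);
  // An empty string (manual run) becomes 0, undefined becomes NaN: both are rejected.
  if (!Number.isInteger(n) || n <= 0) {
    throw new Error(`no valid PR number: "${prNumber}"`);
  }
  return `env-${env}-${n}.tfplan`;
}

console.log(planArtifactName(42)); // env-test-42.tfplan
```

In the workflow itself the same effect can be achieved by adding if: github.event_name == 'pull_request' to the upload step, like we already do for the comment step.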

Push the changes, and check the job:

Use Terraform Plan’s Artifact for the Terraform Apply

Next, we need to use this file in another workflow where the deployment takes place — and here we have two problems:

- the official actions/download-artifact does not support downloading files from other workflows
- our workflow with terraform apply is triggered by the push event, not pull_request, and in the github context we no longer have the github.event.pull_request.number

The first problem can be solved with another Action — dawidd6/action-download-artifact, to which you can pass the file name of another workflow that will contain the required artifact.

And the second problem can be solved with the help of the jwalton/gh-find-current-pr Action, which makes a request to the GitHub API and returns a PR number from which the Merge was made.
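If you would rather not depend on one more third-party Action for this, the PR number can probably be obtained with the actions/github-script we already use, because the GitHub REST API can list the pull requests associated with a commit. The sketch below is an assumption on my side and was not part of the tested setup: the function name findMergedPrNumber is made up, while github and context are the objects that github-script passes to the script.

```javascript
// Sketch for actions/github-script: `github` (Octokit client) and `context`
// are provided by that Action; here they are plain function parameters.
async function findMergedPrNumber(github, context) {
  const { data: prs } = await github.rest.repos.listPullRequestsAssociatedWithCommit({
    owner: context.repo.owner,
    repo: context.repo.repo,
    commit_sha: context.sha, // the commit that triggered the 'push' event
  });
  // Take a PR that was actually merged; return null if there is none.
  const merged = prs.find(pr => pr.merged_at !== null);
  return merged ? merged.number : null;
}
```

Inside a github-script step it would be called as return await findMergedPrNumber(github, context), and the value would then be available to later steps as steps.<id>.outputs.result.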

So, update our terraform-deploy-on-pr-merge.yml: add permissions.actions: read and permissions.pull-requests: read, remove the Terraform Plan step, add two new steps (get the PR number and download the artifact), and update Terraform Apply to use the downloaded plan file:

...
permissions:
  # create OpenID Connect (OIDC) ID token
  id-token: write
  # allow read repository's content by steps
  contents: read
  # get PR number
  pull-requests: read
  # allow download artifacts
  actions: read
...
      # get a PR number used to make the 'push' when merging
      - name: "Misc: get PR number"
        uses: jwalton/gh-find-current-pr@master
        id: findpr
        with:
          state: all

      # download artifact with the Terraform Plan file of the 'Terraform Test' workflow
      - name: "Misc: Download Terraform Plan"
        uses: dawidd6/action-download-artifact@v3
        with:
          github_token: ${{secrets.GITHUB_TOKEN}}
          # the Workflow to look for the artifact
          workflow: terraform-test-on-pr.yml
          # PR number used to generate the artifact and trigger this workflow
          pr: ${{ steps.findpr.outputs.pr }}
          # artifact's name
          name: env-test-${{ steps.findpr.outputs.pr }}.tfplan
          path: terraform/
          # ensure we have the file in the workflow
          check_artifacts: true

      - name: "Deploy: Terraform Apply"
        id: apply
        run: terraform apply -no-color env-test-${{ steps.findpr.outputs.pr }}.tfplan

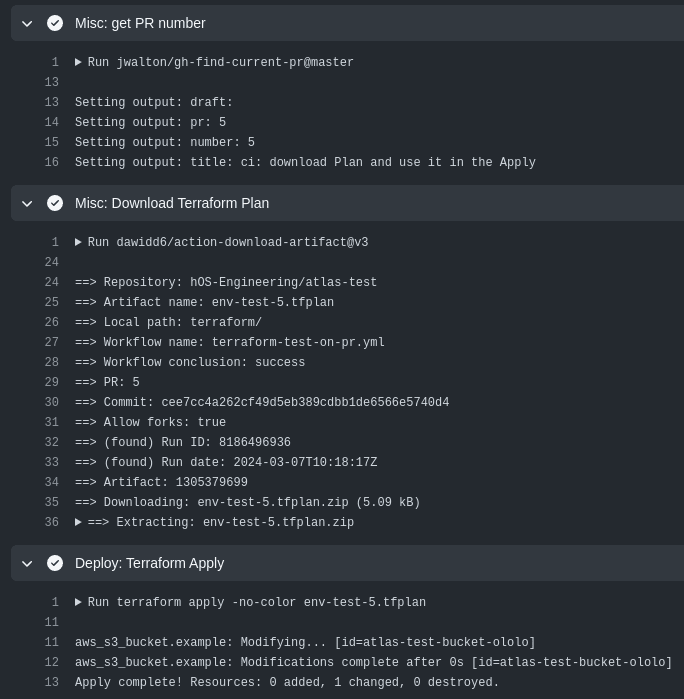

Push the changes, and we have a Terraform Apply executed using the file from the Terraform Plan of the previous workflow:

Another important thing is that thanks to the same value in concurrency.group in both Workflows, our deployment will always wait for the Plan to execute, which is useful when a PR is created and immediately merged:

...
# set other jobs with the same 'group' in a queue
concurrency:
  group: deploy-test
  cancel-in-progress: false
...

The solution seems to work, and I tested it with several simultaneous PRs. Still, I’m a bit concerned that there are “too many moving parts” and that we rely on third-party GitHub Actions.

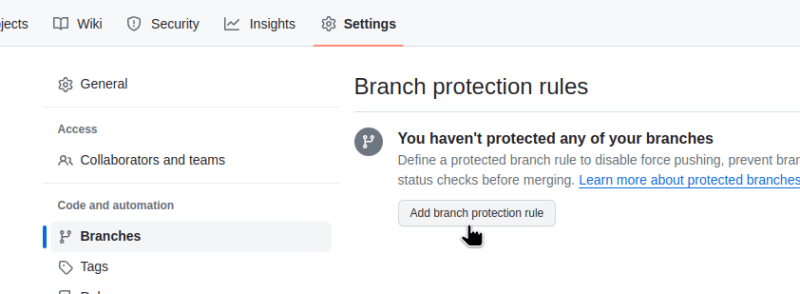

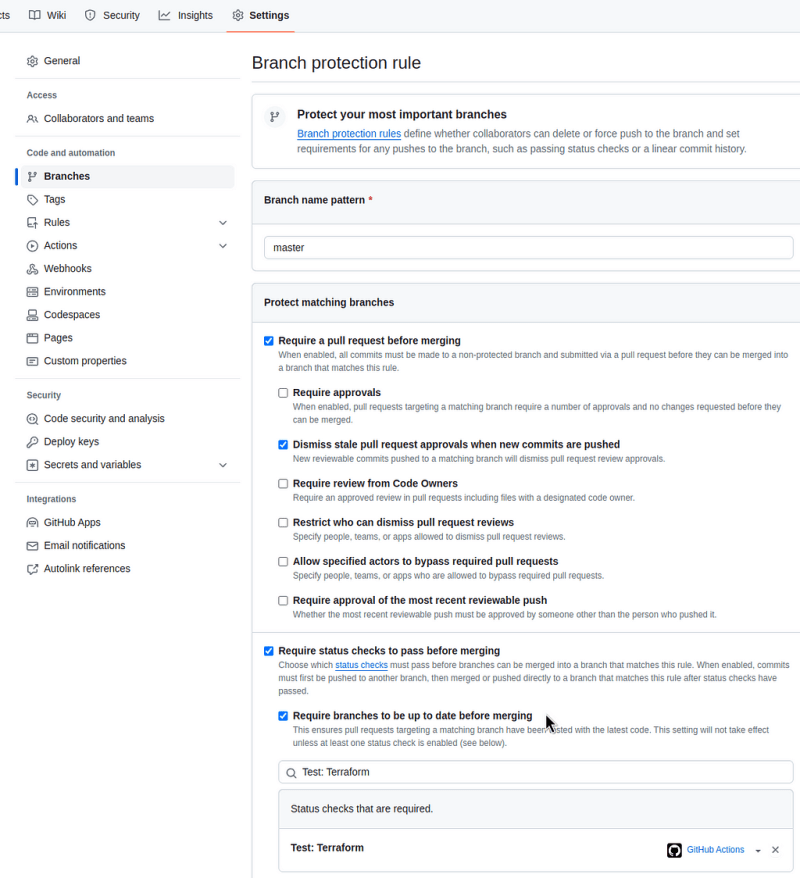

Using GitHub Branch protection rule

There is one more option, which you can use either on its own or together with the approach above: set a Branch protection rule that requires the branch from which the PR is made to contain all the changes that are already in master.

If you use only this approach, remove the steps that upload and download the artifact, and instead run terraform plan -out file.tfplan and terraform apply file.tfplan in one job, as we did before the solution with artifacts.

Although with this approach, you still rely on the fact that the Plan that will be created during the execution of the job will be == the Plan that you checked in the PR comments, because the “fuse” with the “Saved plan is stale” will not work here.

However, configuring a Branch protection rule anyway will be useful:

Enable the “Require status checks to pass before merging” and “Require branches to be up to date before merging”:

Then, if a merge was made in the master branch from another branch — GitHub will not allow your PR Merge to be performed until you update your branch, and this will cause another workflow run with the Terraform Test, and that will generate a new Plan, which will be added in the comment:

Click on the Update branch — a new check is launched, and a new artifact is generated with a new Plan in the artifact (if we combine both solutions):

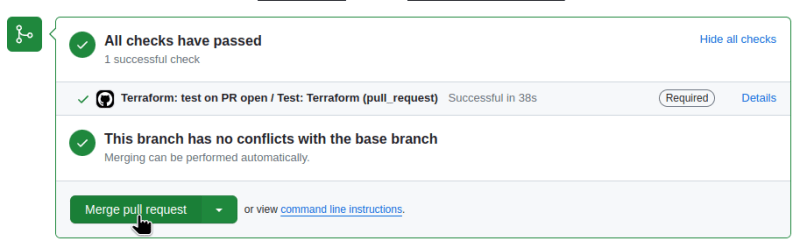

And now we can merge and deploy:

Done.

P.S. And in any case, always have S3 Bucket Versioning to have a backup of your state files.

Originally published at RTFM: Linux, DevOps, and system administration.
