Contents

- Summary

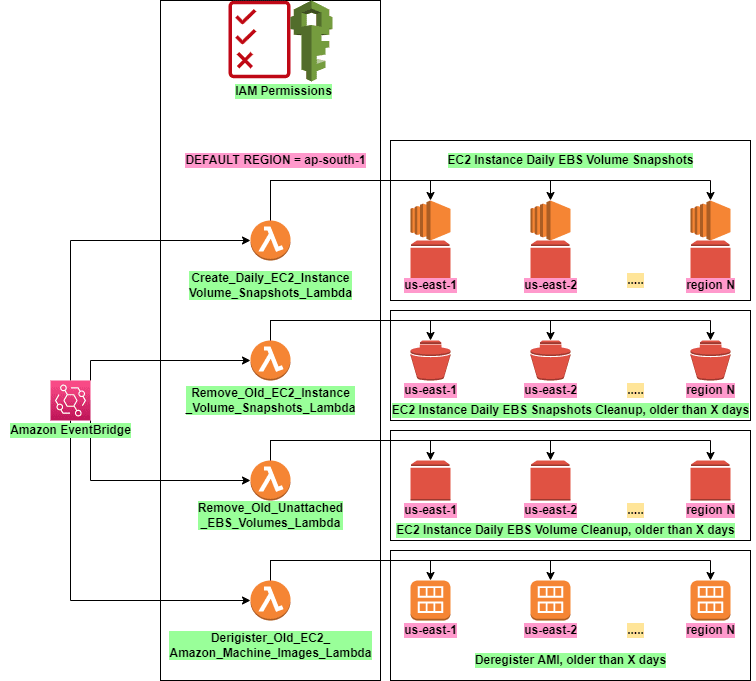

- Architecture diagram and high level overview of all the solutions

- AWS IAM permissions

- AWS EventBridge and Lambda

- EC2 instance daily ebs volume snapshots solution

- Coming up next

Summary

Welcome to PART-1 of a four-part tutorial on how to use AWS services like Lambda and EventBridge to perform housekeeping on your AWS infrastructure automatically, on a schedule. I am going to perform the following using AWS Lambda, EventBridge and Python 🐍.

- [PART-1] Create EBS volume snapshots of all our AWS EC2 instances in all regions, and tag them.

- [PART-2] Cleanup EBS volume snapshots from all our regions, whose age is greater than X days.

- [PART-3] Cleanup EBS volumes from all our regions that are not attached to a resource, and whose age is greater than X days.

- [PART-4] Deregister Amazon Machine Images from all our regions whose age is greater than X days.

All four tasks run on AWS EventBridge, AWS Lambda and Python. This removes the manual effort of going through every region, finding these artifacts and performing maintenance on them.

Architecture diagram and high level overview of all the solutions

Architecture diagram

EC2 instance daily ebs volume snapshots

I am going to list all instances in all of our regions, along with their associated volumes, and create EBS snapshots from them. I am also going to add custom tags to these snapshots.

EC2 instance daily ebs snapshot cleanup after X days

I am going to list all the EBS snapshots that we own in our regions, and then delete those older than X days.

EC2 instance daily unattached ebs volume cleanup after X days

I am going to list all the EBS volumes that we own in our regions, and then delete those which are not attached to any resource and older than X days.

Deregister old EC2 Amazon machine images after X days

I am going to list all the Amazon Machine Images that we own in our regions, and then deregister those older than X days.
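The three cleanup solutions above share one test: is a resource older than X days? A minimal sketch of that check is shown below. The function name `is_older_than` is hypothetical, and it assumes the creation time is a timezone-aware `datetime`, which is what boto3 returns for a snapshot's `start_time`.

```python
from datetime import datetime, timedelta, timezone


def is_older_than(created_at, max_age_days, now=None):
    """Return True if created_at lies more than max_age_days before now.

    created_at must be timezone-aware (assumption: it comes from boto3).
    """
    now = now or datetime.now(timezone.utc)
    return now - created_at > timedelta(days=max_age_days)


# Hypothetical example: a snapshot created 10 days ago, with X = 7.
created = datetime.now(timezone.utc) - timedelta(days=10)
print(is_older_than(created, 7))
```

Passing `now` explicitly keeps the check easy to test without depending on the wall clock.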

AWS IAM permissions

Create an IAM role with the following permissions and trust policy.

Permissions

Attach a permissions policy to your role that allows:

- AWS CloudWatch log stream creation and writing.

- Describing EC2 instances, volumes, snapshots and AMIs.

- EC2 snapshot creation and deletion.

- EC2 volume deletion.

- EC2 AMI deregistration.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Action": [
            "logs:CreateLogStream",
            "logs:CreateLogGroup",
            "logs:PutLogEvents"
          ],
          "Resource": "arn:aws:logs:*:*:*"
        },
        {
          "Effect": "Allow",
          "Action": [
            "ec2:CreateSnapshot",
            "ec2:CreateTags",
            "ec2:DeleteSnapshot",
            "ec2:Describe*",
            "ec2:ModifySnapshotAttribute",
            "ec2:ResetSnapshotAttribute",
            "ec2:DeleteVolume",
            "ec2:DeregisterImage"
          ],
          "Resource": "*"
        }
      ]
    }

Trust relationship

Allow the Lambda service to assume your IAM role.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "Service": "lambda.amazonaws.com"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }
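If you prefer to create the role from code rather than the console, the following is a rough sketch using the boto3 IAM client calls `create_role` and `put_role_policy`. The function name `create_maintenance_role`, the role name in the usage comment and the inline policy name are all hypothetical; the IAM client is passed in so the function can be tried without AWS credentials.

```python
import json

# Trust policy from this section: lets the Lambda service assume the role.
TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_maintenance_role(iam_client, role_name, permissions_policy):
    """Create the role with the trust policy, then add the permissions inline.

    permissions_policy is the permissions JSON from this section as a dict.
    Returns the ARN of the new role.
    """
    role = iam_client.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam_client.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{role_name}-permissions",  # hypothetical naming scheme
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role["Role"]["Arn"]


# Usage (needs boto3 and AWS credentials; the role name is hypothetical):
#   import boto3
#   arn = create_maintenance_role(
#       boto3.client("iam"), "ec2-maintenance-lambda", permissions_policy)
```

An inline policy keeps the sketch short. A managed policy attached to the role would work just as well.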

AWS EventBridge and Lambda

I am going to use AWS Lambda to run the Python code that performs our infrastructure maintenance.

I am going to use AWS EventBridge to run our code via lambda on a schedule. I am going to define our schedule using a cron expression, to trigger daily at midnight, local to our timezone.

You can create an EventBridge rule by going to AWS EventBridge service ➡️ rules ➡️ create rule. The cron expression we will use is cron(30 18 * * ? *). I am based in India, and this translates to midnight IST, which is 18:30:00 GMT.
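EventBridge rule cron expressions are evaluated in UTC, so a local time has to be shifted before it goes into the rule. As a small sketch, the helper below (the name `daily_utc_cron` is hypothetical) derives the expression for a daily local time from a fixed UTC offset. It assumes a zone without daylight saving changes, which holds for IST.

```python
from datetime import datetime, timedelta, timezone


def daily_utc_cron(local_hour, local_minute, utc_offset):
    """Return an EventBridge cron expression (in UTC) for a daily local time."""
    local_tz = timezone(utc_offset)
    # Any date works with a fixed offset; only the time of day matters.
    local_time = datetime(2024, 1, 1, local_hour, local_minute, tzinfo=local_tz)
    utc_time = local_time.astimezone(timezone.utc)
    # Cron fields: minutes hours day-of-month month day-of-week year
    return f"cron({utc_time.minute} {utc_time.hour} * * ? *)"


IST_OFFSET = timedelta(hours=5, minutes=30)
print(daily_utc_cron(0, 0, IST_OFFSET))  # cron(30 18 * * ? *)
```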

I am going to use AWS CloudWatch Logs to log the data from our Lambda runs.
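Inside a Lambda function, output written through Python's `logging` module is sent to the function's CloudWatch Logs log group, provided the role has the log permissions listed earlier. A minimal sketch of a handler that logs each run follows. The handler name `lambda_handler` and the returned dictionary are assumptions made for illustration.

```python
import logging

# The Lambda runtime attaches a handler to the root logger;
# we only need to set the level we care about.
logger = logging.getLogger()
logger.setLevel(logging.INFO)


def lambda_handler(event, context):
    """Entry point invoked by the EventBridge rule (assumed handler name)."""
    logger.info("Maintenance run started")
    # ... the maintenance work would go here ...
    logger.info("Maintenance run finished")
    return {"status": "ok"}
```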

EC2 instance daily ebs volume snapshots solution

Workflow and code

- The workflow that I will use is:

> 🕛 Midnight ➡️ AWS EventBridge rule triggers ➡️ Runs Lambda code ➡️ Creates EC2 volume snapshots for all instances in each region ➡️ Logs to CloudWatch Logs

- Install boto3

    python3 -m pip install boto3

Lambda code

- Initializing a boto3 client

    import boto3

    ec2_client = boto3.client('ec2')

- Fetching all regions

    def get_regions(ec2_client):
        return [region['RegionName'] for region in ec2_client.describe_regions()['Regions']]

- Initializing a boto3 ec2 resource per region

    ec2_resource = boto3.resource('ec2', region_name=region)

- List all instances in the region using instances.all(). You can also use filters if you want.

    instances = ec2_resource.instances.all()

- List all volumes of each instance using volumes.all(). You can also use filters if you want.

    for instance in instances:
        volumes = instance.volumes.all()

- Create a snapshot of each volume using the create_snapshot() method. Each snapshot gets an 'Instance_Id' tag set to the ID of the instance the volume is attached to, and a 'Device' tag set to the 'Device' attribute of the volume's attachment.

    # Runs inside the `for instance in instances` loop above.
    for volume in volumes:
        # Build the description and tags per volume, so that
        # `instance` and `volume` are both defined when used.
        desc = f'Backup for instance: {instance.id} and volume: {volume.id}.'
        tags = [
            {'Key': 'Instance_Id', 'Value': f'{instance.id}'},
            {'Key': 'Device', 'Value': f'{volume.attachments[0]["Device"]}'},
        ]
        snapshot = volume.create_snapshot(
            Description=desc,
            TagSpecifications=[
                {'ResourceType': 'snapshot', 'Tags': tags}
            ]
        )

Putting it all together

- Take a look at my GitHub page for the complete code.
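For orientation, here is one way the steps above could be combined into a single Lambda function. This is a sketch under stated assumptions, not the code from the GitHub page: the names `snapshot_all_volumes` and `lambda_handler` and the returned dictionary are hypothetical. The boto3 calls are the ones used in the steps above.

```python
def snapshot_all_volumes(ec2_resource):
    """Snapshot every volume attached to every instance in one region.

    Returns the IDs of the snapshots that were started.
    """
    snapshot_ids = []
    for instance in ec2_resource.instances.all():
        for volume in instance.volumes.all():
            snapshot = volume.create_snapshot(
                Description=(
                    f"Backup for instance: {instance.id} "
                    f"and volume: {volume.id}."
                ),
                TagSpecifications=[
                    {
                        "ResourceType": "snapshot",
                        "Tags": [
                            {"Key": "Instance_Id", "Value": instance.id},
                            {
                                "Key": "Device",
                                "Value": volume.attachments[0]["Device"],
                            },
                        ],
                    }
                ],
            )
            snapshot_ids.append(snapshot.id)
    return snapshot_ids


def lambda_handler(event, context):
    """Assumed entry point: loop over all regions and snapshot each one."""
    import boto3  # imported here so the helper above has no AWS dependency

    ec2_client = boto3.client("ec2")
    regions = [r["RegionName"] for r in ec2_client.describe_regions()["Regions"]]
    results = {}
    for region in regions:
        ec2_resource = boto3.resource("ec2", region_name=region)
        results[region] = snapshot_all_volumes(ec2_resource)
    return results
```

Keeping the per-region work in its own function means it can be exercised with a stand-in resource object before it is pointed at a real account.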

CloudWatch logs

Coming up next

Part 2 of this tutorial will cover the code for the daily cleanup of EBS snapshots older than X days.

![Cover image for AWS Infrastrucutre Maintenance Using AWS Lambda and AWS EventBridge [EC2 instance daily ebs volume snapshots] [Part 1]](https://media2.dev.to/dynamic/image/width=1000,height=420,fit=cover,gravity=auto,format=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fbjnkkzj9jgsjswkqbhvc.jpg)
