Why do we need parsers?

The first obvious use case is computer languages. (I use the term computer language because not everybody considers HTML a programming language). This includes:

- compilers

- interpreters

- syntax highlighting

- static analysis tools, like linters and language servers

But also:

- network protocols

- serialization and deserialization

- configuration files

- command-line arguments

- natural language processing

- query languages (search box on the website)

- validation, like email validation

The irony

Parser generators have been studied since the ’60s. In practice, this knowledge is mostly used for compilers and interpreters. I guess this is because the subject typically introduced in course about compilers, for example cs143, 15-411/611, CS-321, 322, COP5621.

For other tasks, people typically use ad-hoc solutions and regular expressions. The obvious problem is that ad-hoc solutions are error-prone and harder to maintain.

Regular expressions (I’m talking about the first implementation - grep; not about the formal system by Kleene) were created to be used within the command-line. The command-line interface typically requires one-liners, hence terse notation (though it is possible to open editor and pipe text from the buffer).

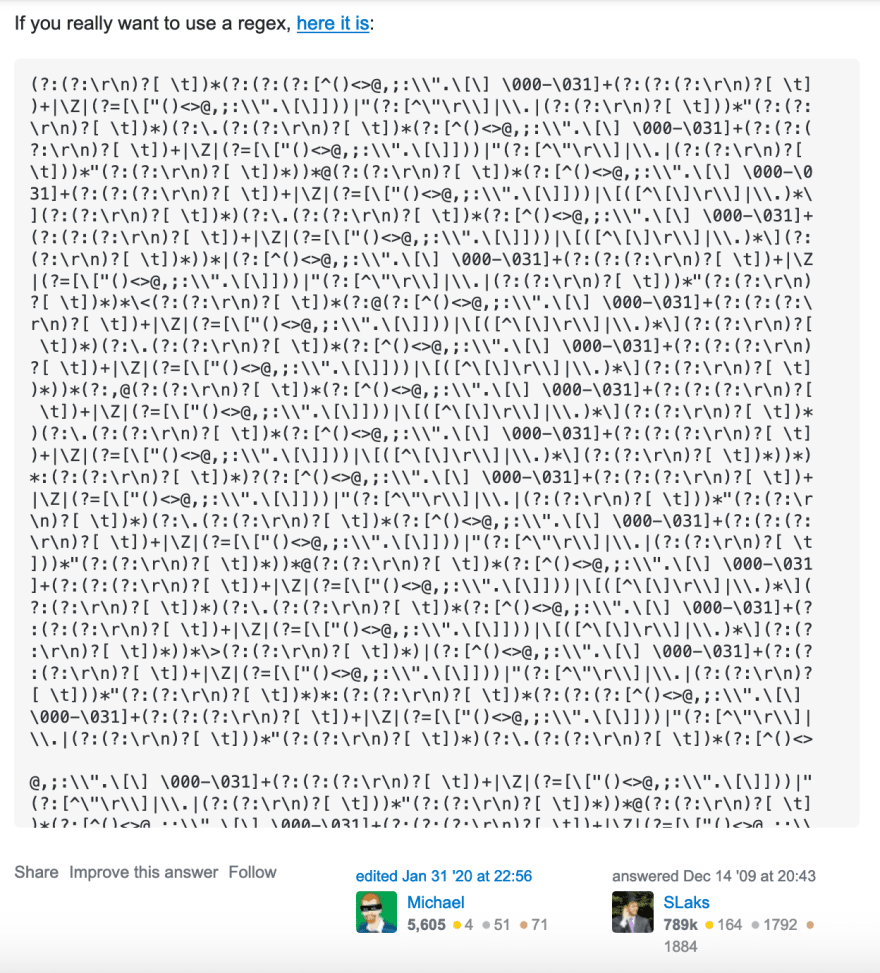

I don’t think that author meant it to be used to validate emails, for example, see this answer on StackOverflow.

Two potentially better solutions:

- rfc5322 provides formal grammar (ABNF), so it should be possible to generate a parser

- Use something like Rosie - the notation is not so terse. Rosie uses PEG, which was introduced only in 2004.

Second example of ad-hoc solution is syntax highlighting with regular expressions, for example TextMatte language grammars. Compare ad-hoc solution (on the left) and real context-free language parser (on the right):

Credit: image taken here.

Even more irony

There is a lot of research about parsing and the most complex problems are:

- handling ambiguous grammar

- handling left-recursive grammar

- clear error reporting and error recovery

- incremental parsing

Most of those problems could be avoided with projectional editing and the source code can be stored as AST, for example, like in unison.

It is relatively easy to create a parser for non-ambiguous, non-left recursive CFG, like s-expressions or JSON, with linear complexity.

PS

I may sound a bit dramatic here, for example, there are a lot of binary parsers, but admit there is a bit of irony in the situation.

Top comments (6)

Since you mentioned projectional editing, did you ever play with jetbrains' mps?

I like the idea of ditching these text based programming systems of ours; no more tabs/spaces wars.

No I haven't

I actually like text as media for code (or I got used to it?). Text has some advantages, for example, it is easy to copy-paste, it is possible to do diffs.

This project looks interesting lamdu.org/. It looks like a text, but it is not free form editing, so there are no syntax errors.

jetbrains.com/mps/ does more or less that: define your language, generate projectional editors to make it look like text, or graphics, while keeping a model underneath.

you may find this interesting voelter.de/data/pub/LWB-ResultsAnd...

Yes! It was actually Markus Völter that I followed an MPS introduction with.

This is very a small world :)