Previously, I wrote about my favorite Mac apps. But I spend half of my time in the terminal, and I have a handful of CLI tools that make my life easier. Here are some of them:

Tools that I use every day

fish shell

Shell - the most important tool that you use every time you open the terminal. I’ve used Bash and Z shell in the past, and currently, I’m using fish. It’s a great shell with plenty of features out of the box, like autosuggestions, syntax highlighting, or switching between folders with ⌥+→ and ⌥+←.

On the one hand, this makes it perfect for beginners, because you don’t have to set up anything. On the other hand, because it’s using a different syntax than other shells, you usually can’t just paste scripts from the internet. You either have to change the incompatible commands to fish scripts or start a Bash session to run the bash scripts. I understand the idea behind this change (Bash is not the easiest language to use), but it doesn’t benefit me in any way. I write bash/fish scripts too seldom to memorize the syntax, so I always have to relearn it from scratch. And there are fewer resources for fish scripts than for bash scripts. I usually end up reading the documentation, instead of copy-pasting ready-made scripts from StackOverflow.

Do I recommend fish? Yes! Switching shells is easy, so give it a try. Especially if you don’t like to tinker with your shell and want to have something that works great with minimal configuration.

Fish plugins

You can add more features to fish with plugins. The easiest way to install them is to use a plugin manager like Fisher, Oh My Fish, or fundle.

Right now, I’m using Fisher with just three plugins:

- franciscolourenco/done - sends a notification when a long-running script is done. I don’t have a terminal open all the time. I’m using a Guake style terminal that drops down from the top of the screen when I need it and hides when I don’t. With this plugin, when I run scripts that take longer than a few seconds, I get a macOS notification when they finish.

- evanlucas/fish-kubectl-completions - provides autocompletion for kubectl (Kubernetes command line tool).

- fzf - integrates the fzf tool (see below) with fish.

I had more plugins in the past (rbenv, pyenv, nodenv, fzf, z), but I switched to different tools to avoid slowing down my shell (a mistake that I made in the past with Z shell).

If you want to see more resources for fish, check out the awesome-fish repository. Compared with Z shell and Bash, fish has fewer plugins, so it’s not the best option if you want to tweak it a lot. For me - that’s a plus. It stops me from enabling too many plugins and then complaining that it’s slow 😉.

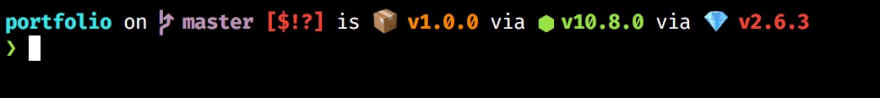

Starship

If I had to choose one favorite tool from this whole list - it would be Starship. Starship is a prompt that works for any shell. You install it, add one line of config to your .bashrc/.zshrc/config.fish, and it takes care of the rest.
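For Bash, that one line looks roughly like the sketch below. The `command -v` guard is an extra (an assumption on my side, not something Starship requires), so the shell still starts normally on a machine where Starship isn't installed. In fish, the equivalent line in config.fish is `starship init fish | source`.

```shell
# ~/.bashrc
# Initialize the Starship prompt, but only if the binary is on PATH.
# The guard is an optional safety net, not part of Starship's own setup.
if command -v starship >/dev/null 2>&1; then
    eval "$(starship init bash)"
fi
```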

It shows:

- git status of the current directory and different symbols, depending on if you have new files, pending changes, stashes, etc.

- Python version if you are in a Python project folder (the same applies to Go/Node/Rust/Elm and many other programming languages)

- How long it took the previous command to execute (if it was longer than a few milliseconds)

- Error indicator if the last command failed

And a bazillion other pieces of information. But, in a smart way! If you are not in a git repository, it hides the git info. If you are not in a Python project - there is no Python version (because there is no point in displaying it). It never overwhelms you with too much information, and the prompt stays beautiful, useful, and minimalistic.

Did I mention that it’s fast? It’s written in Rust, and even with so many features, it’s still faster than all my previous prompts! I’m very picky about my prompt, so I was usually hacking my own version. I was taking functions from existing prompts and gluing them together to make sure I only had the things that I needed and that it stayed fast. That’s why I was skeptical about Starship. “There is no way that an external tool can be faster than my meticulously crafted prompt!” Well, I was wrong. Give it a try, and I’m sure you are going to love it! Huge kudos to the creators of Starship!

z

“z” lets you quickly jump around your filesystem. It memorizes the folders that you visit, and after a short learning time, you can move between them using z part_of_the_folder_name.

For example, if I often go to folder ~/work/src/projects, I can just run z pro and immediately jump there. z’s algorithm is based on frecency - a combination of frequency and recency that works very well. If it memorizes a folder that you don’t want to use, you can always remove it manually.
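To make "frecency" a bit more concrete, here is a toy scoring function. It is only a sketch: the function name, the time buckets, and the weights are assumptions picked for illustration, not z's exact formula. The idea is simply that the visit count gets boosted for recently used folders and discounted for stale ones.

```shell
# Toy frecency score: visit count weighted by how recently the folder was used.
# The buckets and multipliers below are illustrative assumptions.
frecency() {
    local visits=$1 age_seconds=$2
    if [ "$age_seconds" -lt 3600 ]; then
        echo $(( visits * 4 ))      # used within the last hour
    elif [ "$age_seconds" -lt 86400 ]; then
        echo $(( visits * 2 ))      # used within the last day
    elif [ "$age_seconds" -lt 604800 ]; then
        echo $(( visits / 2 ))      # used within the last week
    else
        echo $(( visits / 4 ))      # older than a week
    fi
}

frecency 10 60         # 10 visits, last one a minute ago: prints 40
frecency 30 2592000    # 30 visits, last one 30 days ago: prints 7
```

With this kind of scoring, a folder you opened a few times today can outrank one you visited a lot last month, which matches how z feels in daily use.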

It speeds up moving between commonly visited folders on my computer and saves me a lot of keystrokes (and path memorization).

fzf

fzf stands for “fuzzy finder”. It’s a general-purpose tool that lets you find files, commands in the history, processes, git commits, and more using a fuzzy search. You type some letters, and it tries to match those letters anywhere in the list of results. The more letters you type, the more accurate the results are. You probably know this type of search from your code editor - when you use the command to open a file, and you type just part of the file name instead of the full path - that’s a fuzzy search.
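If you are curious what "match those letters anywhere" means in practice, the sketch below imitates the idea with plain grep. It is not how fzf is implemented (fzf also ranks the matches, and this does not). The `fuzzy_filter` name is made up for this example, and it assumes the query contains only letters and digits.

```shell
# Keep only the lines that contain the query's characters in order,
# with anything allowed in between. Assumes a query made of plain
# letters/digits (no regex metacharacters). No ranking is done.
fuzzy_filter() {
    query=$1
    # Turn "mnpy" into the pattern "m.*n.*p.*y.*"
    pattern=$(printf '%s' "$query" | sed 's/./&.*/g')
    grep -i -- "$pattern"
}

# Prints src/main.py and tests/test_main.py, but not docs/manual.md
printf '%s\n' src/main.py docs/manual.md tests/test_main.py | fuzzy_filter mnpy
```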

I use it through the fish fzf plugin, so I can search through command history or quickly open a file. It’s another small tool that saves me time every day.

fd

Like the find command but much simpler to use, faster, and comes with good default settings.

You want to find a file called “invoice,” but you are not sure what extension it has? Or maybe it was a directory that was holding all your invoices, not a single file? You can either roll up your sleeves and start writing those regex patterns for the find command or just run fd invoice. For me, the choice is easy 😉.

By default, fd ignores files and directories that are hidden or listed in the .gitignore. Most of the time - that’s what you want, but for those rare cases when I need to disable this feature, I have an alias: fda='fd -IH'.

The output is nicely colorized and, according to the benchmarks (or the GIF above), it’s even faster than find.

ripgrep

In a similar manner to fd mentioned above, ripgrep is an alternative to the grep command - a much faster one, with sane defaults and colorized output.

It skips files ignored by .gitignore and hidden ones, so you will probably need this alias: rga='rg -uuu'. It disables all smart filtering and makes ripgrep behave as standard grep.
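Here are the fd and ripgrep aliases written as shell functions, with the flags spelled out. This is a sketch in Bash/Z shell syntax (fish has its own `alias` and `function` commands), and it assumes that fd and rg are installed.

```shell
# fd: -I is --no-ignore (don't honor .gitignore), -H is --hidden (include hidden files)
fda() { fd -IH "$@"; }

# rg: each -u lifts one filter: ignore files first, then hidden files, then binary files
rga() { rg -uuu "$@"; }
```

Functions behave like the aliases here, but they also work in scripts, where aliases are usually not expanded.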

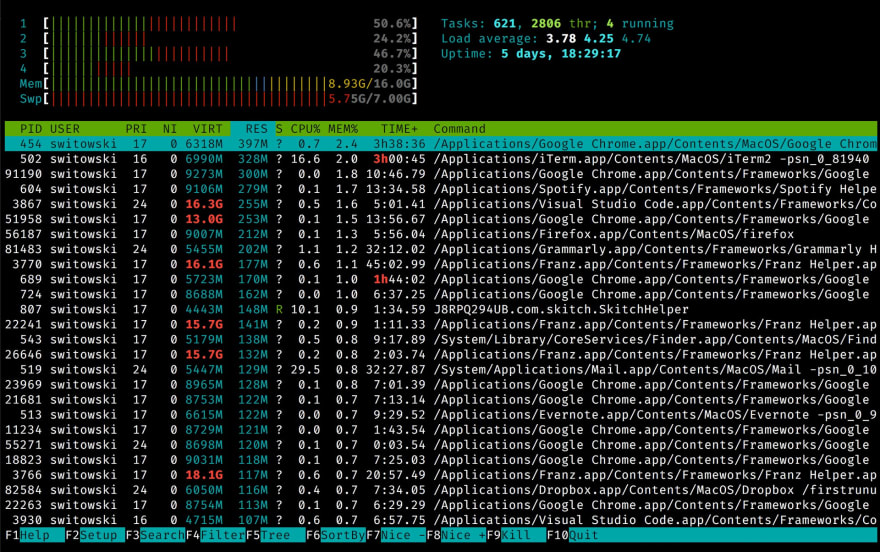

htop and glances

The most common tool to show information about processes running on Linux or Mac is called top. It’s the best friend of every system administrator. And, even if you are mostly doing web development like me, it’s useful to see what’s going on with your computer. You know, just to see if it was Docker or Chrome that ate all your RAM this time.

top is quite basic, so most people switch to htop. htop is top on steroids - colorful, with plenty of options, and overall more comfortable to use.

glances is a complementary tool to htop. Apart from listing all the processes with their CPU and memory usage, it also displays additional information about your system.

You can see:

- network or disk usage

- used and total space on your filesystem

- data from different sensors (like the battery)

- and a list of processes that recently consumed an excessive amount of resources

I still use htop for faster filtering and killing processes, but I use glances to quickly glance at what’s going on with my computer. It comes with an API, a web UI, and various export formats, so you can take system monitoring to the next level. I highly recommend it!

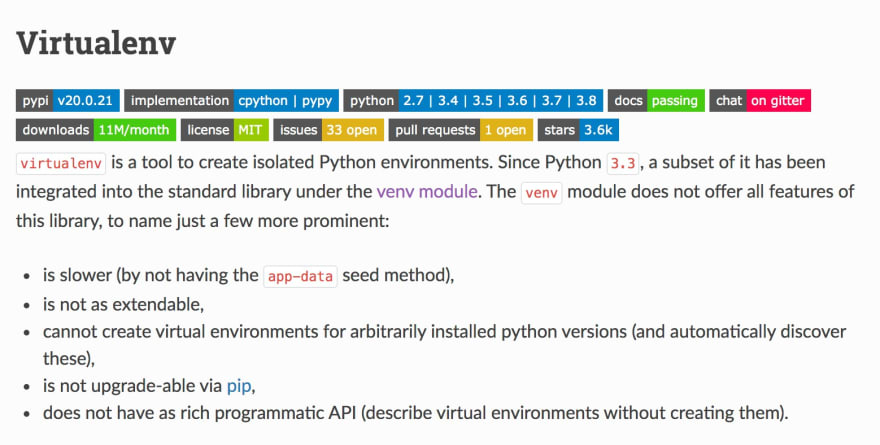

virtualenv and virtualfish

Virtualenv is a tool for creating virtual environments in Python (I like it more than the built-in venv module).

VirtualFish is a virtual environment manager for the fish shell (if you are not using fish, check out virtualenvwrapper). It provides a bunch of additional commands to create, list, or delete virtual environments quickly.

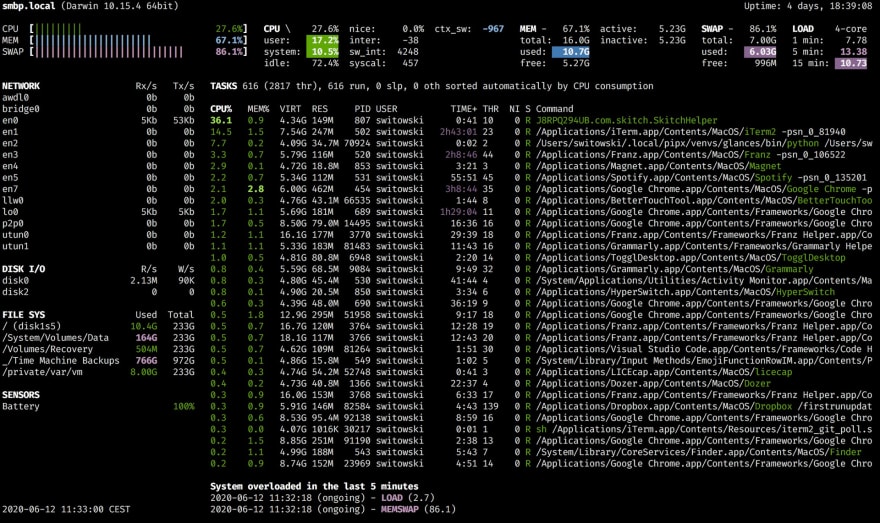

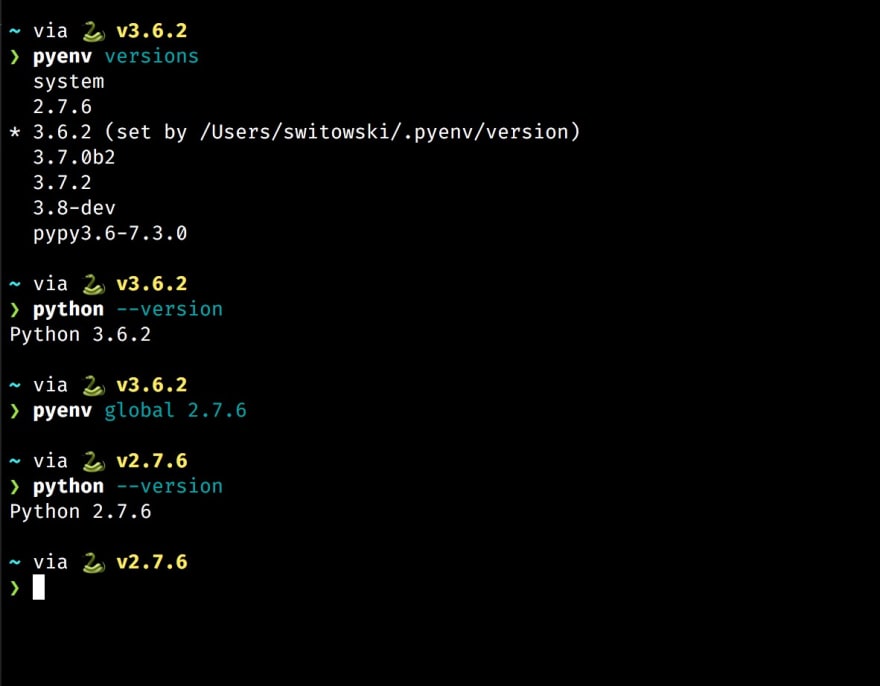

pyenv, nodenv, and rbenv

Pyenv, nodenv, and rbenv are tools for managing different versions of Python, Node, and Ruby on my computer.

Let’s say you want to have two versions of Python on your computer. Maybe you are working on two different projects, or you still need to support Python 2. Managing different Python versions is hard. You need to make sure that different projects install packages with the correct version. If you are not careful, it’s easy to mess up this fragile setup and overwrite binaries used by other packages.

A version manager helps a lot and turns this nightmare into a pretty manageable task. A good version manager can swap the Python version globally or “per folder”. And every version is isolated from the others.
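The “per folder” part usually boils down to a small version file that the manager looks up. The sketch below shows that idea in plain shell: walk up from a directory until a `.python-version` file is found, otherwise fall back to a global default. It is a simplification (pyenv's real lookup has more steps, for example an environment variable override), and `resolve_python_version` and `GLOBAL_PYTHON_VERSION` are hypothetical names used only here.

```shell
# Pick the Python version for a directory: the nearest .python-version file
# wins ("per folder"); otherwise fall back to the global default.
# GLOBAL_PYTHON_VERSION is a hypothetical variable for this sketch.
resolve_python_version() {
    dir=$1
    while :; do
        if [ -f "$dir/.python-version" ]; then
            cat "$dir/.python-version"
            return 0
        fi
        parent=$(dirname "$dir")
        [ "$parent" = "$dir" ] && break    # reached the top, stop walking
        dir=$parent
    done
    echo "${GLOBAL_PYTHON_VERSION:-system}"
}

# Example: a legacy project pinned to Python 2, everything else on the global version
project=$(mktemp -d)
mkdir -p "$project/legacy/scripts"
echo "2.7.18" > "$project/legacy/.python-version"
GLOBAL_PYTHON_VERSION=3.8.3

resolve_python_version "$project/legacy/scripts"   # prints 2.7.18
resolve_python_version "$project"                  # falls back to the global version
```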

I’ve recently found a tool called asdf that can replace pyenv, nodenv, rbenv, and other *envs with one tool to rule them all. It provides version management for pretty much any programming language, and I will definitely give it a try next time I need to set up a version manager for a programming language.

pipx

Virtualenv solves many problems with package management in Python, but there is one more use case to cover. If I want to install a Python package globally (because it’s a standalone tool, like glances mentioned before), I have a problem. Installing packages outside of a virtual environment is a bad idea and can lead to problems in the future. On the other hand, if I decide to use a virtual environment, then I need to activate that virtual environment each time I want to run my tool. Not the most convenient solution either.

It turns out that the pipx tool can solve this problem. It installs Python packages into separate environments (so there is no risk that their dependencies will clash). But, at the same time, CLI commands provided by those tools are available globally. So I don’t have to activate anything - pipx will do this for me!

ctop and lazydocker

Both of those tools are useful when you are working with Docker. ctop is a top-like interface for Docker containers. It gives you:

- A list of running and stopped containers

- Statistics like memory usage, CPU, and an additional detailed window for each container (with open ports and other information)

- A quick menu to stop, kill, or show logs of a given container

It’s so much nicer than trying to figure out all this information from docker ps.

And if you think that ctop was cool, wait until you try lazydocker! It’s a full-fledged terminal UI for managing Docker with even more features. My favorite tool when it comes to Docker!

Tools that I don’t use every day

Apart from the tools that I use almost every day, there are some that I collected over the years and find particularly useful for specific tasks. There is something to record GIFs from the terminal (that you can pause and copy text from!), list directory structure, connect to databases, etc.

Homebrew

Homebrew needs no introduction if you are using a Mac. It’s the de facto package manager for macOS. It even has a GUI version called Cakebrew.

asciinema

asciinema is a tool that you can use to record your terminal sessions. But, unlike recording GIFs, it will let your viewers select and copy the code from those recordings!

It’s a great help for recording coding tutorials - not many things are as frustrating as typing long commands because the instructor didn’t provide you with code snippets.

colordiff and diff-so-fancy

I rarely do diffs (compare differences between two files) in the terminal anymore, but if you need to do one, use colordiff instead of the unusable diff command. colordiff colorizes the output, so it’s much easier to see the changes instead of trying to follow all the “<” and “>” signs.

For running git diff and git show commands, there is an even better tool called diff-so-fancy. It further improves how the diff looks by:

- highlighting changed words (instead of the whole lines)

- simplifying the headers for changed files

- stripping the + and - symbols (you already have colors for this)

- clearly indicating new and deleted empty lines

tree (brew install tree)

If you want to present the content of a given directory, tree is a go-to tool to do that. It displays all the subdirectories and files in a nice, tree-like structure:

$ tree .
.
├── recovery.md
├── README.md
├── archive
├── automator
│   ├── Open\ Iterm2.workflow
│   │   └── Contents
│   │       ├── Info.plist
│   │       ├── QuickLook
│   │       │   └── Thumbnail.png
│   │       └── document.wflow
│   └── Start\ Screen\ Saver.workflow
├── brew-cask.sh

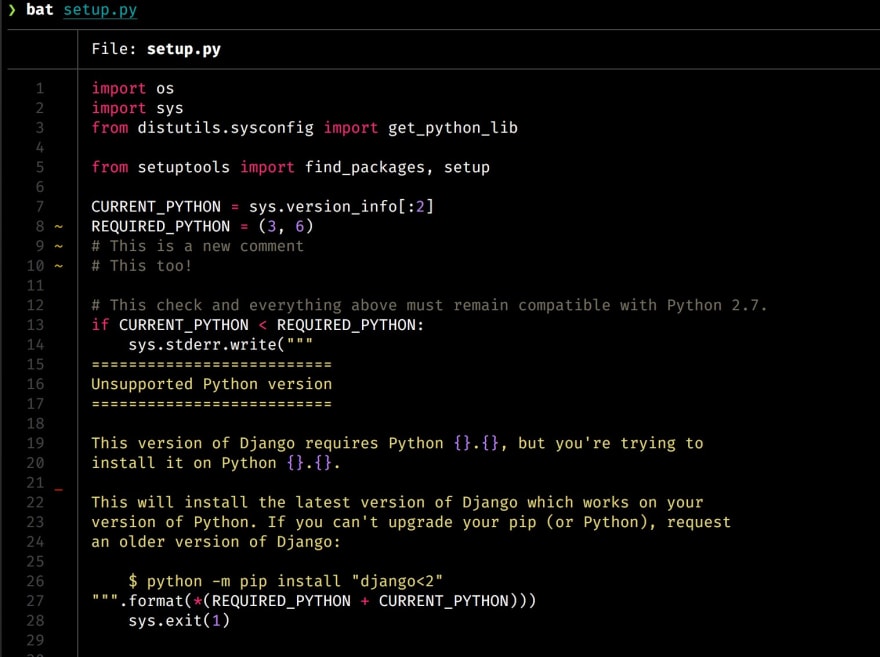

bat

Like cat (command most commonly used to display the content of a file in a terminal) but better.

Adds syntax highlighting, git gutter marks (when applicable), automatic paging (if the file is large), and in general, makes the output much more enjoyable to read.

httpie

If you need to send some HTTP requests and you find curl unintuitive to use, try httpie.

It’s an excellent alternative. It’s easier to use with sensible defaults and simple syntax, returns a colorized output, and even supports installing additional plugins (for different types of authentication).

tldr

Simplified man pages. “man pages” contain manuals for Linux software that explain how to use a given command. Try running man cat or man grep to see an example. They are very detailed and sometimes can be difficult to grasp. So tldr is a community effort to extract the essence of each man page into a brief description with some examples.

tldr works for the most popular software. As I said, it’s a community effort, and there is a slim chance that someone will document an obscure package for you. But when it works, the information it provides usually contains what you are looking for.

For example, if you want to create a gzipped archive of a few files, man tar will overwhelm you with the possible options. tldr tar will instead list some common examples - the second one being exactly the thing that you want to do:

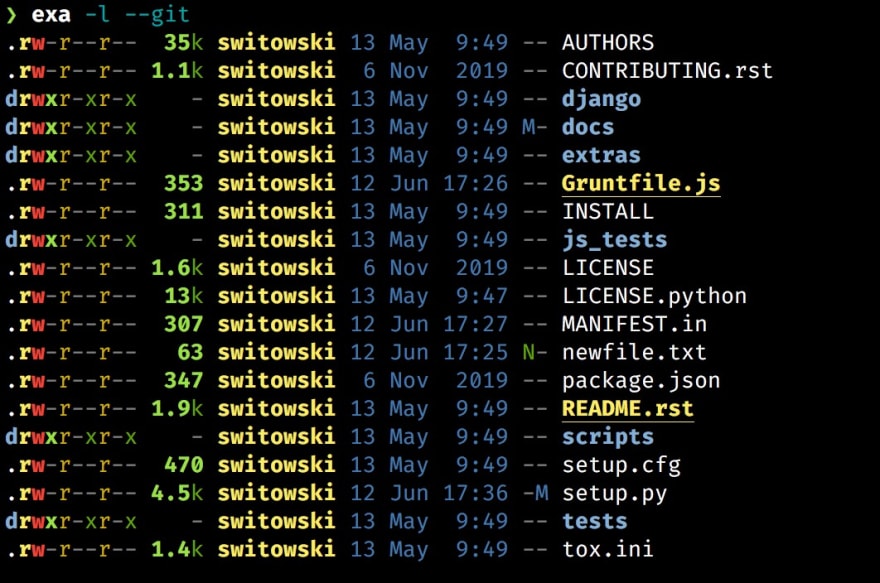
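For reference, the kind of command that such an example boils down to looks like this. It is a self-contained sketch; the file and archive names are made up for the demonstration.

```shell
# Create a gzipped archive from a couple of files, then list its contents.
workdir=$(mktemp -d)
cd "$workdir" || exit 1
echo "first" > notes.txt
echo "second" > todo.txt

tar czf backup.tar.gz notes.txt todo.txt   # c = create, z = gzip, f = archive file name
tar tzf backup.tar.gz                      # t = list the archive: notes.txt and todo.txt
```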

exa

exa can be a replacement for the ls command.

It’s colorful, displays additional information like the git status, automatically converts file size to human-readable units, and all that while staying as fast as ls.

Even though I like it and recommend it, for some reason, I still stick with ls instead. Muscle memory, I guess?

litecli and pgcli

My go-to CLI solutions for SQLite and PostgreSQL. With the auto-completion and syntax highlighting, they are much better to use than the default sqlite3 and psql tools.

mas

mas is a CLI tool to install software from the App Store. I used it once in my life - when I was setting up my MacBook. And I will use it to set up my next MacBook too.

mas lets you automate the installation of software in macOS. It saves you from a lot of clicking. And, since you are reading an article about CLI tools, I assume that - just like me - you don’t like clicking.

I keep a list of apps installed from the App Store in my “disaster recovery” scripts. If something bad happens, I hopefully should be able to reinstall everything with minimal hassle.
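A recovery script along those lines could look like the sketch below. It assumes the list was saved earlier with `mas list > apps.txt`, where each line starts with the numeric app ID. The function names and the sample data are hypothetical, and the versions in the sample are only there for illustration.

```shell
# Pull the numeric App Store IDs out of saved `mas list` output
# (each line starts with the app ID, followed by the name and version).
app_ids() {
    awk '$1 ~ /^[0-9]+$/ { print $1 }' "$1"
}

# Reinstall every app from the saved list. Requires mas to be installed.
restore_apps() {
    app_ids "$1" | while read -r id; do
        mas install "$id"
    done
}

# Example with a hypothetical saved list:
list_file=$(mktemp)
printf '%s\n' '497799835 Xcode (11.5)' '409183694 Keynote (10.0)' > "$list_file"
app_ids "$list_file"    # prints the two IDs, one per line
```

On a fresh Mac, running `restore_apps apps.txt` would then install everything from the list in one go.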

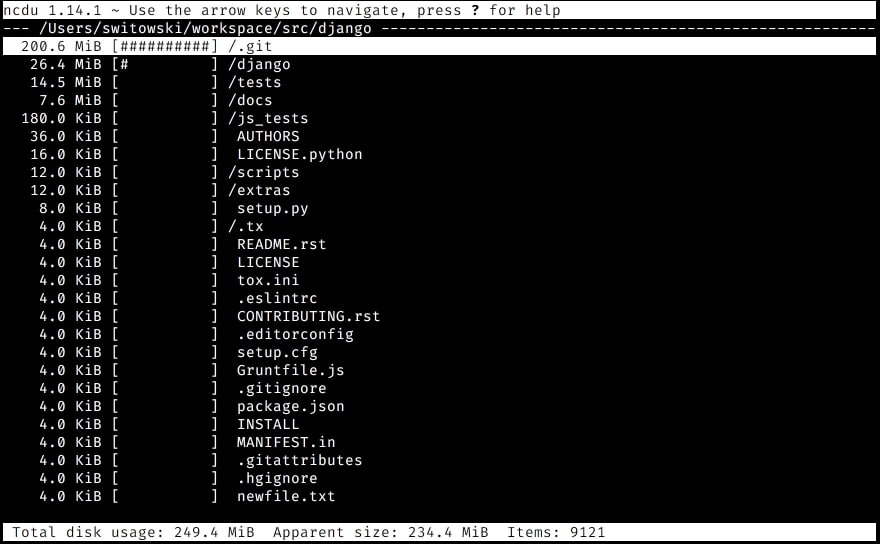

ncdu

Disk usage analyzer for the terminal. Fast and easy to use. My default tool when I need to free some space (“Ohh, I’m sure that 256GB of disk space will be plenty!”).

That’s all folks

It was a long list, but hopefully, you discovered something new today.

Some of the tools, like fd, ripgrep, or httpie, are improved versions of things that you probably already know. The new versions are easier to use, provide better output, and are sometimes actually faster. So don’t cling to old tools only because everyone else is using them.

A common argument for sticking with the “standard Linux tools” that I hear is:

But what if you need to log in to a Linux server and do some work there? You won’t have access to your fancy tools. It’s better to learn how to use tools that come built-in with most Linux distributions.

When was the last time you had to log in to a Linux server? One where you can’t install software, but you had to debug some issues manually? I don’t even remember. Not many people do that anymore. Maybe it’s time to rethink how you do the deployment and move away from manual work into something more scalable?

Don’t let your tool-belt get rusty and add some new CLI tools there!

Many of the tools that I mentioned are related to Python programming. If you want to learn more and see how I use them, I’ve made a free video for the PyCon 2020 conference called "Modern Python Developer's Toolkit".

It's a two-hour-long tutorial on how to set up a Python development environment, which tools to use, and finally - how to make a TODO application from scratch (with tests and documentation). You can find it on YouTube.

Top comments (32)

Ditto, I also have a similar tool collection plus a bunch more and a one-liner install script to have it installed whenever I encounter an empty new server I have to work with: kakulukia.github.io/dotfiles/

Great things to check :)

More for terminal lovers (mac and linux):

The theme for zsh I love most is powerlevel10k: github.com/romkatv/powerlevel10k

Ah, Oh My Zsh has a lot of interesting plugins as well: ohmyz.sh/

Oh-my-zshell is truly amazing if you are on Z shell. Highly recommend!

Nice list. Plenty to try there! What are your thoughts on pipenv? I’m fairly new to python and coming from ruby pipenv seems very natural to me but it doesn’t seem to be widely adopted.

I haven't actually used pipenv. If you asked me 2 months ago, I would say: "don't use it, it's abandoned", as there was no release between 2018 and 2020.04.29. But it seems to be back on track again.

With my clients, I use requirements.txt + pip-tools to pin versions. That's an old-fashioned but pretty bulletproof solution. In my personal projects, I used poetry, and I liked it. But I would not use it in a commercial solution (unless the client insists, which never happened) - mainly because of the bus factor (it's still maintained by a small group of people).

Also, keep in mind that I write applications, not Python packages. For writing a Python package, I would go with Poetry (it takes away a lot of hassle with packaging, etc.)

Ah, OK. I saw a lot of places where people said it was the "right/standard" way of doing things going forward. I guess that could have been wishful thinking. My main use case is writing AWS lambda functions and I like the way I can define dev dependencies separately so they don't get packaged up with the final build and impact startup time. I've never been able to find a way to do that in a requirements.txt file though. Is there an idiomatic way to deal with this? Maybe a separate requirements file?

Your intuition is correct - it can be achieved through separate requirements files.

I usually have 4 of them (2 maintained by hand, 2 generated automatically):

- requirements.in - list of packages that you want to include in the final build, e.g. "django". You can be even more specific: "django<3.0"

- requirements.txt - pinned versions - this will be generated by the pip-tools, so you never touch this file by hand, e.g. "django==2.2.2"

- requirements-dev.in - packages used by developers (not included in the final build), e.g.: "pytest"

- requirements-dev.txt - pinned versions of packages for developers generated by pip-tools.

You add packages to the *.in files, generate the *.txt files with, let's say, a Makefile command, and use them to install packages on the destination servers.

Awesome! Thanks for your help. I'll definitely try this as I feel like I'm swimming against the tide with pipenv.

Thanks for the recommendations. I used thefuck in the past, but when I was switching to fish shell I realized that I never got used to using it. The most common aliases in my terminal are usually 1-3 letter-long ("gco" for "git commit"), I use "z" to move around the filesystem, and fish also adds some autocompletion, so there is little room for mistakes anymore.

xxh looks amazing! That's exactly what I needed so many times in the past! I don't ssh to servers that much anymore (damn you kubernetes taking our jobs!), but I will definitely give it a try!

As a note/warning: fancy prompts are nice, but if you're working on a distributed filesystem like Lustre, GPFS, BeeGFS, NFS, MSDFS, or Panasas, the large volume of silent file operations the prompt engines do behind the scenes combined with the dramatically increased latency of basic filesystem calls like stat() can make them reaaaaaaally slow.

I tried some fancy Powerline stuff on one of our compute clusters once, and it was just too frustrating -- I had to remove it after less than half an hour.

I believe you can run python scripts from a virtual environment without activating the environment by calling the script by its full path. If you have a script named mygreatscript in a virtual environment in ~/Development/env/ you can run it with ~/Development/env/bin/mygreatscript

This was very useful! I learned about several new tools from your post. Thank you!

Very nice! It's not often I see pgcli!

For those interested, I wrote an article a while back about mycli, which is very, very similar but for MySQL databases.

The shell is a very powerful tool! I could not live without it.

The best thing, though, is to learn a bit of bash and write scripts to automate everything boring that we do a bit too often.

Let me add my own project I use quite often, DevDash, to monitor my other side projects / landing pages / github pages from the terminal.

If I were still using MySQL, I would definitely use mycli, those are all awesome SQL shells.

Great job on the DevDash! It looks amazing!

This is great, thanks for sharing. I hadn't heard of exa before, very useful to be able to see git status like this :)

Thanks! delta and broot look great, I will definitely check them out!

I was expecting to just share what I'm using, but instead I'm getting a whole new collection of tools to test 😁 This is awesome!