Summary:

This article describes, step by step, how to append file data to Microsoft Azure Data Lake Gen2 in an on-premise environment.

Pre-requisites:

• A working Microsoft Azure Data Lake subscription and API access.

• A license for Integration Server 10.5 or above.

Contents:

• How to append file data to Microsoft Azure Data Lake Gen2.

Note:

Any coding or configuration examples provided in this document are only examples and are not intended for use in a production system without verification. They are included only to better explain and visualize the possibilities.

Steps:

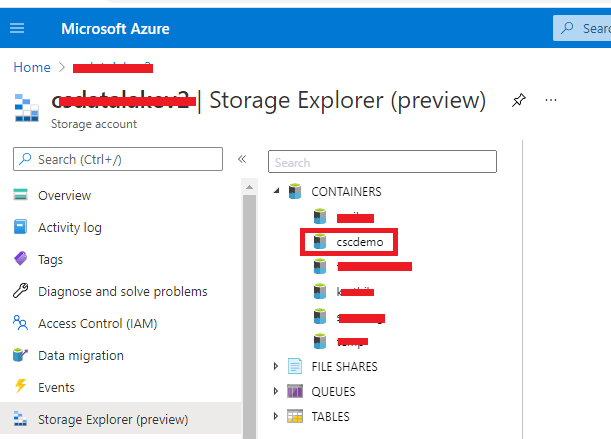

- Microsoft Azure Data Lake Gen2 is a container-based storage system, so we first create a container to hold the data. In this example, the container is named cscdemo.

- Create a ccs (cloud connector service), choose the “Filesystem” service and, within it, the “Create Filesystem” operation.

- Run the ccs, enter “cscdemo” as the filesystem name, then click “OK”.

- A successful response shows the messages below, and the container becomes visible in the Azure UI.

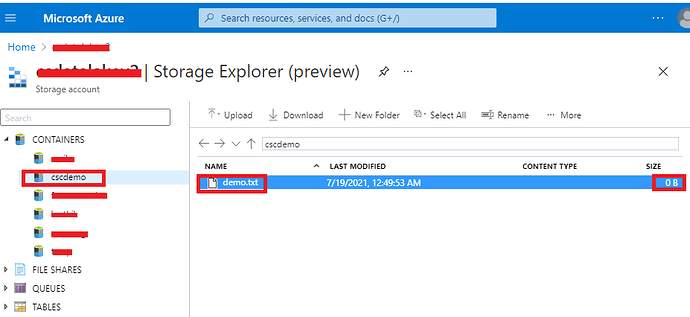
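For readers who want to see what this step amounts to outside the connector, the “Create Filesystem” operation presumably corresponds to the Filesystem - Create call of the Data Lake Storage Gen2 REST API. The sketch below only builds the request; the storage account name `mystorageaccount` is a hypothetical placeholder, and authentication and version headers are omitted.

```python
from urllib.parse import quote


def create_filesystem_request(account, filesystem):
    """Describe the REST call that creates a filesystem (container).

    `account` is a hypothetical storage account name; nothing is sent
    over the network here.
    """
    url = (
        f"https://{account}.dfs.core.windows.net/"
        f"{quote(filesystem)}?resource=filesystem"
    )
    return {"method": "PUT", "url": url}


request = create_filesystem_request("mystorageaccount", "cscdemo")
print(request["method"], request["url"])
```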

- Now that the container is ready for use, we create an empty text file. This empty text file acts as a placeholder: any data we push later will reside in it.

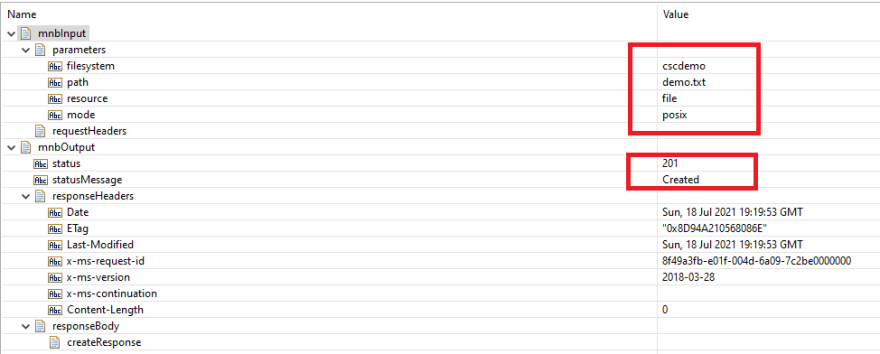

- Create a new ccs, choose the “Path” service, then choose the “Create or Rename a file or Directory” operation.

- Run the ccs and fill in the details: filesystem (the container name), path (the name of the text file to create) and resource (whether the path is a file, i.e. the text file, or a directory, i.e. a folder), then click “OK”.

- A successful API call shows the response below, and the text file or folder appears in the Azure UI.

- As the images above show, the text file was created successfully in the Azure UI. Its size is “0B”, i.e. the file is empty and contains no data.
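As a rough illustration of the parameters entered above, the sketch below builds the equivalent Path - Create request of the Data Lake Storage Gen2 REST API, where `resource` is either `file` or `directory`. The account name `mystorageaccount` and the file name `demo.txt` are hypothetical; the article does not state the actual file name, and authentication headers are left out.

```python
from urllib.parse import quote, urlencode


def create_path_request(account, filesystem, path, resource="file"):
    """Describe the REST call that creates an empty file or a directory."""
    if resource not in ("file", "directory"):
        raise ValueError("resource must be 'file' or 'directory'")
    query = urlencode({"resource": resource})
    url = (
        f"https://{account}.dfs.core.windows.net/"
        f"{quote(filesystem)}/{quote(path)}?{query}"
    )
    return {"method": "PUT", "url": url}


# Hypothetical names: "mystorageaccount" and "demo.txt" are placeholders.
request = create_path_request("mystorageaccount", "cscdemo", "demo.txt")
print(request["method"], request["url"])
```

A file created this way is empty, which matches the “0B” size shown in the Azure UI.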

- Now we append some data to this text file. Create a new ccs, choose the “Path” service, then choose the “Append files” operation. Click “Finish”, select the “position” parameter as well, then save the ccs.

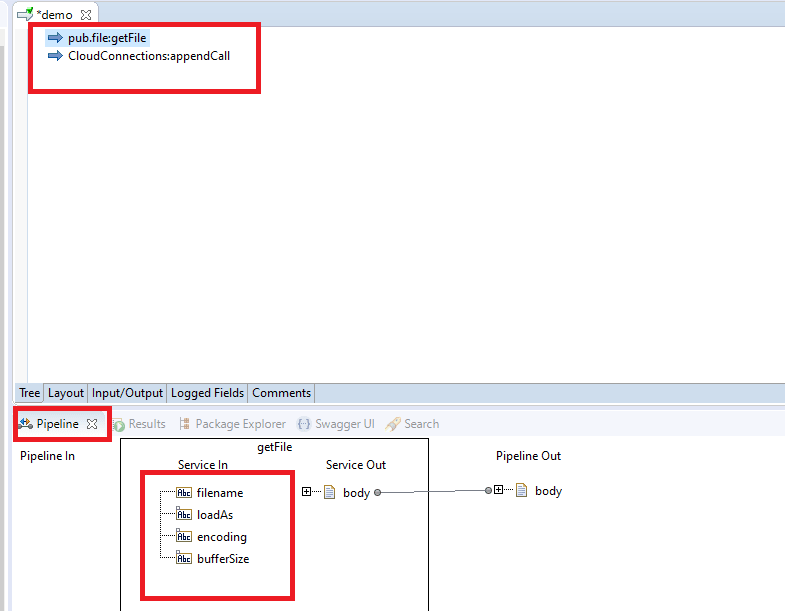

- Now create the demo flow service as shown below.

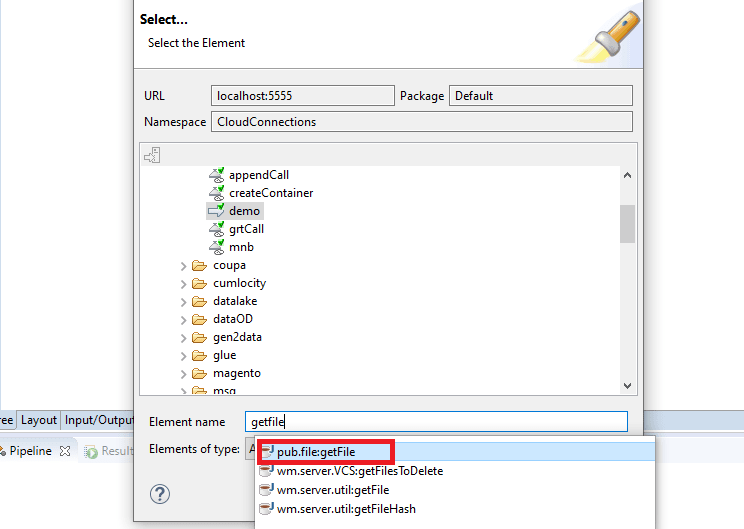

- Inside this demo flow service, invoke the “pub.file:getFile” service in order to read a text file from your local system.

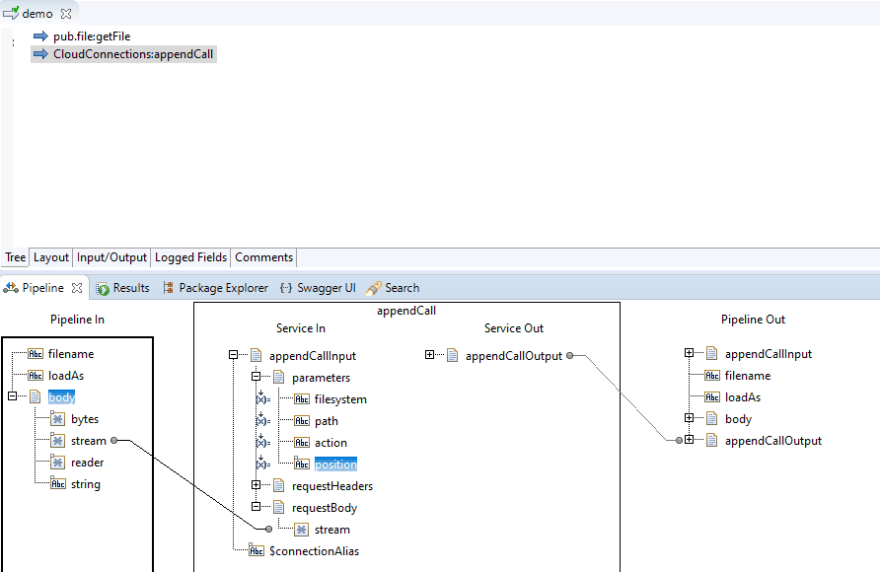

- Now drag and drop the “Append file” ccs created earlier into the flow. Then click the pipeline tab and choose the “getFile” service.

- Create a text file on the local system that contains five letters, e.g. “Hello”, then map the path of this local file to the “filename” parameter of the getFile service so that it reads the file. Example: C:\licenses\cscdemo.txt (a local text file created for this demo, containing the five letters “Hello”).

- Fill in the “filename” and “loadAs” parameters as follows. filename: C:\licenses\cscdemo.txt, loadAs: stream.

- Apply the mapping as shown below. Fill in the parameters of “appendCallInput” as follows:

Filesystem: the container name created earlier (cscdemo).

Path: the name of the text file created earlier.

Action: append.

Position: 0 (the file is empty, so the data is appended from the beginning of the file)

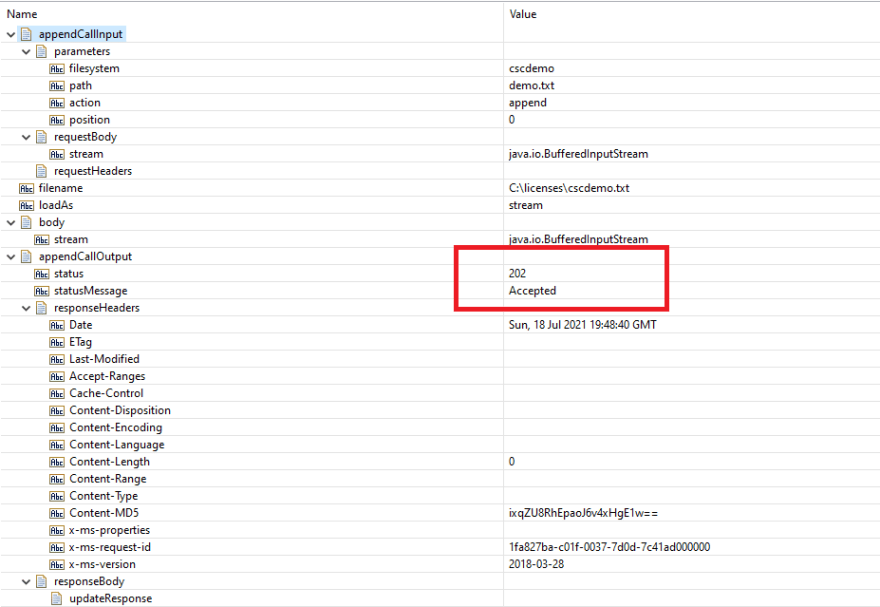
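The four parameters mapped above line up with the Path - Update call of the Data Lake Storage Gen2 REST API, with the file content sent as the request body. The following is a minimal sketch of that request, not the connector's actual implementation; `mystorageaccount` and `demo.txt` are hypothetical names, and authentication headers are omitted.

```python
from urllib.parse import quote, urlencode


def append_request(account, filesystem, path, data, position=0):
    """Describe the REST call that uploads `data` at byte offset `position`.

    The uploaded bytes stay uncommitted until a later flush call.
    """
    query = urlencode({"action": "append", "position": position})
    url = (
        f"https://{account}.dfs.core.windows.net/"
        f"{quote(filesystem)}/{quote(path)}?{query}"
    )
    return {
        "method": "PATCH",
        "url": url,
        "headers": {"Content-Length": str(len(data))},
        "body": data,
    }


# Hypothetical names; b"Hello" stands in for the content of the local text file.
request = append_request("mystorageaccount", "cscdemo", "demo.txt", b"Hello")
print(request["method"], request["url"])
print(request["headers"])
```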

- Once all the mappings and data have been applied, run the demo flow. A successful response looks like the one below.

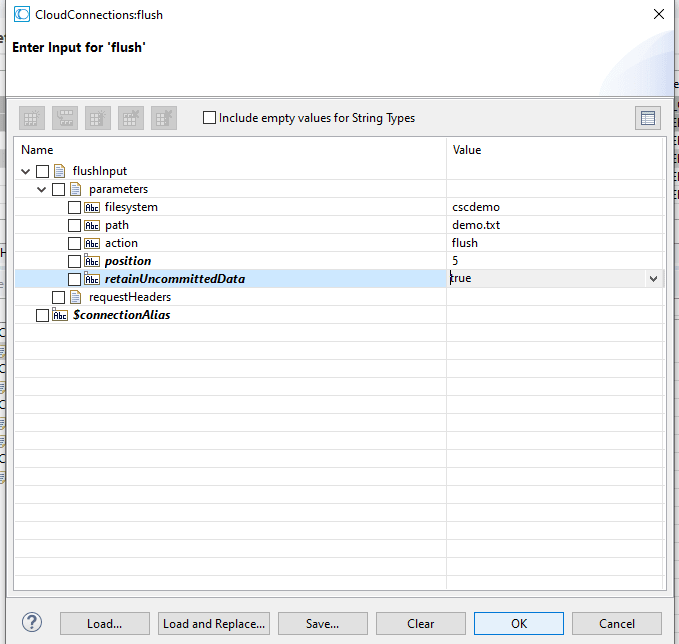

- The data has now been sent to Azure Data Lake, but it is not yet committed to the text file. To commit it, we need to perform the flush operation. Create a ccs, choose the “Path” service, then choose the “Flush Data, Set ACL and properties” operation. Select the additional parameters “position” and “retainUncommitedData”.

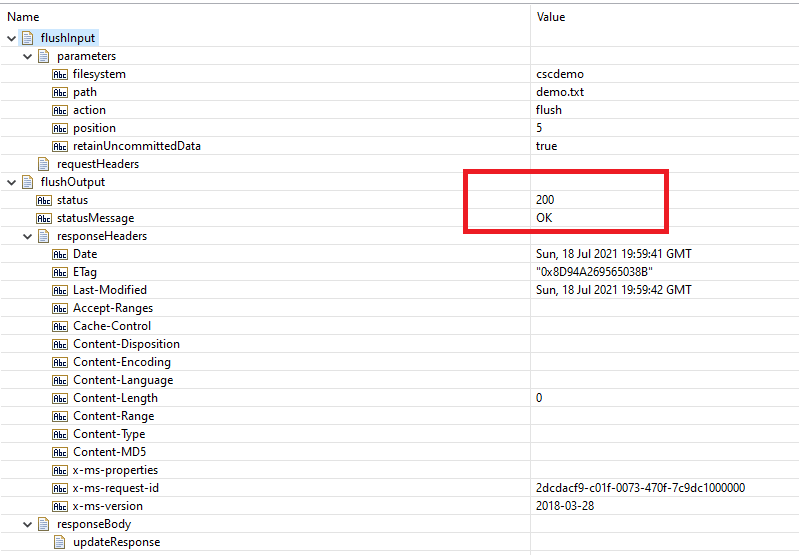

- Run the ccs with the correct parameter values:

Position: 5 (our local text file contains the five letters “Hello”, i.e. 5 bytes)

retainUncommitedData: true

A successful call shows the response below.

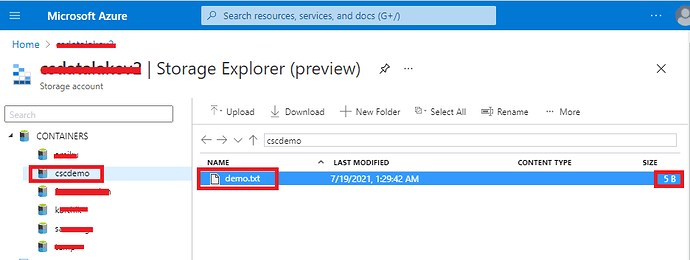

- Now check the file size in the Azure UI: it is “5B”, which means the data has been appended successfully.
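The position values used in this walkthrough (0 for the append, 5 for the flush) follow a simple rule: each append starts at the byte offset where the previous one ended, and the flush position equals the total number of bytes written. The helper below is a hypothetical illustration of that arithmetic and is not part of the connector.

```python
def plan_upload(chunks):
    """Return (append_positions, flush_position) for a list of byte chunks."""
    positions = []
    offset = 0
    for chunk in chunks:
        positions.append(offset)  # this chunk starts where the previous one ended
        offset += len(chunk)
    return positions, offset      # flush position = total bytes appended


# Single chunk, as in this article: append at 0, flush at 5.
print(plan_upload([b"Hello"]))

# Two chunks: the second append would start at offset 5.
print(plan_upload([b"Hello", b", world"]))
```

Positions are counted in bytes, so for text containing non-ASCII characters the encoded length should be used rather than the character count.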
