Author: Ville Tirronen

Why is our company named Typeable? What does it even mean?

Types are one of the most essential concepts for software developers. They can also be one of the most confusing and multifaceted things in our field. And they are an endless source of arguments on the Internet. Thus, calling a company Typeable might seem like taking a stance. I believe many of the arguments around this central concept are based on different notions of the term and not so much on true disagreement. So, let's discuss a bit what the types even mean (to us anyway) and why software developers talk (and disagree) so much about them. And why Typeable is a great name after all.

Basic meaning of the word "Type"

When computer scientists or programmers use the word "type", what do they mean by it? In the ordinary sense, the word "type" is used in sentences like "he is not my type" or "there are different types of skis". Its synonyms include words like "kind", "sort", "class", "category", "family" etc. (funnily, all these are also keywords in some programming languages). We use this word to express that some things are unlike some other things, usually in some fundamental way. And that, I believe, is also exactly the origin of this term in software development.

We use the word 'type' to differentiate one thing from the other

Software engineers talk about "types" a lot more in their day to day work than they do outside of their work. And it seems that the concept of a "type" is somehow much more essential to programming than to other trades. While civil engineers might occasionally be talking about different types of cement, programmers seem to find daily uses for the word. Why is that?

Why do software developers in particular talk about types?

As you have probably heard, or discovered, everything that is processed with a computer is represented with only sequences of zeroes or ones. While this is not the whole truth, it gives the basic idea of what is going on inside a computer. To give a concrete example, the number 1 is often represented with the bit sequence 00000001 while the number 2 is 00000010 and 3 is 00000011 and so on. Similarly, the letter a is often encoded as 01100001 and b as 01100010.

So, to do proper processing, one has to know how to interpret these sequences of bits. If you are careless and read 01100001 as a number, you will get 97 instead of an a. They are different types of things.

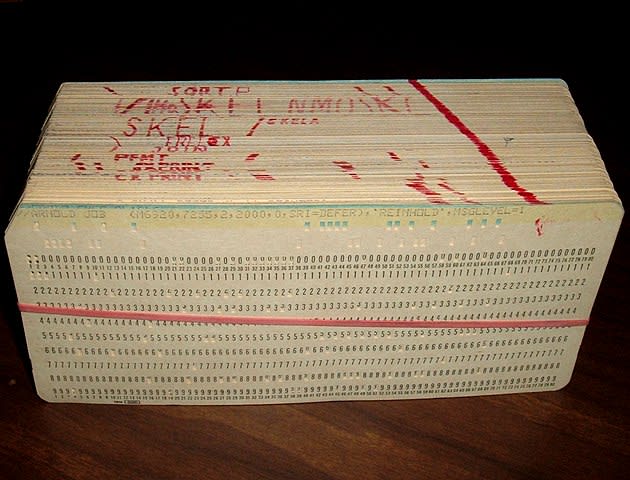

However, after punch-cards went out of fashion (mostly because they were insane pain in the nether regions to use) computer programmers have not really worked directly with bits. Instead, we jumped straight to talking about different kinds of numbers, memory addresses, and strings of characters (all automatically converted to the bits by, and for, the computer). Thinking about things in the memory of a computer as different types of things, like numbers or characters, is much more efficient than thinking about bits. If nothing else, types allow us to have discussions about how to program: you can tell the person next to you: "This routine operates with numbers and you cannot use it with texts".

A deck of punched cards comprising a computer program. Written in PL/1 for the IBM 360. ArnoldReinhold, CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0, via Wikimedia Commons

Further, even if we conceptualize computers working with basic types like numbers and characters etc., we'll find that we might have, for example, different kinds of numbers. One number might be about money on the client account, while the other number represents an hourly fee for some service. These two are of different kinds of things and it does not do to mix them up. Thinking about "types" of things is an inescapable fact in building programs.

Types and Type Checking

Anyone who has tried programming has probably found out that it is really easy to make mistakes when writing programs. While there are lots of ways for programs to go wrong, one big class of mistakes arises from confusing a thing of one type with a thing of another type. Instead of subtracting a value from a number representing a customer's balance, you might subtract the number from the memory address where the customer balance is stored.

At some point someone decided that it would be better to have computers check that this particular mistake would never happen, that is, they enabled computers to do type checking to verify that the most elementary mistakes can't happen. This turned out to be a great idea, and it is pretty hard to find a programming toolkit that doesn't check types in one form or another.

How do we make types checkable? There are two mainstream approaches for computer-based type checking. These are often called dynamic and static typing. We'll give a very brief description of both, not going into details, as a lot has already been said about static and dynamic typing.

A dynamic view

Dynamic typing arises from the idea that it is a bit silly to have the same sequence of bits mean two different things. Conceptually, we could encode an a as 00 01100001 and the number 97 as 01 01100001 instead of representing both with the sequence 01100001 and leaving it ambiguous which one we mean. By doing so, we enable the computer to check the first two bits before executing any routine. If the bits are 00 then we have a letter and if it says 01 then the thing is a number. And in case of a mixup, the computer can safely stop the program from causing a disaster by terminating the processing.

This makes programming safer. If a software developer makes a programming mistake, the programs can now indicate that they have failed to compute a sensible result instead of returning a plausible looking, but the wrong result. The signal indicating that two different things have been mixed up is called a type error.

A static view

Another way to check types is based on inspecting the program code. Instead of tagging values in the memory of a computer with information about their types we attempt to analyze the program code. That is, we look at what the developer has written and attempt to determine whether executing that code would lead to a type error. This is certainly harder to do than doing the same thing with runtime checks, but it offers a seeming advantage of knowing about the errors before the software is even executed.

The inherent problem with the static approach is that if the code can be arbitrarily complex, the process of deciding whether there is an error is very hard or even impossible (see Rice's theorem). Thus, the programmer usually needs to help the process by annotating the code, explicitly naming the types, or by following harder constraints on what the code can be like. Usually, one tends to give up on things like storing both texts and numbers in the same variable or list.

The pros and cons of dynamic typing

From the get-go it seems that static type checking has a great advantage over the dynamic. It can report errors before the program is even executed! That is, you don't need to wait for a user to try invoicing a customer to see if your invoicing routine works at all.

The basic mitigation is, of course, having the developers run their programs before they are given to the actual clients. That is, to try and see if programs do stop on error instead of computing the desired result.

Testing programs well is a hard task. Even simple things can have billions of input value combinations and rarely anything can be fully tested. That is not to say that there aren't occasional serious issues that arise from unexpected changes in types.

However, dynamic typing also offers advantages. Most obviously, it can be much more flexible than the static approach. Static checking, due to being a harder problem to solve, constraints the programmer to write only such programs that can be shown correct by whatever routine is checking them. Dynamic typing has no such limitation: anything you can program is fair game if it does not end up mixing types badly. Further, it is common for dynamic systems to be more resilient to certain changes. Many, if not all, dynamic languages follow a principle of "duck typing": you are free to change which types of things you pass around in the program if those said types happen to support the same routines as the previous types you used.

The pros and cons of static typing

There is only one significant con about static type checking. It is difficult. Surely, routines for doing it have existed for decades, but still, it commonly needs the software developer to pay attention to it while writing programs. There are often all kinds of (type) annotations, like "this variable holds a Number" or "this is a list containing Letters". Also, some things can be either hard or impossible to express. For example, a process that stores either a letter or a number in the same memory address depending on user input is usually not worth the bother of expressing.

Dynamic typing with testing seems nearly equivalent in safety and also perhaps superior in ergonomics, doesn't it? But what are the advantages of static typing then? Without delving into advanced uses of static types, there are some. For one, they are better at guaranteeing some simple conditions than dynamic types. If the program typechecks, we know that some things hold for all inputs without needing to devise tests. For another, there seems to be some cognitive advantage in forcing programmers to explicitly write what types of things they are talking about.

But which is better?

We are now in the argument territory! Both static and dynamic systems have keen proponents who seemingly love to fight about types on the internet. "It is unethical not to use static typing" or "Static typing is for weak minds" they say. "Why would you wait for the program to run to know it is wrong?", "Why would you spend ages convincing the type checker that the program is correct?". But we're not here to argue.

We're not arguing here, but reasoning about types!

Many people have tried to empirically prove one approach to be better than the other, but thus far there seem to be no convincing results for either system being better. Looking at the results of these studies suggests that if one way is better than the other, it is not very much better.

But there is something different about static types that makes some people pursue them more than seems empirically practical.

The naive connection between static and dynamic types

Up to this point, we have discussed types such as numbers and pieces of text, and their only use has been to allow us to name sets of things and alert us on their inconsistent use.

However, most sensible programming languages, whether they are dynamically or statically typed, have facilities for the developer to create their own types for modeling whatever domain they are working with. Like, we aren't thinking about bits when we use numbers, we aren't thinking so much about numbers and letters when we model things like Customer or Order. We can tell the computer that a Customer is represented with two pieces of text and a number and then pass that around as a unit. Like we do when we talk about what our program does: we don't go into technical implementation details, but try to be more declarative.

Likewise, we can often define types that represent the concept of "one of the following things". For example, a customer invoice may be either "valid", "outdated", or "voided (on some date)". A single type represents all these alternatives. Similarly, a routine may return an "error as text" or a "result as a number" and we use a single type to model this. For such types, there exists some run-time metadata that allows the computer to determine which particular case they represent. Types that are "either this or that" are sometimes called sum-types.

As it happens, the sum types provide an interesting insight into the connection between static and dynamic types. Essentially, they allow statically typed systems to represent dynamically typed behavior! For example, if we want to create a routine that can accept either a type Customer or type Address we can simply create a type for containing either of those and have our routine accept that new type. We have no need for dynamic typing for this.

There is a thought experiment in which we use a static language and create a single sum type for representing all of the things found in some dynamic language. Then we can build the same routines as we could in the dynamic language with our static language!

From point of view of static programming languages, dynamically typed languages have only one type: a huge sum of all possible kinds of things in the language. There are no dynamically typed languages, there are only languages that happen to have one static type.

The corollary is of course that statically typed programming languages protect us from errors only up to a point we have applied the type checking to. It is possible to effectively bypass type checking entirely or in parts, and for both good and bad outcomes.

This point of view is perhaps taken to absurdity, but it points out that in one way, there is something more to static types than there is to dynamic types and that in some sense, dynamic typing and static typing talk of subtly different things. Kind of the same but not exactly the same.

But what are static types?

Up to this far, we talked about the type as an attribute of data. That is, there is some sequence of zeroes and ones that is the "thing with the type". This 01100001 is a Number. But then, when we started talking about static type checks we suddenly said that we do type checking without running the program and without even having any data. If types are property of data, what are we checking here? Certainly not types, since if there is no data, there can be no type!

We could be seen to talk about types in sense of "the list customers which, when the program is executed, will contain data with type Customer". But that is a bit of mental gymnastics and certainly not how we seem to be talking about type checking. Instead, we straight out say that customers is a list containing Customer values. We instead talk about the symbols on the screen: "See that expression over here, that is of type Int".

So, the things, in static lingo, that have types are actually not data, but pieces of program code. The expression 1 + 1 is what has the type Int and not as much the of the bit sequence 0000010 stored in the computer's memory. In fact, there are things with types that are never stored in the memory of the computer. For example, constant expressions like 1 + 1 are often eliminated before the program is executed. Moreover, given a sufficiently rich system of types, we can define types like Void (in Haskell sense), which have no run-time representation at all but still help us to express some things about our code.

So, in one very real sense, dynamic typing and static typing, talk of subtly or not so subtly different things.

Mathematics and static types

Software developers are not the only people who talk about types. Mathematicians and modern statically typed languages have both independently grown towards the mathematical meaning of types as well as have drawn great inspiration from them.

Mathematicians precessed computer scientists with a concept of (a static) type for some hundred years. Around the rise of the 20th-century mathematicians started to pursue theories that could serve as solid foundations for mathematics. And during those pursuits, we got probably the first mention of a type that matches the software developers' view of the thing we have in static typing.

There is a nice anecdote about the invention also. Bertrand Russell, one of the mathematicians in pursuit of foundations for their field, accidentally discovered a flaw or paradox in one of their attempts at foundational theory. A Mathematical Set is a thing that either contains or does not contain some other things. You can have a set of basic colors ({red, green, blue}) or even a set of all sets. And by accident, you might also invent a set of all sets that don't contain themselves. Except, that if this set contains itself, it shouldn't and vice versa. A Paradox!

To word this out in non-mathematical terms, consider an island where there is a barber and villagers. Barber shaves everyone who doesn't shave themselves. Does the barber shave himself? Yes if no and no if yes. Or is this just a nonsensical wordplay? Yes, but if you are developing the foundations for all mathematics, being able to write out such nonsense with the said foundations is probably not a very good thing.

Russell's solution to this was to essentially say, "Look, barbers and villagers are different types of things and what you said is nonsense". Similarly, he invented a system of types for sets, where each set had a rank. Each set could only contain sets of smaller rank or the formula was non-sense.

Observe the similarity to static types. Here look at simply what is being said, instead of what it means. We don't need to know the truth or falsity of the statement to determine sensibility. Likewise, software developers look at the written code instead of data and results of program execution to determine whether it is well typed and worth executing.

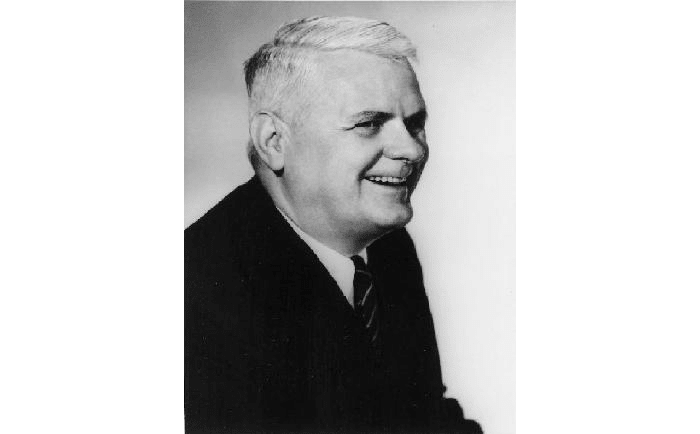

Alonzo Church @ University of Berkeley

Russell's types have little to do with programming, but there is another concept of types invented in the mid-century, which does. Logician Alonzo Church accidentally discovered a minimal computational system that was seemingly able to express all imaginable computations. This system also had similar paradoxes making it unsuitable as a mathematical foundation (software developers today would call those just "infinite loops" or infinite recursion instead). The solution was the same: define a type for each expression in the formula and then inspect it (textually) and from that, determine whether the system is "sensible".

What is interesting about Church's system was that it eventually was turned into an actual, executable by the computer, programming language Lisp. Occurring around 1958 this made Lisp the second (or third) oldest programming language, still in use nowadays. Lisp is a dynamically typed language, but computer scientists must have had an inkling of the mathematical versions of the concept of "type" from very early on even if they did not appear directly in programming languages for some time.

Type theory is an ongoing mathematical pursuit with some deep and interesting results. Some of which occasionally end up in programming languages (or are independently discovered in the context of programming).

Conclusion

When considering the dynamic vs. static typing discussions on the internet, it occasionally seems that one has stepped into the territory of absurdness. One party is talking about types as properties of some expressions in their text editor and the other party is talking about types as properties of data. Some are doing math. No wonder there are arguments! And these arguments are made worse with the reasonable doubt that static and dynamic type checking are too different for rational comparison. For example, I can write Python code with dynamic type checking without significant problems. But I doubt I would be able to write any Haskell code without static type checking. It makes no sense to compare dynamic and static languages and hope to understand if static or dynamic typing is a better choice (for some purpose).

And what is discussed here is certainly not all when it comes to types in programming languages! In F# there are "type providers" that go beyond inspecting just the program code and draw-type information from external sources like a database. For example, you can effectively say: "Hey compiler, go look up what are the possible values for the database field X and call that set type T". There are Gradual types, which aim to build a system where dynamic and static types form a smooth continuum where developers can alternate between one or another, depending on their needs. There are languages like Isabel where you program to prove mathematical theories and languages like Idris where you do proofs to program. Types get drawn into all kinds of unexpected shapes and are used everywhere.

So, why is Typeable a good name? Simply because it alludes to a great many things and a source of interest that is central to software development.

Top comments (0)