NodeTskeleton is a Clean Architecture based template project for NodeJs, written in TypeScript, that you can implement with any web server framework or even any user interface.

The main philosophy of NodeTskeleton is that your solution (domain and application, the “business logic”) should be independent of the framework you use. Your code should therefore NOT BE COUPLED to a specific framework or library; it should work with any framework.

The design of NodeTskeleton is based on Clean Architecture, an architecture that allows you to decouple the dependencies of your solution, even without needing to think about the type of database, providers or services, the framework, libraries or any other dependencies.

NodeTskeleton has the minimum tools necessary for you to develop the domain of your application. You can even decide not to use the included tools (you can remove them) and use the libraries or packages of your choice instead.

Now it has CLI functions 📟

Now you can set it up very easily. You only have to run the following command in a root directory in your terminal, and run-tsk will do it all for you behind the scenes:

In the following command, replace my-awesome-project with your own project name.

$ npx run-tsk setup project-name=my-awesome-project

$ cd my-awesome-project

$ npm run dev

With the previous feature and the next one you can easily use TSKeleton, and even create a use case in a matter of seconds and then add your business logic. Yes, it's that simple.

$ run-tsk help

run-tsk CLI available commands:

> validate

- The previous command will validate if the current directory is a root TSK project.

> setup project-name=<project-name-value>

- The previous command will setup the TSKProject ready for you to use it.

- Example: run-tsk setup project-name=<your-awesome-project-name>

> add-use-case api-name=<apiName> use-case=<useCaseName> endpoint=<endpoint> http-method=<METHOD>

- The previous command will create a new UseCase into the project. Arguments can be sent in any order.

- Example: run-tsk add-use-case api-name=auth use-case=Logout endpoint=/v1/auth/logout http-method=GET

Aliases: add-uc

> alias arg=<argName>

- The previous command will show available aliases for the sended argument name.

- Example: run-tsk alias arg=api-name

Philosophy 🧘🏽

Applications are generally developed to be used by people, so people should be the focus of them.

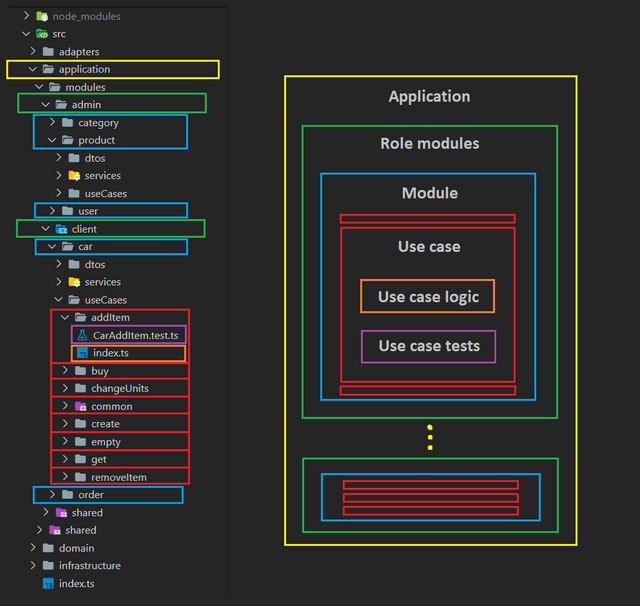

For this reason user stories are written: stories that tell us about the type of user (role) and the procedures that the user performs in a part of the application (module). This is important information for structuring the solution of our application. And in practice, how does this look?

The user stories must be in the src/application path of our solution. There we create a directory called modules, inside it a directory for each role (for example customer, operator, seller, admin, ...), and inside each role a directory for the corresponding use case module (for example product, order, account, sales, ...), so that in practice a module lives under src/application/modules/<role>/<module>.

Observations 👀

If your application has no roles, then there's no mess, it's just modules. ;)

But taking into consideration that if the roles are not yet defined in your application, the best option would be to follow a dynamic role strategy based on permissions and each use case within the application (or use case group) would be a specific permission that would feed the strategy of dynamic roles.

Note that you can repeat modules between roles, because a module can be used by different roles, because if they are different roles then the use cases should also be different, otherwise those users would have the same role.

This strategy makes the project easy to navigate, easy to change, scale and maintain, which boils down to good mental health, besides you will be able to integrate new developers to your projects in a faster way.
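The dynamic role strategy mentioned above can be sketched roughly as follows. This is only an illustration under assumptions: the `UseCasePermission` names and the `DynamicRole` class are hypothetical and are not part of NodeTskeleton; the idea is simply that each use case maps to one permission and a role is a changeable set of them.

```typescript
// Hypothetical permission names, one per use case (not part of NodeTskeleton).
type UseCasePermission = "product.create" | "product.list" | "order.create";

// A dynamic role is a named set of permissions that can change at runtime.
class DynamicRole {
  private readonly permissions = new Set<UseCasePermission>();

  constructor(readonly roleName: string, initial: UseCasePermission[] = []) {
    initial.forEach((permission) => this.permissions.add(permission));
  }

  grant(permission: UseCasePermission): void {
    this.permissions.add(permission);
  }

  revoke(permission: UseCasePermission): void {
    this.permissions.delete(permission);
  }

  // A use case is allowed only if the role holds its permission.
  can(permission: UseCasePermission): boolean {
    return this.permissions.has(permission);
  }
}

const seller = new DynamicRole("seller", ["product.list", "order.create"]);
const canCreateBefore = seller.can("product.create"); // false
seller.grant("product.create");
const canCreateAfter = seller.can("product.create"); // true
```

With this shape, defining a new role later does not require moving any use case; it only requires granting a different set of permissions.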

Included tools 🧰

NodeTskeleton includes some tools in the src/application/shared path which are described below:

Errors

It is a tool for separating controlled from uncontrolled errors. It allows you to throw application errors according to your business rules, for example:

/*

** context: it's the context where the error will be launched.

*/

export class ApplicationError extends Error {

constructor(

readonly context: string,

readonly message: string,

readonly errorCode: number | string,

readonly stack?: string,

) {

super(message);

this.name = `${context.replace(/\s/g, StringUtil.EMPTY)}_${ApplicationError.name}`;

this.errorCode = errorCode;

this.stack = stack;

}

// ...

}

It is important to note that the name of the CONTEXT is concatenated with the name of the ApplicationError class to better identify the controlled errors.

It's very useful for observability tools in order to filter out real errors from those we are controlling.

The straightforward way to use it is as follows:

throw new ApplicationError(

this.CONTEXT,

resources.get(resourceKeys.ERROR_TO_CREATE_SOMETHING),

applicationStatusCode.BAD_REQUEST,

JSON.stringify(error),

);

Or, if execution is within the scope of your UseCase, you can use the error control function of the BaseUseCase class:

The dirty way:

if (!someCondition) { // Or any validation result

result.setError(

this.resources.get(this.resourceKeys.PROCESSING_DATA_CLIENT_ERROR),

this.applicationStatus.INTERNAL_SERVER_ERROR,

);

this.handleResultError(result);

}

The clean way one:

// In the UseCase context in Execute method

const user = await this.getUser(result, userId);

if (result.hasError()) return result;

// In the UseCase context, outside of the Execute method

private async getUser(result: IResult, userId: string): Promise<User> {

const user = await this.userRepository.getById(userId);

if (!user) {

result.setError(

this.resources.get(this.resourceKeys.USER_CAN_NOT_BE_CREATED),

this.applicationStatus.INTERNAL_CONFLICT,

);

}

return user;

}

The clean way two:

// In the UseCase context in Execute method

const { value: userExists } = await result.execute(

this.userExists(user.email?.value as string),

);

if (userExists) return result;

// In the UseCase context, outside of the Execute method

private async userExists(email: string): ResultExecutionPromise<boolean> {

const userExists = await this.userRepository.getByEmail(email);

if (userExists) {

return {

error: this.appMessages.getWithParams(

this.appMessages.keys.USER_WITH_EMAIL_ALREADY_EXISTS,

{

email,

},

),

statusCode: this.applicationStatus.INVALID_INPUT,

value: true,

};

}

return {

value: false,

};

}

The function of this class will be reflected in your error handler as it will let you know when an exception was thrown by your system or by an uncontrolled error, as shown below:

handle: ErrorHandler = (

err: ApplicationError,

req: Request,

res: Response,

next: NextFunction,

) => {

const result = new Result();

if (err?.name.includes(ApplicationError.name)) {

console.log("Controlled application error", err.message);

} else {

console.log("No controlled application error", err);

}

};
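To make the naming rule concrete, here is a minimal, self-contained sketch of how the composed error name lets a handler tell controlled errors apart. The class below is a simplified stand-in for the ApplicationError shown earlier, and `isControlledError` is a hypothetical helper introduced only for this illustration.

```typescript
// Simplified stand-in for the ApplicationError class (assumption: only the naming rule is kept).
class ApplicationError extends Error {
  constructor(
    readonly context: string,
    message: string,
    readonly errorCode: number | string,
  ) {
    super(message);
    // Context without whitespace + class name, e.g. "LoginUseCase_ApplicationError".
    this.name = `${context.replace(/\s/g, "")}_${ApplicationError.name}`;
  }
}

// Hypothetical helper: true when the error was thrown on purpose by the application.
function isControlledError(err: unknown): boolean {
  return err instanceof Error && err.name.includes(ApplicationError.name);
}

const controlled = new ApplicationError("Login UseCase", "Invalid credentials", 401);
const uncontrolled = new TypeError("Cannot read properties of undefined");

const controlledIsDetected = isControlledError(controlled); // true
const uncontrolledIsDetected = isControlledError(uncontrolled); // false
```

An observability filter could use the same name check to separate expected business errors from real failures.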

Which one to use? Feel free, it's a matter of colors and flavours; in fact you can develop your own strategy. But if you prefer The clean way one, keep the following recommendations in mind:

- Never, but never, use the setData or setMessage methods of the result inside functions outside the UseCase Execute method context. These methods can only be called there (inside the UseCase Execute method).

- Outside the UseCase Execute method context, you must only use the result methods that manage errors.

Why? It's related to side effects. I normally use The clean way one and I have never had a problem related to that, because I have been careful about it.

Locals (resources)

It is a basic internationalization tool that will allow you to manage and administer the local messages of your application, even with enriched messages, for example:

import resources, { resourceKeys } from "../locals/index";

const simpleMessage = resources.get(resourceKeys.ITEM_PRODUCT_DOES_NOT_EXIST);

const enrichedMessage = resources.getWithParams(resourceKeys.SOME_PARAMETERS_ARE_MISSING, {

missingParams: keysNotFound.join(", "),

});

// The contents of the local files are as follows:

/*

// en:

export default {

...

SOME_PARAMETERS_ARE_MISSING: "Some parameters are missing: {{missingParams}}.",

ITEM_PRODUCT_DOES_NOT_EXIST: "The item product does not exist.",

YOUR_OWN_NEED: "You are the user {{name}}, your last name is {{lastName}} and your age is {{age}}.",

...

}

// es:

export default {

...

SOME_PARAMETERS_ARE_MISSING: "Faltan algunos parámetros: {{missingParams}}.",

ITEM_PRODUCT_DOES_NOT_EXIST: "El item del producto no existe.",

YOUR_OWN_NEED: "Usted es el usuario {{name}}, su apellido es {{lastName}} y su edad es {{age}}.",

...

}

...

*/

// You can add enriched messages according to your own needs, for example:

const yourEnrichedMessage = resources.getWithParams(resourceKeys.YOUR_OWN_NEED, {

name: firstName, lastName, age: userAge

});

//

To use it in any UseCase you can do something like:

result.setError(

this.appMessages.get(this.appMessages.keys.PROCESSING_DATA_CLIENT_ERROR), // Or this.appMessages.getWithParams(...)...

this.applicationStatus.INTERNAL_SERVER_ERROR,

);

And you can add all the parameters you need with as many messages in your application as required.

The resource files can be local files in JSON format or you can get them from an external service.
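As a rough illustration of how `{{param}}` placeholders can be resolved, here is a minimal sketch. It is not the implementation of the tool included in NodeTskeleton: `SimpleResources`, its constructor and `setLanguage` are hypothetical names used only to show the idea of per-language message tables with parameter replacement.

```typescript
// Hypothetical, simplified resource store; the real tool has its own API.
type Messages = Record<string, string>;

class SimpleResources {
  constructor(
    private readonly locals: Record<string, Messages>,
    private language: string,
  ) {}

  setLanguage(language: string): void {
    this.language = language;
  }

  get(key: string): string {
    const text = this.locals[this.language]?.[key];
    if (text === undefined) {
      throw new Error(`Resource "${key}" not found for language "${this.language}"`);
    }
    return text;
  }

  // Replaces every {{name}} placeholder with the matching entry in params.
  // Unknown placeholders are left untouched.
  getWithParams(key: string, params: Record<string, string>): string {
    return this.get(key).replace(
      /\{\{(\w+)\}\}/g,
      (placeholder: string, name: string) => params[name] ?? placeholder,
    );
  }
}

const sampleResources = new SimpleResources(
  {
    en: { SOME_PARAMETERS_ARE_MISSING: "Some parameters are missing: {{missingParams}}." },
    es: { SOME_PARAMETERS_ARE_MISSING: "Faltan algunos parámetros: {{missingParams}}." },
  },
  "en",
);

const missingParamsMessage = sampleResources.getWithParams("SOME_PARAMETERS_ARE_MISSING", {
  missingParams: "email, name",
});
// "Some parameters are missing: email, name."
```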

Mapper

The mapper is a tool that allows us to convert entities to DTOs within our application, including conversions between the data model and the domain and vice versa.

This tool maps objects or arrays of objects, for example:

// For object

const textFeelingDto = this.mapper.mapObject<TextFeeling, TextFeelingDto>(

textFeeling,

new TextFeelingDto(),

);

// For array objects

const productsDto: ProductDto[] = this.mapper.mapArray<Product, ProductDto>(

products,

() => this.mapper.activator(ProductDto),

);

Activator is the function responsible for returning a new instance for each call, otherwise you would have an array with the same object repeated N times.
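The following sketch shows why a per-call activator matters. The `mapObject` and `mapArray` functions here are simplified, hypothetical stand-ins (a plain property copy), not the mapper shipped with the template; they only reproduce the behaviour described above.

```typescript
class Product {
  constructor(readonly id: number, readonly name: string) {}
}

class ProductDto {
  id = 0;
  name = "";
}

// Hypothetical, simplified mapper: copies the source properties onto the target.
function mapObject<S extends object, T extends object>(source: S, target: T): T {
  return Object.assign(target, source);
}

// The activator is called once per element to obtain the target instance.
function mapArray<S extends object, T extends object>(sources: S[], activator: () => T): T[] {
  return sources.map((source) => mapObject(source, activator()));
}

const products = [new Product(1, "Keyboard"), new Product(2, "Mouse")];

// Correct: the activator creates a fresh ProductDto for every element.
const dtos = mapArray(products, () => new ProductDto());

// Incorrect: a single shared instance is overwritten by every element,
// so the array ends up holding the same object N times.
const shared = new ProductDto();
const repeated = mapArray(products, () => shared);
```

In the second call both array positions reference `shared`, which ends up holding the values of the last product only.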

Result

export class GetProductUseCase extends BaseUseCase<string> { // Or BaseUseCase<{ idMask: string}>

constructor(private productQueryService: IProductQueryService) {

super();

}

async execute(idMask: string): Promise<IResult<ProductDto>> { // If object input type is (params: { idMask: string}) so you can access to it like params.idMask

// We create the instance of our type of result at the beginning of the use case.

const result = new Result<ProductDto>();

// With the resulting object we can control validations within other functions.

if (!this.validator.isValidEntry(result, { productMaskId: idMask })) {

return result;

}

const product: Product = await this.productQueryService.getByMaskId(idMask);

if (!product) {

// The result object helps us with the error response and the code.

result.setError(

this.appMessages.get(this.appMessages.keys.PRODUCT_DOES_NOT_EXIST),

this.applicationStatus.NOT_FOUND,

);

return result;

}

const productDto = this.mapper.mapObject<Product, ProductDto>(product, new ProductDto());

// The result object also helps you with the response data.

result.setData(productDto, this.applicationStatus.SUCCESS);

// And finally you give it back.

return result;

}

}

The result object may or may not have a response type, whichever fits your needs; a result instance without a type cannot be assigned data.

const resultWithType = new Result<ProductDto>();

// or

const resultWithoutType = new Result();

The result object can help you in unit tests as shown below:

it("should return a 400 error if quantity is null or zero", async () => {

itemDto.quantity = null;

const result = await addUseCase.execute({ userUid, itemDto });

expect(result.success).toBeFalsy();

expect(result.error).toBe(

appMessages.getWithParams(appMessages.keys.SOME_PARAMETERS_ARE_MISSING, {

missingParams: "quantity",

}),

);

expect(result.statusCode).toBe(resultCodes.BAD_REQUEST);

});

UseCase

The UseCase is a base class for extending use cases, and if you were paying attention you already saw it in action in the explanation of the Result tool above.

Its main function is to avoid you having to write the same code in every use case you have to build because it contains the instances of the common tools you will use in the case implementations.

The tools extended by this class are: the mapper, the validator, the message resources and their keys, and the result codes.

import messageResources, { Resources } from "../locals/messages/index";

import { ILogProvider } from "../log/providerContracts/ILogProvider";

export { IResult, Result, IResultT, ResultT } from "result-tsk";

import applicationStatus from "../status/applicationStatus";

import wordResources from "../locals/words/index";

import { Validator } from "validator-tsk";

import mapper, { IMap } from "mapper-tsk";

import { Throw } from "../errors/Throw";

import { IResult } from "result-tsk";

export { Validator, Resources };

export abstract class BaseUseCase<T> {

mapper: IMap;

validator: Validator;

appMessages: Resources;

appWords: Resources;

applicationStatus = applicationStatus;

constructor(public readonly CONTEXT: string, public readonly logProvider: ILogProvider) {

this.mapper = mapper;

this.appMessages = messageResources;

this.appWords = wordResources;

this.validator = new Validator(

messageResources,

messageResources.keys.SOME_PARAMETERS_ARE_MISSING,

applicationStatus.INVALID_INPUT,

);

}

handleResultError(result: IResult): void {

Throw.when(this.CONTEXT, !!result?.error, result.error, result.statusCode);

}

abstract execute(args?: T): Promise<IResult>;

}

The T type in BaseUseCase<T> gives you optimal control over the input parameters of your UseCase.

So, you can use it as in the following examples:

// UseCase with input params

export class LoginUseCase

extends BaseUseCase<{ email: string; passwordB64: string }>

{

constructor(logProvider: ILogProvider, private readonly authProvider: IAuthProvider) {

super(LoginUseCase.name, logProvider);

}

async execute(params: { email: string; passwordB64: string }): Promise<IResultT<TokenDto>> {

// Your UseCase implementation

}

}

// UseCase without input params

export class ListUsersUseCase extends BaseUseCase<undefined>

{

constructor(logProvider: ILogProvider, private readonly userProvider: IUserProvider) {

super(ListUsersUseCase.name, logProvider);

}

async execute(): Promise<IResultT<User[]>> {

// Your UseCase implementation

}

}

Or you can use the libraries from NPM directly.

Validator

The validator is a very basic but dynamic tool. With it you can validate any type of object and/or parameters that your use case requires as input, and return enriched messages to the client about the errors or the required parameters that are missing from the input, for example:

/*...*/

async execute(userUid: string, itemDto: CarItemDto): Promise<IResult<CarItemDto>> {

const result = new Result<CarItemDto>();

if (

!this.validator.isValidEntry(result, {

User_Identifier: userUid,

Car_Item: itemDto,

Order_Id: itemDto?.orderId,

Product_Detail_Id: itemDto?.productDetailId,

Quantity: itemDto?.quantity,

})

) {

/*

The error message on the result object will include a base message and will add to

it all the parameter names that were passed on the object that do not have a valid value.

*/

return result;

}

/*...*/

return result;

}

/*...*/

Validation functions (New feature 🤩)

The validation functions extend the isValidEntry method to inject small functions created for your own needs.

The philosophy of this tool is that it adapts to your own needs and not that you adapt to it.

To do this, each key-value pair of the isValidEntry input object also accepts an array of small functions, each of which must perform a specific task with the parameter to be validated.

Observation

If you are going to use the validation functions feature, you must pass an array as the value, even if it contains only one function.

Important note

A validation function should return NULL if the parameter being validated is valid, or otherwise a string message indicating why the parameter is not valid.

// Validator functions created to meet your own needs

function validateEmail(email: string): string {

if (/^\w+([\.-]?\w+)*@\w+([\.-]?\w+)*(\.\w{2,3})+$/.test(email)) {

return null;

}

return resources.getWithParams(resourceKeys.NOT_VALID_EMAIL, { email });

}

function greaterThan(numberName: string, base: number, evaluate: number): string {

if (evaluate && evaluate > base) {

return null;

}

return resources.getWithParams(resourceKeys.NUMBER_GREATER_THAN, {

name: numberName,

baseNumber: base.toString(),

});

}

function evenNumber(numberName: string, evaluate: number): string {

if (evaluate && evaluate % 2 === 0) {

return null;

}

return resources.getWithParams(resourceKeys.MUST_BE_EVEN_NUMBER, {

numberName,

});

}

// Entry in any use case

const person = new Person("Jhon", "Doe", "myemail@orion.com", 21);

/*...*/

const result = new Result();

if (!validator.isValidEntry(result, {

Name: person.name,

Last_Name: person.lastName,

Email: [() => validateEmail(person.email)],

Age: [

() => greaterThan("Age", 25, person.age),

() => evenNumber("Age", person.age),

],

})) {

return result;

}

/*...*/

// result.error would have the following message

// "Some parameters are missing or not valid: The number Age must be greater than 25, The Age parameter should be even."
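To show the mechanics behind this contract, here is a minimal sketch of how such a validator could collect its messages. It is an assumption-laden simplification, not the real validator: `ErrorHolder`, the base message text and this `isValidEntry` function are hypothetical and only mirror the rules stated above (plain values must be present, arrays are treated as validation functions that return null or a message).

```typescript
// A validation function returns null when the value is valid, or a message when it is not.
type ValidationFn = () => string | null;

// Hypothetical minimal result shape: only the error message is needed here.
interface ErrorHolder {
  error?: string;
}

function isValidEntry(result: ErrorHolder, entry: Record<string, unknown>): boolean {
  const problems: string[] = [];
  for (const paramName of Object.keys(entry)) {
    const value = entry[paramName];
    if (Array.isArray(value)) {
      // An array is treated as a list of validation functions.
      for (const validate of value as ValidationFn[]) {
        const problem = validate();
        if (problem !== null) problems.push(problem);
      }
    } else if (value === null || value === undefined || value === "") {
      // A plain value only needs to be present; report its name when it is not.
      problems.push(paramName);
    }
  }
  if (problems.length > 0) {
    result.error = `Some parameters are missing or not valid: ${problems.join(", ")}.`;
    return false;
  }
  return true;
}

const greaterThan = (numberName: string, base: number, evaluate: number): string | null =>
  evaluate > base ? null : `The number ${numberName} must be greater than ${base}`;

const entryResult: ErrorHolder = {};
const entryIsValid = isValidEntry(entryResult, {
  Name: "John",
  Last_Name: "",
  Age: [() => greaterThan("Age", 25, 21)],
});
// entryIsValid is false and entryResult.error is:
// "Some parameters are missing or not valid: Last_Name, The number Age must be greater than 25."
```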

Dependency injection strategy 📦

For dependency injection, no external libraries are used. Instead, a container dictionary strategy is used, in which instances and their dependencies are created and then resolved from the container class.

This strategy is only needed in the adapter layer dependencies for controllers like services and providers, and also for the objects used in the use case tests, for example:

// In the path src/adapters/controllers/textFeeling there is a folder called container, and its index file has the following code lines:

import { GetHighestFeelingSentenceUseCase } from "../../../../application/modules/feeling/useCases/getHighest";

import { GetLowestFeelingSentenceUseCase } from "../../../../application/modules/feeling/useCases/getLowest";

import { GetFeelingTextUseCase } from "../../../../application/modules/feeling/useCases/getFeeling";

import { Container, ContainerDictionary } from "../../../shared/Container";

import { textFeelingService } from "../../../providers/container/index";

const dictionary = new ContainerDictionary();

dictionary.addScoped(GetHighestFeelingSentenceUseCase.name, () => new GetHighestFeelingSentenceUseCase(textFeelingService));

dictionary.addScoped(GetLowestFeelingSentenceUseCase.name, () => new GetLowestFeelingSentenceUseCase(textFeelingService));

dictionary.addScoped(GetFeelingTextUseCase.name, () => new GetFeelingTextUseCase(textFeelingService));

// This class instance contains the UseCases needed for your controller

export default new Container(dictionary); // *Way One*

// You can also export separate instances if required, like this:

const anotherUseCaseOrService = new AnotherUseCaseOrService();

export { anotherUseCaseOrService }; // *Way Two*

// You can combine the two strategies (Way One and Way Two) according to your needs

Another way to export dependencies is to simply create instances of the respective classes (only recommended with provider and repository services).

// The same way in src/adapters/providers there is the container folder

import TextFeelingService from "../../../application/modules/feeling/serviceContracts/textFeeling/TextFeelingService";

import TextFeelingProvider from "../../providers/feeling/TextFeelingProvider";

import { HealthProvider } from "../health/HealthProvider";

const textFeelingProvider = new TextFeelingProvider();

const textFeelingService = new TextFeelingService(textFeelingProvider);

const healthProvider = new HealthProvider();

export { healthProvider, textFeelingService };

// And your repositories (folder src/adapters/repositories) must have the same strategy

For IoC, our container strategy manages the instances of the UseCases for the specific controller, and the dependencies those UseCases need are injected there. They are then exported, and in the controller they are imported and used from our container as follows:

// For ExpressJs

import { GetFeelingTextUseCase } from "../../../application/modules/feeling/useCases/getFeeling";

import { Request, Response, NextFunction } from "../../../infrastructure/server/CoreModules";

import { TextDto } from "../../../application/modules/feeling/dtos/TextReq.dto";

import BaseController from "../BaseController";

import container, {

anotherUseCaseOrService,

} from "./container/index";

class TextFeelingController extends BaseController {

constructor(serviceContainer: IServiceContainer) {

super(serviceContainer);

}

/*...*/

// *Way One*

getFeelingText = async (req: Request, res: Response, next: NextFunction): Promise<void> => {

try {

const textDto: TextDto = req.body;

this.handleResult(res, await container.get<GetFeelingTextUseCase>(GetFeelingTextUseCase.name).execute(textDto));

} catch (error) {

next(error);

}

};

// *Way Two*

getFeelingText = async (req: Request, res: Response, next: NextFunction): Promise<void> => {

try {

const textDto: TextDto = req.body;

this.handleResult(res, await getFeelingTextUseCase.execute(textDto));

} catch (error) {

next(error);

}

};

/*...*/

}

Way One delivers a different instance for each UseCase call.

Way Two delivers the same instance (only one instance) for each UseCase call, which can lead to the most common problem: mutations.

As you can see this makes it easy to manage the injection of dependencies without the need to use sophisticated libraries that add more complexity to our applications.

But if you prefer, or your project definitely needs a library, you can use something like awilix or inversifyJs.
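The difference between the two ways can be sketched with a small, self-contained container. This is only an approximation of the container dictionary idea: the classes below are simplified stand-ins, `addSingleton` and `resolve` are hypothetical additions for the comparison, and `CounterUseCase` is an invented use case that keeps internal state.

```typescript
type Factory<T> = () => T;

// Simplified stand-in for the container dictionary described above.
class ContainerDictionary {
  private readonly factories = new Map<string, Factory<unknown>>();

  // Scoped: the factory runs on every resolution, so each caller gets a new instance.
  addScoped<T>(key: string, factory: Factory<T>): void {
    this.factories.set(key, factory);
  }

  // Hypothetical: the factory runs once and the same instance is reused (like Way Two).
  addSingleton<T>(key: string, factory: Factory<T>): void {
    let instance: T | undefined;
    let created = false;
    this.factories.set(key, () => {
      if (!created) {
        instance = factory();
        created = true;
      }
      return instance;
    });
  }

  resolve(key: string): unknown {
    const factory = this.factories.get(key);
    if (!factory) throw new Error(`No dependency registered as "${key}"`);
    return factory();
  }
}

class Container {
  constructor(private readonly dictionary: ContainerDictionary) {}

  get<T>(key: string): T {
    return this.dictionary.resolve(key) as T;
  }
}

// Invented use case with internal state, to make mutations visible.
class CounterUseCase {
  private calls = 0;
  execute(): number {
    return ++this.calls;
  }
}

const dictionary = new ContainerDictionary();
dictionary.addScoped(CounterUseCase.name, () => new CounterUseCase());
dictionary.addSingleton("sharedCounter", () => new CounterUseCase());
const sampleContainer = new Container(dictionary);

const firstScoped = sampleContainer.get<CounterUseCase>(CounterUseCase.name).execute(); // 1
const secondScoped = sampleContainer.get<CounterUseCase>(CounterUseCase.name).execute(); // 1, new instance
const firstShared = sampleContainer.get<CounterUseCase>("sharedCounter").execute(); // 1
const secondShared = sampleContainer.get<CounterUseCase>("sharedCounter").execute(); // 2, state leaked
```

The last line is the mutation problem in miniature: state left by one call is visible to the next one when the instance is shared.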

Using NodeTskeleton 👾

This template includes the example code base for KoaJs and ExpressJs, but if you prefer another web framework you must configure the parts described below according to that framework.

Using with KoaJs 🦋

Go to repo for KoaJs in this Link

And then, continue with the installation step described in the instructions from original project on github.

Controllers

The controllers must be located in the adapters directory, where you can place them by responsibility in separate directories.

The controllers should be exported as default modules to make the handling of these in the index file of our application easier.

// Controller example with export default

import BaseController, { Context } from "../BaseController";

import { TextDto } from "../../../application/modules/feeling/dtos/TextReq.dto";

import container, {

anotherUseCaseOrService,

} from "./container/index";

class TextFeelingController extends BaseController {

constructor(serviceContainer: IServiceContainer) {

super(serviceContainer);

}

/*...*/

}

const instance = new TextFeelingController(container);

// You can see the default export

export default instance;

Example of the handling of the controllers in the index file of our application:

/*...*/

// Region controllers

import productController from "./adapters/controllers/product/Product.controller";

import shoppingCarController from "./adapters/controllers/shoppingCart/ShoppingCar.controller";

import categoryController from "./adapters/controllers/category/CategoryController";

/*...*/

// End controllers

const controllers: BaseController[] = [

productController,

shoppingCarController,

categoryController,

/*...*/

];

const app = new AppWrapper(controllers);

/*...*/

Routes

The strategy is to manage the routes within the controller. This gives us better management of them, in addition to a greater capacity for maintenance and control according to the responsibilities of the controller.

/*...*/

initializeRoutes(router: IRouterType) {

this.router = router;

this.router.post("/v1/cars", authorization(), this.Create);

this.router.get("/v1/cars/:idMask", authorization(), this.Get);

this.router.post("/v1/cars/:idMask", authorization(), this.Buy);

this.router.post("/v1/cars/:idMask/items", authorization(), this.Add);

this.router.put("/v1/cars/:idMask/items", authorization(), this.Remove);

this.router.delete("/v1/cars/:idMask", authorization(), this.Empty);

/*...*/

}

/*...*/

Root path

If you need to manage a root path in your application then this part is configured in App, the infrastructure server module that loads the controllers as well:

/*...*/

private loadControllers(controllers: BaseController[]) {

controllers.forEach((controller) => {

// This is the line and the parameter comes from `config`.

controller.router.prefix(config.server.Root);

controller.initializeRoutes(router);

this.app.use(controller.router.routes());

this.app.use(controller.router.allowedMethods());

});

}

/*...*/

Using with ExpressJs 🐛

Clone this repo or use it as a template from GitHub, and then continue with the installation step described in this guide.

Controllers

The controllers must be located in the adapters directory, where you can place them by responsibility in separate directories.

The controllers should be exported as default modules to make the handling of these in the index file of our application easier.

// Controller example with default export

import BaseController, { Request, Response, NextFunction } from "../BaseController";

import { TextDto } from "../../../application/modules/feeling/dtos/TextReq.dto";

import container, {

anotherUseCaseOrService,

} from "./container/index";

class TextFeelingController extends BaseController {

constructor(serviceContainer: IServiceContainer) {

super(serviceContainer);

}

/*...*/

}

const instance = new TextFeelingController(container);

// You can see the default export

export default instance;

// Or just use export default new TextFeelingController();

Example of the handling of the controllers in the index file of our application:

/*...*/

// Region controllers

import productController from "./adapters/controllers/product/Product.controller";

import shoppingCarController from "./adapters/controllers/shoppingCart/ShoppingCar.controller";

import categoryController from "./adapters/controllers/category/CategoryController";

/*...*/

// End controllers

const controllers: BaseController[] = [

productController,

shoppingCarController,

categoryController,

/*...*/

];

const app = new AppWrapper(controllers);

/*...*/

Routes

The strategy is to manage the routes within the controller. This gives us better management of them, in addition to a greater capacity for maintenance and control according to the responsibilities of the controller.

/*...*/

initializeRoutes(router: IRouterType) {

this.router = router();

this.router.post("/v1/cars", authorization(), this.Create);

this.router.get("/v1/cars/:idMask", authorization(), this.Get);

this.router.post("/v1/cars/:idMask", authorization(), this.Buy);

this.router.post("/v1/cars/:idMask/items", authorization(), this.Add);

this.router.put("/v1/cars/:idMask/items", authorization(), this.Remove);

this.router.delete("/v1/cars/:idMask", authorization(), this.Empty);

/*...*/

}

/*...*/

Root path

If you need to manage a root path in your application then this part is configured in App, the infrastructure server module that loads the controllers as well:

/*...*/

private loadControllers(controllers: BaseController[]): void {

controllers.forEach((controller) => {

// This is the line and the parameter comes from `config`.

controller.initializeRoutes(Router);

this.app.use(config.server.Root, controller.router);

});

}

/*...*/

Using with another web server framework 👽

You must implement the configuration made with ExpressJs or KoaJs with the framework of your choice and install all the dependencies and devDependencies for your framework. You must also modify the Server module and the Middleware in the infrastructure directory, and the BaseController and Controllers in the adapters directory.

And then, continue with the installation step.

Infrastructure 🏗️

The infrastructure includes a customizable HttpClient with its response model in src/infrastructure/httpClient/TResponse.ts for error control, and at the application level a class strategy src/application/shared/result/... is included as a standardized response model.

Installation 🔥

You must first clone the repo.

Then, we must install the dependencies, run:

npm install

Second, we must update the dependencies, run:

npm update

Third, run the project in hot reload mode (without debugging; for that, go to the Application debugger instructions):

npm run dev

or

npm run build

node dist/index

Finally, in any web browser go to:

localhost:3003/api/ping

And you can use PostMan as follows:

Try importing this request: click Import > Raw text, and paste the following code:

curl --location --request POST 'localhost:3003/api/v1/users/login' \

--header 'Content-Type: application/json' \

--data-raw '{

"email": "harvic3@protonmail.com",

"password": "Tm9kZVRza2VsZXRvbio4"

}'

The password is "NodeTskeleton*8" encoded in Base64 format.
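If you want to check or produce such a value yourself, a short sketch follows. It assumes a runtime where the `btoa` and `atob` globals are available (browsers and recent NodeJs versions); in NodeJs the Buffer API is a common alternative.

```typescript
// Encode the plain password to Base64 and decode it back.
const plainPassword = "NodeTskeleton*8";
const encodedPassword = btoa(plainPassword); // "Tm9kZVRza2VsZXRvbio4"
const decodedPassword = atob(encodedPassword); // "NodeTskeleton*8"
```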

Application debugger 🔬

If you are using VS Code the easiest way to debug the solution is to follow these instructions:

First, go to the package.json file.

Second, in the package.json file, locate the debug command just above the scripts section and click on it.

Third, choose the dev script when the execution options appear.

So, wait a moment and then you will see something like this on the console.

$ npm run dev

Debugger attached.

Waiting for the debugger to disconnect...

Debugger attached.

> nodetskeleton@1.0.0 dev

> ts-node-dev --respawn -- src/index.ts

Debugger attached.

[INFO] 22:52:29 ts-node-dev ver. 1.1.8 (using ts-node ver. 9.1.1, typescript ver. 4.4.3)

Debugger attached.

Running in dev mode

AuthController was loaded

HealthController was loaded

Server running on localhost:3003/api

To stop the debug just press Ctrl C and close the console that was opened to run the debug script.

This method allows you to develop while the solution watches for your changes (hot reload), without the need to restart the service; VS Code does it for you automatically.

Test your Clean Architecture 🥁

Something important is to know if we really did the job of building our clean architecture well, and this can be checked very easily by following these steps:

- Make sure you don't have any pending changes in your application to upload to your repository, otherwise upload them if you do.

- Identify and remove adapters and infrastructure directories from your solution, as well as the index.ts file.

- Execute the test command npm t or npm run test and the build command tsc or npm run build too, and everything should run smoothly, otherwise you violated the principle of dependency inversion or due to bad practice, application layers were coupled that should not be coupled.

- Run the git checkout . command to get everything back to normal.

- Most importantly, no domain entity can make use of an application service and less of a provider service (repository or provider), the application services use the entities, the flow goes from the most external part of the application to the most internal part of it.

Coupling 🧲

For the purpose of giving clarity to the following statement we will define coupling as the action of dependence, that is to say that X depends on Y to function.

Coupling is not bad if it is well managed, but in a software solution there should not be coupling of the domain and application layers with any other, but there can be coupling of the infrastructure layer or the adapters layer with the application and/or domain layer, or coupling of the infrastructure layer with the adapters layer and vice versa.
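The allowed direction of coupling can be illustrated with a small sketch. All names here (`UserRecord`, `IUserRepository`, `UserExistsUseCase`, `InMemoryUserRepository`) are hypothetical and the code is synchronous for brevity; the point is only that the application layer owns the contract and the adapter depends on it, never the reverse.

```typescript
// Application layer: declares the contract it needs, with no framework or database imports.
interface UserRecord {
  email: string;
}

interface IUserRepository {
  getByEmail(email: string): UserRecord | null;
}

class UserExistsUseCase {
  constructor(private readonly userRepository: IUserRepository) {}

  execute(email: string): boolean {
    return this.userRepository.getByEmail(email) !== null;
  }
}

// Adapters layer: implements the application contract (the dependency points inward).
class InMemoryUserRepository implements IUserRepository {
  private readonly users: UserRecord[] = [{ email: "user@example.com" }];

  getByEmail(email: string): UserRecord | null {
    return this.users.find((user) => user.email === email) ?? null;
  }
}

// Composition at the outermost layer: the adapter is wired into the use case.
const userExists = new UserExistsUseCase(new InMemoryUserRepository());
const knownUserFound = userExists.execute("user@example.com"); // true
const unknownUserFound = userExists.execute("nobody@example.com"); // false
```

Because `UserExistsUseCase` only knows the interface, removing the adapter class would not break the compilation of the application layer, which is exactly what the test described in the previous section checks.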

Clustering the App (Node Cluster)

NodeJs solutions run on a single thread, so it is important not to run CPU-intensive tasks. However, NodeJs in Cluster Mode can run on several cores, so if you want to get the most out of a multi-core machine this is probably a good option. If your machine has only one core, it will not help.

To cluster the app, replace the code in src/index.ts with the following example.

Observation 👀

For a reason I do not yet understand, dynamic loading of modules causes problems with Node in Cluster Mode. If you plan to use cluster mode, you must inject the controllers into the AppWrapper class instance as shown in the following code sample. If you are not going to use cluster mode, you can skip importing the controllers and let the AppWrapper class load them dynamically through its internal method.

// Node App in Cluster mode
import { cpus } from "os";
import "express-async-errors";
import * as cluster from "cluster";
import config from "./infrastructure/config";
import AppWrapper from "./infrastructure/app/AppWrapper";
import { HttpServer } from "./infrastructure/app/server/HttpServer";
import errorHandlerMiddleware from "./infrastructure/middleware/error";

// Controllers
import BaseController from "./adapters/controllers/base/Base.controller";
import healthController from "./adapters/controllers/health/Health.controller";
import authController from "./adapters/controllers/auth/Auth.controller";
// End Controllers

const controllers: BaseController[] = [healthController, authController];

function startApp(): void {
  const appWrapper = new AppWrapper(controllers);
  const server = new HttpServer(appWrapper);
  server.start();

  process.on("uncaughtException", (error: NodeJS.UncaughtExceptionListener) => {
    errorHandlerMiddleware.manageNodeException("UncaughtException", error);
  });

  process.on("unhandledRejection", (reason: NodeJS.UnhandledRejectionListener) => {
    errorHandlerMiddleware.manageNodeException("UnhandledRejection", reason);
  });
}

if (cluster.isMaster) {
  const totalCPUs = cpus().length;
  console.log(`Total CPUs are ${totalCPUs}`);
  console.log(`Master process ${process.pid} is running`);

  for (let i = 0; i < totalCPUs; i++) {
    cluster.fork(config.Environment);
  }

  cluster.on("exit", (worker: cluster.Worker, code: number, signal: string) => {
    console.log(`Worker ${worker.process.pid} stopped with code ${code} and signal ${signal}`);
    cluster.fork();
  });
} else {
  startApp();
}

// Node App without Cluster mode and controllers dynamic load.
import "express-async-errors";
import AppWrapper from "./infrastructure/app/AppWrapper";
import { HttpServer } from "./infrastructure/app/server/HttpServer";
import errorHandlerMiddleware from "./infrastructure/middleware/error";

const appWrapper = new AppWrapper();
const server = new HttpServer(appWrapper);
server.start();

process.on("uncaughtException", (error: NodeJS.UncaughtExceptionListener) => {
  errorHandlerMiddleware.manageNodeException("UncaughtException", error);
});

process.on("unhandledRejection", (reason: NodeJS.UnhandledRejectionListener) => {
  errorHandlerMiddleware.manageNodeException("UnhandledRejection", reason);
});

// Node App without Cluster mode with controllers load by constructor.
import "express-async-errors";
import AppWrapper from "./infrastructure/app/AppWrapper";
import { HttpServer } from "./infrastructure/app/server/HttpServer";
import errorHandlerMiddleware from "./infrastructure/middleware/error";

// Controllers
import BaseController from "./adapters/controllers/base/Base.controller";
import healthController from "./adapters/controllers/health/Health.controller";
import authController from "./adapters/controllers/auth/Auth.controller";
// End Controllers

const controllers: BaseController[] = [healthController, authController];

const appWrapper = new AppWrapper(controllers);
const server = new HttpServer(appWrapper);
server.start();

process.on("uncaughtException", (error: NodeJS.UncaughtExceptionListener) => {
  errorHandlerMiddleware.manageNodeException("UncaughtException", error);
});

process.on("unhandledRejection", (reason: NodeJS.UnhandledRejectionListener) => {
  errorHandlerMiddleware.manageNodeException("UnhandledRejection", reason);
});

Strict mode

TypeScript's strict mode is quite useful because it helps you maintain the type safety of your application. It makes the development stage more controlled and avoids the errors that can appear when this option is disabled.

This option is enabled by default in NodeTskeleton and is managed in the tsconfig.json file of your solution; if you are only experimenting and want to avoid headaches, you can disable it.

"strict": true,

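As a small illustration of what strict mode buys you, here is a sketch with hypothetical names (Profile, displayName). With strict enabled, the compiler refuses to call a method on a value that may be undefined, so the code has to handle that case explicitly.

```typescript
// Hypothetical example type: nickname is optional, so under strict mode
// its type is string | undefined.
interface Profile {
  email: string;
  nickname?: string;
}

// With "strict": true the compiler rejects `profile.nickname.toUpperCase()`
// written without a check, because nickname may be undefined.
// The explicit check below satisfies it.
function displayName(profile: Profile): string {
  return profile.nickname !== undefined ? profile.nickname.toUpperCase() : profile.email;
}
```

Without strict mode, the unchecked version would compile and only fail at runtime when a profile has no nickname.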
Multi service monorepo

With this simple option you can develop a single code base and, through the ENVs (environment variables) read by the configuration file, decide which service context to put online, so each service can be deployed by running a different pipeline.

Note that the system takes the ServiceContext parameter of the Server section in the config file from the value in your .env file, as follows:

// infrastructure/config/index
const serviceContext = process.env.SERVICE_CONTEXT || ServiceContext.NODE_TS_SKELETON;
...
Controllers: {
  ContextPaths: [
    // Health Controller should always be included, and others by default according to your needs.
    Normalize.pathFromOS(
      Normalize.absolutePath(__dirname, "../../adapters/controllers/health/*.controller.??"),
    ),
    Normalize.pathFromOS(
      Normalize.absolutePath(
        __dirname,
        `../../adapters/controllers/${serviceContext}/*.controller.??`,
      ),
    ),
  ],
  // If the SERVICE_CONTEXT parameter is not set in the environment variables file, then the application will load by default all controllers that exist in the home directory.
  DefaultPath: [
    Normalize.pathFromOS(
      Normalize.absolutePath(__dirname, "../../adapters/controllers/**/*.controller.??"),
    ),
  ],
  Ignore: [Normalize.pathFromOS("**/base")],
},
Server: {
  ...
  ServiceContext: {
    // This is the flag that tells the application whether or not to load the controllers per service context.
    LoadWithContext: !!process.env.SERVICE_CONTEXT,
    Context: serviceContext,
  },
}

Note that by default all of the solution's Controllers are set to the NodeTskeleton context, which is loaded through DefaultPath, but you are free to create as many contexts as your solution needs and load your Controllers in the context that you set in the SERVICE_CONTEXT env.

The HealthController must always work for any context in ContextPaths and for the NodeTskeleton context; this cannot change because you need a health check point for each exposed service.

For example, the application has the USERS context, and you can select it as follows:

// In your ENV file set context as users, like this:

NODE_ENV=development

SERVICE_CONTEXT=users

SERVER_ROOT=/api

So the path in the ContextPaths settings that contains the ${serviceContext} constant will have the following value:

../../adapters/controllers/users/*.controller.??

Then in the AppWrapper class, the system will load the controllers that must be exposed according to the service context.
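The selection logic can be sketched as follows. This is an illustration only: buildControllersConfig, resolveControllerPaths and pathsFor are hypothetical names, the paths are shortened, and the real AppWrapper may decide differently. The sketch assumes that LoadWithContext chooses between ContextPaths and DefaultPath, as the comments in the config fragment suggest.

```typescript
// Hypothetical shapes that mirror the config fragment above.
interface ControllersConfig {
  ContextPaths: string[];
  DefaultPath: string[];
}

interface ServiceContextConfig {
  LoadWithContext: boolean;
  Context: string;
}

// Builds the glob patterns for a given service context (paths shortened).
function buildControllersConfig(serviceContext: string): ControllersConfig {
  return {
    ContextPaths: [
      "adapters/controllers/health/*.controller.??",
      `adapters/controllers/${serviceContext}/*.controller.??`,
    ],
    DefaultPath: ["adapters/controllers/**/*.controller.??"],
  };
}

// Chooses which glob patterns should be scanned for controllers.
function resolveControllerPaths(
  controllers: ControllersConfig,
  context: ServiceContextConfig,
): string[] {
  return context.LoadWithContext ? controllers.ContextPaths : controllers.DefaultPath;
}

// envServiceContext plays the role of process.env.SERVICE_CONTEXT.
function pathsFor(envServiceContext?: string): string[] {
  const context = envServiceContext || "NodeTskeleton";
  return resolveControllerPaths(buildControllersConfig(context), {
    LoadWithContext: !!envServiceContext,
    Context: context,
  });
}
```

Under these assumptions, pathsFor("users") yields the health pattern plus the users pattern, while pathsFor() falls back to the single pattern that matches every controller.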

The ServiceContext file is located in the infrastructure server directory:

// NodeTskeleton is the only context created, but you can create more or change this.
export enum ServiceContext {
  NODE_TS_SKELETON = "NodeTskeleton",
  SECURITY = "auth",
  USERS = "users",
}

How does it work?

So, how can you put the multi-service mode to work?

It is important to understand that the service contexts must match the names of the directories inside the controllers directory, and you can add as many controllers as you need to each context. For example, this application has two contexts, users (USERS) and auth (SECURITY).

adapters
  controllers
    auth // Context for SECURITY (auth)
      Auth.controller.ts
    users // Context for USERS (users)
      Users.controller.ts
    otherContext // And other service contexts according to your needs
      ...
application
  ...

All of the above applies to dynamic loading of controllers. Therefore, if you are going to run the solution in CLUSTER mode, you must inject the controllers by constructor as indicated in the cluster mode explanation, and you must assign the context to each controller as shown in the following example:

// For example, the application has the SECURITY context and the Authentication Controller responds to this context:
class AuthController extends BaseController {
  constructor() {
    super(ServiceContext.SECURITY);
  }
  ...
}
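To see why each injected controller needs a context, consider this minimal sketch. It is not the project's implementation: BaseController is reduced to a stand-in with a name, selectControllers is a hypothetical function, and it assumes that controllers left in the default context (such as health) stay exposed for every service.

```typescript
enum ServiceContext {
  NODE_TS_SKELETON = "NodeTskeleton",
  SECURITY = "auth",
  USERS = "users",
}

// Simplified stand-in for the project's BaseController.
class BaseController {
  constructor(
    public readonly name: string,
    public readonly serviceContext: ServiceContext = ServiceContext.NODE_TS_SKELETON,
  ) {}
}

class HealthController extends BaseController {
  constructor() {
    super("HealthController"); // stays in the default context
  }
}

class AuthController extends BaseController {
  constructor() {
    super("AuthController", ServiceContext.SECURITY);
  }
}

class UsersController extends BaseController {
  constructor() {
    super("UsersController", ServiceContext.USERS);
  }
}

// Hypothetical filter: keeps controllers of the active context plus those in
// the default context. With no active context, everything is exposed.
function selectControllers(
  controllers: BaseController[],
  activeContext?: ServiceContext,
): BaseController[] {
  if (!activeContext) return controllers;
  return controllers.filter(
    (controller) =>
      controller.serviceContext === activeContext ||
      controller.serviceContext === ServiceContext.NODE_TS_SKELETON,
  );
}

const injected = [new HealthController(), new AuthController(), new UsersController()];
const securityOnly = selectControllers(injected, ServiceContext.SECURITY);
```

With the SECURITY context active, this sketch keeps HealthController and AuthController and drops UsersController.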

For this feature the project has a basic api-gateway that routes a single entry point to the different ports exposed by each service (context).

Note that you need Docker installed on your machine. Once you have it ready, do the following:

First, open your console and go to the root directory of the NodeTskeleton project.

Second, execute the next sequence of commands:

Build the tskeleton image

docker build . -t tskeleton-image

Build the tsk gateway image

cd tsk-gateway

docker build . -t tsk-gateway-image

Run docker-compose to launch our solution

docker-compose up --build

Afterwards you can use Postman or a web browser to call the exposed endpoints of the two services based on the NodeTskeleton project.

Security service

Health

curl --location --request GET 'localhost:8080/security/api/ping'

Login

curl --location --request POST 'localhost:8080/security/api/v1/auth/login' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "email": "nodetskeleton@email.com",
    "password": "Tm9kZVRza2VsZXRvbio4"
  }'

Users service

Health

curl --location --request GET 'localhost:8080/management/api/ping'

Register a new user

curl --location --request POST 'localhost:8080/management/api/v1/users/sign-up' \
  --header 'Accept-Language: es' \
  --header 'Authorization: Bearer jwt' \
  --header 'Content-Type: application/json' \
  --data-raw '{
    "firstName": "Nikola",
    "lastName": "Tesla",
    "gender": "Male",
    "password": "Tm9kZVRza2VsZXRvbio4",
    "email": "nodetskeleton@conemail.com"
  }'

Considerations and recommendations

Database tables or collection names

It is recommended to use prefixes in the table or collection names, because in a microservice context you need to replicate data and you may have collisions in the local environment. For example, for the SECURITY service context you can use sec_users for the users table or collection, and in the same way for the USERS service context you can use usr_users.
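One way to apply such a naming rule consistently is a small helper. This is a sketch with hypothetical names (contextPrefixes, tableName); only the sec_ and usr_ prefixes come from the examples in the text.

```typescript
// Hypothetical mapping from service context value to table prefix.
const contextPrefixes: Record<string, string> = {
  auth: "sec",
  users: "usr",
};

// Returns the prefixed table or collection name for a service context,
// or the base name unchanged when the context has no registered prefix.
function tableName(serviceContext: string, baseName: string): string {
  const prefix = contextPrefixes[serviceContext];
  return prefix ? `${prefix}_${baseName}` : baseName;
}

const securityUsersTable = tableName("auth", "users");
```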

The idea is that you use an abbreviation of the service context as a prefix to the name of your tables or collections.

Database connections

In release and production environments you can use the same database connection section of the config file to connect each service context to its own database, even when they share the same technology (NoSQL, SQL or another one). This is achieved through the ENVs configuration of each service.

But at the local level (development) you can use a single database per technology, because the prefixes in the table and collection names prevent collisions, which simplifies development and saves resources.

Keep in mind that you cannot create relationships between tables or collections that belong to different service contexts, because this will not work in a production environment, where the databases will be different.

Conclusions (Personal) 💩

The clean architecture allows us to develop the use cases and the domain (business logic) of an application without worrying about the type of database, web server framework, protocols, services, providers, among other things that can be trivial, and the application itself will tell us during development what could be the best choice for its infrastructure and adapters.

The clean architecture, the hexagonal architecture, the onion architecture and the ports and adapters architecture can be the same at heart; the final purpose is to decouple the business layer of our application from the outside world. Basically, it leads us to design our solutions from the inside to outside and not from the outside to inside.

When we develop with clean architecture we can more easily change any "external dependency" of our application without major concerns. Obviously some will require more effort than others, for example migrating from a NoSql schema to a SQL schema, where the queries will probably be affected; however, our business logic can remain intact and work for both models.

The advantages that clean architecture offers us are very significant; it is one of the best practices for making scalable software that works for your business and not for your preferred framework.

Clean architecture is basically based on the famous and well-known five SOLID principles, which we had not mentioned until this moment and which we have internalized very little.

And then, visit the project and give me a star.
