In this tutorial, we will discuss WebRTC, a widely used framework for real-time communication (RTC); Flutter, a rapidly growing hybrid application development framework; and Flutter-WebRTC, which brings the two together. I will explain how to create a Flutter-WebRTC app in just 7 steps.

Video calling has become a common medium of communication since the pandemic, and the real-time communication space (audio and video) has grown rapidly. RTC has many use cases in modern businesses, such as video conferencing, real-time streaming, live commerce, education, telemedicine, surveillance, and gaming.

Developers often ask the same question: how do you build real-time applications with minimal effort? (Me too 😅.) If you have asked this yourself, you are in the right place.

We will first look at WebRTC and Flutter individually, then at how to use WebRTC with the Flutter framework. We will also build a demo Flutter app with WebRTC.

What is Flutter?

Flutter is a cross-platform app development framework developed by Google and based on the Dart programming language. With Flutter, you can build Android, iOS, web, and desktop apps from the same codebase. Flutter also has a large community, which has helped it grow quickly.

What is WebRTC?

WebRTC is an open-source framework for real-time communication (audio, video, and generic data). It is supported by the majority of browsers and can also be used on native platforms such as Android, iOS, macOS, Linux, and Windows.

WebRTC relies on three major APIs:

- getUserMedia: gets local audio and video media.

- RTCPeerConnection: establishes a connection with another peer.

- RTCDataChannel: creates a channel for generic data exchange.

What is Flutter-WebRTC?

Flutter-WebRTC is a plugin for the Flutter framework that enables real-time communication (RTC) capabilities in web and mobile applications. It exposes WebRTC, a collection of communication protocols and APIs that allow direct communication between web browsers and mobile applications without third-party plugins or software. With Flutter-WebRTC, you can build video call applications without dealing with the complexities of the underlying technologies.

How does WebRTC work?

To understand how WebRTC works, we need to understand the following technologies.

1. Signalling

WebRTC allows peer-to-peer communication over the web, even though a peer initially has no idea where the other peers are or how to connect to and communicate with them.

To establish a connection, the peers must first exchange metadata to coordinate with each other. This exchange is called signalling. The metadata includes the information peers need to reach each other across firewalls and NATs (Network Address Translators). WebRTC does not mandate a signalling transport; WebSocket is commonly used because it allows bidirectional communication between the peers and the signalling server.
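To make the role of signalling concrete, here is a minimal sketch of what a signalling server does conceptually: it routes small messages between peers by ID and never touches the media itself. `InMemorySignallingHub` and `SignalHandler` are hypothetical names used for illustration only; the server used later in this tutorial does the equivalent over socket.io.

```dart
// Hypothetical in-memory stand-in for a signalling server. It only routes
// messages between registered peers and never carries audio or video.
typedef SignalHandler = void Function(String event, Map<String, dynamic> data);

class InMemorySignallingHub {
  final Map<String, SignalHandler> _peers = {};

  // Register a peer under its caller ID so other peers can reach it.
  void register(String peerId, SignalHandler onSignal) {
    _peers[peerId] = onSignal;
  }

  // Forget a peer, for example when its socket disconnects.
  void unregister(String peerId) {
    _peers.remove(peerId);
  }

  // Forward an event to the target peer. Returns false when the target is
  // unknown, in which case the message is dropped.
  bool relay(String toPeerId, String event, Map<String, dynamic> data) {
    final handler = _peers[toPeerId];
    if (handler == null) return false;
    handler(event, data);
    return true;
  }
}
```

A caller would use something like `hub.relay('B', 'newCall', {'callerId': 'A'})` to reach peer B; once both sides have exchanged their metadata this way, the media flows directly between the peers.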

2. SDP

SDP stands for Session Description Protocol. It describes session information such as:

- session ID

- session expiry time

- audio/video encodings, formats, encryption, etc.

- audio/video IP address and port

Suppose two peers, Client A and Client B, want to connect over WebRTC. Client A generates an SDP offer (session-related information, such as the codecs it supports) and sends it to Client B. Client B then responds with an SDP answer, which accepts or rejects what was offered. SDP is used here for negotiation between the two peers.
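An SDP description is plain text made of `key=value` lines, so it is easy to inspect. The sketch below pulls the media sections (`m=` lines) out of an SDP string. `MediaSection`, `parseMediaSections`, and `sampleOffer` are hypothetical names, and the sample is an illustrative fragment rather than output captured from a real session.

```dart
// Minimal sketch: read the media sections ("m=" lines) of an SDP string.
// Real SDP carries many more fields; this only reads what the example needs.
class MediaSection {
  final String kind; // "audio", "video" or "application"
  final int port;
  final String protocol;
  final List<String> formats; // payload type numbers for RTP media

  MediaSection(this.kind, this.port, this.protocol, this.formats);
}

List<MediaSection> parseMediaSections(String sdp) {
  final sections = <MediaSection>[];
  for (final rawLine in sdp.split('\n')) {
    final line = rawLine.trim(); // also strips the '\r' of CRLF line endings
    if (!line.startsWith('m=')) continue;
    final parts = line.substring(2).split(' ');
    if (parts.length < 3) continue; // malformed line, skip it
    final port = int.tryParse(parts[1]);
    if (port == null) continue;
    sections.add(MediaSection(parts[0], port, parts[2], parts.sublist(3)));
  }
  return sections;
}

// Illustrative SDP fragment (assumed values, not from a real call).
const sampleOffer = 'v=0\r\n'
    'o=- 1234567890 2 IN IP4 127.0.0.1\r\n'
    's=-\r\n'
    't=0 0\r\n'
    'm=audio 9 UDP/TLS/RTP/SAVPF 111\r\n'
    'a=rtpmap:111 opus/48000/2\r\n'
    'm=video 9 UDP/TLS/RTP/SAVPF 96\r\n'
    'a=rtpmap:96 VP8/90000\r\n';
```

Calling `parseMediaSections(sampleOffer)` yields one audio and one video section. In the sample, the `o=` line carries the session ID, `t=` the session timing, and each `a=rtpmap` line maps a payload type to a codec, which is the information the two peers negotiate over.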

3. ICE

ICE stands for Interactive Connectivity Establishment, a technique that allows a peer to connect with other peers. There are many reasons why a direct connection between peers may not work.

ICE has to get past firewalls that could prevent connections from being opened, discover a public address for the device when (as in most situations) it does not have a public IP address of its own, and relay data through a server if the router does not allow a direct connection between peers. ICE uses STUN servers (to discover the public address) and TURN servers (to relay data) to accomplish this.
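Each address ICE discovers is described by a candidate line whose `typ` field says how it was gathered. The following sketch reads that field; `candidateType` is a hypothetical helper, and the sample candidates are illustrative strings built from documentation-only IP ranges.

```dart
// Sketch: read the "typ" field of an ICE candidate line.
//   host  -> address of a local network interface
//   srflx -> public address discovered through a STUN server
//   prflx -> peer reflexive, discovered during connectivity checks
//   relay -> address allocated on a TURN server
String? candidateType(String candidate) {
  final tokens = candidate.trim().split(RegExp(r'\s+'));
  final typIndex = tokens.indexOf('typ');
  if (typIndex == -1 || typIndex + 1 >= tokens.length) return null;
  return tokens[typIndex + 1];
}

// Illustrative candidate strings (assumed values).
const hostCandidate = 'candidate:1 1 udp 2122260223 192.0.2.10 54321 typ host';
const srflxCandidate = 'candidate:2 1 udp 1686052607 203.0.113.7 54321 '
    'typ srflx raddr 192.0.2.10 rport 54321';
const relayCandidate = 'candidate:3 1 udp 41885439 198.51.100.5 3478 '
    'typ relay raddr 203.0.113.7 rport 54321';
```

Logging the candidate types during a call is a quick way to see whether a connection is direct (`host` or `srflx`) or has to go through a TURN relay.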

Let's start the Flutter-WebRTC project

First of all, we need to set up the signalling server.

- Clone the Flutter-WebRTC repository

git clone https://github.com/videosdk-live/webrtc.git

- Go to webrtc-signalling-server and install the signalling server's dependencies

cd webrtc-signalling-server && npm install

- Start the signalling server

npm run start

- Flutter-WebRTC Project Structure

lib
├── main.dart
├── services
│   └── signalling.service.dart
└── screens
    ├── join_screen.dart
    └── call_screen.dart

7 Steps to Build a Flutter-WebRTC Video Calling App

Step 1: Create the Flutter-WebRTC app project

flutter create flutter_webrtc_app

Step 2: Add project dependencies for the Flutter-WebRTC app

flutter pub add flutter_webrtc socket_io_client

Step 3: Flutter-WebRTC setup for iOS and Android

- Flutter-WebRTC iOS setup: add the following lines to your Info.plist file, located at /ios/Runner/Info.plist.

<key>NSCameraUsageDescription</key>
<string>$(PRODUCT_NAME) Camera Usage!</string>
<key>NSMicrophoneUsageDescription</key>
<string>$(PRODUCT_NAME) Microphone Usage!</string>

These lines allow your app to access the camera and microphone.

Note: Refer, if you have trouble with iOS setup.

- Flutter-WebRTC Android setup: add the following lines to AndroidManifest.xml, located at /android/app/src/main/AndroidManifest.xml.

<uses-feature android:name="android.hardware.camera" />
<uses-feature android:name="android.hardware.camera.autofocus" />
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
<uses-permission android:name="android.permission.CHANGE_NETWORK_STATE" />
<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />
<uses-permission android:name="android.permission.BLUETOOTH" android:maxSdkVersion="30" />
<uses-permission android:name="android.permission.BLUETOOTH_ADMIN" android:maxSdkVersion="30" />

If necessary, increase the minSdkVersion in defaultConfig to 23 in the app-level build.gradle file.

Step 4: Create SignallingService for Flutter-WebRTC App

SignallingService handles communication with the signalling server. Here, we use the socket.io client to connect to the socket.io server, which is basically a WebSocket server.

import 'dart:developer';

import 'package:socket_io_client/socket_io_client.dart';

class SignallingService {
  // instance of Socket
  Socket? socket;

  // private constructor: the class is used as a singleton
  SignallingService._();
  static final instance = SignallingService._();

  void init({required String websocketUrl, required String selfCallerID}) {
    // init Socket, passing our caller ID as a query parameter
    socket = io(websocketUrl, {
      "transports": ['websocket'],
      "query": {"callerId": selfCallerID}
    });

    // listen onConnect event
    socket!.onConnect((data) {
      log("Socket connected !!");
    });

    // listen onConnectError event
    socket!.onConnectError((data) {
      log("Connect Error $data");
    });

    // connect socket
    socket!.connect();
  }
}

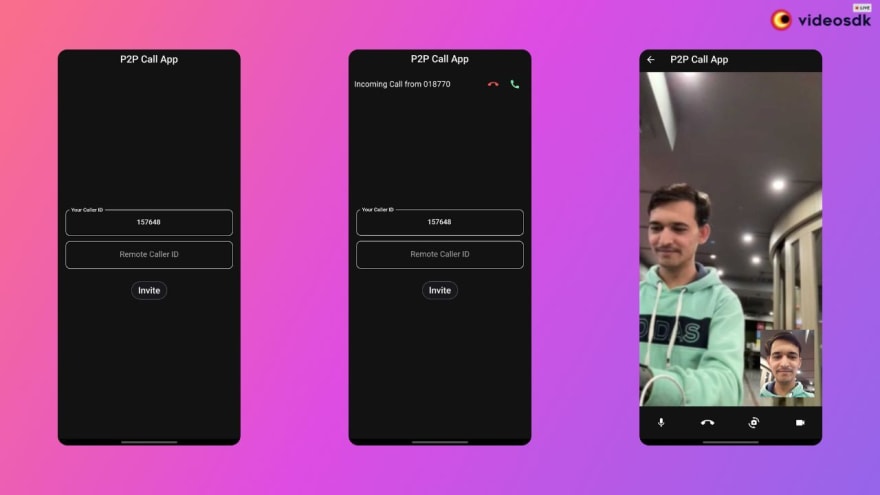

Step 5: Create JoinScreen for Flutter-WebRTC App

JoinScreen is a StatefulWidget that lets the user join a session. From this screen, the user can start a session, or join one when another user calls them using their caller ID.

import 'package:flutter/material.dart';

import 'call_screen.dart';
import '../services/signalling.service.dart';

class JoinScreen extends StatefulWidget {
  final String selfCallerId;

  const JoinScreen({super.key, required this.selfCallerId});

  @override
  State<JoinScreen> createState() => _JoinScreenState();
}

class _JoinScreenState extends State<JoinScreen> {
  dynamic incomingSDPOffer;
  final remoteCallerIdTextEditingController = TextEditingController();

  @override
  void initState() {
    super.initState();

    // listen for incoming video call
    SignallingService.instance.socket!.on("newCall", (data) {
      if (mounted) {
        // set SDP Offer of incoming call
        setState(() => incomingSDPOffer = data);
      }
    });
  }

  // join Call
  void _joinCall({
    required String callerId,
    required String calleeId,
    dynamic offer,
  }) {
    Navigator.push(
      context,
      MaterialPageRoute(
        builder: (_) => CallScreen(
          callerId: callerId,
          calleeId: calleeId,
          offer: offer,
        ),
      ),
    );
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      backgroundColor: Theme.of(context).colorScheme.background,
      appBar: AppBar(
        centerTitle: true,
        title: const Text("P2P Call App"),
      ),
      body: SafeArea(
        child: Stack(
          children: [
            Center(
              child: SizedBox(
                width: MediaQuery.of(context).size.width * 0.9,
                child: Column(
                  mainAxisAlignment: MainAxisAlignment.center,
                  children: [
                    TextField(
                      controller: TextEditingController(
                        text: widget.selfCallerId,
                      ),
                      readOnly: true,
                      textAlign: TextAlign.center,
                      enableInteractiveSelection: false,
                      decoration: InputDecoration(
                        labelText: "Your Caller ID",
                        border: OutlineInputBorder(
                          borderRadius: BorderRadius.circular(10.0),
                        ),
                      ),
                    ),
                    const SizedBox(height: 12),
                    TextField(
                      controller: remoteCallerIdTextEditingController,
                      textAlign: TextAlign.center,
                      decoration: InputDecoration(
                        hintText: "Remote Caller ID",
                        alignLabelWithHint: true,
                        border: OutlineInputBorder(
                          borderRadius: BorderRadius.circular(10.0),
                        ),
                      ),
                    ),
                    const SizedBox(height: 24),
                    ElevatedButton(
                      style: ElevatedButton.styleFrom(
                        side: const BorderSide(color: Colors.white30),
                      ),
                      child: const Text(
                        "Invite",
                        style: TextStyle(
                          fontSize: 18,
                          color: Colors.white,
                        ),
                      ),
                      onPressed: () {
                        _joinCall(
                          callerId: widget.selfCallerId,
                          calleeId: remoteCallerIdTextEditingController.text,
                        );
                      },
                    ),
                  ],
                ),
              ),
            ),
            // banner shown while an incoming call is waiting
            if (incomingSDPOffer != null)
              Positioned(
                child: ListTile(
                  title: Text(
                    "Incoming Call from ${incomingSDPOffer["callerId"]}",
                  ),
                  trailing: Row(
                    mainAxisSize: MainAxisSize.min,
                    children: [
                      IconButton(
                        icon: const Icon(Icons.call_end),
                        color: Colors.redAccent,
                        onPressed: () {
                          setState(() => incomingSDPOffer = null);
                        },
                      ),
                      IconButton(
                        icon: const Icon(Icons.call),
                        color: Colors.greenAccent,
                        onPressed: () {
                          _joinCall(
                            callerId: incomingSDPOffer["callerId"]!,
                            calleeId: widget.selfCallerId,
                            offer: incomingSDPOffer["sdpOffer"],
                          );
                        },
                      )
                    ],
                  ),
                ),
              ),
          ],
        ),
      ),
    );
  }
}
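The Invite button above passes whatever is in the text field straight to the call. Since the caller IDs in this tutorial are generated as zero-padded six-digit strings (see Step 7), a small check before calling could avoid pointless invites. This is only a sketch: `isValidCalleeId` is a hypothetical helper and not part of the original project.

```dart
// Hypothetical helper: caller IDs in this tutorial are six-digit strings,
// so any other input cannot match a registered peer.
final RegExp _sixDigits = RegExp(r'^\d{6}$');

bool isValidCalleeId(String input, {required String selfCallerId}) {
  final id = input.trim();
  if (!_sixDigits.hasMatch(id)) return false;
  // Inviting your own ID would never reach another peer.
  return id != selfCallerId;
}
```

In `onPressed`, one option would be to call `_joinCall` only when `isValidCalleeId(remoteCallerIdTextEditingController.text, selfCallerId: widget.selfCallerId)` returns true, and show a hint otherwise.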

Step 6: Create CallScreen for Flutter-WebRTC App

In CallScreen, we show the user's local stream, the other user's remote stream, and controls such as toggleCamera, toggleMic, switchCamera, and endCall. Here, we establish an RTCPeerConnection between the peers, create the SDP offer and SDP answer, and transmit ICE candidate data over the signalling server (socket.io).

import 'package:flutter/material.dart';
import 'package:flutter_webrtc/flutter_webrtc.dart';

import '../services/signalling.service.dart';

class CallScreen extends StatefulWidget {
  final String callerId, calleeId;
  final dynamic offer;

  const CallScreen({
    super.key,
    this.offer,
    required this.callerId,
    required this.calleeId,
  });

  @override
  State<CallScreen> createState() => _CallScreenState();
}

class _CallScreenState extends State<CallScreen> {
  // socket instance
  final socket = SignallingService.instance.socket;

  // videoRenderer for localPeer
  final _localRTCVideoRenderer = RTCVideoRenderer();

  // videoRenderer for remotePeer
  final _remoteRTCVideoRenderer = RTCVideoRenderer();

  // mediaStream for localPeer
  MediaStream? _localStream;

  // RTC peer connection
  RTCPeerConnection? _rtcPeerConnection;

  // list of rtcCandidates to be sent over signalling
  List<RTCIceCandidate> rtcIceCadidates = [];

  // media status
  bool isAudioOn = true, isVideoOn = true, isFrontCameraSelected = true;

  @override
  void initState() {
    // super.initState() runs before any other setup
    super.initState();

    // initializing renderers
    _localRTCVideoRenderer.initialize();
    _remoteRTCVideoRenderer.initialize();

    // setup Peer Connection
    _setupPeerConnection();
  }

  // guard setState so late async callbacks do not touch a disposed widget
  @override
  void setState(fn) {
    if (mounted) {
      super.setState(fn);
    }
  }

  Future<void> _setupPeerConnection() async {
    // create peer connection
    _rtcPeerConnection = await createPeerConnection({
      'iceServers': [
        {
          'urls': [
            'stun:stun1.l.google.com:19302',
            'stun:stun2.l.google.com:19302'
          ]
        }
      ]
    });

    // listen for remotePeer mediaTrack event
    _rtcPeerConnection!.onTrack = (event) {
      _remoteRTCVideoRenderer.srcObject = event.streams[0];
      setState(() {});
    };

    // get localStream
    _localStream = await navigator.mediaDevices.getUserMedia({
      'audio': isAudioOn,
      'video': isVideoOn
          ? {'facingMode': isFrontCameraSelected ? 'user' : 'environment'}
          : false,
    });

    // add mediaTrack to peerConnection
    _localStream!.getTracks().forEach((track) {
      _rtcPeerConnection!.addTrack(track, _localStream!);
    });

    // set source for local video renderer
    _localRTCVideoRenderer.srcObject = _localStream;
    setState(() {});

    // for Incoming call
    if (widget.offer != null) {
      // listen for Remote IceCandidate
      socket!.on("IceCandidate", (data) {
        String candidate = data["iceCandidate"]["candidate"];
        String sdpMid = data["iceCandidate"]["id"];
        int sdpMLineIndex = data["iceCandidate"]["label"];

        // add iceCandidate
        _rtcPeerConnection!.addCandidate(RTCIceCandidate(
          candidate,
          sdpMid,
          sdpMLineIndex,
        ));
      });

      // set SDP offer as remoteDescription for peerConnection
      await _rtcPeerConnection!.setRemoteDescription(
        RTCSessionDescription(widget.offer["sdp"], widget.offer["type"]),
      );

      // create SDP answer
      RTCSessionDescription answer = await _rtcPeerConnection!.createAnswer();

      // set SDP answer as localDescription for peerConnection
      // (awaited so the description is applied before the answer is sent)
      await _rtcPeerConnection!.setLocalDescription(answer);

      // send SDP answer to remote peer over signalling
      socket!.emit("answerCall", {
        "callerId": widget.callerId,
        "sdpAnswer": answer.toMap(),
      });
    }
    // for Outgoing Call
    else {
      // listen for local iceCandidate and add it to the list of IceCandidate
      _rtcPeerConnection!.onIceCandidate =
          (RTCIceCandidate candidate) => rtcIceCadidates.add(candidate);

      // when call is accepted by remote peer
      socket!.on("callAnswered", (data) async {
        // set SDP answer as remoteDescription for peerConnection
        await _rtcPeerConnection!.setRemoteDescription(
          RTCSessionDescription(
            data["sdpAnswer"]["sdp"],
            data["sdpAnswer"]["type"],
          ),
        );

        // send iceCandidate generated to remote peer over signalling
        for (RTCIceCandidate candidate in rtcIceCadidates) {
          socket!.emit("IceCandidate", {
            "calleeId": widget.calleeId,
            "iceCandidate": {
              "id": candidate.sdpMid,
              "label": candidate.sdpMLineIndex,
              "candidate": candidate.candidate
            }
          });
        }
      });

      // create SDP Offer
      RTCSessionDescription offer = await _rtcPeerConnection!.createOffer();

      // set SDP offer as localDescription for peerConnection
      await _rtcPeerConnection!.setLocalDescription(offer);

      // make a call to remote peer over signalling
      socket!.emit('makeCall', {
        "calleeId": widget.calleeId,
        "sdpOffer": offer.toMap(),
      });
    }
  }

  void _leaveCall() {
    Navigator.pop(context);
  }

  void _toggleMic() {
    // change status
    isAudioOn = !isAudioOn;

    // enable or disable audio track
    _localStream?.getAudioTracks().forEach((track) {
      track.enabled = isAudioOn;
    });
    setState(() {});
  }

  void _toggleCamera() {
    // change status
    isVideoOn = !isVideoOn;

    // enable or disable video track
    _localStream?.getVideoTracks().forEach((track) {
      track.enabled = isVideoOn;
    });
    setState(() {});
  }

  void _switchCamera() {
    // change status
    isFrontCameraSelected = !isFrontCameraSelected;

    // switch camera
    _localStream?.getVideoTracks().forEach((track) {
      // ignore: deprecated_member_use
      track.switchCamera();
    });
    setState(() {});
  }

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      backgroundColor: Theme.of(context).colorScheme.background,
      appBar: AppBar(
        title: const Text("P2P Call App"),
      ),
      body: SafeArea(
        child: Column(
          children: [
            Expanded(
              child: Stack(children: [
                // remote video fills the available space
                RTCVideoView(
                  _remoteRTCVideoRenderer,
                  objectFit: RTCVideoViewObjectFit.RTCVideoViewObjectFitCover,
                ),
                // local preview in the bottom-right corner
                Positioned(
                  right: 20,
                  bottom: 20,
                  child: SizedBox(
                    height: 150,
                    width: 120,
                    child: RTCVideoView(
                      _localRTCVideoRenderer,
                      mirror: isFrontCameraSelected,
                      objectFit:
                          RTCVideoViewObjectFit.RTCVideoViewObjectFitCover,
                    ),
                  ),
                )
              ]),
            ),
            Padding(
              padding: const EdgeInsets.symmetric(vertical: 12),
              child: Row(
                mainAxisAlignment: MainAxisAlignment.spaceAround,
                children: [
                  IconButton(
                    icon: Icon(isAudioOn ? Icons.mic : Icons.mic_off),
                    onPressed: _toggleMic,
                  ),
                  IconButton(
                    icon: const Icon(Icons.call_end),
                    iconSize: 30,
                    onPressed: _leaveCall,
                  ),
                  IconButton(
                    icon: const Icon(Icons.cameraswitch),
                    onPressed: _switchCamera,
                  ),
                  IconButton(
                    icon: Icon(isVideoOn ? Icons.videocam : Icons.videocam_off),
                    onPressed: _toggleCamera,
                  ),
                ],
              ),
            ),
          ],
        ),
      ),
    );
  }

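  @override
  void dispose() {
    // stop listening for call events so handlers from a finished call
    // do not fire on a disposed screen or pile up across calls
    socket?.off("IceCandidate");
    socket?.off("callAnswered");

    // release renderers, local media and the peer connection
    _localRTCVideoRenderer.dispose();
    _remoteRTCVideoRenderer.dispose();
    _localStream?.dispose();
    _rtcPeerConnection?.dispose();
    super.dispose();
  }
}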
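On the caller side, the listing above collects local ICE candidates in a list and sends them once the answer arrives. As written, candidates gathered after that moment appear to stay in the list unsent. The sketch below isolates the buffering idea in plain Dart and also forwards late candidates immediately. `IceCandidateBuffer` and `Emit` are hypothetical names; the event name and payload shape mirror the ones used in this tutorial.

```dart
// Sketch of caller-side candidate buffering: hold local ICE candidates until
// the remote answer has been applied, then send them in order. Candidates
// that arrive later are sent right away.
typedef Emit = void Function(String event, Map<String, dynamic> payload);

class IceCandidateBuffer {
  final String calleeId;
  final Emit emit;
  final List<Map<String, dynamic>> _pending = [];
  bool _answered = false;

  IceCandidateBuffer({required this.calleeId, required this.emit});

  // Call this from onIceCandidate with the candidate's fields.
  void add({
    required String? sdpMid,
    required int? sdpMLineIndex,
    required String? candidate,
  }) {
    final payload = <String, dynamic>{
      'calleeId': calleeId,
      'iceCandidate': {
        'id': sdpMid,
        'label': sdpMLineIndex,
        'candidate': candidate,
      },
    };
    if (_answered) {
      emit('IceCandidate', payload);
    } else {
      _pending.add(payload);
    }
  }

  // Call this once the remote answer has been set as the remote description.
  void markAnswered() {
    _answered = true;
    for (final payload in _pending) {
      emit('IceCandidate', payload);
    }
    _pending.clear();
  }
}
```

In CallScreen, `emit` could simply be `socket!.emit`, with `add` called from `onIceCandidate` and `markAnswered` called at the end of the `callAnswered` handler.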

Step 7: Modify code in main.dart

We will initialise SignallingService with websocketUrl (the signalling server URL) and create a random callerId for the user, which is passed to JoinScreen.

import 'dart:math';

import 'package:flutter/material.dart';

import 'screens/join_screen.dart';
import 'services/signalling.service.dart';

void main() {
  // start videoCall app
  runApp(VideoCallApp());
}

class VideoCallApp extends StatelessWidget {
  VideoCallApp({super.key});

  // signalling server url (replace with the URL of your own server)
  final String websocketUrl = "WEB_SOCKET_SERVER_URL";

  // generate callerID of local user
  final String selfCallerID =
      Random().nextInt(999999).toString().padLeft(6, '0');

  @override
  Widget build(BuildContext context) {
    // init signalling service
    SignallingService.instance.init(
      websocketUrl: websocketUrl,
      selfCallerID: selfCallerID,
    );

    // return material app
    return MaterialApp(
      darkTheme: ThemeData.dark().copyWith(
        useMaterial3: true,
        colorScheme: const ColorScheme.dark(),
      ),
      themeMode: ThemeMode.dark,
      home: JoinScreen(selfCallerId: selfCallerID),
    );
  }
}
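The caller ID logic is small enough to pull into a function and try on its own. The sketch below is an assumption-labelled variant, not the project's code: `generateCallerId` is a hypothetical name, and it uses `nextInt(1000000)` because `nextInt` excludes its upper bound, so the full range 000000 to 999999 is covered.

```dart
import 'dart:math';

// Sketch: generate a zero-padded six-digit caller ID.
// Random.nextInt(max) returns values from 0 to max - 1, so 1000000 is
// needed to include 999999. An optional Random can be injected for tests.
String generateCallerId([Random? random]) {
  final rng = random ?? Random();
  return rng.nextInt(1000000).toString().padLeft(6, '0');
}
```

Random IDs like this are convenient for a demo, but two users can end up with the same ID, so a real app would more likely use IDs assigned by a backend.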

Wohoo!! Finally we did it.

Problems with P2P WebRTC

- Quality of Service: quality decreases as the number of peer connections increases.

- Client-Side Computation: low-end devices cannot synchronise multiple incoming streams.

- Scalability: as the number of peers increases, it becomes very difficult for a client to handle the computation and network load, since it has to upload its media to every other peer at the same time.
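A little arithmetic shows why a pure P2P (full-mesh) call scales poorly. The sketch below assumes every participant sends one stream to every other participant. The function names are hypothetical, and the bitrate in the usage note is an illustrative figure, not a measurement.

```dart
// Back-of-the-envelope numbers for a full-mesh (pure P2P) call, assuming
// each participant sends its media to every other participant.

// Streams one peer has to upload: one per other participant.
int meshUploadsPerPeer(int peers) => peers < 2 ? 0 : peers - 1;

// Total peer connections in the mesh: n * (n - 1) / 2.
int meshConnections(int peers) => peers < 2 ? 0 : peers * (peers - 1) ~/ 2;

// Upload bandwidth one peer needs, given a per-stream bitrate in kbps.
int meshUploadKbps(int peers, int streamKbps) =>
    meshUploadsPerPeer(peers) * streamKbps;
```

For example, with 4 participants and an assumed 1000 kbps per stream, each peer uploads 3 streams (3000 kbps) and the mesh holds 6 connections; with 10 participants that grows to 9 uploads per peer and 45 connections.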

Solutions

- MCU (Multipoint Control Unit)

- SFU (Selective Forwarding Unit)

- Video SDK

Integrate With Video SDK

Video SDK is a developer-friendly platform for live video and audio SDKs. It makes integrating live video and audio into your Flutter project considerably easier and faster. You can have a branded, customised, and programmable call up and running with only a few lines of code.

In addition, Video SDK provides extensive customisation options, giving you total control over layout and permissions. Plugins can be used to improve the experience, and end-to-end call logs and quality data can be accessed directly from your Video SDK dashboard or via REST APIs. This data enables developers to debug issues that arise during a call and improve their integrations for the best possible customer experience.

Alternatively, you can follow the quick start guide to create a demo Flutter project with the Video SDK, or start with the code sample.
