A marketing friend once looked at my screen while I was coding and remarked, “That’s so scary looking!” This got me thinking about how we got to this strange-looking multi-colored-words-on-black aesthetic. So here’s a brief history of the developer experience.

Origins

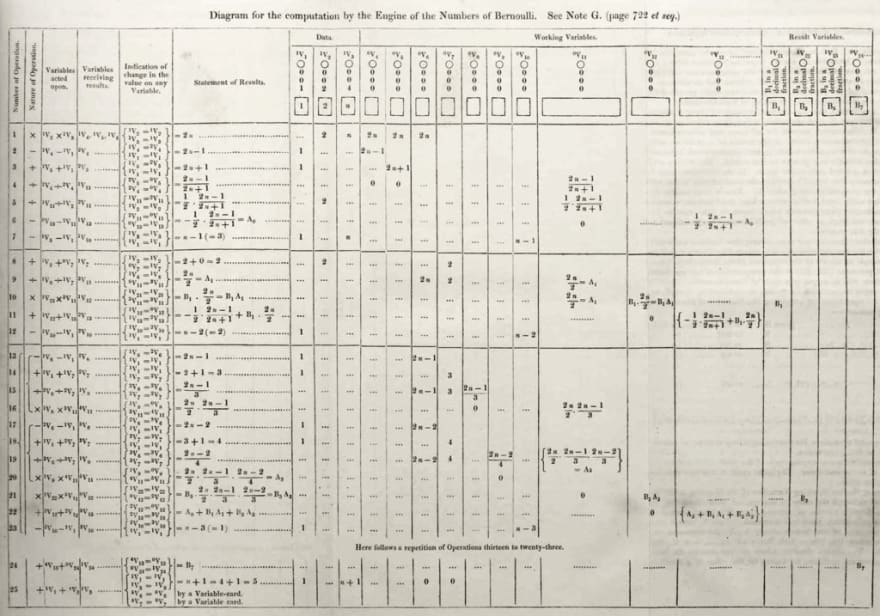

Ada Lovelace is credited with writing the first computer program, published in 1843. Because the machine it was written for was never built, the program was never run. But it’s fascinating to see how she represented the algorithm on paper, having no precedent to work from.

The program was written for Charles Babbage’s Analytical Engine to compute Bernoulli numbers, which are important in number theory.

Wires and literal bugs (1940s)

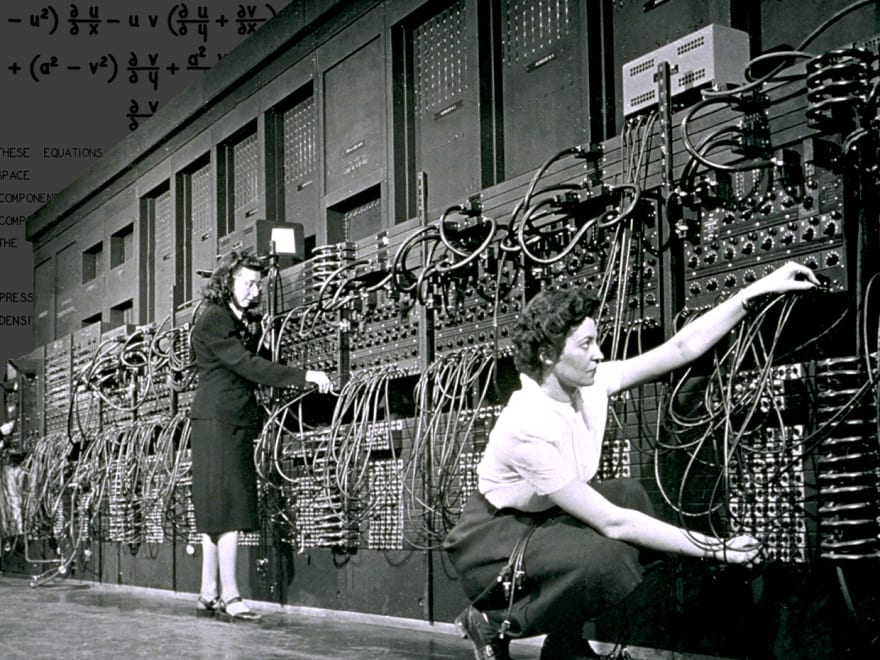

The very first computers were built in the 1940s and were programmed by literally connecting wires, turning dials, and flipping switches. “Programming” was a special case of “wiring.” There was no effort to make programming easier for humans. It was enough that these machines could exist at all!

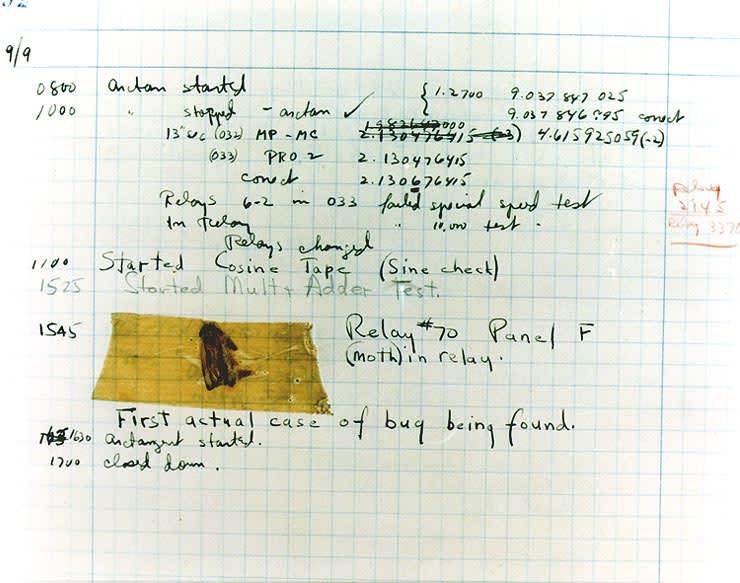

This era also brought us the concept of a program “bug.” The first one was a literal moth that shorted out a relay. Grace Hopper recorded this occurrence, along with the moth, in her log book.

Assembly Language (1950s)

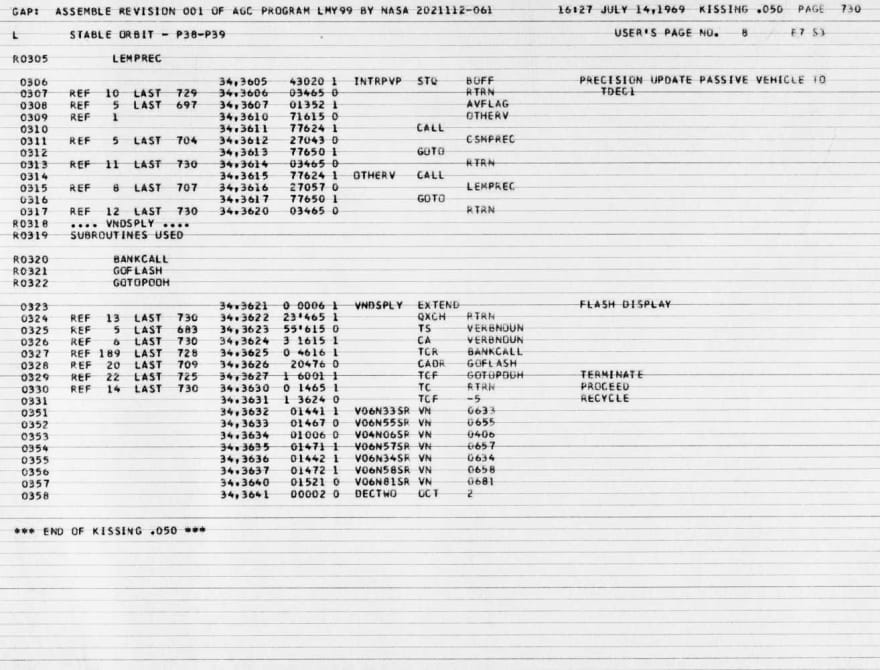

The first step toward a human affordance was assembly language, which allowed programmers to use human-sounding commands like “ADD” or “JUMP” instead of remembering numeric codes like “523” or “10011011”. But this was just a thin gloss, so the programmer still had to know the computer’s architecture inside and out.
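To see how thin that gloss is, here is a minimal sketch of an assembler for an imaginary machine. The opcode numbers and the four-instruction set are made up for illustration and do not belong to any real computer; the point is only that each mnemonic maps one-to-one onto a numeric code.

```python
# Hypothetical opcode table for an imaginary machine (illustration only).
OPCODES = {"LOAD": 1, "ADD": 2, "STORE": 3, "JUMP": 4}


def assemble(source: str) -> list:
    """Translate 'MNEMONIC operand' lines into (opcode, operand) pairs."""
    program = []
    for line in source.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        mnemonic, operand = line.split()
        program.append((OPCODES[mnemonic], int(operand)))
    return program


listing = """
LOAD 10    # fetch the value at address 10
ADD 11     # add the value at address 11
STORE 12   # write the sum to address 12
JUMP 0     # start over
"""
machine_code = assemble(listing)
```

The assembler is little more than a dictionary lookup: the programmer still has to decide which addresses hold which values, just as before.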

The code for the Apollo space missions is a good example of assembly language, even though it was written in the 1960s. Here is a printout of part of that program, interestingly named kissing!

INTRPVP STQ BOFF # PRECISION UPDATE PASSIVE VEHICLE

RTRN # TDEC1

AVFLAG

OTHERV

CALL

CSMPREC

GOTO

RTRN

OTHERV CALL

LEMPREC

GOTO

RTRN

Punch cards and high-level languages (1950s)

Languages like Fortran and COBOL used quasi-English keywords like “if”, “not”, and “while”, and could be run on different computers as long as there was a suitable compiler.

These first-generation “high-level” languages were all written in the same way: on punched cards. Each card corresponded to one line of code, usually 80 characters across.

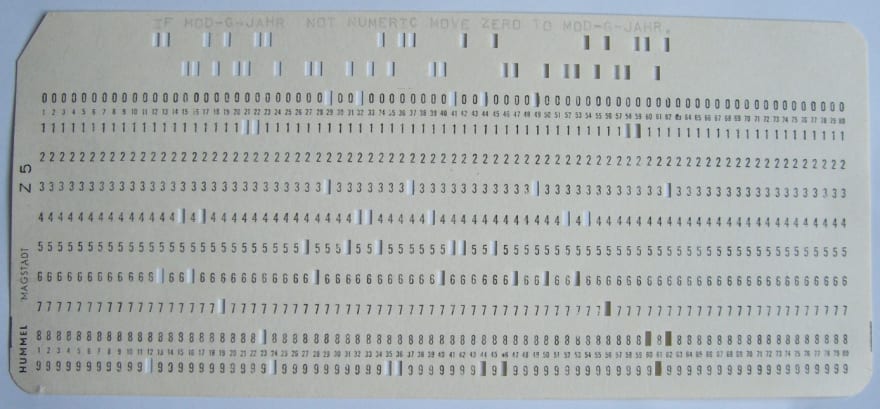

The human-readable code is at the top of the card and reads:

IF MOD-G-JAHR NOT NUMERIC MOVE ZERO TO MOD-G-JAHR.

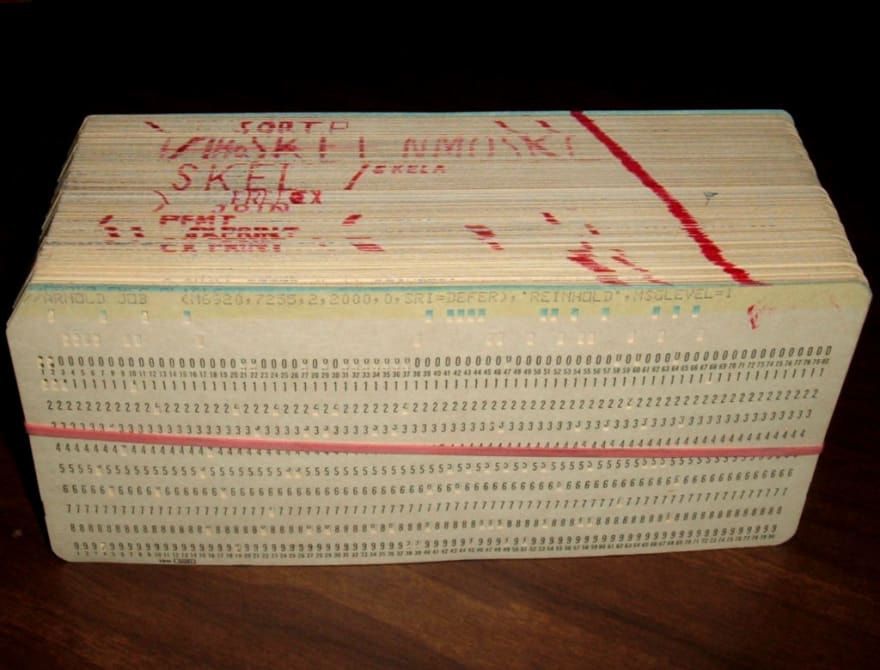

A program consisted of a deck of cards. Editing a program consisted of punching new cards, or re-ordering the deck.

As the photo of the card deck shows, “comments” would be written on the edge of the deck to mark subroutines. The red angled line was a crude way to get the cards back in order if the deck was dropped. You can also see old red marks revealing sections that had been re-ordered.

Unlike in modern languages, there was no indentation of lines to represent blocks. Indentation makes no sense in a world where you see only one line at a time! Fortran instead used fixed column positions to distinguish comments (a “C” in column 1), labels (columns 1-5), continuation lines (any mark in column 6), and statements (columns 7-72).

C AREA OF A TRIANGLE - HERON'S FORMULA

C INPUT - CARD READER UNIT 5, INTEGER INPUT

C OUTPUT -

C INTEGER VARIABLES START WITH I,J,K,L,M OR N

READ(5,501) IA,IB,IC

501 FORMAT(3I5)

IF(IA.EQ.0 .OR. IB.EQ.0 .OR. IC.EQ.0) STOP 1

S = (IA + IB + IC) / 2.0

AREA = SQRT( S * (S - IA) * (S - IB) * (S - IC) )

WRITE(6,601) IA,IB,IC,AREA

601 FORMAT(4H A= ,I5,5H  B= ,I5,5H  C= ,I5,8H  AREA= ,F10.2,

$13H SQUARE UNITS)

STOP

END
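The column rules are easier to see in code. The following is a rough sketch, assuming the usual fixed-form layout (a “C” in column 1 for comments, a label in columns 1-5, a continuation mark in column 6, and the statement in columns 7-72). The function name `split_card` and the dictionary keys are invented for this illustration.

```python
def split_card(card: str) -> dict:
    """Split one fixed-form Fortran card image into its column fields."""
    card = card.ljust(80)  # pad to the full 80 columns of a punched card
    if card[0] == "C":
        return {"kind": "comment", "text": card[1:].strip()}
    return {
        "kind": "statement",
        "label": card[0:5].strip(),            # columns 1-5
        "continuation": card[5] not in " 0",   # column 6
        "text": card[6:72].strip(),            # columns 7-72
    }


# A few card images with their leading columns restored.
cards = [
    "C AREA OF A TRIANGLE - HERON'S FORMULA",
    "  501 FORMAT(3I5)",
    "      S = (IA + IB + IC) / 2.0",
    "     $13H SQUARE UNITS)",
]
fields = [split_card(card) for card in cards]
```

Because every field lives at a fixed position, the compiler never needed to tokenize whitespace to find a label: it only had to look at the right columns.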

REPL and line numbers (1960s)

The BASIC computer language was designed to be even easier to use. It was developed in parallel with time-sharing, which allowed many users to interact with a single computer via teletype without needing to punch cards and load them. For the first time, users could write, edit, and run programs directly in computer memory! This was an early form of the REPL, or “read–eval–print loop”.

The standards and protocols developed for this live on as “TTY”, still used in developer terminals to this day.

Note the innovation of line numbers, visible in the example below. Compared to manually sorting punch cards, this must have seemed like a great leap forward!

This example from the 1968 manual averages the numbers that are input:

5 LET S = 0

10 MAT INPUT V

20 LET N = NUM

30 IF N = 0 THEN 99

40 FOR I = 1 TO N

45 LET S = S + V(I)

50 NEXT I

60 PRINT S/N

70 GO TO 5

99 END
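Line numbers did the job that the physical order of the card deck used to do. Here is a small sketch of the idea, assuming the behavior typical of BASIC systems: a typed line is filed under its number, retyping a number replaces that line, and a bare number deletes it. The names `program`, `enter`, and `listing` are invented for this illustration.

```python
program = {}  # line number -> statement text


def enter(line: str) -> None:
    """Store, replace, or delete a line, the way a BASIC prompt would."""
    number, _, statement = line.strip().partition(" ")
    if statement:
        program[int(number)] = statement
    else:
        program.pop(int(number), None)  # a bare number deletes that line


def listing() -> list:
    """Return the program in line-number order, whatever the entry order."""
    return [f"{n} {program[n]}" for n in sorted(program)]


enter("10 MAT INPUT V")
enter("60 PRINT S/N")
enter("5 LET S = 0")            # typed third, but sorts first
enter("45 LET S = S + V(I)")    # slots in between 10 and 60
enter("60 PRINT S / N")         # retyping a number replaces the line
```

This is also why the manual's example numbers its lines 5, 10, 20 and so on: leaving gaps made room to insert a line like 45 later without renumbering everything.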

Visual editing (1970s)

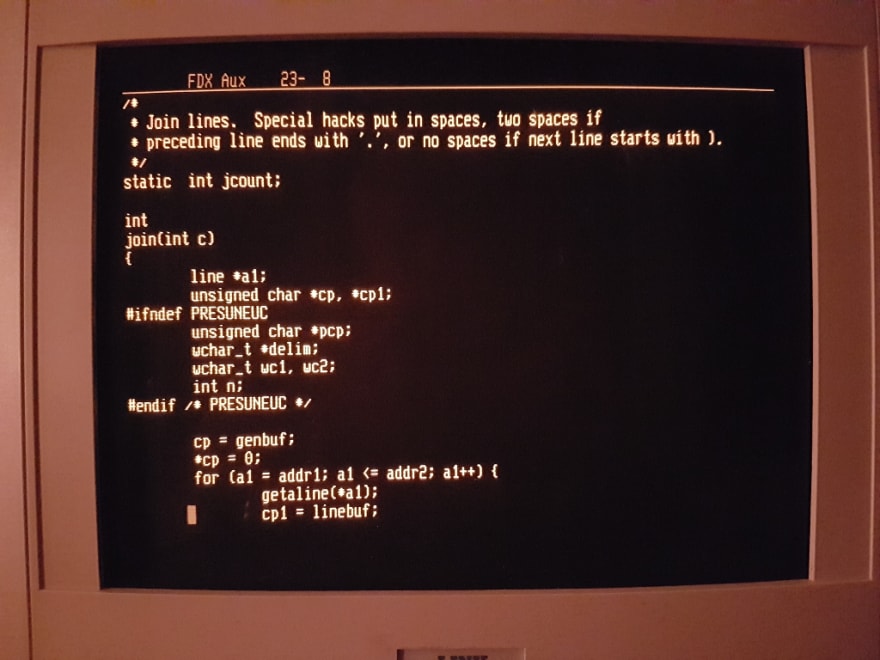

The advent of cheap CRTs meant that developers could stop wasting paper and write their programs on a screen. Code editors vi and emacs were created, beginning the long developer tradition of arguing over the best editor. (vim, still popular today, is an updated version of vi written in the 1990s.)

New languages like C took advantage of this by introducing indentation. In the image above, the lines after join(int c) are indented, to show that they are within the scope of the function. The lines after the for(a1…) command are likewise indented further. This use of visual space to denote semantic meaning was a real innovation, and a true affordance to the way human vision can spot visual alignment.

This innovation also kicked off the unending “religious wars” of tabs-vs-spaces, 2-4-8 space indenting, and “which line gets the brace?”

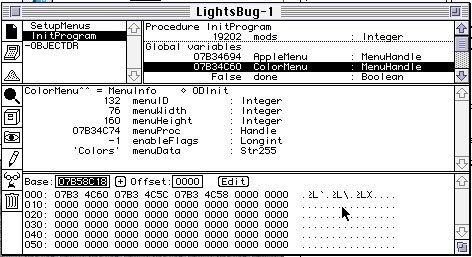

Syntax highlighting, auto-formatting and visual debugging (1980s)

Mac Pascal was the first editor to check for syntax errors while you were editing, rather than waiting until you compiled the program.

It was also the first editor to do syntax highlighting and auto-formatting. Because the original Mac could not show colors, highlighting was done with bold and italic.

A few years later, the company behind Mac Pascal gave the world its first visual debugger.

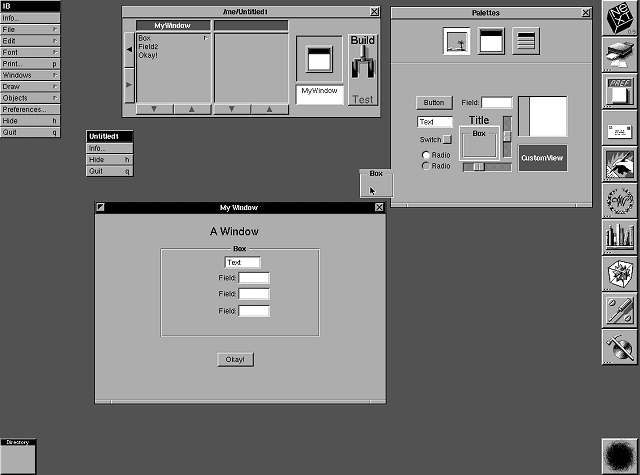

Interface builder, view source, and search (1990s)

After being kicked out of Apple, Steve Jobs founded NeXT. The NeXTSTEP operating system was groundbreaking for developers in that it separated interface development from code-writing. You could now add a button to a window by merely dragging it from a palette, rather than writing lines of code. The program that made this possible was called Interface Builder, and it lives on today in Apple’s Xcode, which is used to build iOS apps.

The twentieth century ended with the advent of the web. This was set to revolutionize developer experience again.

The web browser democratized developer learning with “View Source”: anyone curious how a web page was coded could find out in an instant! Suddenly everyone had their own HTML and JavaScript development environment, and every new web page was a new example to learn from!

1998 was the twilight of the century, and the year Google was founded. It would become the unsung cornerstone of developer experience up to today, as we copy-paste our error messages and “How do I quit vim?” into its search box. And that’s a topic for another post!
