Introduction to Windows Azure Storage

Windows Azure Storage (WAS) is Microsoft's answer to the growing demand for durable, highly available cloud storage. It lets customers store seemingly unlimited amounts of data for any length of time and access that data from anywhere at any time. It offers three data abstractions: Blobs for files, Tables for structured data, and Queues for message delivery.

A core strength of WAS is data resilience. It replicates data both locally, within a data center, and geographically, across separate locations, so data survives hardware failures and even disasters that take out a whole site. WAS is also built to scale: it exposes a single global namespace, partitioned behind the scenes, through which data can be stored and accessed consistently from anywhere in the world. Its other key properties are strong consistency, multi-tenancy, and low storage cost.

For a deeper look at WAS's inner workings, see the SOSP 2011 paper "Windows Azure Storage: A Highly Available Cloud Storage Service with Strong Consistency".

An Overview of WAS's Architecture

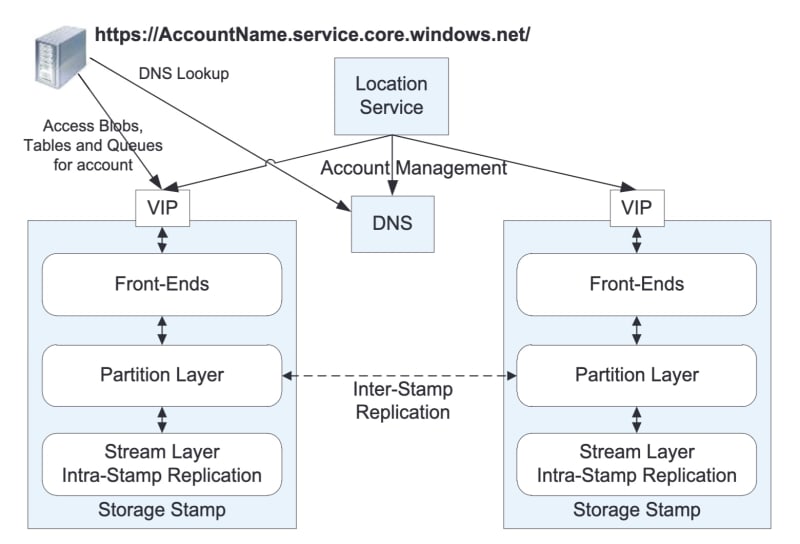

Within Azure, a customer can create one or more Storage Accounts, each identified by a unique account name. When an account is created, the Location Service (LS) assigns it to a primary Storage Stamp and updates DNS so that AccountName.service.core.windows.net resolves to that stamp's Virtual IP (VIP). From then on, clients send their storage requests directly to that stamp.
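The account-to-stamp mapping can be sketched in a few lines of Python. This is a toy model, not Azure's code: `LocationService`, `create_account`, and `resolve` are hypothetical names, the in-memory `dns` dict stands in for real DNS records, and placing each new account on the stamp with the fewest accounts is an assumed policy.

```python
SERVICES = ("blob", "table", "queue")


class LocationService:
    """Hypothetical Location Service: assigns each new account to a primary
    stamp and publishes DNS records that point at that stamp's VIP."""

    def __init__(self, stamp_vips: dict[str, str]) -> None:
        self.stamp_vips = stamp_vips  # stamp name -> VIP
        self.account_stamp: dict[str, str] = {}  # account name -> primary stamp
        self.dns: dict[str, str] = {}  # hostname -> VIP

    def create_account(self, account_name: str) -> str:
        if account_name in self.account_stamp:
            raise ValueError(f"account name {account_name!r} is already taken")
        # Assumed policy: pick the stamp with the fewest accounts (ties broken by name).
        load = {stamp: 0 for stamp in self.stamp_vips}
        for stamp in self.account_stamp.values():
            load[stamp] += 1
        chosen = min(self.stamp_vips, key=lambda stamp: (load[stamp], stamp))
        self.account_stamp[account_name] = chosen
        # One hostname per service, all resolving to the chosen stamp's VIP.
        for service in SERVICES:
            self.dns[f"{account_name}.{service}.core.windows.net"] = self.stamp_vips[chosen]
        return chosen

    def resolve(self, hostname: str) -> str:
        """What a client's DNS lookup returns for the given hostname."""
        return self.dns[hostname]
```

Once `resolve` hands back a stamp's VIP, the client talks to that stamp directly; in this model the Location Service is only involved when accounts are created, not on each request.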

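Each Storage Stamp is a cluster of storage nodes spread across multiple racks in a data center, where each rack is a separate fault domain with redundant networking and power. The paper describes stamps of typically 10 to 20 racks with 18 disk-heavy storage nodes per rack. This is where customers' data is actually stored.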

Delving into the Storage Stamp

A Storage Stamp consists of three main layers:

- Front-Ends: Stateless servers that receive client requests, authenticate and authorize them, and forward each one to the appropriate partition server.

- Partition Layer: This layer implements the high-level data abstractions (Blobs, Tables, and Queues) on top of the stream layer. It orders transactions and provides strong consistency for the objects it manages.

- Stream Layer: The bottom layer, which stores the bits on disk. It distributes and replicates data across many servers to keep it durable within a storage stamp.
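A minimal sketch of how a write flows through the three layers. The class names are hypothetical, the `route` function passed to `FrontEnd` stands in for a real partition map lookup, the stream layer is just an in-memory list per stream, and replication is left out entirely.

```python
from collections import defaultdict
from typing import Callable, Optional


class StreamLayer:
    """Bottom layer: append-only streams (replication is not modeled here)."""

    def __init__(self) -> None:
        self.streams = defaultdict(list)  # stream name -> list of records

    def append(self, stream: str, record: tuple) -> int:
        self.streams[stream].append(record)
        return len(self.streams[stream]) - 1  # offset of the new record


class PartitionServer:
    """Partition layer: logs each write to a stream, then serves reads from memory."""

    def __init__(self, name: str, stream_layer: StreamLayer) -> None:
        self.name = name
        self.stream_layer = stream_layer
        self.index: dict = {}  # in-memory view of the latest value per key

    def put(self, key: str, value: bytes) -> int:
        # Persist to the stream first, then update the in-memory view.
        offset = self.stream_layer.append(f"{self.name}-log", (key, value))
        self.index[key] = value
        return offset

    def get(self, key: str) -> Optional[bytes]:
        return self.index.get(key)


class FrontEnd:
    """Stateless front end: forwards each request to the owning partition server."""

    def __init__(self, route: Callable[[str], PartitionServer]) -> None:
        self.route = route  # stands in for a partition map lookup

    def put(self, key: str, value: bytes) -> int:
        return self.route(key).put(key, value)

    def get(self, key: str) -> Optional[bytes]:
        return self.route(key).get(key)
```

Because `FrontEnd` keeps no data of its own, any number of front ends could share the same routing function; all state lives in the partition and stream layers.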

A Closer Look at the Stream Layer

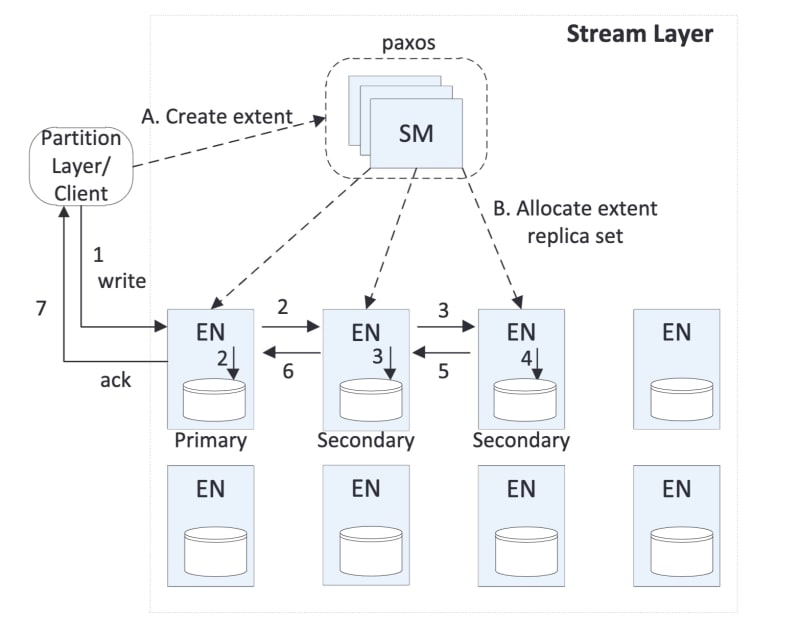

This layer is split into two core components:

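- Stream Manager (SM): The SM keeps track of every stream, the extents that make up each stream, and which ENs hold each extent. It creates new extents and assigns them to ENs, monitors the health of the ENs, and garbage-collects extents that are no longer referenced by any stream. The SM itself runs as a Paxos cluster, which keeps its own state consistent.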

- Extent Node (EN): Each EN manages a set of disks and stores the extent replicas that the SM assigns to it. ENs also communicate with each other directly to replicate data.
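To make the SM's bookkeeping concrete, here is a minimal sketch, assuming a stream is simply an ordered list of extent IDs and that the SM records which ENs hold each extent. Garbage collection then reduces to a set difference: any extent no stream points to can be reclaimed. `StreamManager`, its fields, and `garbage_collect` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class StreamManager:
    """Hypothetical SM metadata: streams as ordered lists of extent ids,
    plus the ENs that hold a replica of each extent."""

    streams: dict[str, list[str]] = field(default_factory=dict)
    extent_replicas: dict[str, list[str]] = field(default_factory=dict)

    def garbage_collect(self) -> set[str]:
        """Forget every extent that no stream points to; return their ids."""
        live = {extent for extents in self.streams.values() for extent in extents}
        dead = set(self.extent_replicas) - live
        for extent in dead:
            # A real SM would also tell each EN in extent_replicas[extent]
            # to delete its replica; this sketch only drops the metadata.
            del self.extent_replicas[extent]
        return dead
```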

When the Partition Layer needs a new extent, the SM allocates it to three ENs (one primary and two secondaries) placed in different fault domains. Writes go to the primary EN, which forwards them to the secondaries and acknowledges the write only after all three replicas have stored the data. This intra-stamp replication is synchronous, on the critical path of the write, so failures inside the stamp, such as a disk, node, or rack going down, don't lose acknowledged data.
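The allocate-then-replicate flow can be sketched as follows. This is a toy model with hypothetical names; it assumes each rack is one fault domain and that the first EN chosen becomes the primary. On a failed write it simply reports failure, and recovery is not modeled.

```python
from dataclasses import dataclass, field


@dataclass
class ExtentNode:
    """Hypothetical EN: stores extent replicas in memory, keyed by extent id."""

    name: str
    rack: str
    healthy: bool = True
    extents: dict[str, bytearray] = field(default_factory=dict)

    def write(self, extent_id: str, data: bytes) -> bool:
        if not self.healthy:
            return False
        self.extents.setdefault(extent_id, bytearray()).extend(data)
        return True


def allocate_replicas(nodes: list[ExtentNode], replicas: int = 3) -> list[ExtentNode]:
    """SM side: pick healthy ENs on distinct racks; the first one is the primary."""
    chosen: list[ExtentNode] = []
    used_racks: set[str] = set()
    for node in nodes:
        if node.healthy and node.rack not in used_racks:
            chosen.append(node)
            used_racks.add(node.rack)
            if len(chosen) == replicas:
                return chosen
    raise RuntimeError("not enough healthy ENs on distinct racks")


def append(replica_set: list[ExtentNode], extent_id: str, data: bytes) -> bool:
    """The primary stores the data and forwards it to every secondary.

    Returns True (the acknowledgement) only if all replicas stored it.
    """
    primary, *secondaries = replica_set
    if not primary.write(extent_id, data):
        return False
    results = [secondary.write(extent_id, data) for secondary in secondaries]
    return all(results)
```

Placing the three replicas on different racks means a single rack failure can take out at most one copy.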

Examining the Partition Layer

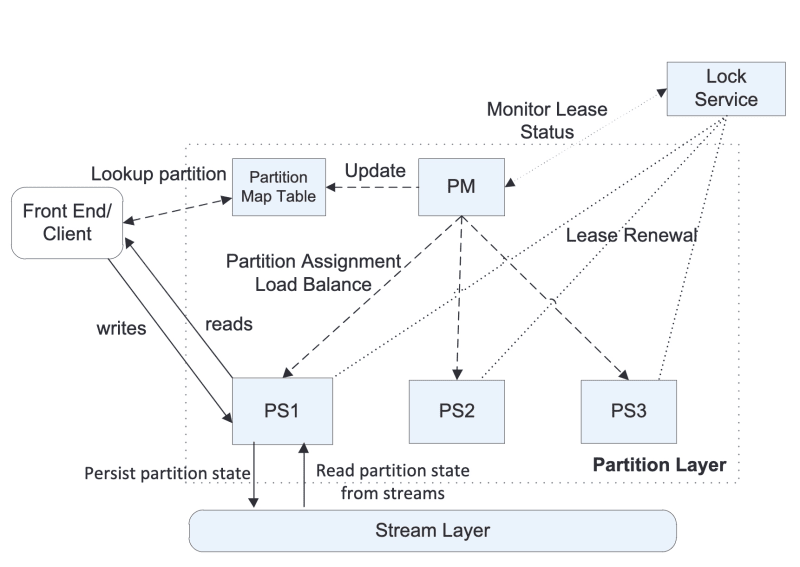

The Partition Layer serves requests from the Front-Ends and builds the Blob, Table, and Queue abstractions on top of the Stream Layer. Its two main components are:

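- Partition Manager (PM): Every object is identified by its account name, partition name, and object name. The PM splits this namespace into contiguous key ranges (RangePartitions), assigns each range to a Partition Server (PS), and records the assignments in a partition map, which the Front-Ends use to route each request to the right PS.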

- Partition Server (PS): Each PS serves requests for the RangePartitions assigned to it by the PM. It persists their state in the Stream Layer and provides consistency for the objects within those partitions.
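Range-based routing can be sketched with a sorted list and binary search. For illustration, this assumes ranges are ordered on the `(account name, partition name)` pair and that each range runs from its low key up to the next range's low key; `PartitionMap`, `lookup`, and `split` are hypothetical names.

```python
import bisect

Key = tuple[str, str]  # (account name, partition name)


class PartitionMap:
    """Hypothetical partition map: contiguous key ranges, each owned by one PS.

    Range i covers keys in [lows[i], lows[i + 1]); the first range must start
    at ("", ""), the smallest possible key, so every key falls in some range.
    """

    def __init__(self, assignments: list[tuple[Key, str]]) -> None:
        assignments = sorted(assignments)
        if not assignments or assignments[0][0] != ("", ""):
            raise ValueError('the first range must start at ("", "")')
        self.lows = [low for low, _ in assignments]
        self.servers = [server for _, server in assignments]

    def lookup(self, account: str, partition: str) -> str:
        """Return the partition server that owns (account, partition)."""
        i = bisect.bisect_right(self.lows, (account, partition)) - 1
        return self.servers[i]

    def split(self, low: Key, server: str) -> None:
        """Split the range containing `low` and give the upper part to `server`."""
        if low in self.lows:
            raise ValueError(f"a range already starts at {low!r}")
        i = bisect.bisect_right(self.lows, low)
        self.lows.insert(i, low)
        self.servers.insert(i, server)
```

A lookup costs O(log n) in the number of ranges, and a split only inserts one new boundary, so moving part of a busy range to another server does not touch any other range.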

The Partition Layer is also responsible for inter-stamp replication, which copies an account's data from its primary stamp to a secondary stamp in a different geographic location. Unlike intra-stamp replication, it is asynchronous: it runs in the background, off the critical path of the client's request. Keeping a second copy far away is what lets WAS recover from a disaster that takes out an entire stamp or data center.
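The difference between the synchronous and asynchronous paths can be sketched as a toy model with hypothetical names: the client is acknowledged as soon as the write lands in the primary stamp, and a background step later applies queued changes to the secondary stamp in commit order.

```python
from collections import deque
from typing import Optional


class Stamp:
    """A storage stamp reduced to a key-value dict (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.data: dict[str, str] = {}


class GeoReplicatedAccount:
    """Toy account with a primary and a secondary stamp."""

    def __init__(self, primary: Stamp, secondary: Stamp) -> None:
        self.primary = primary
        self.secondary = secondary
        self.pending = deque()  # (key, value) changes not yet at the secondary

    def write(self, key: str, value: str) -> str:
        # Stands in for the synchronous intra-stamp commit in the primary stamp.
        self.primary.data[key] = value
        # Queue the change for inter-stamp replication; the client does not wait.
        self.pending.append((key, value))
        return "ack"

    def replicate_in_background(self, max_items: Optional[int] = None) -> int:
        """Apply up to `max_items` queued changes to the secondary, oldest first."""
        applied = 0
        while self.pending and (max_items is None or applied < max_items):
            key, value = self.pending.popleft()
            self.secondary.data[key] = value
            applied += 1
        return applied
```

The gap between `write` and `replicate_in_background` is the replication lag: if the primary stamp were lost during that window, the queued changes would not yet exist at the secondary.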

Summary

Windows Azure Storage (WAS) stands as a testament to Microsoft's commitment to delivering a seamless and resilient cloud storage experience. I hope this post gives you a quick overview of its architecture; for a deeper understanding of this well-designed, large-scale storage system, I recommend reading the original paper.
