I recently received an offer to work on a fun project using OpenAI's new Dall-E artificial intelligence platform.

For those of you who aren't familiar with Dall-E, it's a generative AI service that creates artistic images using nothing more than a single descriptive sentence.

The robot image above is a good example. I created it with a single English sentence.

Here's a more specific example: I entered the following descriptive sentence into Dall-E's dashboard interface...

"A giant rabbit man, sitting in front of a computer, writing code, wearing a hoodie"

... after just 15 seconds, Dall-E produced these 4 illustrations:

Incredible!

Dall-E is remarkably easy to use. And there's something surprisingly entertaining about entering an image description into the interface and waiting expectantly to see what sort of wackiness the AI spits out.

I've been playing with Dall-E on and off for about a month, having a blast producing quirky images and posting them online.

But there's a big difference between entering a sentence to "see what you get" and actually trying to get the image you want.

Here's an example of what I mean:

The project: AI-generated Tarot cards

When I received a proposal to create a set of custom works using Dall-E for a site called iFate, I jumped at the opportunity.

iFate is a well-known "divination site", perhaps best known for its free tarot readings and interactive fortune-telling tools. It's not something I know a whole lot about, but the web content is right up my alley, since their products combine a lot of visual wizardry with a lot of JavaScript expertise.

The folks at iFate wanted to see what Dall-E would "think up" when given the task of creating a custom set of Tarot cards. This sounded like a really fun project.

Specifically, they wanted a set of Tarot cards which loosely referenced the symbolism found in the seminal Rider-Waite deck of Tarot cards. So the task was to describe each of those cards to Dall-E in as much detail as possible, and see what Dall-E could "imagine" based on my input.

What happened was both awesome and incredibly frustrating.

I came away with an appreciation for how brilliant Dall-E is, but also how difficult it is to actually use the AI for any sort of goal-driven creative project. Along the way, I learned a ton of things about what works and what doesn't seem to work at all.

Getting Started

I started with the first card in the Tarot deck. It's called the Ace of Cups. My goal was to create an image that incorporates some of the same symbolic elements as the original card, shown here:

As you can see, there's a whole lot going on in this illustration. This Tarot card is jam-packed with symbols, so the task of telling Dall-E what to do is the first challenge.

Attempt #1

I started by giving Dall-E the following description of the Ace of Cups card:

"Colored pen & ink drawing of a disembodied hand coming out of a small cloud in the sky, holding a large golden goblet, while a flying dove is dropping a small round wafer into the goblet, the sea is in the background"

Obviously, there are more details than that in the original card, but I'm going for the general gist of things here.

I pressed Enter after typing the above description, and I waited...

Dall-E spun into action, and a few seconds later I was staring at some impressive attempts, none of which looked anything like what I was hoping for.

To be fair, #4 really isn't all that bad. I think for anyone well versed in Tarot imagery, it would be immediately recognizable as the Ace of Cups card.

But what's immediately noticeable is that Dall-E doesn't actually follow instructions word-for-word. For example, there's no "round wafer" to be seen anywhere.

Also, the hand doesn't actually emerge "out of" any of the clouds.

Attempt #2

I tried again with a modified descriptive sentence. This time I called the wafer a "communion wafer", which is apparently what it is. I also did a better job of explaining that the hand needs to come out from the inside of a cloud. I entered the following:

"Colored pen & ink drawing of a disembodied hand coming out from the inside of a small cloud in the sky, holding a large golden goblet, while a flying dove is dropping a small communion wafer into the goblet, the sea is in the background"

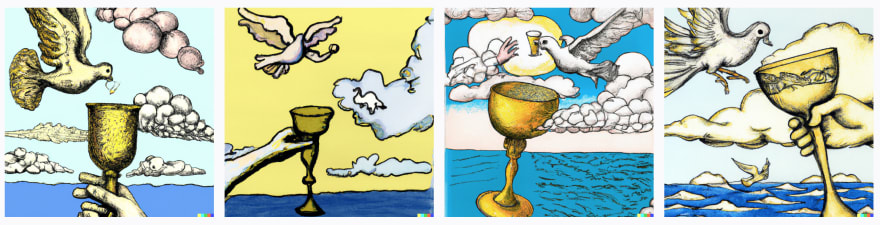

Again, Dall-E spun into action, quickly returning 4 attempts to render the sentence:

The first one is really, really close to fitting the description. The hand does appear to be emerging from a cloud, and there is some kind of wafer visible — although it's on the back of the dove. (Because how would the AI know that doves carry things in their beaks, not on their backs?) All in all, I could almost live with this.

Attempt #3

I tried again anyway:

"Colored pen & ink drawing of a disembodied hand coming out from the inside of a small cloud in the sky, holding a large golden goblet, while a flying dove is placing a small round communion-wafer from its beak into the goblet, the sea is in the background"

Again, the first one isn't terrible. Needless to say, there's a whole lot of wacky stuff happening in the others.

In number three, the dove appears to be ignoring the cup, and bringing the hand a beer!

Again though, for anyone who understands Tarot imagery, or who knows the Tarot deck well, the first one would probably be instantly recognizable as the Ace of Cups.

Final Results

After spending another hour and another 15 bucks on Dall-E credits, I finally had a collection of passable Ace of Cups images. I tried every descriptive trick I could think of. I even called the communion wafer a "poker chip" once. I also tried saying that the dove was "eating" the communion wafer.

Here are the best Dall-E Ace of Cups Tarot cards I managed to coax out of the AI:

The last one is the one I went with — mostly because the composition was the best.

But I never did get all the elements I wanted. (The actual final results are going to need a little bit of Photoshop work, but I'm not including any edited versions here.)

Now just 77 more cards to go!

Dall-E can be frustrating

What can be frustrating about Dall-E is how much luck seems to be involved. I made dozens of attempts with what I thought were increasingly accurate descriptions, and too many of them produced less accurate images that seemed to completely ignore the text I entered.

In other words, working with Dall-E is not really an iterative process that gets you closer and closer to the objective you're hoping for. Instead, it's more like a repeated series of attempts combined with a lot of hoping you get it right.

Here are some hilarious failures that happened, even after I felt like my descriptive sentences had gotten very specific:

Often these failures are the result of a sentence that can actually be read more than one way.

For example, if I were to tell Dall-E "A woman holding a sword in each hand", we humans would naturally assume that there are going to be two swords involved, because, well, humans have two hands. But the AI may not make that assumption every time.

Some tips for getting the most out of Dall-E

1) Don't force it. Think of yourself more as a creative director, and not the artist. You're conveying ideas, not controlling the brush. If you're used to coding or hands-on illustration as I am, you're likely going to find this process frustrating. You will almost certainly not get exactly what you want. Accept it for what it is, and work with it.

2) Dall-E is a great first step. Unless you're willing to make thousands of attempts, you're almost certainly going to be using Photoshop to finalize your images. Don't expect it to be perfect.

3) Play with multiple ways of saying the same thing. Often the "human" way of saying something isn't the best way of describing it to the AI.

4) Don't be afraid to try the same description twice. Sometimes the second time is the charm.

5) Word order matters. Try changing the sequence of your description. The order of the phrases clearly affects what gets prioritized, although the beginning of the sentence isn't necessarily the most important part. Trial and error is the name of the game.

6) Dall-E can't do text. Plan on adding any required text afterwards in Photoshop or another graphics editor.

7) Dall-E is also very bad at counting. (This is a problem for many Tarot cards, like the Seven of Swords or the Nine of Cups.) If you need an exact number of something, you're going to need to use Photoshop.
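Tips 3 through 5 boil down to systematic trial and error: reword things, reorder things, and try again. If you'd rather not retype every variation by hand, a small script can enumerate them for you. Here's a minimal sketch of that idea. It's a hypothetical helper of my own (`prompt_variants` isn't part of Dall-E or any OpenAI library), it doesn't talk to Dall-E at all, and it assumes your description splits cleanly into comma-separated clauses after a fixed style prefix, like the Ace of Cups prompts above. You'd still paste each generated prompt into the interface yourself.

```python
from itertools import permutations, product
from typing import Dict, Iterator, List, Optional


def prompt_variants(
    style: str,
    clauses: List[str],
    synonyms: Optional[Dict[str, List[str]]] = None,
    limit: Optional[int] = None,
) -> Iterator[str]:
    """Yield prompts that keep the style prefix first and vary clause order and wording.

    Hypothetical helper for trial-and-error prompting; it only builds strings
    and does not call Dall-E.
    """
    synonyms = synonyms or {}
    # Each clause can be phrased as itself or as any of its listed alternatives.
    options = [[clause] + synonyms.get(clause, []) for clause in clauses]
    seen = set()
    emitted = 0
    # Try every ordering of the clauses (tip 5: word order matters)...
    for order in permutations(range(len(clauses))):
        # ...and, for each ordering, every combination of phrasings (tip 3).
        for choice in product(*(options[i] for i in order)):
            prompt = f"{style} {', '.join(choice)}"
            if prompt in seen:
                continue  # skip exact duplicates, e.g. a "synonym" identical to its clause
            seen.add(prompt)
            yield prompt
            emitted += 1
            if limit is not None and emitted >= limit:
                return


if __name__ == "__main__":
    style = "Colored pen & ink drawing of"
    clauses = [
        "a disembodied hand coming out of a small cloud, holding a large golden goblet",
        "a flying dove dropping a small communion wafer into the goblet",
        "the sea in the background",
    ]
    alternatives = {
        "a flying dove dropping a small communion wafer into the goblet": [
            "a flying dove placing a small round communion wafer from its beak into the goblet",
        ],
    }
    # Print a handful of candidates to try, rather than all of them.
    for p in prompt_variants(style, clauses, alternatives, limit=5):
        print(p)
```

Keep the clause list short: the number of orderings grows factorially, so five clauses already give 120 orderings before any rewording, and every attempt costs credits. That's why the sketch has a `limit` parameter.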

So what to make of Dall-E?

Dall-E is amazing, awesome, inspiring and terrifying at times. But its true magic lies in surprising the user with the unexpected.

It simply isn't a tool (just yet) for approximating the detailed intent of the user. If you're looking for a specific set of features within an image, it can take multiple hours (and quite a few dollars) to cajole the image you want out of Dall-E.

By the time you've spent an hour or so, making repeated failed attempts to get the image you want, you're bound to realize that a skilled illustrator from Fiverr or someplace else could have done the job much better, and for around the same price.

That being said, Dall-E is an absolute delight to play with. The fact that we're this close to having AI illustration on demand is a magnificent achievement.

But is it a tool for professional creators?

Yes and no. If you're willing to accept Dall-E's weaknesses, and use it as a starting point for creative production — and if you're willing to do a whole lot of Photoshop tweaking afterwards, then yes. It's a great tool, and it can save you many hours of work.

Just don't expect it to replace humans yet. Using Dall-E for a professional creative task still requires many hours of human input — and loads of trial and error.

Please LIKE this post if you enjoyed it! Thanks!
