An Annotated Docker Config for Frontend Web Development

A local development environment with Docker allows you to shrink-wrap the devops your project needs as config, making onboarding frictionless

Andrew Welch / nystudio107

Docker is a tool for containerizing your applications, which means that your application is shrink-wrapped with the environment that it needs to run.

This allows you to define the devops your application needs in order to run as config, which can then be easily replicated and reused.

While there are many uses for Docker, this article will focus on using Docker as a local environment for frontend web development.

Although Craft CMS is referenced in this article, Docker works well for any kind of web development with any kind of CMS or dev stack (Laravel, NodeJS, Rails, whatevs).

The Docker config used here is used in both the devMode.fm GitHub repo, and in the nystudio107/craft boilerplate Composer project if you want to see some “in the wild” examples.

Why Docker?

If you’re doing frontend web development, you very likely already have some kind of a local development environment. So why switch to Docker?

This is a very reasonable question to ask, because any kind of switch of tooling requires some upskilling, and some work.

I’ve long been using Homestead (which is really just a custom Vagrant box with some extras) as my local dev environment, as discussed in the Local Development with Vagrant / Homestead article.

I’d chosen to use Homestead because I wanted a local dev environment that was deterministic, disposable, and separated my development environment from my actual computer.

Docker has all of these advantages, but also a much more lightweight approach. Here are the advantages of Docker for me:

- Each application has exactly the environment it needs to run, including specific versions of any of the plumbing needed to get it to work (PHP, MySQL, Postgres, whatever)

- Onboarding others becomes trivial: all they need to do is install Docker and type docker-compose up, and away they go

- Your development environment is entirely disposable; if something goes wrong, you just delete it and fire up a new one

- Your local computer is separate from your development environment, so switching computers is trivial, and you won’t run into issues where you hose your computer or are stuck with conflicting versions of devops services

- The cost of trying different versions of various services is low; just change a number in a .yaml file, docker-compose up, and away you go

There are other advantages as well, but these are the more important ones for me.

Additionally, containerizing your application in local development is a great first step to using a containerized deployment process, and running Docker in production as well.

Understanding Docker

This article is not a comprehensive tutorial on Docker, but I will attempt to explain some of the more important, broader concepts.

Docker has the notion of containers, each of which runs one or more services. You can think of each container as a mini virtual machine (even though technically, it is not).

While you can run multiple services in a single Docker container, separating each service out into a separate container has many advantages.

If PHP, Apache, and MySQL are all in separate containers, they won’t affect each other, and also can be more easily swapped in and out.

If you decide you want to use Nginx or Postgres instead, the decoupling into separate containers makes it easy!

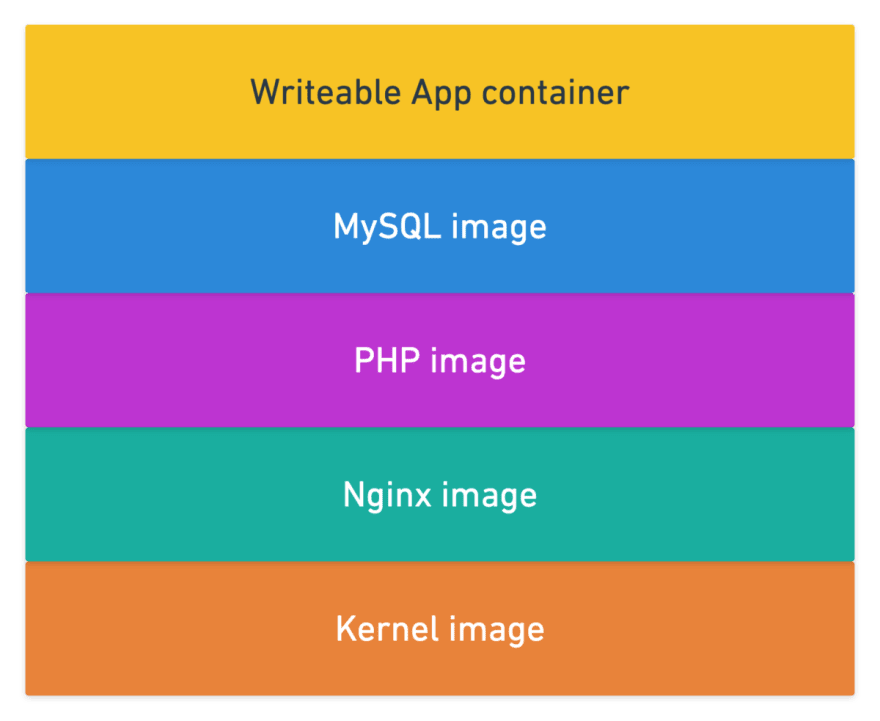

Docker containers are built from Docker images, which can be thought of as a recipe for building the container, with all of the files and code needed to make it happen.

Docker images are almost always layered on top of other existing images that they extend FROM. For instance, you might have a base image from Ubuntu or Alpine Linux which provides the base operating system files that other processes like Nginx need in order to run (the kernel itself is shared with the host).

This layering works thanks to the Union file system, which handles composing all the layers of the cake together for you.

We said earlier that Docker is more lightweight than running a full Vagrant VM, and it is… but unfortunately, unless you’re running Linux there still is a virtualization layer running, which is HyperKit for the Mac, and Hyper‑V for Windows.

Fortunately, you don’t need to be concerned with any of this, but the performance implications do inform some of the decisions we’ve made in the Docker config presented here.

For more information on Docker, I’d highly recommend the Docker Mastery course (if it’s not on sale now, don’t worry, it will be at some point) and also the following devMode.fm episodes:

…and there are tons of other excellent educational resources on Docker out there such as Matt Gray’s Craft in Docker: Everything I’ve Learnt presentation, and his excellent A Craft CMS Development Workflow With Docker series.

In our article, we will focus on annotating a real-world Docker config that’s used in production. We’ll discuss various Docker concepts as we go, but the primary goal here is documenting a working config.

I learn best by looking at a working example, and picking it apart. If you do, too, let’s get going!

My Docker Directory Structure

This Docker setup uses a directory structure that looks like this (don’t worry, it’s not as complex as it seems, many of the Docker images here are for reference only, and are actually pre-built):

├── cms
│   ├── composer.json
│   ├── config
│   ├── craft
│   ├── craft.bat
│   ├── example.env
│   ├── modules
│   ├── templates
│   └── web
├── docker-compose.yml
├── docker-config
│   ├── mariadb
│   │   └── Dockerfile
│   ├── nginx
│   │   ├── default.conf
│   │   └── Dockerfile
│   ├── php-dev-base
│   │   ├── Dockerfile
│   │   └── zzz-docker.conf
│   ├── php-dev-craft
│   │   └── Dockerfile
│   ├── postgres
│   │   └── Dockerfile
│   ├── redis
│   │   └── Dockerfile
│   ├── webpack-dev-base
│   │   └── Dockerfile
│   └── webpack-dev-craft
│       ├── Dockerfile
│       ├── package.json
│       ├── postcss.config.js
│       ├── tailwind.config.js
│       ├── webpack.common.js
│       ├── webpack.dev.js
│       ├── webpack.prod.js
│       └── webpack.settings.js
├── scripts
│   ├── common
│   ├── docker_pull_db.sh
│   ├── docker_restore_db.sh
│   ├── example.env.sh
│   └── seed_db.sql
└── src
    ├── conf
    ├── css
    ├── img
    ├── js
    ├── php
    ├── templates -> ../cms/templates
    └── vue

Here’s an explanation of what the top-level directories are:

- cms — everything needed to run Craft CMS. This is the “app” of the project

- docker-config — an individual directory for each service that the Docker setup uses, with a Dockerfile and other ancillary config files therein

- scripts — helper shell scripts that do things like pull a remote or local database into the running Docker container. These are derived from the Craft-Scripts shell scripts

- src — the frontend JavaScript, CSS, Vue, etc. source code that the project uses

Each service is referenced in the docker-compose.yaml file, and defined in the Dockerfile that is in the corresponding directory in the docker-config/ directory.

It isn’t strictly necessary to have a separate Dockerfile for each service, if they are just derived from a base image. But I like the consistency, and ease of future expansion should something custom be necessary down the road.

You’ll also notice that there are php-dev-base and php-dev-craft directories, as well as webpack-dev-base and webpack-dev-craft directories, and might be wondering why they aren’t consolidated.

The reason is that there’s a whole lot of the base setup in both that just never changes, so instead of rebuilding that each time, we can build it once and publish the images up on DockerHub.com as nystudio107/php-dev-base and nystudio107/webpack-dev-base.

Then we can layer anything specific about our project on top of these base images in the respective -craft services. This saves us significant building time, while keeping flexibility.

The docker-compose.yaml file

While a docker-compose.yaml file isn’t required when using Docker, from a practical point of view, you’ll almost always use it. The docker-compose.yaml file allows you to define multiple containers for running the services you need, and coordinate starting them up and shutting them down in unison.

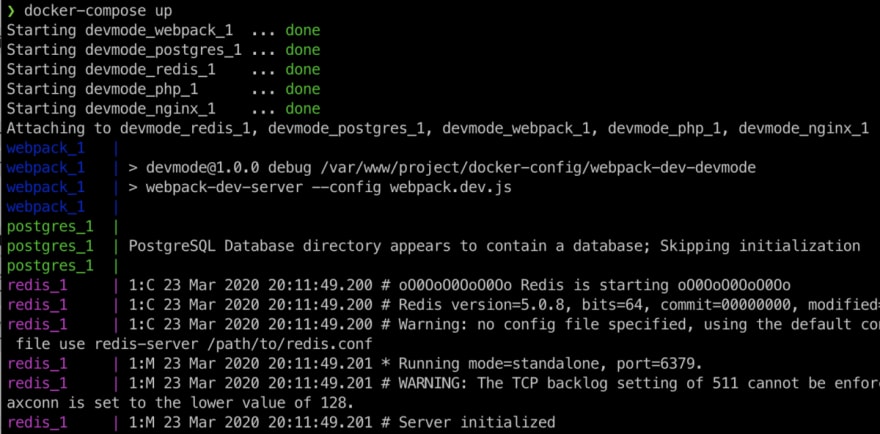

Then all you need to do is run docker-compose up via the terminal in a directory that has a docker-compose.yaml file, and Docker will start up all of your containers for you!
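For day-to-day use, I find it handy to think of the handful of docker-compose commands as a small vocabulary. Here is a minimal sketch of a wrapper around them. The `dev` function and its `DRY_RUN` switch are hypothetical names made up for this illustration and are not part of the project; the docker-compose subcommands it maps to (`up`, `up --build`, `down`, `logs -f`) are the real ones.

```shell
#!/bin/sh
# Hypothetical helper (not part of the original project): maps short
# action names onto docker-compose commands.
# Set DRY_RUN=1 to print the command instead of running it.
dev() {
    case "$1" in
        up)      set -- docker-compose up ;;
        rebuild) set -- docker-compose up --build ;;
        down)    set -- docker-compose down ;;
        # ${2:+"$2"} adds the service name only if one was given
        logs)    set -- docker-compose logs -f ${2:+"$2"} ;;
        *)       echo "usage: dev up|rebuild|down|logs [service]" >&2
                 return 1 ;;
    esac
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

Run from the directory that holds the docker-compose file, `dev up` starts everything, `dev rebuild` forces the images to be rebuilt first, and `dev logs php` follows the output of just the php service.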


Let’s have a look at our docker-compose.yaml file:

version: '3.7'

services:
  # nginx - web server
  nginx:
    build:
      context: .
      dockerfile: ./docker-config/nginx/Dockerfile
    env_file: &env
      - ./cms/.env
    links:
      - php
    ports:
      - "8000:80"
    volumes:
      - cpresources:/var/www/project/cms/web/cpresources
      - ./cms/web:/var/www/project/cms/web:cached
  # php - personal home page
  php:
    build:
      context: .
      dockerfile: ./docker-config/php-dev-craft/Dockerfile
    depends_on:
      - "mariadb"
      - "redis"
    env_file:
      *env
    expose:
      - "9000"
    links:
      - mariadb
      - redis
    volumes:
      - cpresources:/var/www/project/cms/web/cpresources
      - storage:/var/www/project/cms/storage
      - ./cms:/var/www/project/cms:cached
      - ./cms/vendor:/var/www/project/cms/vendor:delegated
      - ./cms/storage/logs:/var/www/project/cms/storage/logs:delegated
  # mariadb - database
  mariadb:
    build:
      context: .
      dockerfile: ./docker-config/mariadb/Dockerfile
    env_file:
      *env
    environment:
      MYSQL_ROOT_PASSWORD: secret
      MYSQL_DATABASE: project
      MYSQL_USER: project
      MYSQL_PASSWORD: project
    ports:
      - "3306:3306"
    volumes:
      - db-data:/var/lib/mysql
  # redis - key/value database for caching & php sessions
  redis:
    build:
      context: .
      dockerfile: ./docker-config/redis/Dockerfile
    expose:
      - "6379"
  # webpack - frontend build system
  webpack:
    build:
      context: .
      dockerfile: ./docker-config/webpack-dev-craft/Dockerfile
    env_file:
      *env
    ports:
      - "8080:8080"
    volumes:
      - ./docker-config/webpack-dev-craft:/var/www/project/docker-config/webpack-dev-craft:cached
      - ./docker-config/webpack-dev-craft/node_modules:/var/www/project/docker-config/webpack-dev-craft/node_modules:delegated
      - ./cms/web/dist:/var/www/project/cms/web/dist:delegated
      - ./src:/var/www/project/src:cached
      - ./cms/templates:/var/www/project/cms/templates:cached

volumes:
  db-data:
  cpresources:
  storage:

This .yaml file has 3 top-level keys:

- version — the version number of the Docker Compose file, which corresponds to different capabilities offered by different versions of the Docker Engine

- services — each service corresponds to a separate Docker container that is created using a separate Docker image

- volumes — named volumes that are mounted and can be shared amongst your Docker containers (but not your host computer), for storing persistent data

We’ll detail each service below, but there are a few interesting tidbits to cover first.

BUILD

When you’re creating a Docker container, you can either base it on an existing image (either a local image or one pulled down from DockerHub.com), or you can build it locally via a Dockerfile.

As mentioned above, I chose the methodology that each service would be created as a build from a Dockerfile (all of which extend FROM an image up on DockerHub.com) to keep things consistent.

This means that some of our Dockerfiles we use are nothing more than a single line, e.g.: FROM mariadb:10.3, but this setup does allow for expansion.

The two keys used for build are:

- context — this specifies where the working directory for the build should be, relative to the docker-compose.yaml file. We have this set to . (the current directory) for each service

- dockerfile — this specifies a path to the Dockerfile to use to build the service Docker container. Think of the Dockerfile as the recipe for a locally built Docker image

So the context is always the root directory of the project, while the Dockerfile and any supporting files for each service live in a separate directory. We do it this way to keep the paths consistent (always relative to the project root) regardless of the service.
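To make the context/dockerfile pairing concrete, here is a small sketch that prints roughly the `docker build` command corresponding to one service's build settings. The function names `dockerfile_for` and `build_service_cmd` are made up for this example; `docker build -f <Dockerfile> <context>` is the real CLI form, with `.` as the context just like in the compose file. Treat it as an approximation of what docker-compose does on your behalf, not as its actual implementation.

```shell
#!/bin/sh
# Hypothetical helpers, for illustration only.

# Path to a service's Dockerfile, relative to the project root.
# $1 is the directory name under docker-config/ (e.g. nginx, php-dev-craft).
dockerfile_for() {
    echo "./docker-config/$1/Dockerfile"
}

# Print the docker build command that matches:
#   build:
#     context: .
#     dockerfile: ./docker-config/<dir>/Dockerfile
build_service_cmd() {
    echo "docker build -f $(dockerfile_for "$1") ."
}
```

Because the context is the project root, a line like `COPY ./docker-config/nginx/default.conf ...` in a Dockerfile resolves relative to the root, not relative to the directory the Dockerfile lives in.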

DEPENDS_ON

This lets you specify which other services this particular service depends on, so that Docker starts those containers before this one. Note that it only controls start order; it does not wait for the service inside a container to be ready to accept connections.
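Since depends_on only controls the order in which containers are started, a container that truly needs another service to be accepting connections has to wait for it itself. One common approach is a small retry loop in the startup command. The sketch below is a generic version of that idea; `retry_until` is a hypothetical helper name, and it is not something the config in this article uses.

```shell
#!/bin/sh
# Hypothetical helper: run a command until it succeeds.
#   $1 = maximum number of attempts
#   $2 = seconds to sleep between attempts
#   remaining arguments = the command to run
retry_until() {
    _retry_attempts=$1
    _retry_delay=$2
    shift 2
    while [ "$_retry_attempts" -gt 0 ]; do
        if "$@"; then
            return 0
        fi
        _retry_attempts=$((_retry_attempts - 1))
        # Only sleep if we are going to try again
        if [ "$_retry_attempts" -gt 0 ]; then
            sleep "$_retry_delay"
        fi
    done
    return 1
}

# Example use inside a container (assumes a netcat that supports -z
# is installed there, which is not the case in the images shown here):
#   retry_until 30 1 nc -z mariadb 3306 && php-fpm
```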

ENV_FILE

The env_file setting specifies a path to your .env file for key/value pairs that will be injected into a Docker container.

Docker does not allow for quotes in its .env file, which is contrary to how .env files work almost everywhere else… so remove any quotes you have in your .env file.
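If you have an existing .env file with quoted values, something along these lines could strip the quotes for you. This is a minimal sketch: `strip_env_quotes` is a hypothetical name, it only handles simple `KEY="value"` and `KEY='value'` lines, and it leaves everything else (comments, unquoted values) untouched.

```shell
#!/bin/sh
# Hypothetical helper: read .env content on stdin and write it to stdout
# with one pair of surrounding quotes removed from each value.
strip_env_quotes() {
    sed -e 's/^\([A-Za-z_][A-Za-z0-9_]*\)="\(.*\)"$/\1=\2/' \
        -e "s/^\([A-Za-z_][A-Za-z0-9_]*\)='\(.*\)'\$/\1=\2/"
}

# Example use (the output file name is just an illustration):
#   strip_env_quotes < cms/.env > cms/.env.docker
```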

You’ll notice that for the nginx service, there’s a strange &env value in the env_file setting, and for the other services, the setting is *env. This takes advantage of YAML anchors and aliases: &env defines an anchor on the value in the nginx service, and *env refers back to it everywhere else, so if we do change the .env file path, we only have to do it in one place.

Doing it this way also ensures that all of the .env environment variables are available in every container. For more on environment variables, check out the Flat Multi-Environment Config for Craft CMS 3 article.

Because it’s Docker that is injecting these .env environment variables, if you change your .env file, you’ll need to restart your Docker containers.

LINKS

Links in Docker allow you to define extra aliases by which a service is reachable from another service. They are not required to enable services to communicate, but I like being explicit about it.

They come into play when one container needs to talk to another. For example, if you would normally connect to your database at the host name localhost, in our setup you’d connect to the host name mariadb instead.

The key take-away is that when containers need to talk to each other, they are doing so over the internal Docker network, and refer to each other via their service or links name.
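As a small illustration of what that means in practice, here is a sketch that assembles a PDO-style MySQL connection string in which the host is simply the service name. The `db_dsn` function is hypothetical, and the variable names `DB_SERVER`, `DB_PORT`, and `DB_DATABASE` are assumptions about what your .env file might define; the defaults mirror the mariadb service in the compose file above.

```shell
#!/bin/sh
# Hypothetical helper: build a MySQL DSN from environment variables.
# DB_SERVER, DB_PORT and DB_DATABASE are assumed names; when they are
# unset we fall back to the values used by the mariadb service.
db_dsn() {
    echo "mysql:host=${DB_SERVER:-mariadb};port=${DB_PORT:-3306};dbname=${DB_DATABASE:-project}"
}
```

Inside the Docker network the host is `mariadb`. From your host computer you would instead go through the published port on `localhost`, which is what the ports setting is for.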

PORTS

This specifies the port that should be exposed outside of the container, followed by the port that the container uses internally. So for example, the nginx service has "8000:80", which means the externally accessible port for the Nginx webserver is 8000, and the internal port the service runs on is 80.

If this sounds confusing, understand that Docker uses its own internal network to allow containers to talk to each other, as well as the outside world.
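If the order of the two numbers trips you up, this tiny sketch splits a mapping into its halves using plain shell parameter expansion. The function names are made up for the example.

```shell
#!/bin/sh
# Hypothetical helpers: split a docker-compose "HOST:CONTAINER" port
# mapping into its two parts.

# Everything before the first colon: the port on your host computer
host_port() {
    echo "${1%%:*}"
}

# Everything after the last colon: the port inside the container
container_port() {
    echo "${1##*:}"
}

# For the nginx service's "8000:80":
#   host_port "8000:80"       prints 8000 (browse to http://localhost:8000)
#   container_port "8000:80"  prints 80   (what Nginx listens on internally)
```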

VOLUMES

Docker containers run in their own little world, which is great for isolation purposes, but at some point you do need to share things from your “host” computer with the Docker container.

Docker volumes allow you to do this. You specify either a named volume or a path on your host, followed by the path where this volume should be bind mounted in the Docker container.

This is where performance problems can happen with Docker on the Mac and Windows. So we use some hints to help with performance:

- consistent — perfect consistency (host and container have an identical view of the mount at all times)

- cached — the host’s view is authoritative (permit delays before updates on the host appear in the container)

- delegated — the container’s view is authoritative (permit delays before updates on the container appear in the host)

So for things like node_modules/ and vendor/ we mark them as :delegated because while we want them shared, the container is in control of modifying these volumes.

Some Docker setups I’ve seen put these directories into a named volume, which means they are visible only to the Docker containers.

But the problem is we lose out on our editor auto-completion, because our editor has nothing to index.

See the Auto-Complete Craft CMS 3 APIs in Twig with PhpStorm article for details.

Service: Nginx

Nginx is the web server of choice for me, both in local dev and in production.

FROM nginx:1.16

COPY ./docker-config/nginx/default.conf /etc/nginx/conf.d/default.conf

We’ve based the container on the nginx image, tagged at version 1.16.

The only modification it makes is COPYing our default.conf file into place:

server {
    listen 80 default_server;
    root /var/www/project/cms/web;
    index index.html index.php;
    charset utf-8;

    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    access_log off;
    error_log /var/log/nginx/error.log error;

    sendfile off;
    ssi on;
    client_max_body_size 10m;

    gzip on;
    gzip_http_version 1.0;
    gzip_proxied any;
    gzip_min_length 500;
    gzip_disable "MSIE [1-6]\.";
    gzip_types text/plain text/xml text/css
        text/comma-separated-values
        text/javascript
        application/x-javascript
        application/javascript
        application/atom+xml;

    location ~ \.php$ {
        fastcgi_split_path_info ^(.+\.php)(/.+)$;
        fastcgi_pass php:9000;
        fastcgi_index index.php;
        include fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_intercept_errors off;
        fastcgi_buffer_size 16k;
        fastcgi_buffers 4 16k;
        fastcgi_read_timeout 300;
    }

    location ~ /\.ht {
        deny all;
    }
}

This is just a simple Nginx config that works well with Craft CMS. You can find more about Nginx configs for Craft CMS in the nginx-craft GitHub repo.

Service: MariaDB

MariaDB is a drop-in replacement for MySQL that I tend to use instead of MySQL itself. It was written by the original author of MySQL, and is binary compatible with MySQL.

FROM mariadb:10.3

We’ve based the container on the mariadb image, tagged at version 10.3.

There’s no modification at all to the source image.

Service: Postgres

Postgres is a robust database that I am using more and more for Craft CMS projects. It’s not used in the docker-compose.yaml presented here, but I keep the configuration around in case I want to use it.

Postgres is used in local dev and in production on the devMode.fm GitHub repo, if you want to see it implemented.

FROM postgres:12.2

We’ve based the container on the postgres image, tagged at version 12.2.

There’s no modification at all to the source image.

Service: Redis

Redis is a key/value pair database that I set all of my Craft CMS installs to use both as a caching method, and as a session handler for PHP.

FROM redis:5.0

We’ve based the container on the redis image, tagged at version 5.0.

There’s no modification at all to the source image.

Service: PHP

PHP is the language that the Yii2 framework and Craft CMS itself are based on, so we need it in order to run our app.

This service is composed of a base image that contains all of the packages and PHP extensions we’ll always need to use, and then the project-specific image that contains whatever additional things are needed for our project.

FROM php:7.3-fpm

# Install packages
RUN apt-get update \
    && \
    # apt Debian packages
    apt-get install -y \
        apt-utils \
        autoconf \
        ca-certificates \
        curl \
        g++ \
        libbz2-dev \
        libfreetype6-dev \
        libjpeg62-turbo-dev \
        libpng-dev \
        libpq-dev \
        libssl-dev \
        libicu-dev \
        libmagickwand-dev \
        libzip-dev \
        unzip \
        zip \
    && \
    # pecl PHP extensions
    pecl install \
        imagick-3.4.4 \
        redis \
        xdebug-2.8.1 \
    && \
    # Configure PHP extensions
    docker-php-ext-configure \
        gd --with-freetype-dir=/usr/include/ --with-jpeg-dir=/usr/include/ \
    && \
    # Install PHP extensions
    docker-php-ext-install \
        bcmath \
        bz2 \
        exif \
        ftp \
        gettext \
        gd \
        iconv \
        intl \
        mbstring \
        opcache \
        pdo \
        shmop \
        sockets \
        sysvmsg \
        sysvsem \
        sysvshm \
        zip \
    && \
    # Enable PHP extensions
    docker-php-ext-enable \
        imagick \
        redis \
        xdebug

# Append our php.ini overrides to the end of the file
RUN echo "upload_max_filesize = 10M" > /usr/local/etc/php/php.ini && \
    echo "post_max_size = 10M" >> /usr/local/etc/php/php.ini && \
    echo "max_execution_time = 300" >> /usr/local/etc/php/php.ini && \
    echo "memory_limit = 256M" >> /usr/local/etc/php/php.ini && \
    echo "opcache.revalidate_freq = 0" >> /usr/local/etc/php/php.ini && \
    echo "opcache.validate_timestamps = 1" >> /usr/local/etc/php/php.ini

# Copy the `zzz-docker.conf` file into place for php-fpm
COPY ./zzz-docker.conf /usr/local/etc/php-fpm.d/zzz-docker.conf

We’ve based the container on the php image, tagged at version 7.3.

We’re then adding a bunch of Debian packages that we want available on top of the image’s Debian operating system base, some debugging tools, as well as some PHP extensions that Craft CMS requires.

Then we add some defaults to the php.ini, and copy into place the zzz-docker.conf file:

[www]

pm.max_children = 10

pm.process_idle_timeout = 10s

pm.max_requests = 1000

This just sets some defaults for php-fpm that make sense for local development.

By itself, this image won’t do much for us, and in fact we don’t even spin up this image. But we’ve built this image, and made it available as nystudio107/php-dev-base on DockerHub.

Since it’s pre-built, we don’t have to build it every time, and can layer on top of this image anything project-specific via the php-dev-craft container image:

FROM nystudio107/php-dev-base

# Install packages
RUN apt-get update \
    && \
    # apt Debian packages
    apt-get install -y \
        nano \
    && \
    # Install PHP extensions
    docker-php-ext-install \
        pdo_mysql \
    && \
    # Install Composer
    curl -sS https://getcomposer.org/installer | php -- --install-dir=/usr/local/bin/ --filename=composer

WORKDIR /var/www/project

# Copy over the directories/files php needs access to
COPY --chown=www-data:www-data ./cms/composer.* /var/www/project/cms/

# Create the storage directory and make it writeable by PHP
RUN mkdir -p /var/www/project/cms/storage && \
    mkdir -p /var/www/project/cms/storage/runtime && \
    chown -R www-data:www-data /var/www/project/cms/storage

# Create the cpresources directory and make it writeable by PHP
RUN mkdir -p /var/www/project/cms/web/cpresources && \
    chown -R www-data:www-data /var/www/project/cms/web/cpresources

WORKDIR /var/www/project/cms

# Do a `composer install` without running any Composer scripts
# - If `composer.lock` is present, it will install what is in the lock file
# - If `composer.lock` is missing, it will update to the latest dependencies
#   and create the `composer.lock` file
# Force permissions, update Craft, and start php-fpm
CMD if [ ! -f "./composer.lock" ]; then \
        composer install --no-scripts --optimize-autoloader --no-interaction; \
    fi \
    && \
    if [ ! -d ./vendor -o ! "$(ls -A ./vendor)" ]; then \
        composer install --no-scripts --optimize-autoloader --no-interaction; \
    fi \
    && \
    chown -R www-data:www-data /var/www/project/cms/vendor \
    && \
    chown -R www-data:www-data /var/www/project/cms/storage \
    && \
    composer craft-update \
    && \
    php-fpm

This is the image that we actually build into a container, and use for our project. We install the nano editor because I find it handy sometimes, and we also install pdo_mysql so that PHP can connect to our MariaDB database.

We do it this way so that if we want to create a Craft CMS project that uses Postgres, we can just swap in the PDO extension needed here.

Then we make sure the various storage/ and cpresources/ directories are in place, with the right ownership so that Craft will run properly.

Then we do a bit of magic to do a composer install, but only if:

- The composer.lock file doesn’t exist

- The vendor/ directory doesn’t exist, or is empty

We have to do the composer install as part of the Docker image CMD because the file system mounts aren’t in place until the CMD is run.

This allows us to update our Composer dependencies just by deleting the composer.lock file or the vendor/ directory, and doing docker-compose up.

Simple.

The alternative is doing a docker exec -it craft_php_1 /bin/bash to open up a shell in our container, and running the command manually. Which is fine, but a little convoluted for some.
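If the condition in that CMD is hard to read as a one-liner, here is the same decision pulled out into a standalone function. This is a sketch for illustration, assuming nothing beyond what the CMD already checks; `needs_composer_install` is a hypothetical name and is not used in the actual image.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit status 0) when a `composer install`
# is warranted for the project directory given as $1 (default: the
# current directory).
needs_composer_install() {
    _nci_dir=${1:-.}
    # No lock file yet
    [ ! -f "$_nci_dir/composer.lock" ] && return 0
    # No vendor/ directory at all
    [ ! -d "$_nci_dir/vendor" ] && return 0
    # vendor/ exists but is empty
    [ -z "$(ls -A "$_nci_dir/vendor")" ] && return 0
    return 1
}

# Example use:
#   if needs_composer_install /var/www/project/cms; then
#       composer install --no-scripts --optimize-autoloader --no-interaction
#   fi
```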

Then we make sure that the ownership on important directories is correct, and we run the craft-update Composer script:

{
    "require": {
        "craftcms/cms": "^3.4.0",
        "vlucas/phpdotenv": "^3.4.0",
        "yiisoft/yii2-redis": "^2.0.6",
        "nystudio107/craft-imageoptimize": "^1.0.0",
        "nystudio107/craft-fastcgicachebust": "^1.0.0",
        "nystudio107/craft-minify": "^1.2.5",
        "nystudio107/craft-typogrify": "^1.1.4",
        "nystudio107/craft-retour": "^3.0.0",
        "nystudio107/craft-seomatic": "^3.2.0",
        "nystudio107/craft-webperf": "^1.0.0",
        "nystudio107/craft-twigpack": "^1.1.0",
        "nystudio107/dotenvy": "^1.1.0"
    },
    "autoload": {
        "psr-4": {
            "modules\\sitemodule\\": "modules/sitemodule/src/"
        }
    },
    "config": {
        "sort-packages": true,
        "optimize-autoloader": true,
        "platform": {
            "php": "7.0"
        }
    },
    "scripts": {
        "craft-update": [
            "@php craft migrate/all",
            "@php craft project-config/sync",
            "@php craft clear-caches/all"
        ],
        "post-root-package-install": [
            "@php -r \"file_exists('.env') || copy('example.env', '.env');\""
        ],
        "post-update-cmd": "@craft-update",
        "post-install-cmd": "@craft-update"
    }
}

So the craft-update script automatically does the following when our container starts up:

- All migrations are run

- Project Config is sync’d

- All caches are cleared

Starting from a clean slate like this is so helpful in terms of avoiding silly problems like things being cached, not up to date, etc.

Service: webpack

webpack is the build tool that we use for building the CSS, JavaScript, and other parts of our application.

The setup used here is entirely based on the An Annotated webpack 4 Config for Frontend Web Development article, just with some settings tweaked.

That means our webpack build process runs entirely inside of a Docker container, but we still get all of the Hot Module Replacement goodness for local development.

This service is composed of a base image that contains node itself, all of the Debian packages needed for headless Chrome, the npm packages we’ll always need to use, and then the project-specific image that contains whatever additional things are needed for our project.

FROM node:11

# Install packages for headless chrome
# https://medium.com/@ssmak/how-to-fix-puppetteer-error-while-loading-shared-libraries-libx11-xcb-so-1-c1918b75acc3
RUN apt-get update \
    && \
    # apt Debian packages
    apt-get install -y \
        ca-certificates \
        fonts-liberation \
        gconf-service \
        libgl1-mesa-glx \
        libasound2 \
        libatk1.0-0 \
        libc6 \
        libcairo2 \
        libcups2 \
        libdbus-1-3 \
        libexpat1 \
        libfontconfig1 \
        libgcc1 \
        libgconf-2-4 \
        libgdk-pixbuf2.0-0 \
        libglib2.0-0 \
        libgtk-3-0 \
        libnspr4 \
        libpango-1.0-0 \
        libpangocairo-1.0-0 \
        libstdc++6 \
        libx11-6 \
        libx11-xcb1 \
        libxcb1 \
        libxcomposite1 \
        libxcursor1 \
        libxdamage1 \
        libxext6 \
        libxfixes3 \
        libxi6 \
        libxrandr2 \
        libxrender1 \
        libxss1 \
        libxtst6 \
        libappindicator1 \
        libnss3 \
        lsb-release \
        wget \
        xdg-utils

We’ve based the container on the node image, tagged at version 11.

We’re then adding a bunch of Debian packages that we need in order to get headless Chrome working (needed for Critical CSS generation), as well as other libraries for the Sharp image library to work effectively.

By itself, this image won’t do much for us, and in fact we don’t even spin up this image. But we’ve built this image, and made it available as nystudio107/webpack-dev-base on DockerHub.

Since it’s pre-built, we don’t have to build it every time, and can layer on top of this image anything project-specific via the webpack-dev-craft container image:

FROM nystudio107/webpack-dev-base

WORKDIR /var/www/project/docker-config/webpack-dev-craft/

# We'd normally use `npm ci` here, but by using `install`:
# - If `package-lock.json` is present, it will install what is in the lock file
# - If `package-lock.json` is missing, it will update to the latest dependencies
#   and create the `package-lock.json` file
# Run our webpack build in debug mode
CMD if [ ! -f "./package-lock.json" ]; then \
        npm install; \
    fi \
    && \
    if [ ! -d "./node_modules" -o ! "$(ls -A ./node_modules)" ]; then \
        npm install; \
    fi \
    && \
    npm run debug

Then, just like with the php-dev-craft image, we do a bit of magic to do an npm install, but only if:

- The package-lock.json file doesn’t exist

- The node_modules/ directory doesn’t exist, or is empty

We have to do the npm install as part of the Docker image CMD because the file system mounts aren’t in place until the CMD is run.

This allows us to update our npm dependencies just by deleting the package-lock.json file or the node_modules/ directory, and doing docker-compose up.

The alternative is doing a docker exec -it craft_webpack_1 /bin/bash to open up a shell in our container, and running the command manually.

All Aboard!

Hopefully this annotated Docker config has been useful to you. If you use Craft CMS, you can dive in and start using it yourself; if you use something else entirely, the concepts here should still be very salient for your project.

I think that Docker — or some other conceptually similar containerization strategy — is going to be an important technology going forward. So it’s time to jump on board.

As mentioned earlier, the Docker config used here is used in both the devMode.fm GitHub repo, and in the nystudio107/craft boilerplate Composer project if you want to see some “in the wild” examples.

Happy containerizing!


Copyright ©2020 nystudio107. Designed by nystudio107
