Functions as a service (FaaS) is most likely the most widely used category of serverless product on the market. Function execution platforms rose in popularity as more people got tired of setting up full containerized environments for simple code execution. The costs, overall maintenance, and cascading consequences of failures meant more people were looking for a lean context to execute one-off workers in the cloud.

The industry rose to prominence when AWS introduced AWS Lambda. A lambda is an anonymous function, and the service delivers on that idea: these are functions that are not bound to a calling context, but respond to discrete events and take discrete inputs. They’re simple to reason about, simple to call, and, most of all, often drastically more affordable to run, lending themselves well to a “pay for compute time” model rather than the “pay for standby” model that always-on containers require.
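To make "discrete events and discrete inputs" concrete, here is a minimal sketch of what such a function can look like. The `ResizeRequest` and `ResizeResult` shapes and the `handler` name are assumptions made up for illustration; each real provider defines its own event and response formats.

```typescript
// Hypothetical event shape: everything the function needs arrives in the event.
interface ResizeRequest {
  imageUrl: string;
  width: number;
}

// Hypothetical response shape, loosely modeled on an HTTP-style reply.
interface ResizeResult {
  statusCode: number;
  body: string;
}

// The function keeps no state between calls and knows nothing about its caller.
function handler(event: ResizeRequest): ResizeResult {
  if (event.width <= 0) {
    return { statusCode: 400, body: "width must be positive" };
  }
  // A real function would do the work here; this sketch only acknowledges it.
  return {
    statusCode: 200,
    body: `queued ${event.imageUrl} at ${event.width}px`,
  };
}
```

Because the function depends only on its input, it can be invoked, tested, and billed one call at a time.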

The pros and cons of FaaS

As outlined above, there are a few specific advantages and a few disadvantages (though the latter are shrinking by the day) to opting for a FaaS provider.

Pro: Maintenance

FaaS providers allow you to design, deploy, and execute functions – and only functions. You don’t need to maintain additional infrastructure. Setting up a full environment to run a single function is akin to running a restaurant to make yourself a cup of coffee from time to time.

Pro: Cost

Functions have obvious execution signatures, which lend themselves to detailed billing. The more fine-grained your billing, the more exact your cost-benefit analysis will be, and more often than not, that leads to lower costs on the whole.
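A rough way to reason about this is to compare a pay-per-execution bill with an always-on bill. The sketch below assumes a common pricing structure (a per-request fee plus a fee per GB-second of compute). The function names, the 730-hour month, and any rates you pass in are illustrative assumptions, not any provider's actual prices.

```typescript
// Assumed pricing structure; plug in rates from your provider's price list.
interface FunctionPricing {
  perMillionRequests: number; // fee per one million invocations
  perGbSecond: number;        // fee per GB of memory held for one second
}

interface Workload {
  invocationsPerMonth: number;
  avgDurationMs: number;
  memoryMb: number;
}

// Pay-per-use: you are billed only while the function is executing.
function monthlyFunctionCost(w: Workload, p: FunctionPricing): number {
  const requestCost = (w.invocationsPerMonth / 1000000) * p.perMillionRequests;
  const gbSeconds =
    w.invocationsPerMonth * (w.avgDurationMs / 1000) * (w.memoryMb / 1024);
  return requestCost + gbSeconds * p.perGbSecond;
}

// Pay-for-standby: an always-on instance is billed whether or not it is busy.
function monthlyStandbyCost(hourlyRate: number, hoursPerMonth: number = 730): number {
  return hourlyRate * hoursPerMonth;
}

// Hypothetical rates, chosen only to show the shape of the comparison.
const examplePricing: FunctionPricing = { perMillionRequests: 0.2, perGbSecond: 0.00002 };
const exampleWorkload: Workload = {
  invocationsPerMonth: 1000000,
  avgDurationMs: 200,
  memoryMb: 512,
};

console.log(monthlyFunctionCost(exampleWorkload, examplePricing).toFixed(2));
console.log(monthlyStandbyCost(0.05).toFixed(2));
```

The comparison can flip for sustained, high-volume workloads, which is why it helps to run the numbers for your own traffic rather than assume functions are always cheaper.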

Pro: Upgradable

Your functions can be independently updated, optimized, and measured. Scoping domain logic to independent functions allows for working in an iterative manner.

Con: Higher latency

Although many tools have emerged to address this problem, serverless functions are subject to slow cold starts and generally higher latency than a dedicated execution environment.
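One common mitigation is to do expensive setup once per function instance and reuse it across warm invocations. The sketch below assumes a runtime that keeps module-level state alive between calls on the same instance, which many FaaS runtimes do but none guarantee. `loadConfig`, `initCount`, and `handler` are hypothetical names for illustration.

```typescript
// Module-level state: survives between invocations only while the instance stays warm.
let cachedConfig: Map<string, string> | undefined;
let initCount = 0;

// Stands in for slow startup work such as reading secrets or opening connections.
function loadConfig(): Map<string, string> {
  initCount += 1;
  return new Map<string, string>([["greeting", "hello"]]);
}

function handler(name: string): { body: string; coldStart: boolean } {
  // The first call on a fresh instance pays the setup cost; later calls reuse it.
  const coldStart = cachedConfig === undefined;
  const config = cachedConfig ?? (cachedConfig = loadConfig());
  const greeting = config.get("greeting") ?? "hi";
  return { body: `${greeting}, ${name}`, coldStart };
}
```

Returning or logging the `coldStart` flag is also a cheap way to measure how often your traffic actually hits a cold instance.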

Con: Portability

Many FaaS providers have proprietary route handling logic, so code written for one provider often needs changes before it will run on another.

Choosing a FaaS Provider

Until a few years ago, choosing between a FaaS approach and a dedicated execution environment was a much more nuanced question. Today, the FaaS ecosystem has developed to the point where it can handle the vast majority of cloud execution needs. The nuance has simply moved to the choice of provider.

When choosing a FaaS provider, the two most important questions to answer are related to workload and latency.

Workload dependent criteria

While all major FaaS providers are capable of handling simple workloads, not all of them offer the same breadth of services. Computationally intensive tasks such as machine learning, or resource-intensive tasks like rendering, need providers with robust support for those workloads.

Some features, such as ML processing, are supported by only a small number of service providers to begin with.

Latency dependent criteria

As the number of FaaS providers has increased, their product differentiation in solving common problems has also increased. One area of differentiation is where the code actually executes, either in centralized regions or at the edge.

Edge computing is a growing trend, set to explode in the coming few years. At the moment, roughly 10% of enterprise data is created or stored outside of a standard cloud data center. By 2025, that is estimated to reach 75%. Edge computing replicates the code to be executed onto smaller servers closer to individual customers, which means that the time, network resources, and energy required to execute your code are all reduced, often dramatically.

This is, of course, a hard problem to solve, thus not all providers are capable of offering these benefits. CDN providers, having solved many of these challenges in different contexts, are some of the first to embrace these patterns.

Other criteria

Some honorable mentions for things to consider when choosing a FaaS provider also include pricing, speed of prototyping, and working with what you have. If you are running serverless workloads with hyper cost-sensitive business requirements, you may find yourself naturally limited to a certain set of providers.

If you are working on setting up “quick and dirty” prototypes, you’re not going to have the time to onboard with an account-managed, high-touch FaaS provider – and there are plenty of those.

Lastly, if you have existing GCP or AWS infrastructure with billing accounts in place and stakeholder approval, it’s typically best practice to keep serverless services within a single chain of command for credentialed access, unified billing, and observability tooling.

Comparing FaaS providers

With so many providers popping up, it’s helpful to have a short analysis of where the different ones excel, and how they rank. Beyond the provider’s individual ability to offer support for the widest array of workloads, at the fastest speeds possible, at a reasonable cost, we need to consider developer efficiency, which has knock-on impacts on operational costs, as well.

DX considerations for FaaS providers

Developer experience for FaaS centers on design, development, and deployment.

Design

Local simulation of a production environment lets you ensure that the code you tested locally will execute with some degree of predictability in production. This is one of the most useful features for designing serverless functions, where layers between your code and the hosting provider may introduce instability.

CLI based templating allows developers to quickly scaffold functions with consistency and best practices built in.

Code-first configuration also allows for provisioning resources and designing serverless functions with a higher degree of predictability and consistency, benefiting from version control and static analysis tooling.

Develop

Language selection is one of the most important factors when choosing a FaaS provider, as different languages lend themselves best to different workload types. A broad selection lets you run all of your serverless workloads with the same FaaS provider. Having to adapt operational code to a different language or runtime just because of FaaS requirements is a substantial hit on developer productivity.

Portable code, or generally abstracted code that can run equally well on more than one vendor, is another critical metric. If each vendor implements its own networking interfaces, developer productivity slows down. Code designed for one execution environment should be usable with multiple providers.

On a related note, when provider-specific APIs are offered or required, strong typings for those APIs increase productivity even more, reducing context switching from IDE to documentation and back again.
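One way to keep code portable is to isolate the business logic from each vendor's request and response shapes behind thin adapters. The sketch below uses two made-up providers, "A" and "B". Their event types and handler names are hypothetical and do not correspond to any real vendor's interface.

```typescript
// Provider-neutral core: no vendor types appear here.
interface GreetingInput {
  name: string;
}
interface GreetingOutput {
  message: string;
}

function greet(input: GreetingInput): GreetingOutput {
  return { message: `Hello, ${input.name}!` };
}

// Hypothetical provider A: passes a JSON string body, expects a status and a string body.
interface ProviderAEvent {
  body: string;
}
interface ProviderAReply {
  statusCode: number;
  body: string;
}

function providerAHandler(event: ProviderAEvent): ProviderAReply {
  const input = JSON.parse(event.body) as GreetingInput;
  return { statusCode: 200, body: JSON.stringify(greet(input)) };
}

// Hypothetical provider B: passes parsed query parameters, expects a plain object.
interface ProviderBCall {
  query: Record<string, string | undefined>;
}

function providerBHandler(call: ProviderBCall): GreetingOutput {
  return greet({ name: call.query["name"] ?? "world" });
}
```

Moving to another vendor then means writing one more adapter of a few lines, while `greet` and its tests stay untouched.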
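As a small illustration of what typings buy you, the sketch below models a provider event as a discriminated union so that the compiler and the editor know which fields exist for each event kind. The `BucketEvent` type and its fields are invented for this example and are not taken from any provider's SDK.

```typescript
// Hypothetical typed events for an object storage trigger.
type BucketEvent =
  | { kind: "object-created"; bucket: string; key: string; sizeBytes: number }
  | { kind: "object-deleted"; bucket: string; key: string };

function describeEvent(event: BucketEvent): string {
  switch (event.kind) {
    case "object-created":
      // sizeBytes is only available in this branch; using it elsewhere is a compile error.
      return `created ${event.bucket}/${event.key} (${event.sizeBytes} bytes)`;
    case "object-deleted":
      return `deleted ${event.bucket}/${event.key}`;
  }
}
```

With typings like these, autocompletion answers most "what fields does this event have?" questions without a trip to the documentation.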

Deploy

Continuous deployment is perhaps the biggest improvement to the development workflow, making versioning and rollbacks an integrated part of the development process.

Where continuous deployment is not available, or even where it is, CLI-based deployment and log tailing are another critical part of the developer experience, reducing time to production, deployment errors, and the onboarding time of new team members.

FaaS comparison criteria

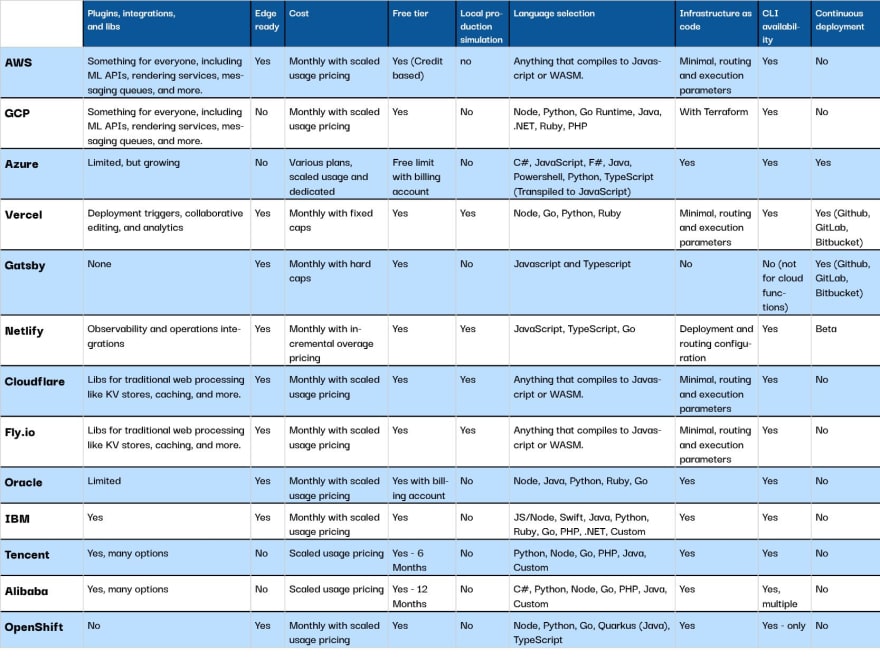

Summarizing the considerations above, we’ll evaluate some of the more popular FaaS providers against the following criteria.

- Plugins, Integrations, and Libs

- Edge Ready

- Cost

- Local production simulation

- Language selection

- Infrastructure as code (IaC)

- CLI availability

- Continuous deployment

Comparing the Vendors

Below is a non-exhaustive list of FaaS providers. Our list doesn't include options such as Knative or OpenFaaS, which are platforms for running FaaS solutions themselves. The distinction turns grey quickly, but for the purposes of a general overview, we’ll be looking at providers offering a “hosted function” product proper.

Traditional providers

AWS Lambda

You can’t really begin talking about FaaS providers without talking about Lambda, or really even AWS as a whole. Seen by many as the forerunner of cloud computing at scale, Lambda is the 800 pound gorilla in the room.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Something for everyone, including ML APIs, rendering services, messaging queues, and more. |
| Edge ready | Yes - Lambda@Edge |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes (Credit based) |
| Local production simulation | No |
| Language selection | Node.js, Python, Java, .NET, Ruby, Go, and custom runtimes |
| Infrastructure as code | Minimal, routing and execution parameters |
| CLI availability | Yes |
| Continuous deployment | No |

Google Cloud Functions

GCP, of course, has its own cloud computing options. While raw computing resources may lag behind some alternatives, its knowledge APIs and libraries are among the best available.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Something for everyone, including ML APIs, rendering services, messaging queues, and more. |
| Edge ready | No |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes |
| Local production simulation | No |
| Language selection | Node, Python, Go, Java, .NET, Ruby, PHP |
| Infrastructure as code | With Terraform |
| CLI availability | Yes |
| Continuous deployment | No |

Azure Functions

Microsoft is sure to bring their own FaaS solutions to the table, and with one of the most diverse sets of supported languages, as well. No matter how you write your business logic, Microsoft's rich ecosystem and pre-existing billing approval with many companies make it a great place to host serverless workloads.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Limited, but growing |
| Edge ready | No |
| Cost | Various plans, scaled usage and dedicated |
| Free tier | Free limit with billing account |
| Local production simulation | No |
| Language selection | C#, JavaScript, F#, Java, PowerShell, Python, TypeScript (transpiled to JavaScript) |
| Infrastructure as code | Yes |
| CLI availability | Yes |
| Continuous deployment | Yes |

Vercel

Vercel has long been a favorite for rapid deployment in the JavaScript community. Having taken a developer-obsessed approach to its product offerings, and being the makers of the wildly popular Next.js framework, they’ve been the gateway to serverless computing for many.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Deployment triggers, collaborative editing, and analytics |
| Edge ready | Yes |
| Cost | Monthly with fixed caps |
| Free tier | Yes |
| Local production simulation | Yes |
| Language selection | Node, Go, Python, Ruby |
| Infrastructure as code | Minimal, routing and execution parameters |
| CLI availability | Yes |
| Continuous deployment | Yes (GitHub, GitLab, Bitbucket) |

Gatsby Cloud Functions

For those with front-end experience, Gatsby is a familiar name, having extolled the promises and benefits of static sites for years. Born of the static-first approach is the need to still have a few processes running server side. Gatsby Cloud Functions is a great place to see just how far the frontier of static plus serverless has really gone.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | None |
| Edge ready | Yes |
| Cost | Monthly with hard caps |
| Free tier | Yes |
| Local production simulation | No |
| Language selection | JavaScript and TypeScript |
| Infrastructure as code | No |
| CLI availability | No (not for cloud functions) |
| Continuous deployment | Yes (GitHub, GitLab, Bitbucket) |

Netlify Functions

Another well-loved tool among front-end developers, Netlify is an all-inclusive solution for hosting web applications, cloud functions, and more. For those looking for less overhead than the big cloud providers, Netlify is a great option that can still perform as well as the rest of them.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Observability and operations integrations |
| Edge ready | Yes |
| Cost | Monthly with incremental overage pricing |
| Free tier | Yes |
| Local production simulation | Yes |
| Language selection | JavaScript, TypeScript, Go |
| Infrastructure as code | Deployment and routing configuration |
| CLI availability | Yes |
| Continuous deployment | Beta |

Cloudflare Workers

Cloudflare Workers is a newer entrant into the cloud computing ecosystem, but with Cloudflare’s extensive experience in edge networks, it has risen quickly in prominence.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Libs for traditional web processing like KV stores, caching, and more. |
| Edge ready | Yes |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes |
| Local production simulation | Yes |
| Language selection | Anything that compiles to JavaScript or WASM. |
| Infrastructure as code | Minimal, routing and execution parameters |
| CLI availability | Yes |
| Continuous deployment | No |

Fly.io

Fly.io does a lot more than cloud computing, including multi-cloud, distributed databases. Their cloud computing, however, is why they’re in this list, so the stats are:

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Libs for traditional web processing like KV stores, caching, and more. |
| Edge ready | Yes |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes |
| Local production simulation | Yes |
| Language selection | Anything that compiles to JavaScript or WASM. |
| Infrastructure as code | Minimal, routing and execution parameters |
| CLI availability | Yes |
| Continuous deployment | No |

Oracle Functions

Rounding out their long-standing, strong feature set, Oracle has, surprisingly, one of the more open and portable FaaS solutions available, allowing the product to be picked up wholesale and transferred into alternative cloud environments.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Limited |
| Edge ready | Yes |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes with billing account |
| Local production simulation | No |
| Language selection | Node, Java, Python, Ruby, Go |
| Infrastructure as code | Yes |
| CLI availability | Yes |
| Continuous deployment | No |

IBM Functions

Of course, Big Blue wouldn’t be left out of the cloud revolution. As part of a massive push into the cloud, IBM has kept in lockstep with all the major cloud developments while maintaining the enterprise features and trust that so many of the world’s biggest companies have come to count on.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Yes |
| Edge ready | Yes |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes |
| Local production simulation | No |
| Language selection | JS/Node, Swift, Java, Python, Ruby, Go, PHP, .NET, Custom |
| Infrastructure as code | Yes |
| CLI availability | Yes |
| Continuous deployment | No |

Tencent Cloud

Tencent is the juggernaut cloud you hear either everything or nothing about. Dominating the Asian market as well as powering many of the popular apps arrayed across your phone, Tencent has a robust offering worth adding to your price comparison analysis.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Yes, many options |
| Edge ready | No |
| Cost | Scaled usage pricing |
| Free tier | Yes - 6 Months |
| Local production simulation | No |
| Language selection | Python, Node, Go, PHP, Java, Custom |
| Infrastructure as code | Yes |
| CLI availability | Yes |
| Continuous deployment | No |

Alibaba Cloud

Another of the Asian giants, Alibaba is more than the everything store of the East; it boasts a growing and competitive cloud offering as well. Alibaba has a multitude of additional developer tools available, including integrated IDEs and more.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | Yes, many options |
| Edge ready | No |
| Cost | Scaled usage pricing |
| Free tier | Yes - 12 Months |
| Local production simulation | No |
| Language selection | C#, Python, Node, Go, PHP, Java, Custom |
| Infrastructure as code | Yes |
| CLI availability | Yes, multiple |
| Continuous deployment | No |

Red Hat OpenShift

OpenShift is a strong contender in cloud computing and robust development environments. Its FaaS support is an early beta preview, but worth keeping an eye on for all the benefits Red Hat has to offer.

| Criterion | Details |
| --- | --- |
| Plugins, integrations, and libs | No |
| Edge ready | Yes |
| Cost | Monthly with scaled usage pricing |
| Free tier | Yes |
| Local production simulation | No |
| Language selection | Node, Python, Go, Quarkus (Java), TypeScript |
| Infrastructure as code | Yes |
| CLI availability | Yes - exclusively |
| Continuous deployment | No |

No-Code / Low-Code providers

These last providers don’t warrant an analysis block, since much of their core product offering is to abstract those implementation details away anyway. But for speed of prototyping and, in many cases, for aptly handling a large number of use cases, the low-code / no-code providers make the list.

TinyFunction

A very new entrant onto the scene, TinyFunction is, without a doubt, the fastest way to get a function executing at an endpoint. And that’s all it does. If you’re looking for a quick prototype method, look no further.

Pipedream

Pipedream is a robust platform that performs well under load. They provide a large number of integrations from Fitbit to finance. While still allowing for breakout code environments, they opt for a configuration over code interface design that allows anyone to drag-and-drop glue code with minimal effort.

Autocode

Autocode allows you to write any type of serverless code and integrate it with their large standard library of external service providers. With helpful log handling, team account management, and an intuitive online IDE, Autocode is a great solution to bridge the gap from pseudo-code to production.

Using FaaS with Hasura

Where does FaaS fit into the Hasura story? Hasura has two specific features that pair really well with serverless execution: actions and events.

With actions, you can design serverless workloads that execute behind a custom query or mutation field. If you need to perform custom authentication logic, this is a perfect use case for actions.
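As a rough sketch of the custom authentication case, the function below handles a hypothetical `login` action. The payload shape (`action`, `input`, `session_variables`) reflects my understanding of what Hasura sends to an action handler and should be checked against the Hasura documentation. The `LoginInput` fields, the `accessToken` output, and the hard-coded credential check are assumptions for illustration only.

```typescript
// Payload shape as I understand Hasura sends it to action handlers (verify in the docs).
interface ActionPayload<TInput> {
  action: { name: string };
  input: TInput;
  session_variables: Record<string, string>;
}

// Hypothetical input for a "login" action.
interface LoginInput {
  username: string;
  password: string;
}

// Status and JSON body that the surrounding function would send back over HTTP.
interface HandlerResult {
  status: number;
  body: Record<string, unknown>;
}

// Placeholder only: a real check would compare against a hashed password in a user store.
function credentialsAreValid(input: LoginInput): boolean {
  return input.username === "demo" && input.password === "demo-password";
}

function loginAction(payload: ActionPayload<LoginInput>): HandlerResult {
  if (!credentialsAreValid(payload.input)) {
    // A 4xx status with a "message" field is how the handler signals an error.
    return { status: 401, body: { message: "invalid credentials" } };
  }
  // The success body must match the output type declared for the action.
  return {
    status: 200,
    body: { accessToken: `token-for-${payload.input.username}` },
  };
}
```

Wrapping `loginAction` in whichever HTTP entry point your FaaS provider expects is all that is left, and the function itself stays easy to unit test.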

With events, you can also define serverless workloads that execute in reaction to changes in your database. Let’s say a user uploads a PDF to your storage engine, which in turn writes that file reference into your database. An event could then begin processing that PDF with OCR for content extraction. Paired with subscriptions, this is a great way to execute long-running processes.
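The PDF scenario can be sketched as a function that inspects an event trigger payload and decides whether an OCR job should start. The payload shape follows my understanding of Hasura's event trigger format and should be verified against the documentation. The `files` row columns (`id`, `url`, `mime_type`) and the `planOcrJob` name are hypothetical.

```typescript
// Event trigger payload as I understand Hasura delivers it (verify in the docs).
interface EventPayload<TRow> {
  id: string;
  table: { schema: string; name: string };
  trigger: { name: string };
  event: {
    op: "INSERT" | "UPDATE" | "DELETE" | "MANUAL";
    data: { old: TRow | null; new: TRow | null };
  };
}

// Hypothetical row in a "files" table that stores uploaded file references.
interface FileRow {
  id: string;
  url: string;
  mime_type: string;
}

interface OcrJob {
  fileId: string;
  url: string;
}

// Returns the job to enqueue, or null when the event should be ignored.
function planOcrJob(payload: EventPayload<FileRow>): OcrJob | null {
  const row = payload.event.data.new;
  if (payload.event.op !== "INSERT" || row === null) {
    return null; // only newly inserted file references are of interest
  }
  if (row.mime_type !== "application/pdf") {
    return null; // skip anything that is not a PDF
  }
  return { fileId: row.id, url: row.url };
}
```

Keeping the decision separate from the actual OCR call makes the trigger logic testable without a database or a storage bucket.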

A bonus opportunity for cloud execution is for micro GraphQL services that perform limited functionality that can then be added as remote schemas. Performance restrictions will limit the number of cases this should be used in production, but for some problems this can be a good solution!

Conclusion

Serverless execution is here to stay. By a rough 80/20 analysis, serverless will be a dominant strategy for many companies that want to control costs, embrace low-ops approaches to infrastructure, and focus on domain logic rather than managing their own servers. Adopting a FaaS provider is a great way to begin the serverless journey incrementally and observe the benefits it provides.
