What's a reasonable way to tackle a problem that looks hard to solve? Usually, you try to make the problem easier. Some problems can be made easier by going smaller: splitting the problem repeatedly into sub-problems until an answer is within reach.

Those sub-problems can either interact with each other (i.e. one of them has to be solved before another one can be) or they can be entirely separate from one another, well suited to being solved independently and then combined.

These two cases correspond to two programming paradigms widely considered to be siblings: dynamic programming, for the first type of problem, and divide and conquer, for the second type. For now, we will look into the second type; the first one will get its own article later.

Defining Divide and Conquer Formally

Divide and conquer is an algorithm design paradigm which works by recursively breaking down a problem into a number of sub-problems until they become easy enough to be solved directly and then combining their solutions.

In general, three steps can be observed in the algorithms designed using this paradigm:

- Divide the problem into smaller sub-problems. (e.g. smaller instances of the problem, like sorting an array of size N/2 instead of one of size N)

- Conquer the sub-problems, solving them recursively. If a sub-problem has become small enough, solve it directly. (e.g. sort an array of two numbers)

- Combine the solutions while moving back up the recursion stack. This allows the sub-problems to pass their solutions to their "parent" problems, until we get back to the "big" problem. (e.g. merge two sorted arrays)

The examples I have given for each step together describe one of the most efficient comparison-based sorting algorithms - Merge Sort, which follows a Divide and Conquer approach.
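To make the three steps concrete, here is a minimal Merge Sort sketch. The function name `merge_sort` and the choice to return a new list instead of sorting in place are my own assumptions for illustration, not a canonical implementation.

```python
def merge_sort(values):
    """Return a new sorted list, following divide / conquer / combine."""
    # Conquer directly: a list with 0 or 1 elements is already sorted.
    if len(values) <= 1:
        return list(values)

    # Divide: split the list into two halves of (almost) equal size,
    # then conquer each half recursively.
    mid = len(values) // 2
    left = merge_sort(values[:mid])
    right = merge_sort(values[mid:])

    # Combine: merge the two sorted halves into one sorted list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # One of the halves may still have elements left; they are already sorted.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Calling `merge_sort([5, 2, 4, 1, 3])` walks through all three steps: the list is halved until single elements remain, and the halves are merged on the way back up.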

Quicksort, another efficient sorting algorithm, also follows this design paradigm.

However, in this article we will focus on another extremely interesting problem that can be solved using a Divide and Conquer approach. Let's state it.

Consider a Euclidean plane containing N points given by their x and y coordinates. Determine the distance between the closest two points in the plane.

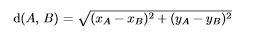

We know that the distance between two points, A = (x_A, y_A) and B = (x_B, y_B), can be expressed as:

$$d(A, B) = \sqrt{(x_A - x_B)^2 + (y_A - y_B)^2}$$

From now on, we may denote this distance as d(A, B), for simplicity.

We can devise a straightforward solution - consider every pair of points A and B and calculate their distance. This approach has an O(N^2) time complexity.
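As a point of reference, the straightforward solution could be sketched like this. The names `distance` and `closest_pair_brute_force` are hypothetical, and I am assuming points are given as `(x, y)` tuples and that there are at least two of them.

```python
import math
from itertools import combinations


def distance(a, b):
    """Euclidean distance d(A, B) between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def closest_pair_brute_force(points):
    """Check every pair of points: N * (N - 1) / 2 distance computations."""
    # Assumption: len(points) >= 2, otherwise min() raises ValueError.
    return min(distance(a, b) for a, b in combinations(points, 2))
```

Since every pair is examined exactly once, the running time grows quadratically with the number of points.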

Let's try and find another solution by following a Divide and Conquer approach.

In order to do that, we have to consider subsets of points at each step, and we have to decide when a subset is small enough for its closest two points to be computed directly. Let P be a subset of points.

The smallest subset we can still split is one of size 4: it yields two subsets of 2 points each, and each of those contains a pair. If we went smaller and split a subset of 3 points in two, one of the halves would contain a single point, and there is no pair to look for in a one-point subset.

(in the lines below, |P| means the size, or cardinality, of P)

Then:

- if |P| < 4, consider all |P|(|P| - 1)/2 pairs (at most 3 of them) and remember the smallest distance.

- if |P| >= 4, let us follow the paradigm "recipe":

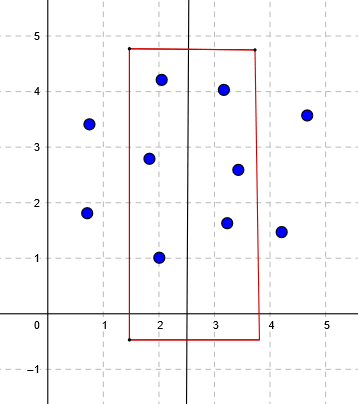

- Divide - let's determine a vertical line, call it a, which "cuts" our set of points P into two subsets. Let us call them PL and PR, for P left and P right. We need to choose this line such that the subsets are as close in size as possible.

- Conquer - recursively find the two closest points in PL and in PR.

- Combine - let us assume that the answer to the problem, for set P, is D. Then D either comes from one of the two recursive calls or is obtained from one point in PL and another in PR. If we already have a candidate for D from the recursive calls, call it d, then a pair that beats it must have both points closer than d to each other, so each of them lies within a strip of width d on its side of the line. We therefore have to pick the points from PL that are situated at distance d at most from a, and the same for PR.

In the Combine step, we can collect those points by building another set, Q, storing the points on either side of line a that are at distance d at most from it. We can then brute-force our way through the pairs in Q to find out whether any of them gives a smaller distance than d.
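The Combine step on its own could look roughly like the sketch below. The function name `closest_in_strip` and its parameters (`line_x` for the x coordinate of line a, `d` for the candidate distance) are assumptions made for illustration.

```python
import math


def closest_in_strip(points, line_x, d):
    """Return the smaller of d and the best distance found inside the strip.

    points: iterable of (x, y) tuples from both PL and PR
    line_x: x coordinate of the vertical dividing line a (assumed name)
    d:      best distance found by the two recursive calls
    """
    # Build Q: points at horizontal distance at most d from line a.
    q = [p for p in points if abs(p[0] - line_x) <= d]

    # Brute-force every pair in Q, keeping the smallest distance seen.
    best = d
    for i in range(len(q)):
        for j in range(i + 1, len(q)):
            dist = math.hypot(q[i][0] - q[j][0], q[i][1] - q[j][1])
            best = min(best, dist)
    return best
```

Points outside the strip are never compared, which is where the savings over the plain brute-force solution come from.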

For example, considering the set of points I chose above, it is possible to obtain an answer to the problem by looking in the area determined by the red rectangle. Bear in mind that this drawing is not exact, as I have approximated the distances and how the area would look myself.

Now let's look at a Python implementation of the method I presented. We will work with the squares of the distances to improve performance.
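The listing is not reproduced here, so what follows is a minimal sketch of the method as described above, not a definitive implementation. All function names (`dist_sq`, `brute_force_sq`, `closest_sq`, `closest_pair_distance`) are my own, and I am assuming points are `(x, y)` tuples, that the dividing line passes through the median point after sorting by x, and that the input has at least two points.

```python
import math


def dist_sq(a, b):
    """Squared Euclidean distance; avoids a square root per comparison."""
    dx = a[0] - b[0]
    dy = a[1] - b[1]
    return dx * dx + dy * dy


def brute_force_sq(points):
    """Smallest squared distance over all pairs (inf if fewer than 2 points)."""
    best = float("inf")
    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            best = min(best, dist_sq(points[i], points[j]))
    return best


def closest_sq(points_by_x):
    """Smallest squared distance in a list of points sorted by x."""
    n = len(points_by_x)
    # Base case: |P| < 4, solve directly.
    if n < 4:
        return brute_force_sq(points_by_x)

    # Divide: line a goes through the median point, so PL and PR
    # differ in size by at most one.
    mid = n // 2
    line_x = points_by_x[mid][0]

    # Conquer: best squared distance inside PL and inside PR.
    d_sq = min(closest_sq(points_by_x[:mid]), closest_sq(points_by_x[mid:]))

    # Combine: Q holds the points within distance d of line a.
    # Both sides of the comparison are squared, so no sqrt is needed.
    q = [p for p in points_by_x if (p[0] - line_x) ** 2 <= d_sq]
    return min(d_sq, brute_force_sq(q))


def closest_pair_distance(points):
    """Distance between the two closest points (needs at least 2 points)."""
    if len(points) < 2:
        raise ValueError("at least two points are required")
    # Sort once by x; slices of a sorted list stay sorted.
    return math.sqrt(closest_sq(sorted(points)))
```

For example, `closest_pair_distance([(0, 0), (5, 5), (1, 1), (9, 9), (5, 6), (20, 0)])` finds the pair `(5, 5)` and `(5, 6)`. Note that brute-forcing Q can still be slow when many points fall inside the strip; a common refinement is to sort Q by y and compare each point only with its next few neighbours.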

That concludes our article for today. The Magic of Computing will be back with another article in which we will discuss the other problem solving paradigm I mentioned in this article - dynamic programming. Until then, you could take a look at some backtracking insights. Or maybe you fancy Graph Theory. Or you're a big fan of the Fibonacci series?
