Setting up logging inside containers can be annoying — especially when logs vanish with the container or you have to mess with volume mounts just to see what's going on.

Here's how I made it super simple using Grafana Alloy to send logs from a Flask server running inside a Docker container to Grafana Cloud, without touching host volumes.

Quick Demo App: Flask Logger

Let's start with a super basic Flask app that just logs requests:

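# app.py
from flask import Flask
import logging

app = Flask(__name__)

# Send INFO-and-above records to liveapi.log in the working directory
logging.basicConfig(filename="liveapi.log", level=logging.INFO)

@app.route("/")
def hello():
    app.logger.info("GET / was hit")
    return "Hello, World!"

if __name__ == "__main__":
    # Listen on all interfaces so the published Docker port can reach the app
    app.run(host="0.0.0.0", port=5000)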

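This creates a liveapi.log file in the current working directory. Inside the container that directory is /app, so the file ends up at /app/liveapi.log, which is the path Alloy will tail.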
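
By default, logging.basicConfig writes lines as LEVEL:logger:message with no timestamp. If you'd like each line to carry its own timestamp, a formatter can add one. Here's a minimal standalone sketch using only the standard library. The logger name liveapi-demo and the temporary file path are made up for illustration; in app.py the equivalent would be passing format= to logging.basicConfig.

```python
import logging
import os
import tempfile


def build_file_logger(path: str) -> logging.Logger:
    """Return a logger that appends timestamped lines to `path`."""
    logger = logging.getLogger("liveapi-demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep this demo isolated from the root logger
    handler = logging.FileHandler(path)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
    )
    logger.addHandler(handler)
    return logger


# Write one line to a throwaway file and read it back
log_path = os.path.join(tempfile.mkdtemp(), "liveapi.log")
demo_logger = build_file_logger(log_path)
demo_logger.info("GET / was hit")

with open(log_path) as f:
    print(f.read().strip())
```

Each line then starts with the time the app wrote it, followed by the level, the logger name, and the message.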

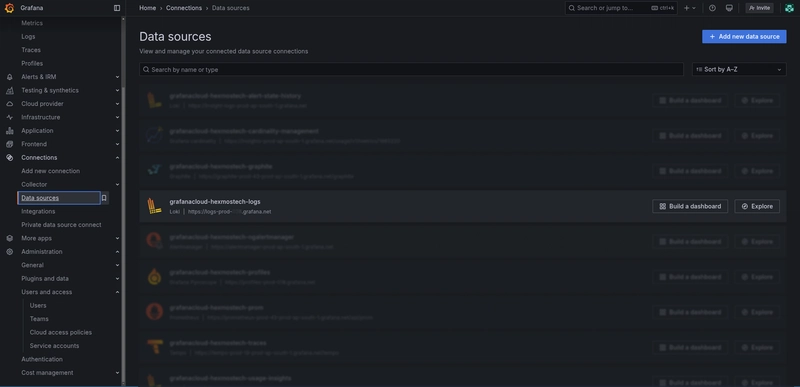

☁️ Set Up Grafana Cloud + Loki

- Go to Grafana Cloud.

- Sign up or log in.

- In the sidebar, go to Connections → Data sources.

- Search for Loki and set it up.

- Copy the Loki Push URL from the connection config. It'll look like:

https://<username>:<api-key>@logs-prod-000.grafana.net/api/prom/push

You'll use this in Alloy later.
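
That Push URL packs three things into one string: your username, your API key, and the endpoint. If a tool wants them separately (say, an HTTP client that takes Basic auth as a header), a small helper can pull them apart. This is a rough sketch with Python's standard library; the username 123456 and the key example-key are placeholders I made up, not real credentials.

```python
import base64
from urllib.parse import urlsplit


def split_push_url(push_url: str) -> dict:
    """Split a credentialed push URL into a bare URL plus Basic auth parts."""
    parts = urlsplit(push_url)
    host = parts.hostname
    if parts.port:
        host = f"{host}:{parts.port}"
    bare_url = f"{parts.scheme}://{host}{parts.path}"
    # Basic auth is base64("username:password") behind a "Basic " prefix
    token = base64.b64encode(
        f"{parts.username}:{parts.password}".encode()
    ).decode()
    return {
        "url": bare_url,
        "username": parts.username,
        "api_key": parts.password,
        "authorization": f"Basic {token}",
    }


# Placeholder credentials, for illustration only
parts = split_push_url(
    "https://123456:example-key@logs-prod-000.grafana.net/api/prom/push"
)
print(parts["url"])  # https://logs-prod-000.grafana.net/api/prom/push
```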

🐳 Dockerfile: Flask + Alloy Without Volume Mounts

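Here's the Dockerfile. It installs Python and Alloy, copies the app and config.alloy into the image, and starts both when the container runs. It expects a requirements.txt next to app.py that lists flask: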

FROM debian:bookworm-slim

RUN apt-get update && apt-get install -y \
    python3 python3-pip gpg wget curl gnupg ca-certificates systemctl \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /app

COPY requirements.txt .
RUN pip3 install --no-cache-dir --break-system-packages -r requirements.txt

# Install Alloy
RUN mkdir -p /etc/apt/keyrings/ && \
    wget -q -O - https://apt.grafana.com/gpg.key | gpg --dearmor | tee /etc/apt/keyrings/grafana.gpg > /dev/null && \
    echo "deb [signed-by=/etc/apt/keyrings/grafana.gpg] https://apt.grafana.com stable main" > /etc/apt/sources.list.d/grafana.list && \
    apt-get update && apt-get install -y alloy && \
    rm -rf /var/lib/apt/lists/*

COPY . .
RUN mkdir -p /etc/alloy/ && cp config.alloy /etc/alloy/config.alloy

EXPOSE 5000

CMD systemctl restart alloy && python3 app.py

No volume mounts. No host bind paths. Everything is self-contained inside the container.

⚙️ config.alloy (Log Pipeline for Alloy)

Create config.alloy in your repo:

// Find the log file the Flask app writes inside the container
local.file_match "local_files" {
  path_targets = [{ "__path__" = "/app/liveapi.log" }]
  sync_period  = "5s"
}

// Tail the file and hand new lines to the processing stage
loki.source.file "log_scrape" {
  targets       = local.file_match.local_files.targets
  forward_to    = [loki.process.filter_logs.receiver]
  tail_from_end = true
}

// Attach fixed labels so the logs are easy to query
loki.process "filter_logs" {
  forward_to = [loki.write.grafana_loki.receiver]

  stage.static_labels {
    values = {
      job          = "liveapi",
      service_name = "liveapi",
    }
  }
}

// Push everything to Grafana Cloud Loki
loki.write "grafana_loki" {
  endpoint {
    url = "https://<your-username>:<your-api-key>@logs-prod-000.grafana.net/api/prom/push"
  }
}

Change the endpoint to match your Grafana Loki Push URL.

Build & Run

Build the Docker image:

docker build -t flask-alloy .

Run it:

docker run -p 5000:5000 flask-alloy

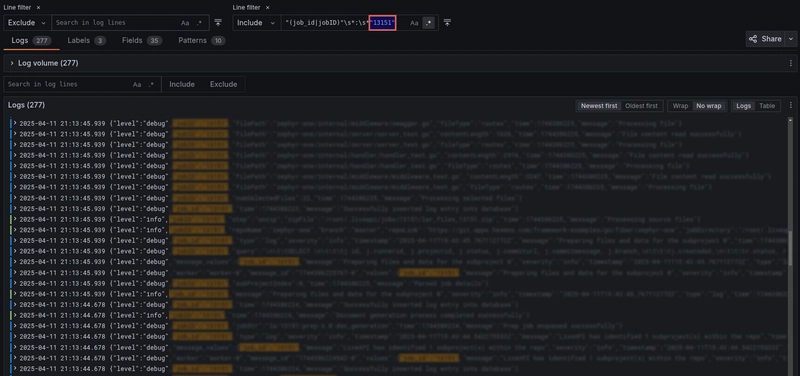

Now visit http://localhost:5000 to trigger logs. Then open the Explore tab in Grafana Cloud, pick your Loki data source, and run this query:

{job="liveapi"}

Boom — your logs are in the cloud. 🎉
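
If nothing shows up right away, it can help to send a fresh batch of requests, since the config above tails from the end of the file and only picks up new lines. Here's a small sketch that does that with Python's standard library. The function name hit_endpoint is just something I picked, and the example call assumes the container is listening on port 5000 as in the run command.

```python
import urllib.request


def hit_endpoint(url: str, count: int, timeout: float = 5.0) -> list:
    """Send `count` GET requests to `url` and return the HTTP status codes."""
    statuses = []
    for _ in range(count):
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            statuses.append(resp.status)
    return statuses


# Example call, with the container from the run step still up:
# print(hit_endpoint("http://localhost:5000/", 5))
```

Each request should add one "GET / was hit" line to the log, which Alloy then forwards to Loki.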

Any Better Way?

Let me know in the comments — is there a simpler or cooler way you’re pushing logs to Grafana Loki from containers? Would love to steal it.

I’ve been actively working on a super-convenient tool called LiveAPI.

LiveAPI helps you get all your backend APIs documented in a few minutes.

With LiveAPI, you can quickly generate interactive API documentation that allows users to execute APIs directly from the browser.

If you’re tired of manually creating docs for your APIs, this tool might just make your life easier.
