I just realized my old branch wasn't merged in yet, and as I do that, my CI flags up coverage failure, since I'd set the test coverage minimum to 95%, and was getting lower than that.

While you shouldn't live life by these numbers, these are indicators and should be looked into. If I deem there's a reason to allow it, I'd ignore it. The code for this post can be found at commit 48c6051

First, I'm going to add a quick script into my Pipfile called test_report to help look at coverage results. Since pycov generates HTML reports, this line of code makes my life easier by spitting out the HTML report and starting a temporary webserver with python to let me view it. If I weren't using WSL, I could have this script pop open a web browser too

[scripts]

test = "pytest"

test_report = "bash -c 'pytest --cov-report=html && python -m http.server --directory htmlcov'"

deploy = "./deploy.sh"

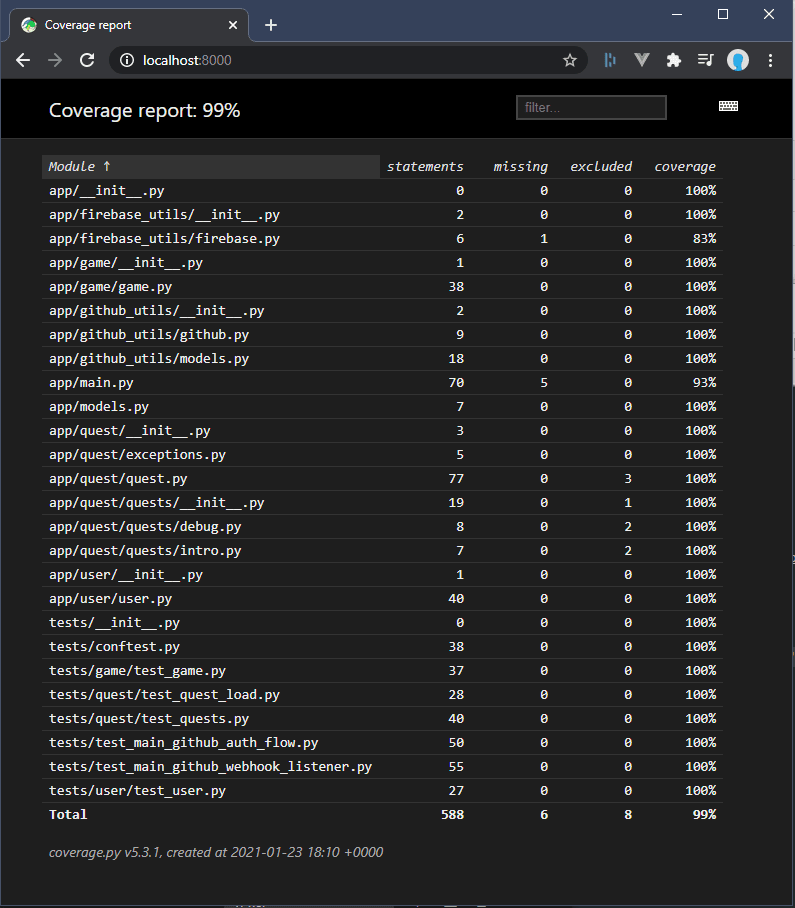

Now I can have a browsable file tree containing coverage results

I need to set up my VSCode extensions to display coverage results at some point. For now this is an easy way to view my report

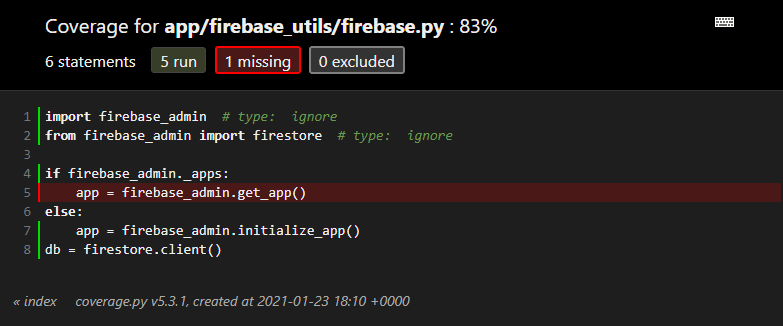

firebase.py

Starting with Firebase.py, at 83% coverage, the reason is simple: I added in some conditional code to return the previously initialized firebase app/singleton, when in fact, putting this code in it's own module achieved the same effect, so it never ends up going into the branch. And since it's only 8 lines of code, drops the coverage by 12.5%.

I simply remove this whole if statement. Done

main.py

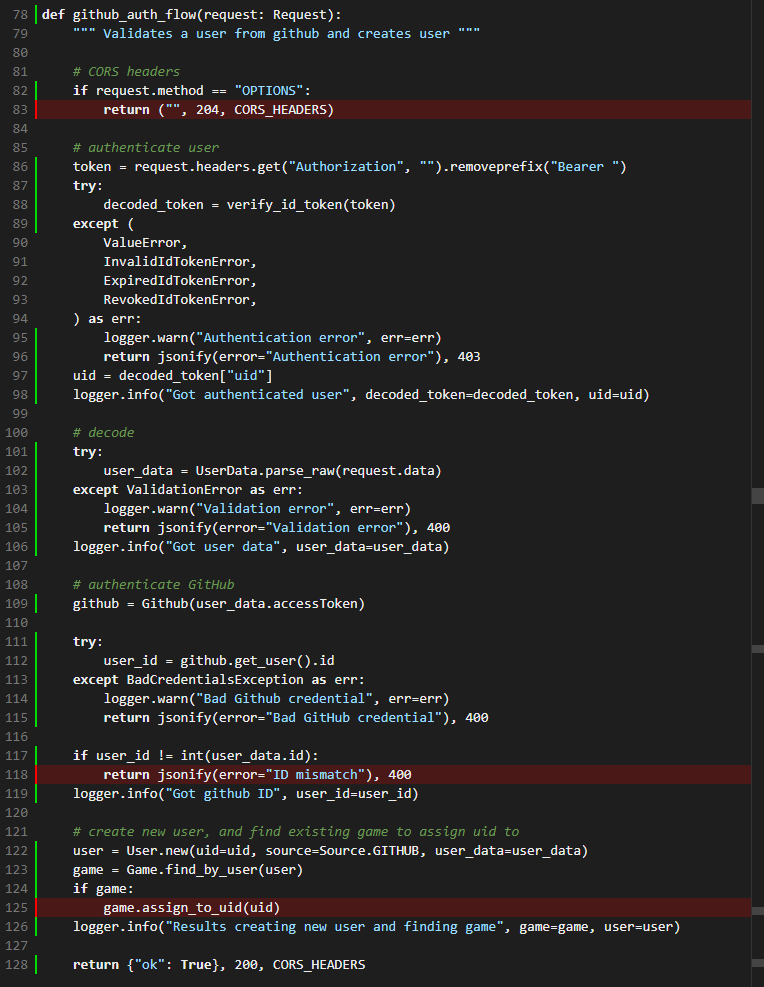

Over at the main function, the first lack of coverage is an error handling when the hook repo didn't correctly match.

This indicates I'm missing a test where I present this function with a bad repo address. To adequately test this, I would need to generate some new hook payloads that are otherwise valid but have the wrong source repo. So I simply add an extra test case here.

In the next function, there's a few things going on, firstly a missing CORS header check. I don't have a test for CORS header handling. While I could add one, I've opted to instead eliminate this manual check and just use the flask-cors library that provides a decorator. Now instead of handling CORS manually, I let the decorator deal with it:

from flask_cors import cross_origin

@cross_origin(

origins=CORS_ORIGIN,

headers=["Authorization", "Content-Type"],

supports_credentials=True,

)

def github_auth_flow(request: Request):

""" Validates a user from github and creates user """

...

The next item relates to handling when the provided user ID does not match the user ID that github returns as a token, this may happen in a spoofing attempt for example. For this I add another test that manipulates the user Id data provided to the function so that it is incorrect.

def test_id_mismatch(auth_flow_client, test_user_token, user_data):

""" Test a successful flow """

user_data["id"] = "foobar" # make id bad

res = auth_flow_client.post(

"/", headers={"Authorization": "Bearer " + test_user_token}, json=user_data

)

assert res.status_code == 403

Finally, the last remaining line not covered by testing is the event where a game already exists so that a user can be assigned. To achieve this, I need to go and create a new game for the user before anything else, so the test fixture now looks like this:

def test_good_flow(auth_flow_client, test_user_token, user_data, test_auth_user):

""" Test a successful flow """

# make sure a game exists for user

user = User.reference(Source.GITHUB, user_data["id"])

game = Game.new(user, "fork_url")

res = auth_flow_client.post(

"/", headers={"Authorization": "Bearer " + test_user_token}, json=user_data

)

assert res.status_code == 200

# check firestore

doc = db.collection("users").document(test_auth_user.uid).get()

assert doc.exists

assert doc.get("id") == user_data["id"]

# cleanup

db.collection("game").document(game.key).delete()

db.collection("system").document("stats").update({"games": firestore.Increment(-1)})

# cleanup auto-created quest too

QuestClass = Quest.get_first()

quest = QuestClass()

quest.game = game

db.collection("quest").document(quest.key).delete()

There's a lot of cleanup in here. I can reduce a lot of this by re-using the fixtures already defined for creating users. I will do so in a future tidy-up, particularly if I can get the local Firestore emulator working so that I no longer have to test with the actual database (and therefore don't have to worry about cleaning up after test data).

Automatically handle exceptions

Firebase's python functions framework has a way of registering exception handlers to automatically run to return values on exception, rather than having to explicitly return a json object. We can use this to keep the code tidy. For now, I will add a single exception handler for dealing with pydantic validation failures:

@errorhandler(ValidationError)

def validation_error(err: ValidationError):

""" Handler for pydantic validation errors (usuall http payload) """

logger.error("Validation error", err=err)

return jsonify(error="Validation error"), 400

Now, within each of the functions, I no longer have to explicitly handle the pydantic validationError, as the framework will run this function for me when that exception occurs.

Dependency Injection

One thing I really miss about using FastAPI, and having to work with this kind of weird Flask-like implementation, is how FastAPI handle's dependency injection. So... let's roll our own. I use some of Python's introspection methods to detect whether there's a Pydantic model defined as any of the function arguments' type hints, and do a decode of the payload and inject it into the function, using a decorator:

def inject_pydantic_parse(func):

""" Wrap method with pydantic dependency injection """

def wrapper(request: Request):

kwargs = {}

for arg_name, arg_type in get_type_hints(func).items():

parse_raw = getattr(arg_type, "parse_raw", None)

if callable(parse_raw):

kwargs[arg_name] = parse_raw(request.data)

logger.info(

"Decoded model and injected",

model=arg_type.__name__,

func=func.__name__,

)

return func(request, **kwargs)

return wrapper

@inject_pydantic_parse

def github_webhook_listener(request: Request, hook_fork: GitHubHookFork):

""" A listener for github webhooks """

...

In the above example, github_webhook_listener() has been wrapped by my new inject_pydantic_parse() function, which will detect that there is an extra hook_fork: GitHubHookFork argument, and since GitHubHookFork has a parse_raw callable, it will run request.data through it, and inject it as that argument.

Now, whenever one of my functions expects a json payload that I have a pydantic model for, I just specify it as an argument whose type hint is a pydantic object (or any class with a `parse_raw()' method, thanks to duck-typing). This keeps the code clean of duplicate pydantic model decoding and error handling.

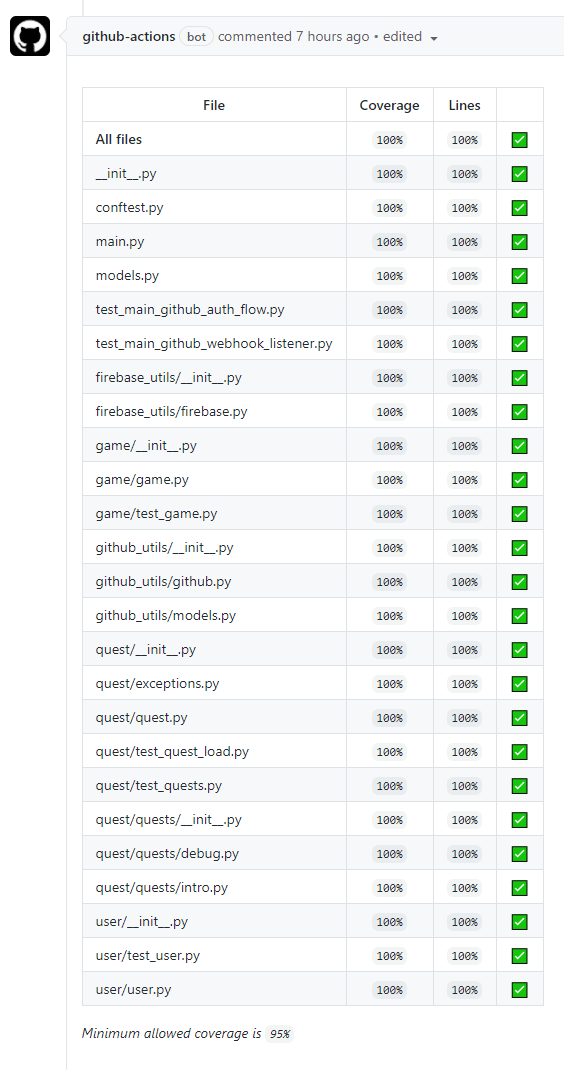

And now, the CI reports 100% test coverage!

Top comments (0)