This is a Plain English Papers summary of a research paper called Samba: Simple Hybrid State Space Models for Efficient Unlimited Context Language Modeling. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

- This paper introduces "Samba", a language model architecture that combines a state space model with attention for efficient, scalable language modeling with unlimited context length.

- Samba uses a hybrid architecture that interleaves, layer by layer, Mamba (a selective state space model) with sliding window attention, so the model captures both short-term and long-term dependencies in text.

- The authors demonstrate that Samba achieves competitive perplexity scores on standard language modeling benchmarks while being more efficient and scalable than previous state-of-the-art models.

Plain English Explanation

The researchers have developed a new way to build language models, called "Samba", that can process and generate text more efficiently than existing approaches. Standard Transformer language models become expensive on long inputs, because the cost of full attention grows quadratically with the length of the text, while cheaper recurrent-style models tend to lose precise details from far back in the text. Samba addresses this by combining the two kinds of components in one model.

At the core of Samba is a state space model, a type of mathematical model that represents and predicts sequences by carrying a fixed-size summary (the "state") forward from one step to the next. Samba pairs this state space model with an attention mechanism that looks directly at the most recent words, which helps it capture the fine-grained structure of natural language.
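To make "carrying a fixed-size summary forward" concrete, here is a minimal sketch of the basic discrete state space recurrence, h_t = A h_{t-1} + B x_t and y_t = C h_t, in plain Python. It assumes the simplest time-invariant form with fixed matrices; Mamba, the state space layer Samba uses, instead makes its parameters depend on the current input (that is what "selective" means), which this sketch does not model. The function names and example numbers are illustrative assumptions, not the paper's code.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def ssm_scan(A: Matrix, B: Vector, C: Vector, xs: List[float]) -> List[float]:
    """Run a discrete, time-invariant state space model over a scalar input sequence.

    h_t = A h_{t-1} + B x_t   (state update)
    y_t = C . h_t             (readout)

    The fixed-size state h is the only memory carried between steps, so each
    step costs the same regardless of how long the sequence already is.
    """
    h = [0.0] * len(B)  # initial hidden state
    ys = []
    for x in xs:
        Ah = matvec(A, h)
        h = [ah + b * x for ah, b in zip(Ah, B)]        # mix old state with new input
        ys.append(sum(c * hi for c, hi in zip(C, h)))   # project state to an output
    return ys


# Hypothetical example: a single input pulse followed by silence.
ys = ssm_scan(A=[[0.5, 0.0], [0.0, 0.9]], B=[1.0, 1.0], C=[1.0, 1.0], xs=[1.0, 0.0, 0.0])
```

With these values the outputs are roughly 2.0, 1.4 and 1.06: the pulse at the first step keeps echoing through later outputs, fading at rates set by the diagonal of A. This is how a state space model keeps long-range information without revisiting earlier tokens.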

By blending these two components, Samba can track the immediate context of a piece of text as well as broader, longer-term patterns. This lets it generate coherent, natural-sounding text without its computational cost blowing up as the text gets longer. The researchers show that Samba performs well on standard language modeling benchmarks, matching the accuracy of state-of-the-art models while being more efficient and scalable.
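In the Samba paper, the component that covers the immediate context is sliding window attention: each token attends only to a fixed number of the most recent tokens instead of the whole history. The sketch below shows that masking rule and counts how many query-key pairs it scores compared with full causal attention. The window sizes and function names are hypothetical choices for illustration, not values from the paper.

```python
from typing import List


def sliding_window_mask(seq_len: int, window: int) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    The rule is causal (j <= i) and limited to the most recent `window`
    positions (i - j < window), as in sliding window attention.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def attended_pairs(seq_len: int, window: int) -> int:
    """Count the query-key pairs that the mask allows attention to score."""
    return sum(row.count(True) for row in sliding_window_mask(seq_len, window))


# Full causal attention is the special case window == seq_len.
local_cost = attended_pairs(seq_len=8, window=3)
full_cost = attended_pairs(seq_len=8, window=8)
```

Here `local_cost` is 21 and `full_cost` is 36 (that is, 8 * 9 / 2). With a fixed window w, the number of scored pairs grows roughly like n * w as the sequence length n grows, while full causal attention grows like n^2 / 2, which is why restricting attention to a window keeps long inputs affordable.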

Technical Explanation

The paper introduces "Samba", a hybrid language model architecture that combines a state space model with attention to capture both short-term and long-term dependencies in text.

Samba's architecture interleaves, layer by layer, Mamba (a selective state space model) with sliding window attention (SWA) and MLP layers. The Mamba layers compress the long-range context into a recurrent hidden state, while sliding window attention handles local, short-term patterns by attending precisely to recent tokens. Because no layer attends over the full sequence, the model's cost grows linearly with sequence length, which makes Samba more efficient and scalable than previous state-of-the-art models.
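As we understand the paper's design, a Samba block stacks a Mamba layer, an MLP, a sliding window attention layer and another MLP, each with a residual connection, and the full model repeats this block. The sketch below mirrors only that wiring. Every layer is a deliberately trivial, hypothetical stand-in (`toy_ssm`, `toy_swa`, `toy_mlp`) acting on one scalar per token, with no learned weights and no normalization, so it illustrates the layer ordering and the fact that every layer is causal, not the real model.

```python
from typing import List

Seq = List[float]  # toy stand-in: one scalar feature per token


def toy_ssm(xs: Seq, decay: float = 0.9) -> Seq:
    """Stand-in for a Mamba layer: a causal running summary with exponential decay."""
    out, h = [], 0.0
    for x in xs:
        h = decay * h + x
        out.append(h)
    return out


def toy_swa(xs: Seq, window: int = 2) -> Seq:
    """Stand-in for sliding window attention: a uniform average over recent tokens.

    Real attention uses learned, input-dependent weights; this only keeps the
    causal, fixed-window access pattern.
    """
    out = []
    for i in range(len(xs)):
        recent = xs[max(0, i - window + 1): i + 1]  # at most `window` tokens, none in the future
        out.append(sum(recent) / len(recent))
    return out


def toy_mlp(xs: Seq) -> Seq:
    """Stand-in for the MLP: a position-wise nonlinearity with no mixing across tokens."""
    return [max(0.0, x) for x in xs]


def hybrid_block(xs: Seq) -> Seq:
    """One hybrid block: SSM -> MLP -> SWA -> MLP, each wrapped in a residual connection."""
    for layer in (toy_ssm, toy_mlp, toy_swa, toy_mlp):
        ys = layer(xs)
        xs = [x + y for x, y in zip(xs, ys)]  # residual: add the layer output to its input
    return xs


def hybrid_model(xs: Seq, depth: int) -> Seq:
    """Repeat the hybrid block `depth` times."""
    for _ in range(depth):
        xs = hybrid_block(xs)
    return xs


out = hybrid_model([1.0, 0.0, 0.0, 0.0], depth=2)
```

Because every stand-in layer only looks at the current and earlier tokens, changing a later input never changes earlier outputs, which is the property that lets such a stack generate text one token at a time.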

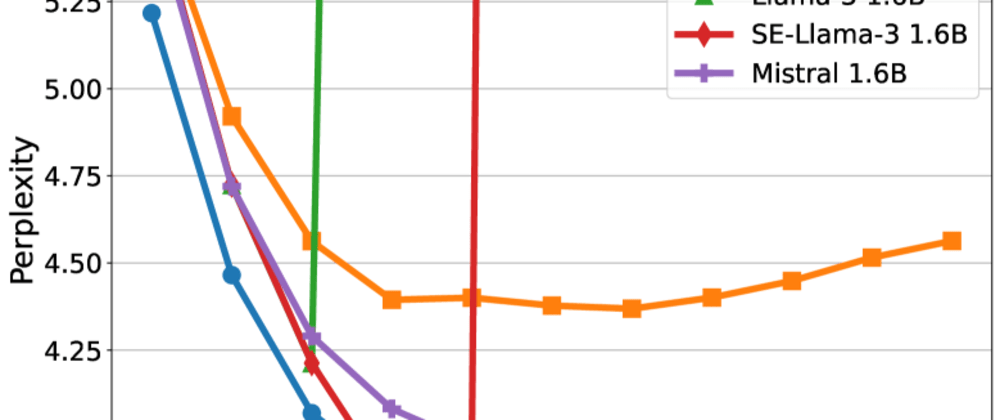

The authors evaluate Samba on standard language modeling benchmarks and show that it achieves competitive perplexity scores while being more computationally efficient than previous approaches. This suggests that pairing a state space model with attention is a promising route to effective and scalable language modeling.
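Perplexity, the metric mentioned above, is the exponential of the average negative log-likelihood a model assigns to the correct next tokens; lower is better. A minimal sketch of that formula follows, assuming you already have the model's probability for each correct token (the function name and inputs are illustrative).

```python
import math
from typing import Sequence


def perplexity(token_probs: Sequence[float]) -> float:
    """Return exp(mean negative log-likelihood) over a sequence of tokens.

    token_probs[i] is the probability the model assigned to the i-th correct
    next token. Every probability must be greater than 0.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)


# A model that always gives the correct token probability 0.25 has perplexity of about 4.
ppl = perplexity([0.25, 0.25, 0.25, 0.25])
```

One intuition: a perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among 4 equally likely tokens.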

Critical Analysis

The paper provides a thorough evaluation of Samba's performance on standard language modeling tasks, but there are a few potential limitations that could be explored in future work:

- The authors only evaluate Samba on text-based language modeling benchmarks. It would be interesting to see how the model performs on tasks involving multimodal data, such as vision-language modeling.

- The paper does not delve into the interpretability of Samba's internal representations. Understanding how the state space and neural network components interact to capture language structure could lead to valuable insights.

- The authors mention that Samba is more computationally efficient than previous models, but they do not provide a detailed analysis of the model's scaling properties or its suitability for real-world, resource-constrained deployment scenarios.

Overall, the Samba approach is a promising step forward in the quest for efficient and scalable language modeling. Further research exploring its broader applications and potential limitations could yield valuable insights for the field.

Conclusion

The Samba paper presents a novel language modeling approach that combines a state space model with attention to capture both short-term and long-term dependencies in text. The authors show that, by blending these two components, Samba achieves competitive performance on standard language modeling benchmarks while being more computationally efficient than previous state-of-the-art models.

This work highlights the potential for hybrid architectures that leverage the strengths of different modeling techniques to create more effective and scalable language models. As natural language processing continues to advance, approaches like Samba may play an important role in developing language models that are both accurate and practical for real-world applications.

