This is a Plain English Papers summary of a research paper called Understanding Hallucinations in Diffusion Models through Mode Interpolation. If you like this kind of analysis, you should subscribe to the AImodels.fyi newsletter or follow me on Twitter.

Overview

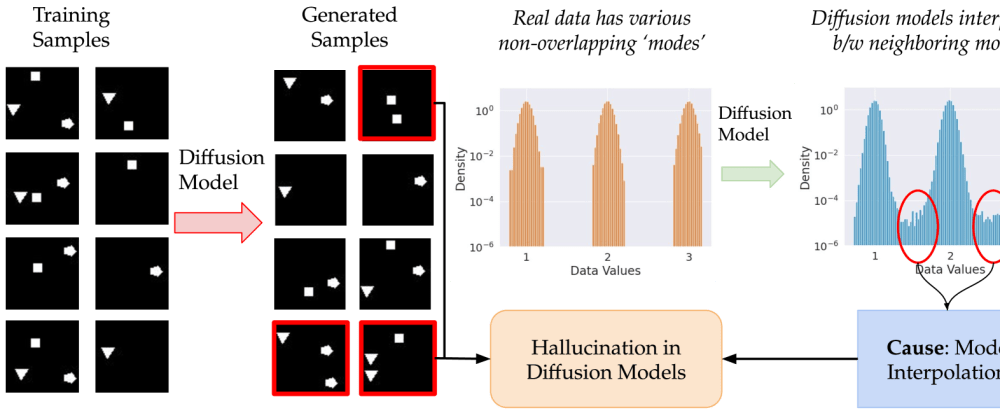

- This paper explores the issue of "hallucinations" in diffusion models, which are a type of machine learning model used to generate images.

- Here, hallucinations are samples that lie outside the support of the training data: the model generates content that could never occur in the data it was trained on, such as objects or combinations of details that no training image contains.

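- The researchers trace this phenomenon to what they call "mode interpolation": the model smoothly interpolates between nearby modes (clusters) of the training data and generates samples in the gaps between them. Studying this behavior helps explain how diffusion models work and which factors contribute to hallucinations.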

Plain English Explanation

Diffusion models are a powerful type of AI that can create new images from scratch. However, these models sometimes generate content that could never occur in the images they were trained on; this is what's known as "hallucination." The researchers in this paper looked into where these hallucinations come from and traced them to a behavior they call "mode interpolation."

Studying mode interpolation lets the researchers look at how diffusion models work under the hood and pin down what leads to hallucinations. By understanding this better, they hope to find ways to reduce or eliminate hallucinations in the future. This matters because we want AI-generated images to be faithful to the kind of data the model learned from, not something the model has "made up."

The paper dives into the technical details of how diffusion models and mode interpolation work, but the key takeaway is that the researchers are trying to shine a light on this hallucination problem in order to improve the reliability and trustworthiness of AI-generated images going forward.

Technical Explanation

The researchers study hallucinations in diffusion models through the lens of "mode interpolation." Diffusion models are trained by adding noise to images in a stepwise fashion and learning to reverse that process; to generate a new image, the model starts from pure noise and applies the learned reverse steps. However, this process can sometimes lead the model to "hallucinate" samples that lie outside the support of the training data, meaning they could never occur in it.
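The noising half of this process has a simple closed form in the standard DDPM formulation: x_t = sqrt(abar_t) * x_0 + sqrt(1 - abar_t) * eps, where abar_t is the cumulative product of (1 - beta_s) and eps is standard Gaussian noise. The sketch below shows it on scalar data, assuming a linear beta schedule from 1e-4 to 0.02 over 1000 steps (common DDPM defaults, not necessarily the settings used in this paper). The function names are hypothetical.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced per-step noise variances beta_1..beta_T."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """abar_t = product over s <= t of (1 - beta_s): how much signal survives to step t."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def noise_to_step(x0, t, abars, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x0, 1 - abar_t) in one shot.

    Returns (x_t, eps); eps is the noise a denoiser would be trained to predict.
    """
    eps = rng.gauss(0.0, 1.0)
    return math.sqrt(abars[t]) * x0 + math.sqrt(1.0 - abars[t]) * eps, eps


abars = cumulative_alphas(linear_beta_schedule(1000))
rng = random.Random(0)
for t in (0, 250, 500, 999):
    x_t, _ = noise_to_step(1.0, t, abars, rng)
    print(f"t={t:>3}  signal scale={math.sqrt(abars[t]):.4f}  x_t={x_t:+.4f}")
```

Early steps leave the sample close to x_0, while by the last step the signal scale is tiny and x_t is essentially pure noise. Generation runs this in reverse, which is where a learned model, and its approximation errors, come in.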

Here, a "mode" is a region where the training data is concentrated, such as one cluster of similar images or one component of a mixture of Gaussians. The researchers find that diffusion models smoothly interpolate between nearby modes, producing samples in the low-density gaps between them. Because nothing in the training data lies in those gaps, such samples are hallucinations by definition.
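To make "modes" concrete, here is a minimal sketch, assuming a toy 1D training distribution made of two narrow Gaussian modes at -1 and +1 and the usual variance-preserving noise model x_t = a * x_0 + b * eps with a^2 + b^2 = 1. It computes, in closed form, the ideal denoiser E[x_0 | x_t] that a diffusion model trained with a mean-squared-error objective is trying to approximate, and prints it just around the midpoint between the modes at several noise levels. The mode positions, widths, and function names are illustrative assumptions, not the paper's code.

```python
import math

MU, S = 1.0, 0.05  # hypothetical toy data: two narrow Gaussian modes at -MU and +MU


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def ideal_denoiser(x_t, a, b):
    """Exact posterior mean E[x_0 | x_t] when x_t = a*x_0 + b*eps, eps ~ N(0, 1).

    The posterior weight of the +MU mode is sigmoid(2*a*MU*x_t / var_t);
    within each mode, standard Gaussian conditioning gives the mean.
    """
    var_t = a * a * S * S + b * b          # variance of x_t within one mode
    w_plus = sigmoid(2.0 * a * MU * x_t / var_t)
    gain = a * S * S / var_t               # Cov(x_0, x_t) / Var(x_t) within a mode
    mean_plus = MU + gain * (x_t - a * MU)
    mean_minus = -MU + gain * (x_t + a * MU)
    return w_plus * mean_plus + (1.0 - w_plus) * mean_minus


def noise_scales(noise_var):
    """Signal/noise scales (a, b) for a variance-preserving step with b^2 = noise_var."""
    return math.sqrt(1.0 - noise_var), math.sqrt(noise_var)


for noise_var in (0.99, 0.5, 0.1, 0.01):
    a, b = noise_scales(noise_var)
    outputs = [ideal_denoiser(x, a, b) for x in (-0.05, 0.0, 0.05)]
    print(f"b^2={noise_var:<5} E[x0|x_t] at x_t=-0.05, 0, +0.05:",
          [round(v, 3) for v in outputs])
```

At high noise the estimate barely changes as x_t crosses the midpoint, but at low noise a shift of just 0.05 pushes it most of the way toward one mode or the other: the ideal denoiser approaches a step function between modes. The paper argues that this kind of near-discontinuity is what a smooth learned approximation gets wrong.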

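The paper supports this with experiments on simple 1D and 2D Gaussian mixtures and on synthetic datasets of shapes, where the model generates combinations of shapes that never appear in the training data. In particular, they find that hallucinations arise when the model has to "bridge the gap" between different modes in the training data: the function the model must learn changes almost discontinuously between modes, and any smooth approximation of it, which is what a neural network produces, sends some samples into the region in between.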
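To see how a smooth approximation can "bridge the gap," here is a toy simulation; it is an illustration under stated assumptions, not the paper's experiment. It assumes 1D training data made of two narrow Gaussian modes at -1 and +1, generates samples with a deterministic DDIM sampler, and compares the exact denoiser against a hypothetical "smoothed" one whose switch between modes is capped at a gentler slope (`max_slope`), a crude stand-in for a network that cannot represent a sharp jump. The schedule, the cap value, and all names are illustrative assumptions.

```python
import math
import random

MU, S = 1.0, 0.05  # toy training data: two narrow Gaussian modes at -MU and +MU


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def denoise(x_t, a, b, max_slope=None):
    """Estimate x_0 from x_t = a*x_0 + b*eps for the two-mode toy data.

    With max_slope=None this is the exact posterior mean E[x_0 | x_t]. The
    weight of the +MU mode is sigmoid(slope * x_t) with slope = 2*a*MU/var_t;
    capping that slope is a hypothetical stand-in for a smooth learned model.
    """
    var_t = a * a * S * S + b * b
    slope = 2.0 * a * MU / var_t
    if max_slope is not None:
        slope = min(slope, max_slope)
    w_plus = sigmoid(slope * x_t)
    gain = a * S * S / var_t
    mean_plus = MU + gain * (x_t - a * MU)
    mean_minus = -MU + gain * (x_t + a * MU)
    return w_plus * mean_plus + (1.0 - w_plus) * mean_minus


def ddim_sample(x_T, abars, max_slope=None):
    """Deterministic DDIM (eta = 0) reverse pass, returning the final x_0 estimate."""
    x = x_T
    for t in range(len(abars) - 1, 0, -1):
        a, b = math.sqrt(abars[t]), math.sqrt(1.0 - abars[t])
        x0_hat = denoise(x, a, b, max_slope)
        eps_hat = (x - a * x0_hat) / b          # noise implied by the x_0 estimate
        a_prev, b_prev = math.sqrt(abars[t - 1]), math.sqrt(1.0 - abars[t - 1])
        x = a_prev * x0_hat + b_prev * eps_hat  # jump to the previous noise level
    return denoise(x, math.sqrt(abars[0]), math.sqrt(1.0 - abars[0]), max_slope)


def count_in_gap(samples, half_width=0.5):
    """Count samples with |x| < half_width: at least 10 mode widths from either mode."""
    return sum(1 for x in samples if abs(x) < half_width)


# Linear noise schedule over T steps and its cumulative products abar_t.
T = 200
betas = [1e-4 + (0.1 - 1e-4) * i / (T - 1) for i in range(T)]
abars, prod = [], 1.0
for beta in betas:
    prod *= 1.0 - beta
    abars.append(prod)

rng = random.Random(0)
starts = [rng.gauss(0.0, 1.0) for _ in range(500)]
ideal = [ddim_sample(x, abars) for x in starts]
smooth = [ddim_sample(x, abars, max_slope=3.0) for x in starts]
print("exact denoiser, samples in gap:", count_in_gap(ideal))
print("smoothed denoiser, samples in gap:", count_in_gap(smooth))
```

With the exact denoiser, essentially every sample should end up near -1 or +1; with the capped slope, a noticeable share should end up stranded between the modes, in a region that contains no training data. That is the mode-interpolation failure in miniature.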

Critical Analysis

The researchers acknowledge several limitations in their work. For one, mode interpolation describes one particular failure mode, so it offers only a partial view into the inner workings of diffusion models: factors other than interpolation between modes may also contribute to hallucinations.

Additionally, the experiments are conducted on relatively simple datasets, so it's unclear how well the insights would translate to more complex, real-world applications of diffusion models. Further research would be needed to validate the findings at scale.

That said, the mode interpolation perspective does seem like a promising avenue for better understanding and potentially mitigating hallucinations in these types of generative models. The researchers outline some directions for future work, such as investigating the role of model architecture and training data in hallucination behavior.

Conclusion

This paper takes an important step towards unpacking the issue of hallucinations in diffusion models, a critical problem as these generative AI systems become more widely adopted. By identifying mode interpolation as one source of hallucinations, the researchers gain valuable insight into the inner workings of diffusion models and into why they sometimes generate content that lies outside their training data.

While more research is needed, this work lays the groundwork for developing strategies to reduce or eliminate hallucinations, which will be crucial for ensuring the reliability and trustworthiness of AI-generated imagery. As diffusion models and other generative AI continue to advance, addressing the challenge of hallucinations will only become more important for the field.

