Over the last few weeks, our team took on the challenge of developing a simple yet effective Content Moderation Service. The end goal is to help app creators keep their projects safe for work and free from abusive images, without spending too much time and effort on content moderation.

The solution comes in a brief series of just three tutorials that present a fully functional open-source Content Moderation service with a ReactJS-based Admin Panel. It can be easily integrated into any project, even if this is your first encounter with Machine Learning.

Agenda

6. Configuration and Deployment

Background

We released the first piece of our moderation system just last week. It offers a ready-to-integrate image classification REST API, which uses NSFW.JS to return predictions of how likely a certain image is to be categorized as Porn, Sexy, Hentai, Drawing or Neutral.

In case you missed the previous article about building the REST API, we strongly recommend having a look now, as it gives further insight into the motivation behind this service, the stack used, and the API setup and configuration.

The Problem

Classification alone still leaves us with the task of manually moderating images one by one... and if you’re lucky enough to have many active users, the total number of pics awaiting approval may easily get your head spinning.

Let’s say we have a growing self-training fitness application with a social element to it. Everybody wants a beach body...and they want to show it off! 😄 To stay on track and motivate each other, our users upload photos of their progress.

Now, imagine it’s a medium-sized app with 5 000 daily users, and 5 000 photos are uploaded every 24 hours. That makes 35 000 per week and 150 000 per month. WOW! Do we need to set aside a budget for content moderators? Or go through 150 000 pictures manually each month? No, thanks! There’s gotta be an easier way.

Instead of examining a huge pile of photos, we will put Machine Learning into action and upgrade the Image Classification REST API with automation logic that will significantly cut down the number of photos for manual moderation.

The Solution

We already have an easy way of classifying images, but going through all the results is still quite time-consuming... and to be honest, not really fun. So to optimize the process, we will add automation.

As in the first tutorial, we will use a SashiDo hosted app for the examples, so we can spare any infrastructure hassles. Anyhow, the code is open-source and can be integrated into projects hosted on any other provider that supports fully featured NodeJS + MongoDB - Parse Server or even cloud-hosting solutions like Digital Ocean and AWS.

This second part of our moderation service combines the Image Classification API and the Moderation Automation Engine. You can simply clone the project from SashiDo’s GitHub repo.

git clone https://github.com/SashiDo/content-moderation-automations

Next, deploy it to production and set the parameters defining which photos will be considered safe or toxic. Simple as that! 😊 But wouldn’t it be nice to have some context on what it’s all about?

Automation Engine

The entire service is built on top of Parse Server (a NodeJS backend framework) and uses Cloud Code for all server-side logic. To solve the task at hand and automate the decision-making process, we use simple Cloud Code triggers and build the process around a few easy steps. Basically, the foundation of the Automation Engine is determining whether an image is considered safe or harmful for your users based on your predefined ranges.

Moderation Preferences

To begin with, you need to define which of the five NSFW classes and which values can indicate disturbing images and require moderation, i.e. which predictions are considered safe and which toxic.

For our fitness application, for example, images with a Hentai probability above 0.8 (80%) are absolutely intolerable, and we’ll want to flag those for deletion directly. In short, the idea is to define all classes and value ranges that will require moderation, e.g.:

{

"Sexy": { "min": 0.5, "max": 0.8 },

"Porn": { "min": 0.4, "max": 0.8 },

"Hentai": { "min": 0.4, "max": 0.8 }

}

As defining your moderation preferences is of great importance, we’ll pay some special attention to its tuning later on.
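A typo in these ranges quietly changes what gets flagged, so it can help to sanity-check the object before saving it. The snippet below is only a minimal sketch and not part of the original project: the helper name `validateModerationScores` is hypothetical, and it assumes every range should satisfy 0 <= min <= max <= 1 and use one of the five NSFW.JS class names.

```javascript
// Hypothetical helper (not from the SashiDo repo): sanity-checks a moderationScores object.
const NSFW_CLASSES = ["Drawing", "Hentai", "Neutral", "Porn", "Sexy"];

function validateModerationScores(scores) {
  const problems = [];
  for (const [className, range] of Object.entries(scores)) {
    if (!NSFW_CLASSES.includes(className)) {
      problems.push(`Unknown class "${className}"`);
      continue;
    }
    const { min, max } = range || {};
    if (typeof min !== "number" || typeof max !== "number") {
      problems.push(`${className}: min and max must be numbers`);
    } else if (min < 0 || max > 1 || min > max) {
      problems.push(`${className}: expected 0 <= min <= max <= 1`);
    }
  }
  return problems; // an empty array means the ranges look consistent
}

const problems = validateModerationScores({
  Sexy: { min: 0.5, max: 0.8 },
  Porn: { min: 0.4, max: 0.8 },
  Hentai: { min: 0.4, max: 0.8 },
});
console.log(problems.length === 0 ? "moderationScores look consistent" : problems);
```

Running a check like this before updating the config is cheap and catches swapped min/max values or misspelled class names early.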

The Pure Automation Process

The automation process is integrated with the Parse Server afterSave trigger, which executes automatically once a change is made to the class holding user-generated content.

The logic inside is simply to check if the newly uploaded photo is safe, toxic, or requires manual moderation and save the result to the database.

For the above-mentioned parameters, once we integrate the Automation Engine, it will mark all pictures that score below the min values as safe and the ones above the max values as toxic. The in-between results require manual moderation.
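The rule above can be sketched in a few lines of JavaScript. This is an illustration under stated assumptions, not the code from the repo: the function name `moderate` is made up, a single class above its max is treated as enough to mark the image toxic, and a probability exactly equal to min or max is treated as in-between.

```javascript
// Sketch of the decision rule; names are illustrative, not the project's actual code.
function moderate(predictions, moderationScores) {
  let needsReview = false;
  for (const { className, probability } of predictions) {
    const range = moderationScores[className];
    if (!range) continue; // this class is not moderated at all
    if (probability > range.max) return "toxic"; // one class above max is enough
    if (probability >= range.min) needsReview = true; // in-between band
  }
  return needsReview ? "moderationRequired" : "safe";
}

const scores = {
  Sexy: { min: 0.5, max: 0.8 },
  Porn: { min: 0.4, max: 0.8 },
  Hentai: { min: 0.4, max: 0.8 },
};

console.log(moderate([{ className: "Porn", probability: 0.92 }], scores)); // toxic
console.log(moderate([{ className: "Sexy", probability: 0.65 }], scores)); // moderationRequired
console.log(moderate([{ className: "Neutral", probability: 0.97 }], scores)); // safe
```

Classes that are missing from the ranges (Neutral and Drawing here) never influence the result, which is why leaving a class out of the config effectively whitelists it.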

In this project, the afterSave is hooked to a collection named UserImage. To use it straight away after deployment, either keep the same class name or replace it with your own class name in your production code.

Of course, it is up to you how these images will be handled on the client-side. You can also extend the Engine logic and customize the server-side in a way corresponding to the specific use case, applying auto-deletion of toxic images for example, and so on.

You can find more information about the implementation of the Automation Engine and the trigger in the repo: NSFW Image Valuation and Cloud Trigger Automation.

Database Schema

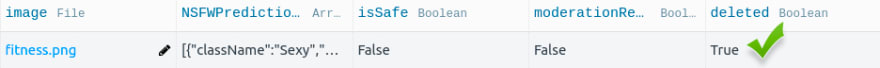

Keeping a neat record of classification and moderation actions also falls into the best practices that save time and effort. Storing information for each image gives us a clear history of why for instance it has been flagged as rejected or approved. However, you need to make some adjustments to the DB Schema and add the following columns to the DB collection that stores users’ images.

- isSafe (Boolean) - If an image is safe according to the moderation preferences, the Automation Engine will mark it isSafe - true.

- deleted (Boolean) - Likewise, the Automation Engine will mark inappropriate images as deleted - true. Those pictures won’t be automatically deleted from the file storage. Good practice suggests toxic images should not be erased, as they might help you detect abusive users. For example, we can easily check how many indecent photos a specific user has uploaded and ban them from the app after a count that makes it clear this is not an accidental mistake but intentional behavior.

- moderationRequired (Boolean) - All images that fall between the isSafe and the deleted marks. Manual moderation is required for those images!

- NSFWPredictions (Array) - Stores the NSFW predictions for this image as JSON.

The Automation Engine takes care of filling in all of these columns once a photo is uploaded.
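To picture how a trigger could fill these columns, here is a rough sketch. It is not the implementation from the repo: `registerModerationTrigger`, `classify` and `moderate` are hypothetical names, `Parse` is passed in as a parameter so the snippet can also run outside Cloud Code, and the guard on NSFWPredictions is just one assumed way to avoid re-processing an object when the trigger saves it.

```javascript
// Sketch only. `classify` (returns NSFW predictions) and `moderate` (returns "safe",
// "toxic" or "moderationRequired") are hypothetical callbacks, not the repo's functions.
function registerModerationTrigger(Parse, classify, moderate) {
  Parse.Cloud.afterSave("UserImage", async (request) => {
    const image = request.object;

    // Saving below fires afterSave again, so skip objects that were already processed.
    if (image.get("NSFWPredictions")) return;

    const predictions = await classify(image);
    const verdict = moderate(predictions);

    image.set("NSFWPredictions", predictions);
    image.set("isSafe", verdict === "safe");
    image.set("deleted", verdict === "toxic");
    image.set("moderationRequired", verdict === "moderationRequired");
    await image.save(null, { useMasterKey: true });
  });
}

// Demo with an in-memory stand-in for Parse, so the flow can be tried without a server.
const handlers = {};
const fakeParse = { Cloud: { afterSave: (name, fn) => { handlers[name] = fn; } } };
registerModerationTrigger(
  fakeParse,
  async () => [{ className: "Porn", probability: 0.9 }],
  () => "toxic"
);

const fields = {};
const fakeImage = {
  get: (key) => fields[key],
  set: (key, value) => { fields[key] = value; },
  save: async () => fakeImage,
};
handlers.UserImage({ object: fakeImage }).then(() => console.log(fields));
```

In real Cloud Code you would pass the global `Parse` object instead of the stand-in and plug in your actual classification and decision functions.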

To learn more about how to upload files with a Parse SDK, take a look here: Uploading file with Parse JS SDK

Moderation Preferences Tuning

Deciding which images are safe and which are unacceptable is crucial and specific to every app or business. There are as many different preferences as there are apps on the market, and no clear-cut, recognized standards.

For example, in our fitness application, uploading sexy images of well-shaped bodies after a completed program makes perfect sense. However, nudes are highly undesirable. On the other hand, if we have a children’s app where pre-school kids upload drawings, sexy photos are also out of bounds.

You have to take into account which parameters match your needs best and set the ranges accordingly. From then on, our content moderation service makes adjusting these settings straightforward. We save the Moderation Preferences into a moderationScores config parameter prior to deployment. Such an approach makes changing the criteria in times of need or for different projects as easy as pie! 😉

Examples

Let’s see how the automation will behave with some examples of suitable moderation parameters. We’ll set different moderationScores that match the respective audience for our fitness application and the one for children's art and check the results.

Quick throwback - the REST API returns probabilities for 5 classes: Drawing, Hentai, Neutral, Porn, Sexy. If any of these classes is disturbing for your users, include it in the moderationScores.

1. Fitness Application

Let’s take the fitness application we already mentioned for our first example. We decided that sexy photos are perfectly okay and do not need moderation, hence this class has no place in the moderationScores config. We’ll just add Porn and Hentai, as this is undesirable content for our users. Next, let's also include the Drawing class since we want fitness photos only. Here’s one recommendation for moderation preferences:

{

"Drawing": { "min": 0.4, "max": 0.8 },

"Porn": { "min": 0.4, "max": 0.8 },

"Hentai": { "min": 0.2, "max": 0.8 }

}

Let’s upload this sexy pic of a woman training and see what happens. 🙂

The prediction we get from the API is:

{"className": "Sexy","probability": 0.9727559089660645},

{"className": "Neutral","probability": 0.01768375374376774},

{"className": "Porn","probability": 0.009044868871569633},

{"className": "Drawing","probability": 0.0004224120930302888},

{"className": "Hentai","probability": 0.00009305890125688165}

As the picture doesn’t fall into any of the classes that require moderation, the upload is automatically approved, i.e. marked isSafe - true in the database.

2. Children’s Drawings Platform

While the photo above is perfectly fine for our fitness app example, it can’t be defined as a kid’s drawing for sure. Therefore it has no place on our app for preschoolers’ art. Also, images that have even the slightest chance to be categorized as Hentai or Porn are definitely off the table. If only children’s drawings should be uploaded, we can include the Neutral class for moderation as well, but select higher values, as there might be a child holding a drawing for example.

Take a look at the parameters we’ve set to protect children and how the Automation Engine handles the same photo once we change the moderationScores.

{

"Porn": { "min": 0.1, "max": 0.4 },

"Sexy": { "min": 0.1, "max": 0.6 },

"Hentai": { "min": 0.1, "max": 0.4 } ,

"Neutral": { "min": 0.4, "max": 0.9}

}
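To make the outcome concrete, the sketch below replays the prediction returned for the fitness photo against these children's platform settings. It assumes the rule described earlier (above max means toxic, between min and max means manual moderation); the per-class breakdown is illustrative and not taken from the project's code.

```javascript
// Predictions the API returned for the fitness photo (copied from the example above).
const predictions = [
  { className: "Sexy", probability: 0.9727559089660645 },
  { className: "Neutral", probability: 0.01768375374376774 },
  { className: "Porn", probability: 0.009044868871569633 },
  { className: "Drawing", probability: 0.0004224120930302888 },
  { className: "Hentai", probability: 0.00009305890125688165 },
];

// moderationScores for the children's drawings platform.
const childrenScores = {
  Porn: { min: 0.1, max: 0.4 },
  Sexy: { min: 0.1, max: 0.6 },
  Hentai: { min: 0.1, max: 0.4 },
  Neutral: { min: 0.4, max: 0.9 },
};

// Assumed rule: above max -> toxic, between min and max -> needs review, otherwise below min.
const breakdown = predictions.map(({ className, probability }) => {
  const range = childrenScores[className];
  let verdict = "not moderated";
  if (range) {
    verdict = probability > range.max ? "toxic"
      : probability >= range.min ? "needs review"
      : "below min";
  }
  return { className, verdict };
});

console.table(breakdown);
const isToxic = breakdown.some((row) => row.verdict === "toxic");
console.log(isToxic ? "deleted: true" : "not flagged as toxic");
```

The Sexy probability of roughly 0.97 is above the max of 0.6, so under this rule the same photo that was approved for the fitness app is flagged as toxic here.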

As already established, moderationScores values are strictly specific to each project, so even though we’ve shared some examples, our recommendation is to put some serious thought into fine-tuning the parameters to match your needs best!

The best thing to do is to set some default parameters, like the ones we suggest above, and test with some real pictures from your app. Take 50-60 images that you consider inappropriate and monitor the NSFW predictions. This is a safe and effective way to calibrate the settings according to the beat of your project.
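One simple way to monitor the predictions is to summarise them per class. The sketch below is a hypothetical helper, not something shipped with the service: it assumes you have collected the API responses for your test images into an array (the `samples` values here are made up for illustration) and reports the lowest and highest probability seen for each class.

```javascript
// Hypothetical helper: summarises predictions collected from a set of test images.
function summarise(samples) {
  const summary = {};
  for (const predictions of samples) {
    for (const { className, probability } of predictions) {
      const entry = summary[className] || { lowest: 1, highest: 0 };
      entry.lowest = Math.min(entry.lowest, probability);
      entry.highest = Math.max(entry.highest, probability);
      summary[className] = entry;
    }
  }
  return summary;
}

// Made-up predictions for three images we consider inappropriate.
const samples = [
  [{ className: "Porn", probability: 0.91 }, { className: "Sexy", probability: 0.07 }],
  [{ className: "Porn", probability: 0.55 }, { className: "Sexy", probability: 0.4 }],
  [{ className: "Porn", probability: 0.78 }, { className: "Sexy", probability: 0.2 }],
];

console.log(summarise(samples));
```

If the lowest Porn probability among your inappropriate test images turns out to be 0.55, for instance, keeping the Porn min below that value means none of those images would be auto-approved.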

Manual Moderation

Next week we will bundle the Image Classification REST API and Automation Engine with a polished React-based UI. This will allow you to quickly decide on all photos that require manual moderation and apply an action with just one click.

Still, if you can’t wait to add an interface to the moderation service, you can build an Admin panel of your own. On SashiDo, you can easily build an Admin panel with the JS technologies that please you most - Angular, React, Vue… just take your pick. 🙂

Here’s an example of how to get all images that require moderation from the most-used Parse SDKs and from Parse REST API.

JS SDK

const query = new Parse.Query("UserImage");

query.equalTo("moderationRequired", true);

query.find().then((results) => {

console.log(results);

});

More information about how to work with the Parse JS SDK can be found in the official docs

Android SDK

ParseQuery<ParseObject> query = ParseQuery.getQuery("UserImage");

query.whereEqualTo("moderationRequired", true);

query.findInBackground(new FindCallback<ParseObject>() {

public void done(List<ParseObject> userImages, ParseException e) {

if (e == null) {

Log.d("moderation", "Retrieved " + userImages.size() + " images for moderation");

} else {

Log.d("moderation", "Error: " + e.getMessage());

}

}

});

More information about how to work with the Android SDK can be found in the official docs.

iOS SDK

let query = PFQuery(className:"UserImage")

query.whereKey("moderationRequired", equalTo:true)

query.findObjectsInBackground { (objects: [PFObject]?, error: Error?) in

if let error = error {

// Log details of the failure

print(error.localizedDescription)

} else if let objects = objects {

// The find succeeded.

print("Successfully retrieved images for moderation")

}

}


More information about how to work with the Parse iOS SDK can be found in the official docs.

REST API

curl -X GET \

-H "X-Parse-Application-Id: ${APPLICATION_ID}" \

-H "X-Parse-REST-API-Key: ${REST_API_KEY}" \

-G \

--data-urlencode 'where={"moderationRequired": true}' \

http://localhost:1337/1/classes/UserImage


You can find more details on REST queries in the official Parse REST API Guide. SashiDo users can also test REST requests from a super-friendly API Console that’s built into the Dashboard.

Configuration and Deployment

Hopefully, you now have a clear image of how the Classification REST API and Automation Engine work together. All that’s left is to set the configs. Apart from the moderationScores, we’ll include an option to enable/disable the automation and config caching.

Configuration

Parse Server offers two approaches for app config settings: Parse.Config and Environment Variables. What’s the difference? Parse.Config is a very simple and useful feature that empowers you to update the configuration of your app on the fly, without redeploying. However, the downside is that these settings are public, so it’s not recommended for sensitive data. Environment variables, on the other hand, are private but will trigger a redeployment of your project each time you change something. As always, the truth is somewhere in between, and we’ll use both!

Parse.Configs

We’ve chosen to keep the moderationScores as a Parse.Config, so we can update and fine-tune criteria on the fly.

Also, we’ll add a moderationAutomation option of type boolean. It gives us a way to enable or disable content moderation automation with just a click when needed, for example when you want to test a new code version without automation.

Environment Variables

If you have already integrated the first piece of our service - the image classification API - then TF_MODEL_URL and TF_MODEL_SHAPE_SIZE are already set in your project. As these are a must, let me refresh your memory of the available options.

All that’s left to add is the CONFIG_CACHE_MS variable. It is used for caching the Parse.Configs, and the value you pass is in milliseconds.
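To illustrate the idea behind CONFIG_CACHE_MS, here is a minimal sketch of a time-based cache around an async config fetch. It is an assumption about how such caching could look, not the project's actual code: `createConfigCache` is a made-up name, the 10000 ms fallback is chosen only for this example, and in Cloud Code the fetcher would typically be `() => Parse.Config.get()`.

```javascript
// Sketch: reuses the result of an async config fetch for `ttlMs` milliseconds.
// The 10000 ms fallback below is an assumption for this example, not a documented default.
function createConfigCache(fetchConfig, ttlMs, now = Date.now) {
  let cached = null;
  let fetchedAt = 0;
  return function getConfig() {
    if (cached === null || now() - fetchedAt >= ttlMs) {
      fetchedAt = now();
      const pending = Promise.resolve(fetchConfig()).catch((error) => {
        if (cached === pending) cached = null; // do not keep a failed fetch in the cache
        throw error;
      });
      cached = pending;
    }
    return cached;
  };
}

const ttlMs = Number(process.env.CONFIG_CACHE_MS) || 10000;

// Stand-in fetcher so the sketch runs without a Parse Server.
let fetchCount = 0;
const getConfig = createConfigCache(async () => {
  fetchCount += 1;
  return { moderationAutomation: true };
}, ttlMs);

getConfig();
getConfig().then((config) => {
  console.log("moderationAutomation:", config.moderationAutomation, "fetches:", fetchCount);
});
```

With a cache like this, the trigger does not have to request the config on every single upload, while changes to moderationScores or moderationAutomation still take effect once the cached value expires.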

Here are all the environment variables you need.

Deployment

SashiDo has implemented an automatic git deployment process following The Twelve-Factor App principles. To deploy the Automation Engine, first connect your SashiDo account to GitHub.

Once done, continue with the following simple steps:

1. Clone the repo from GitHub

git clone https://github.com/SashiDo/content-moderation-automations.git

2. Set the configs and env vars in production

checked ✔️

3. Add your SashiDo app as a remote and push changes

git remote add production git@github.com:parsegroundapps/<your-pg-app-your-app-repo>.git

git push -f production master

Missing something? Check the README.md for details. And don’t forget to drop us a line at hello@sashido.io about what else you would like to see included in this article :)

TA-DA!🎉 You’re now equipped with fully functional Content moderation logic that will surely spare you a lot of time.

What is Next

The first two chunks of the Moderation Services are already assembled. The icing on the cake comes with the third part - a beautiful ReactJS Admin Panel that turns even the most boring tasks into a game. 😄 Check the Demo!

And if you’re still wondering where to host such a project, don’t forget that SashiDo offers an extended 45-day Free trial with no credit card required, as well as an exclusive Free consultation by SashiDo's experts for projects involving Machine Learning.

What is your specific use case and what features would you like added to our moderation service? You're more than welcome to share your thoughts at hello@sashido.io.

Happy Coding!
