In the last article, we asked ourselves how the operating system gives each process the illusion that it has its own address space despite only having one hardware RAM. This is one of the most important and most complicated virtualization techniques that the operating system performs. Because of that we will discuss memory virtualization in three separate articles. The goals of the operating system with respect to memory are as follows:

- Give processes a contiguous address space

- Give processes memory isolation from each other

- Do both of the above efficiently with respect to memory usage and processing speed

Let’s first talk about what memory is. Memory can be thought of as a series of numbered slots, each one byte wide. The number of a slot is more commonly referred to as its address. A 32-bit machine typically works with memory 4 bytes (32 bits) at a time and a 64-bit machine 8 bytes (64 bits) at a time, but the addresses themselves still count individual bytes. The size of a computer’s memory can be quite large. For example, on my Mac, the size of the memory is 8 gigabytes, so its bytes are numbered 0 to 2^33 - 1 (8,589,934,591), and each aligned 64-bit word starts at an address that is a multiple of 8.

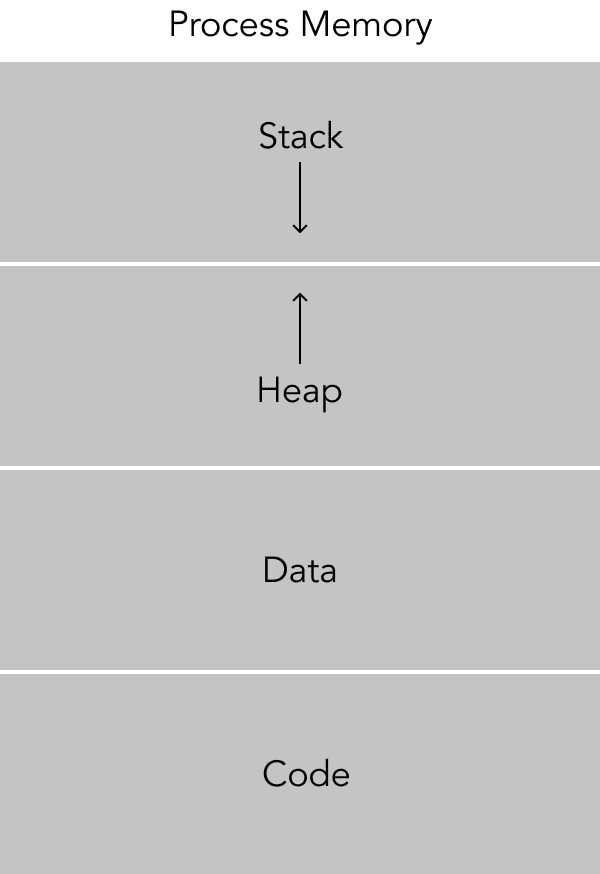

Diving a bit deeper, what does a specific process’s memory address space look like? There are a few important sections in a program’s memory: the stack, the heap, the code, and the data. At the bottom is the text segment; this is where the code lives. Above that is the data segment; variables that are global or static live here. Above that is the heap segment. This is where data allocated at runtime, for example with the malloc call in C, is located. The heap grows upward: memory is allocated at the bottom first and grows toward the top. Conversely, the stack sits above the heap and grows downward. The stack is used for local variables, which exist only for the duration of their scope and are automatically freed when the scope is exited.

Contiguous Address Space

It’s important for a process to have the illusion that it has a contiguous address space. If it didn’t, pointer arithmetic would not work and we wouldn’t be able to allocate anything larger than a single word at a time. A programmer needs a consistent view of memory to be able to write code that behaves deterministically. For example, with a downward-growing stack, the program needs to know that the calling function’s stack frame sits directly above the callee’s stack frame. Otherwise, when a function returns, the program wouldn’t know where to find the saved return address that tells it which instruction to execute next.

One way to give programs a contiguous address space is to divide the computer’s total available memory (RAM, or random access memory) into a fixed number of equal-sized chunks and give each process one of these chunks.

The obvious downside of this approach is that we can only have a fixed number of processes running at a particular time. For example, if I divided up my computer’s 8 gigabytes of RAM into 1 gigabyte per process, then I would only be able to have 8 processes running simultaneously. The 9th process wouldn’t have a chunk of memory readily available to it! Additionally, there would be a large amount of wasted memory, since most of these 8 processes would likely not use up their entire chunk.
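To make the limitation concrete, here is a minimal sketch of the fixed-chunk scheme in Python. The `FixedPartitionAllocator` class and its method names are hypothetical, made up for this illustration; a real operating system does not manage memory with a Python list.

```python
class FixedPartitionAllocator:
    """Hypothetical allocator that splits RAM into equal, fixed-size chunks."""

    def __init__(self, total_bytes, chunk_bytes):
        self.chunk_bytes = chunk_bytes
        # owner[i] is the process ID that holds chunk i, or None if it is free.
        self.owner = [None] * (total_bytes // chunk_bytes)

    def allocate(self, pid):
        """Give `pid` the first free chunk and return its base physical address."""
        for index, current in enumerate(self.owner):
            if current is None:
                self.owner[index] = pid
                return index * self.chunk_bytes
        raise MemoryError(f"no free chunk for process {pid}")


GIB = 2**30
allocator = FixedPartitionAllocator(total_bytes=8 * GIB, chunk_bytes=1 * GIB)
bases = [allocator.allocate(pid) for pid in range(8)]  # processes 0..7 all fit

try:
    allocator.allocate(8)  # the 9th process
    ninth_fits = True
except MemoryError:
    ninth_fits = False

print(len(bases), ninth_fits)
```

Each process gets a contiguous range starting at its base address, but the 9th request fails even if the first 8 processes are barely using their chunks.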

How can we minimize the amount of unused memory and make it so that any process can use any free memory address? One way to do this is to add a layer of indirection that gives each process the illusion that it has a contiguous address space, even though its memory may be fragmented in the physical address space. This layer of indirection would be a translation table. The operating system could have a table that translates addresses as viewed by the process (virtual addresses) to addresses as viewed by the actual hardware (physical addresses). Every time the process reads or writes memory, it needs to ask the operating system to look at the translation table to determine which physical address corresponds to the virtual address the process is reading from or writing to.
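Here is a rough sketch of what such a translation table could look like, one entry per address. The `TranslationTable` name, the tiny 8-slot memory, and the particular free addresses are all assumptions for illustration.

```python
class TranslationTable:
    """Hypothetical per-process table mapping virtual to physical addresses."""

    def __init__(self, free_physical_addresses):
        # Free physical addresses, possibly scattered all over RAM.
        self.free = list(free_physical_addresses)
        self.mapping = {}  # virtual address -> physical address

    def translate(self, virtual_address):
        """Return the physical address, assigning a free one on first use."""
        if virtual_address not in self.mapping:
            if not self.free:
                raise MemoryError("no free physical address left")
            self.mapping[virtual_address] = self.free.pop(0)
        return self.mapping[virtual_address]


physical_memory = [0] * 8
table = TranslationTable(free_physical_addresses=[7, 2, 5])

# The process writes to virtual addresses 0, 1, 2, which look contiguous to it.
for virtual_address, value in enumerate([10, 20, 30]):
    physical_memory[table.translate(virtual_address)] = value

# Reading back through the table returns the values in virtual order.
values = [physical_memory[table.translate(v)] for v in range(3)]
print(values, table.mapping)
```

The process sees addresses 0, 1, 2 in a row, while the data actually lives at physical addresses 7, 2, and 5.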

One huge downside of this approach is that every memory access has to go through the operating system! Since most programs out there need to access memory frequently, having the operating system check this data structure on every memory access would take up a lot of CPU cycles. How can we make this lookup faster? One way to make it faster is to have the hardware perform the translation instead of the operating system. The table itself can stay in RAM, where the operating system maintains it, while a dedicated piece of hardware in the CPU, the memory management unit (MMU), does the lookup on every memory access. This would be much faster!

A common way to make the lookup fast is to have a translation lookaside buffer (TLB) in hardware. The TLB is a small cache inside the MMU that holds recently used virtual-to-physical translations. What happens when a translation is not in the TLB (a TLB miss) depends on the architecture. On architectures with a software-managed TLB, such as MIPS, the hardware traps into a handler in the operating system, the operating system looks up the translation in its table and fills the TLB with it, and the instruction re-executes. On architectures with a hardware-managed TLB, such as x86, the MMU walks the table in RAM itself and only traps into the operating system (a page fault) when it finds no valid mapping. Either way, the user process does not need to request an address translation from the operating system every time it reads or writes memory. Instead, the hardware does the memory address translation under the hood.

Let’s see how this might work in practice on a machine with a software-managed TLB. Let’s say a user process accesses virtual address 0x00 for the first time. The CPU first looks for a translation in the TLB. Because this is the first time the program is accessing 0x00, it doesn’t find any entry for 0x00 in the TLB and it jumps to execute a handler in the operating system. The operating system then checks whether the process can still allocate memory (that is, it is not at its memory limit). If it can, the operating system finds a physical address that is not in use by any process. To do this, the operating system needs to keep track of which physical addresses are available. Once the operating system finds an available physical address, let’s say 0x10, it records the mapping and fills the TLB with the virtual-to-physical mapping 0x00 -> 0x10. Then it resumes the instruction that missed. The next time the CPU wants to read the data at virtual address 0x00, it looks it up in the TLB, finds that the physical address is 0x10, and is able to pull the value correctly.
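The walkthrough above can be sketched as a toy simulation. Everything here is an assumption for illustration: the `ToyOS` and `SoftwareManagedTLB` names, the single-process setup, and the choice of 0x10 and 0x11 as the free physical addresses. It models a TLB miss that traps into an operating system handler, not a hardware page-table walk.

```python
class ToyOS:
    """Hypothetical OS bookkeeping for a single process."""

    def __init__(self, free_physical_addresses, limit_per_process):
        self.free = list(free_physical_addresses)
        self.limit = limit_per_process
        self.mapping = {}  # virtual address -> physical address

    def handle_tlb_miss(self, virtual_address):
        """Miss handler: return the mapping, creating it if allowed."""
        if virtual_address in self.mapping:
            return self.mapping[virtual_address]
        if len(self.mapping) >= self.limit or not self.free:
            raise MemoryError("process is out of memory")
        physical_address = self.free.pop(0)
        self.mapping[virtual_address] = physical_address
        return physical_address


class SoftwareManagedTLB:
    """Toy TLB that calls into the OS handler on a miss."""

    def __init__(self, os_miss_handler):
        self.entries = {}  # cached virtual -> physical translations
        self.os_miss_handler = os_miss_handler
        self.misses = 0

    def translate(self, virtual_address):
        if virtual_address not in self.entries:
            self.misses += 1  # "trap" into the OS, then retry the lookup
            self.entries[virtual_address] = self.os_miss_handler(virtual_address)
        return self.entries[virtual_address]


toy_os = ToyOS(free_physical_addresses=[0x10, 0x11], limit_per_process=2)
tlb = SoftwareManagedTLB(toy_os.handle_tlb_miss)

first = tlb.translate(0x00)   # miss: the OS picks 0x10 and fills the TLB
second = tlb.translate(0x00)  # hit: served from the TLB, no OS involvement
print(hex(first), hex(second), tlb.misses)
```

Only the first access pays the cost of going through the operating system; the second one is answered by the TLB alone.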

To make all this possible, the operating system needs a data structure that maps each virtual memory address to its physical memory address. Wait a minute... this means that for every memory address, we need to store another memory address that tells us which physical address the virtual address maps to (if it maps to anything at all). Whoa, this means that we lose half of the memory available to us.

Is there a way we can use memory a little bit more efficiently so we don’t lose half of it to operating system accounting overhead? Yes, there is actually! What if, instead of keeping one entry per address, we map things in larger chunks? For example, with a chunk size of 256 addresses, we would have one entry covering addresses 0 - 255, another entry covering addresses 256 - 511, and so on. Each entry records which physical chunk its virtual chunk maps to, and the same granularity works for tracking which physical chunks are free. What is the space cost of this scheme? Instead of reserving half our memory for the virtual-to-physical mapping, we need only 1/256 as many entries. This is a huge win, and the chunk size parameter can be tuned! I call this a chunk, but it is more commonly referred to as a page. The table of entries is called a page table, and the technique is referred to as paging. Let’s see an example of how all this works.
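A quick back-of-the-envelope calculation shows the savings. The 16-bit address space below is a hypothetical size picked to keep the numbers small, and `mapping_entries` is a helper made up for this sketch.

```python
def mapping_entries(address_count, addresses_per_entry):
    """Number of table entries needed to cover `address_count` addresses."""
    # Ceiling division, so a partially filled last chunk still gets an entry.
    return -(-address_count // addresses_per_entry)


ADDRESS_COUNT = 2**16  # hypothetical 16-bit address space

per_address = mapping_entries(ADDRESS_COUNT, 1)   # one entry per address
per_page = mapping_entries(ADDRESS_COUNT, 256)    # one entry per 256-address chunk

print(per_address, per_page, per_address // per_page)
```

Doubling the chunk size halves the number of entries again, which is why the chunk size is a tunable trade-off between table size and how much memory is handed out at once.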

Let’s say that our program wants to access memory address 0x0110 (address 272 in decimal). If our page size is 256, then the hardware will use the last two hex digits (the low 8 bits) as an offset into the page, and the remaining bits as the virtual page number used to find the virtual-to-physical page mapping.

The hardware will first identify the offset, which is 0x10 (16 in decimal). Next, it will identify the remaining bits, which are 0x01 in hex. This is the virtual page number, and it corresponds to the page table entry at index 1.

The hardware then takes the physical page frame number stored in that entry and appends the offset to it to generate the physical memory address where the data the CPU is requesting lives.
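Here is the same example as a small sketch. With 256 addresses per page, the offset is the low 8 bits (two hex digits) of the address. The page table contents (virtual page 1 mapped to physical frame 0x03) are made up for illustration, as are the function names.

```python
PAGE_SIZE = 256
OFFSET_BITS = PAGE_SIZE.bit_length() - 1  # 8 bits for a 256-address page


def split(virtual_address):
    """Split a virtual address into (virtual page number, offset)."""
    return virtual_address >> OFFSET_BITS, virtual_address & (PAGE_SIZE - 1)


def translate(virtual_address, page_table):
    """Look up the frame for the page and re-attach the offset."""
    page_number, offset = split(virtual_address)
    frame_number = page_table[page_number]
    return (frame_number << OFFSET_BITS) | offset


# Hypothetical page table: virtual page number -> physical frame number.
page_table = {0: 0x07, 1: 0x03}

page_number, offset = split(0x0110)
physical_address = translate(0x0110, page_table)
print(page_number, hex(offset), hex(physical_address))
```

The offset passes through translation untouched; only the page number is swapped for a frame number.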

Great, we now have an efficient way of giving each process the illusion that it has a contiguous address space and exclusive access to physical memory when it really doesn’t! The next thing we need to worry about is memory isolation between processes.

Memory Isolation

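What’s stopping a malicious process (or a buggy process) from accessing an area of memory that wasn’t allocated to it? Easy! We can reuse the address translation mechanism we built for creating a contiguous address space. In the TLB, we can tag each entry with an identifier for the process that owns it (often called an address space identifier, or ASID). An entry whose tag doesn’t match the active process is simply not used for that process. If the active process has no valid mapping of its own for the address, the CPU traps into the operating system, which typically delivers a segmentation fault (the SIGSEGV signal on Unix-like systems) to the offending process.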
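A minimal sketch of this idea, assuming each process has its own page table and each cached translation is tagged with a process ID. The `TaggedTLB` and `SegmentationFault` names, the process IDs, and the frame numbers are hypothetical.

```python
PAGE_SIZE = 256


class SegmentationFault(Exception):
    """Raised when a process touches an address it has no mapping for."""


class TaggedTLB:
    """Toy TLB whose entries are tagged with the owning process ID."""

    def __init__(self, page_tables):
        self.page_tables = page_tables  # {pid: {page number: frame number}}
        self.entries = {}               # {(pid, page number): frame number}

    def translate(self, pid, virtual_address):
        page_number, offset = divmod(virtual_address, PAGE_SIZE)
        key = (pid, page_number)
        if key not in self.entries:
            # Miss: only the active process's own page table is consulted.
            frame_number = self.page_tables[pid].get(page_number)
            if frame_number is None:
                raise SegmentationFault(
                    f"pid {pid} has no mapping for {virtual_address:#06x}"
                )
            self.entries[key] = frame_number
        return self.entries[key] * PAGE_SIZE + offset


# Both processes use virtual page 0, but it maps to different frames.
tlb = TaggedTLB(page_tables={1: {0: 5, 1: 6}, 2: {0: 9}})

address_for_p1 = tlb.translate(1, 0x0010)
address_for_p2 = tlb.translate(2, 0x0010)

try:
    tlb.translate(2, 0x0110)  # process 2 never mapped virtual page 1
    faulted = False
except SegmentationFault:
    faulted = True

print(hex(address_for_p1), hex(address_for_p2), faulted)
```

The same virtual address lands in different physical frames for each process, and process 2 cannot reach process 1’s second page because no translation for it exists under its own ID.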

Demand Paging

So...are we done? Is that what all modern operating systems do? Nope, one more question! Our computers are not limited to a fixed number of processes, so how do they manage that while still providing memory isolation between processes? The answer is demand paging. Demand paging dates back to the multiprogramming era of operating systems and was pioneered on the Atlas computer. The trick is: we can store the pages of a process that aren’t currently needed on disk. When the process accesses one of those pages again, the operating system swaps it from disk back into memory. We’re effectively swapping pages of memory out to disk when they are not needed and bringing them back when they are. We are now able to run many processes on the operating system without worrying about a fixed number of allotted chunks.
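Here is a toy model of that swapping. The `DemandPager` class is hypothetical, the "disk" is just a dictionary, and evicting the least recently used page is my assumption for the sketch; real operating systems use a variety of replacement policies.

```python
from collections import OrderedDict

PAGE_SIZE = 256


class DemandPager:
    """Toy pager with a fixed number of physical frames and a fake disk."""

    def __init__(self, frame_count):
        self.frame_count = frame_count
        self.resident = OrderedDict()  # {(pid, page number): data}, oldest first
        self.disk = {}                 # pages that were swapped out
        self.page_faults = 0

    def access(self, pid, page_number):
        """Return the page's data, swapping it in from disk if needed."""
        key = (pid, page_number)
        if key in self.resident:
            self.resident.move_to_end(key)  # mark as most recently used
            return self.resident[key]
        self.page_faults += 1
        if len(self.resident) >= self.frame_count:
            # No free frame: write the least recently used page out to disk.
            victim, data = self.resident.popitem(last=False)
            self.disk[victim] = data
        # Bring the page back from disk, or start with a zeroed page.
        self.resident[key] = self.disk.pop(key, bytearray(PAGE_SIZE))
        return self.resident[key]


pager = DemandPager(frame_count=2)
pager.access(1, 0)[0] = 42   # process 1 writes to its page 0
pager.access(2, 0)           # process 2 takes the second frame
pager.access(3, 0)           # no frame left: process 1's page goes to disk
swapped_out = (1, 0) in pager.disk
value = pager.access(1, 0)[0]  # page fault: the page is read back from disk
print(swapped_out, value, pager.page_faults)
```

Three processes share two frames here, and process 1 still sees the value it wrote, which is the whole point: the number of processes is no longer tied to the number of chunks of physical memory.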

Conclusion

These are the general principles behind how physical memory is virtualized by the operating system. If there is something you are curious to learn more about, feel free to reach out to me on Twitter or via email.

Top comments (3)

"Memory 8 gigabytes large with 64-bit slots is numbered 0 to 8,000,000,000 each at intervals of 8 bytes (64-bits)." -- this is confusing me. If you're numbering those slots from 0 to 8B, you're numbering individual bytes, and not slots. And that's how it works, like, 0x001 and 0x002 addresses point to adjacent bytes, not to adjacent 8 bytes pieces. Also, it's not 8B but 2^33.

"One way to make it faster is by having this translation table live in hardware" -- but the virtual table doesn't live in hardware... It lives in the RAM, and the part of context switching process is to set CPUs register CR3 (amd64). The part that looks up in that table, on the other hand, lives in the hardware, and is called MMU (memory management unit).

"A common implementation of this is to have a translation lookaside buffer (TLB) in hardware." -- you're putting the cart before the horse here. TLB is merely a cache in the system. The indirection is handled by MMU.

"The TLB will map virtual addresses to physical addresses and is updated by the operating system." -- this part is completely wrong. TLB cannot be updated by the operating system, as there's no interface to mangle TLB from outside of the CPU. Operating system mangles the page table for a given process, nothing else. If, for example, an address is mapped in PT but is never used by the program, it will never end up in TLB.

"When the hardware looks up a virtual address in the TLB, if the translation doesn’t exist, the hardware executes a handler in the operating system." -- again, absence of something in TLB is merely a cache miss. MMU then examines the actual PT in RAM, and the process of raising a page fault goes from there.

It'd be also good to use the common terminology for this stuff. "Page table" is used only once, "page table entry" is used... never, as "page fault", as "MMU" etc.

Went to check my old comments, sad to see that the article still contains plain wrong information, 3 years after I pointed it out.

Good one, gave some clarity.