The more one delves into the world of failure, the more obvious it becomes that many different parts of a distributed system can fail. This is especially true as a system becomes more intricate and more nodes communicate with one another, but it is also the case for even small systems!

As we learned in part one of this two-part series on the different modes of failure, the ways that our system can fail vary in intensity. A performance failure is not as bad as an omission failure, and an omission failure isn’t as terrible as a crash failure. And, of course, if we know the ways that our system can fail, we can try to plan for those eventualities. However, there are some modes of failure that are more complex in nature; ultimately, they are just realities of any distributed system — particularly very large ones.

When it comes to handling complex failures, we must thoughtfully consider how we can plan for them. So far, the failures that we’ve looked at have been fairly straightforward, but now, that’s going to change! Let’s dive into some more tricky failures and try to identify what makes them hard to contend with.

Replying all wrong

When it comes to performance, omission, and crash failures (all three of which we have already learned about), there is one common thread: when one node asks for some reply from another node, the response doesn’t arrive in an appropriate amount of time, and sometimes it doesn’t show up at all! But even if the response shows up late, its value is still correct.

In other words, with all three of these failures, we can be sure that if a response from a node does arrive, the content of it will be correct.

However, as we might be able to imagine, it could be possible for a node to deliver a response that has incorrect content. So what then? Well, in this situation, we’re actually dealing with a different kind of failure entirely; this is called a response failure. A response failure is one where a node successfully delivers a response, but the value of that response is wrong or incorrect.

For some of us, a response failure might very well have been the first real encounter we had with failure in a distributed system. For example, web developers have definitely seen many response failures in their day. If a web developer wrote some buggy code on the backend, and their web server responded with an unexpected (read: incorrect) value to the frontend, they’d see an unexpected value on the browser/client side of their code. What’s actually happening, of course, is that there is a bug in the code of the software (the fault), which produces an unexpected result (the error), which manifests itself in the response from the server as an incorrect response (the failure).

It’s important to note that response failures themselves come in two different forms. When a node’s response is wrong in terms of its actual value, we call that a value failure.

However, if the state of the node is incorrect when it responds, we call that a state transition failure. In this kind of response failure, we should be able to see that the node has entered into an incorrect state, which usually indicates that the node somehow transitioned into an unexpected state, and something is probably wrong with the logic or flow control of that node’s internals.
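To make the distinction between the two forms a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the three states, the `ALLOWED_TRANSITIONS` table, and the `classify_response` helper are all hypothetical, and a real caller usually would not know the expected value in advance.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    PROCESSING = "processing"
    DONE = "done"


# Hypothetical transition table: the only moves this toy node may make.
ALLOWED_TRANSITIONS = {
    State.IDLE: {State.PROCESSING},
    State.PROCESSING: {State.DONE},
    State.DONE: {State.IDLE},
}


def classify_response(expected_value, actual_value, previous_state, reported_state):
    """Label a response as 'ok', 'value failure', or 'state transition failure'."""
    # The node claims to be in a state it could not legally have reached.
    if reported_state not in ALLOWED_TRANSITIONS[previous_state]:
        return "state transition failure"
    # The state is plausible, but the content of the reply is wrong.
    if actual_value != expected_value:
        return "value failure"
    return "ok"
```

For example, `classify_response(4, 5, State.IDLE, State.PROCESSING)` is labeled a value failure, while a node that jumps straight from `State.IDLE` to `State.DONE` is labeled a state transition failure, even if the value it returns happens to be right.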

The other nodes in a distributed system perceive both value failures and state transition failures in a similar fashion: when they receive a response from the node that they are trying to communicate with, they’ll notice that the response is wrong in some way. Ideally, when we’re crafting a distributed system, we would build it in such a way that we can account for potential failures; the best way to do this is by implementing error handling, which gives our system a way to catch any “incorrect” responses that we might receive from a node that fails in this way.
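One way this kind of error handling might look is sketched below in Python. The temperature-sensor node, the `celsius` field, the plausible range, and the `ResponseFailure` exception are all hypothetical names invented for this example; the point is only that the receiving side checks a reply before trusting it.

```python
class ResponseFailure(Exception):
    """Raised when a node replies, but the reply's content is unusable."""


def parse_temperature_reply(reply: dict) -> float:
    """Validate a reply from a hypothetical temperature-sensor node."""
    if "celsius" not in reply:
        raise ResponseFailure("reply is missing the 'celsius' field")
    value = reply["celsius"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise ResponseFailure(f"'celsius' is not a number: {value!r}")
    if not -90.0 <= value <= 60.0:
        raise ResponseFailure(f"'celsius' is out of the plausible range: {value}")
    return float(value)


def read_temperature(reply: dict, fallback: float) -> float:
    """Use the reply if it passes validation; otherwise use the fallback."""
    try:
        return parse_temperature_reply(reply)
    except ResponseFailure:
        return fallback
```

Note that checks like these can only catch replies that are obviously wrong. A reply that is well-formed and plausible, but still incorrect, would pass straight through.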

Overall, response failures are not great to deal with, but we can often try to handle the errors that come from an “unexpected” response from a node. Furthermore, as many of us might even know from experience, dealing with an “incorrect” response means having to debug the node where that error came from. However, even though response failures are annoying, they are still not the worst of the lot.

Random, unpredictable failures

Whether a node crashes, doesn’t respond, is super slow, or returns an incorrect response, one thing is for sure — it’s very nice when it fails consistently.

Debugging failures and trying to understand them is a whole lot easier when things fail consistently, and in the same way.

But there are some situations where we can’t even rely on our failures being consistent, which makes handling them orders of magnitude more difficult. These kinds of failures are known as arbitrary failures.

Arbitrary failures are ones that occur when a node gives different responses to different parts of the system that communicate with it. In this particular kind of failure, a node could respond one way when one part of the system talks to it and might respond a completely different way when another part of the system attempts to communicate with it; in other words, a node can respond with arbitrary messages at completely arbitrary times.

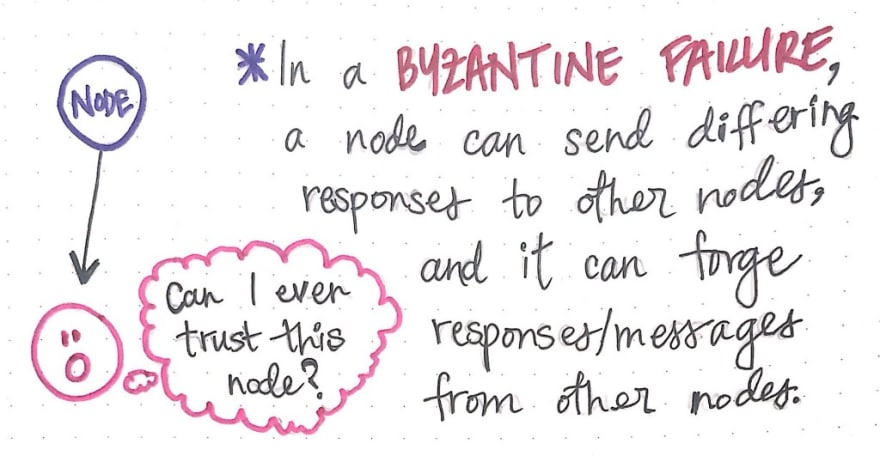

Arbitrary failures are also known as Byzantine failures, which, as we might be able to guess, derive from Byzantine faults. Since we’ve run into Byzantine faults before, we’ll recall that a node with a Byzantine fault is one that responds with different (erroneous) content each time, and is inconsistent in what kind of response it delivers to different nodes in the system that are talking to it. Similarly, a Byzantine failure is the manifestation of a Byzantine fault.

Byzantine failures are probably the hardest kinds of failures to deal with in distributed computing. In fact, Byzantine fault tolerance is a topic that is still being heavily studied and researched, simply because there isn’t an obvious, straightforward answer on how to handle it. But what makes it so hard, exactly? Well, to answer that question we need to think a bit more about what the uncertainty of Byzantine failures and faults means for the rest of our distributed system.

Failures of trust

One of the reasons that Byzantine failures are so tricky to deal with is that they are so different from the other failures we’ve looked at. Unlike performance, omission, and crash failures, which are all consistent failures, a Byzantine failure is an inconsistent failure. A consistent failure is one where all the receiving “service users” (for example, other nodes or services that are communicating with some part of the distributed system) perceive a failure in the same way. All the service user nodes that communicate with a crashed node will perceive the same situation: they will all see that the node they’re trying to talk to has crashed. Thus, they perceive the crash failure in the same way.

However, a Byzantine failure is very different; it is an inconsistent failure, where some “service users” in the system will view the situation differently than others. In other words, the behavior of the failing node could be perceived differently throughout the system. As we can begin to imagine, debugging, accounting for, and handling inconsistent failures is incredibly difficult (and, as I mentioned earlier, people are still researching and studying these kinds of failures to try to combat this aspect of them).

We’ll dive more into Byzantine faults and the problems surrounding them later on in this series, but for now, the important thing to remember is that inconsistent failures are hard to deal with — not just for the people designing the distributed system, but also for the other nodes that exist within the system.

When a node behaves inconsistently, other nodes in the system might not know how to handle or deal with that inconsistency. For example, let’s say that we have a node X with a Byzantine fault. Node X might tell node A that some value (v) is true, but might tell node B that the same v is false. Now, nodes A and B might talk to one another and get confused about what the value v actually is! Obviously, both nodes A and B would be a little less trusting of node X and think that something suspicious was afoot! People who study Byzantine fault tolerance try to come up with algorithms to reconcile inconsistent responses like these.
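The moment where nodes A and B compare notes can be sketched in a few lines of Python. This is only an illustration of detecting the disagreement, not a Byzantine fault tolerant algorithm: the `find_disagreements` helper and the shape of the `reports` dictionary are assumptions made for this example, and the sketch assumes that A and B themselves report honestly what they heard.

```python
def find_disagreements(reports: dict[str, dict[str, bool]]) -> dict[str, dict[str, bool]]:
    """Compare what each receiver heard from the same sender.

    `reports` maps a receiving node's name to the {variable: value} claims
    that node X made to it. The result contains only the variables on which
    receivers heard different values, along with who heard what.
    """
    heard: dict[str, dict[str, bool]] = {}
    for receiver, claims in reports.items():
        for variable, value in claims.items():
            heard.setdefault(variable, {})[receiver] = value
    return {
        variable: by_receiver
        for variable, by_receiver in heard.items()
        if len(set(by_receiver.values())) > 1
    }


# Node X told A that v is true, but told B that v is false.
reports_about_x = {"A": {"v": True}, "B": {"v": False}}
suspicious = find_disagreements(reports_about_x)
```

Here `suspicious` contains an entry for `v`, which tells A and B that node X has been inconsistent. What it cannot tell them is which of the two values (if either) is the right one, and that is the part that makes reconciling these responses hard.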

Another added complication here is that node X also has the ability to tell other nodes in the system about the things it knows. For example, perhaps node A asks the faulty node X something about node B. Node X could tell node A something true about node B, or it could tell it something completely false! This is again a case of not being able to trust what one node says, particularly when it is saying incorrect things about other nodes in the system.

Given this last scenario, it is also possible for some distributed systems to experience yet another kind of failure: authentication detectable Byzantine failures. These failures are actually a subset of Byzantine failures themselves, with one caveat.

In an authentication detectable Byzantine failure, the arbitrary messages sent by a node cannot ever be messages that it is forging on behalf of another node. This means that, while a node can behave inconsistently in the way that it responds to other nodes, it can only do so when it comes to facts about itself, and the knowledge that the node itself has. In other words, the node cannot “lie” about facts about other nodes. At the very least, authentication detectable Byzantine failures are a little bit better to contend with than Byzantine failures, but they are by no means easy!
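To get a feel for how a forged message might be detected, here is a rough sketch using Python’s standard `hmac` module. The `NODE_KEYS` table, the key values, and the `sign` and `is_authentic` helpers are all hypothetical. The sketch also makes a simplifying assumption that only the checking node holds the key table and that node X does not know node B’s key; a real system would more likely use public-key signatures, so that a node that can verify a message cannot also forge one.

```python
import hashlib
import hmac

# Hypothetical per-node secret keys, assumed to be distributed out of band.
NODE_KEYS = {
    "B": b"secret-key-of-node-b",
    "X": b"secret-key-of-node-x",
}


def sign(sender: str, message: bytes, key: bytes) -> bytes:
    """Produce an HMAC-SHA256 tag binding the message to its claimed sender."""
    return hmac.new(key, sender.encode() + b"|" + message, hashlib.sha256).digest()


def is_authentic(sender: str, message: bytes, tag: bytes) -> bool:
    """Check that `message` was tagged with the key belonging to `sender`."""
    expected = sign(sender, message, NODE_KEYS[sender])
    return hmac.compare_digest(expected, tag)


# Node B genuinely says "v=true".
genuine_tag = sign("B", b"v=true", NODE_KEYS["B"])

# Node X pretends that B said "v=false", but can only sign with its own key.
forged_tag = sign("B", b"v=false", NODE_KEYS["X"])
```

With these values, `is_authentic("B", b"v=true", genuine_tag)` passes, while `is_authentic("B", b"v=false", forged_tag)` does not, so node A can discard the forged claim. Node X is still free to say inconsistent things in its own name, which is why these failures remain a kind of Byzantine failure.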

But even so, when designing a distributed system, it’s up to us to take all failures (even the not-so-complicated ones) into account. Identifying what different failures can pop up in a system is the very first step to figuring out how to plan for and handle them when they occur. Failures are bound to happen, and they’re mostly out of our control. How we deal with them, however, is something that we have more autonomy over.

But that’s a story for a different day!

Resources

Understanding the different ways that a system can fail is crucial if we want to be able to plan for them. If you’d like to keep learning about different modes of failure, there are lots of resources out there; the ones below are, in my opinion, some of the best ones to start with.

- Fault Tolerance in Distributed Systems, Sumit Jain

- Failure Modes in Distributed Systems, Alvaro Videla

- Distributed Systems: Fault Tolerance, Professor Jussi Kangasharju

- Understanding Fault-Tolerant Distributed Systems, Flaviu Cristian

- Failure Modes and Models, Stefan Poledna

- Fault Tolerant Systems, László Böszörményi
