In the previous post, we discussed the perceptron, the structural building block of a neural network. In this post, we will learn how perceptrons connect to form a layer of a neural network, and how these layers connect to form what is called a deep neural network.

Multi-Output Perceptron

Let us recall the structure of a perceptron that we discussed in the last post.

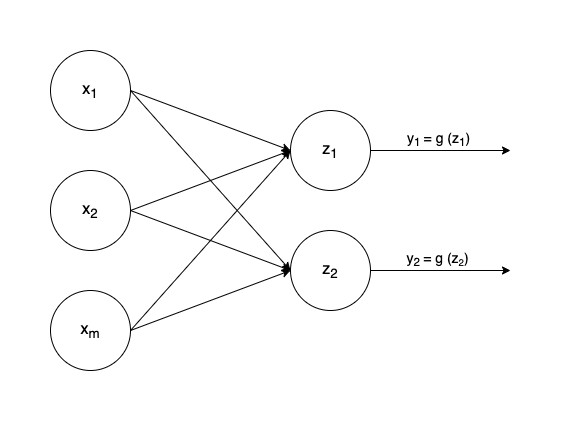

Now suppose we have two perceptrons instead of one, each connected to all the inputs. This can be represented visually as in the image below.

This is called a multi-output perceptron.

- This allows us to create as many outputs as we want, by stacking together multiple perceptrons.

- For the sake of simplicity, the bias term has been omitted here.

- Each perceptron is connected to all the inputs from the previous layer. This is known as a dense layer.
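To make the idea of stacking perceptrons concrete, here is a minimal NumPy sketch of a multi-output perceptron (a dense layer). The function name `dense_layer`, the sigmoid activation, and the example numbers are assumptions chosen for illustration; they are not taken from this post. Each column of `W` holds the weights of one perceptron, and the bias is optional, matching the simplification above.

```python
import numpy as np

def sigmoid(z):
    # Assumed activation function; any other non-linearity could be used instead.
    return 1.0 / (1.0 + np.exp(-z))

def dense_layer(x, W, b=None, activation=sigmoid):
    """Hypothetical helper: apply several perceptrons to the same inputs.

    x: inputs, shape (n_inputs,)
    W: weights, shape (n_inputs, n_outputs); column i belongs to perceptron i
    b: optional biases, shape (n_outputs,)
    """
    z = x @ W              # one weighted sum per perceptron
    if b is not None:
        z = z + b          # add the bias terms when they are provided
    return activation(z)

# Three inputs feeding two perceptrons (illustrative values).
x = np.array([1.0, 2.0, 3.0])
W = np.array([[0.1, -0.2],
              [0.0,  0.3],
              [0.2,  0.1]])

y = dense_layer(x, W)
print(y.shape)  # one output per perceptron
```

Adding a third output only requires adding a third column to `W`; the inputs stay the same.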

Single Layer Neural Network

Let's add more perceptrons to create a single layer neural network.

Here, we can see 3 types of layers:

- The first layer contains the inputs to the network and is called the Input Layer

- The last layer provides the final output of the network and is called the Output Layer

- The layers between the input and output layers are called the Hidden Layers. The number of hidden layers represents the depth of the network.

As the number of connections increases, so does the number of weights. For example, there are two sets of weights: $w_{1,1}^{(1)}$ connecting $x_1$ to $z_1$, and $w_{1,1}^{(2)}$ connecting $z_1$ to $\hat{y}_1$. The equations for the perceptrons in the hidden and output layers can be represented as:
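One common way to write these equations is sketched below. It assumes $m$ inputs, $d_1$ hidden perceptrons, an activation function $g$, and bias terms $w_{0,i}$; the symbols $m$, $d_1$, $g$, and $w_{0,i}$ are notation assumed here for illustration, not defined earlier in this post.

$$
z_i = w_{0,i}^{(1)} + \sum_{j=1}^{m} x_j \, w_{j,i}^{(1)}
$$

$$
\hat{y}_i = g\!\left( w_{0,i}^{(2)} + \sum_{j=1}^{d_1} g(z_j) \, w_{j,i}^{(2)} \right)
$$

Here $w_{j,i}^{(1)}$ connects input $x_j$ to hidden unit $z_i$, and $w_{j,i}^{(2)}$ connects hidden unit $z_j$ to output $\hat{y}_i$, which matches the indexing of the two weights in the example above.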

To make these easier to manage, and to speed up computation, the inputs, weights, and outputs are usually stored as vectors and matrices. This also lets us take advantage of multi-core CPUs to speed up training by parallelizing the computations.

To vectorize the equations, we can represent the inputs and outputs as vectors and the weights as matrices, and simply replace each summation of products with a matrix product:
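As a rough illustration, the sketch below computes the output of a network with one hidden layer twice: once with explicit summations and once with matrix products, then checks that both agree. The function names, the sigmoid activation `g`, the bias vectors `b1` and `b2`, and the layer sizes are all assumptions made for this example.

```python
import numpy as np

def sigmoid(z):
    # Assumed activation function g.
    return 1.0 / (1.0 + np.exp(-z))

def forward_loops(x, W1, b1, W2, b2, g=sigmoid):
    """Forward pass written as explicit sums of products."""
    n_in, n_hidden = W1.shape
    n_out = W2.shape[1]
    z = np.zeros(n_hidden)
    for i in range(n_hidden):
        # Weighted sum of all inputs for hidden perceptron i.
        z[i] = b1[i] + sum(x[j] * W1[j, i] for j in range(n_in))
    y_hat = np.zeros(n_out)
    for i in range(n_out):
        # Weighted sum of all activated hidden units for output perceptron i.
        y_hat[i] = g(b2[i] + sum(g(z[j]) * W2[j, i] for j in range(n_hidden)))
    return y_hat

def forward_vectorized(x, W1, b1, W2, b2, g=sigmoid):
    """Same computation with each summation replaced by a matrix product."""
    z = x @ W1 + b1
    return g(g(z) @ W2 + b2)

# Illustrative sizes: 3 inputs, 4 hidden perceptrons, 2 outputs.
rng = np.random.default_rng(0)
x = rng.normal(size=3)
W1, b1 = rng.normal(size=(3, 4)), rng.normal(size=4)
W2, b2 = rng.normal(size=(4, 2)), rng.normal(size=2)

y_loops = forward_loops(x, W1, b1, W2, b2)
y_vec = forward_vectorized(x, W1, b1, W2, b2)
print(np.allclose(y_loops, y_vec))
```

The vectorized version is shorter, and it hands the arithmetic to NumPy's optimized routines instead of Python loops, which is where the speed-up typically comes from.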

Conclusion

In this post, we learned how a neural network is constructed by connecting individual perceptrons. In the next part, we will see how the network computes its output and how it gradually becomes more accurate.

I would love to hear your views and feedback in the comments. Feel free to hit me up on Twitter.
