A neural network is a machine learning model inspired by the human brain. A neural network learns to perform a task by looking at examples, without being explicitly programmed to perform that task. These tasks range from predicting sales from historical data to detecting objects in images and translating between languages.

The Perceptron

The Perceptron is the structural building block of a neural network. It is modeled after the neurons inside the brain.

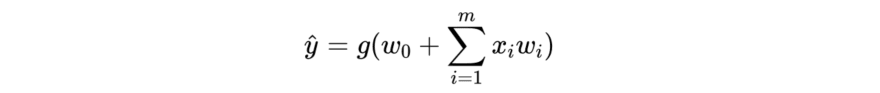

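Mathematically, the output $\hat{y}$ of a single perceptron can be written as:

$$\hat{y} = g\left(w_0 + \sum_{i=1}^{m} x_i w_i\right)$$

where $g$ denotes the activation function.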

We can explain the working of a single perceptron as follows:

- Each perceptron receives a set of inputs x1 to xm.

- Each input has an associated weight, i.e., w1 to wm for x1 to xm, respectively.

- Each input is multiplied by its respective weight, the products are summed, and the result is passed as input to an activation function.

- A bias term w0 is also added, which allows us to shift the activation function left or right, irrespective of the inputs.

- An activation function, also called a non-linearity, is a non-linear function that produces the final output of the perceptron.
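The steps above can be sketched in a few lines of Python. This is a minimal illustration, assuming a sigmoid activation (the steps themselves do not fix a particular one); the function names `sigmoid` and `perceptron` are hypothetical, chosen only for this example.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps a real number into the range (0, 1).

    Naive formula, fine for moderate z; math.exp overflows for very negative z.
    """
    return 1.0 / (1.0 + math.exp(-z))


def perceptron(x: Sequence[float], w: Sequence[float], w0: float) -> float:
    """Forward pass of a single perceptron.

    x: inputs x1..xm
    w: weights w1..wm, one per input
    w0: bias term
    """
    if len(x) != len(w):
        raise ValueError("need exactly one weight per input")
    # Multiply each input by its weight, sum the products, and add the bias.
    z = w0 + sum(xi * wi for xi, wi in zip(x, w))
    # The activation function produces the perceptron's final output.
    return sigmoid(z)


if __name__ == "__main__":
    x = [1.0, 2.0]
    w = [0.5, -0.25]
    # z = 0.0 + 1.0*0.5 + 2.0*(-0.25) = 0.0, so the output is sigmoid(0) = 0.5
    print(perceptron(x, w, w0=0.0))  # 0.5
    # Same inputs and weights, but a positive bias raises z and the output.
    print(perceptron(x, w, w0=2.0))
```

The second call shows the bias at work: the inputs and weights are unchanged, yet the output moves because `w0` shifts the point at which the sigmoid is evaluated.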

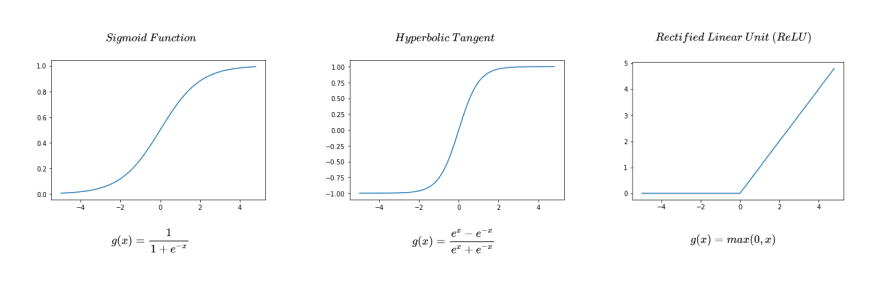

Activation Functions

A few common activation functions are the sigmoid function, the hyperbolic tangent (tanh) function, and the rectified linear unit (ReLU) function. The choice of activation function depends on the type of output expected from the perceptron. For example, the sigmoid function outputs values between 0 and 1, which makes it useful when the output should be interpreted as a probability.
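For reference, the three functions named above can be written directly in Python. This is a plain scalar sketch using only the standard `math` module; the helper names are chosen for illustration, and real frameworks ship their own vectorized versions.

```python
import math


def sigmoid(z: float) -> float:
    # Output in (0, 1); often interpreted as a probability.
    # Naive formula: math.exp overflows for very negative z.
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z: float) -> float:
    # Output in (-1, 1); an S-shaped curve centred on zero.
    return math.tanh(z)


def relu(z: float) -> float:
    # Output in [0, inf): positive inputs pass through, negatives become 0.
    return max(0.0, z)


if __name__ == "__main__":
    for z in (-2.0, 0.0, 2.0):
        print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```

Comparing the printed rows makes the different output ranges visible: sigmoid stays between 0 and 1, tanh between -1 and 1, and ReLU is zero for negative inputs and unbounded above.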

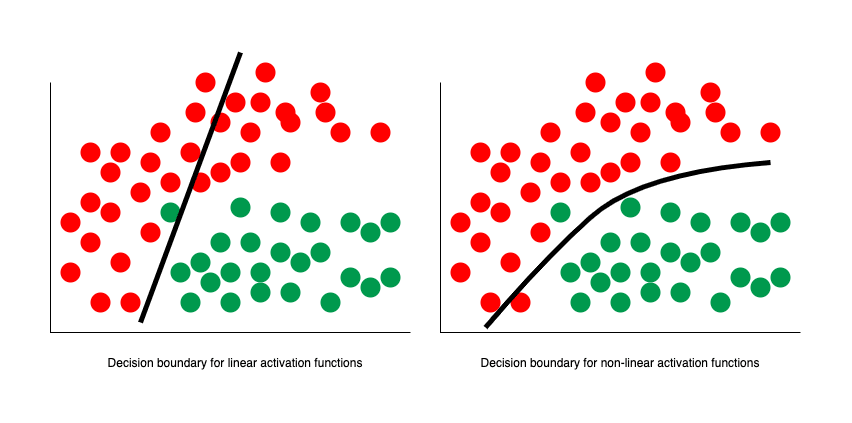

Why Do We Need an Activation Function?

Activation functions introduce non-linearity into the network. Most relationships in real-world data are non-linear. Without an activation function, a perceptron's output is only a linear function of its inputs, and stacking such perceptrons still produces a linear function. Non-linearities, on the other hand, allow the network to approximate arbitrarily complex functions.
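To make the "always linear" point concrete, here is a small sketch with single-input layers. The weights (3.0, 1.0, -2.0, 0.5) are arbitrary values picked for illustration, and the helper names are hypothetical. Two stacked layers without an activation collapse into one linear function, while inserting a ReLU between them does not.

```python
def linear(x: float, w: float, b: float) -> float:
    """A layer with no activation function: just w*x + b."""
    return w * x + b


def two_linear_layers(x: float) -> float:
    # Two stacked layers with example weights and no activation in between.
    h = linear(x, w=3.0, b=1.0)
    return linear(h, w=-2.0, b=0.5)


def collapsed(x: float) -> float:
    # Algebraically: -2*(3x + 1) + 0.5 = -6x - 1.5, a single linear layer.
    return linear(x, w=-6.0, b=-1.5)


def two_layers_with_relu(x: float) -> float:
    # Same weights, but a ReLU between the layers breaks the linearity.
    h = max(0.0, linear(x, w=3.0, b=1.0))
    return linear(h, w=-2.0, b=0.5)


if __name__ == "__main__":
    for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
        print(x, two_linear_layers(x), collapsed(x), two_layers_with_relu(x))
```

For a linear function, the value at the midpoint of two inputs equals the average of the values at those inputs. `two_linear_layers` passes that check (f(0) = -1.5 and (f(-2) + f(2)) / 2 = (10.5 - 13.5) / 2 = -1.5), but `two_layers_with_relu` fails it (f(0) = -1.5 while (0.5 - 13.5) / 2 = -6.5), so no single linear layer can reproduce it.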

Conclusion

In this post, we learned how a single perceptron works. In the next part, we will see how these perceptrons are connected together to form a neural network and how the network learns to do a task that it was not explicitly programmed to do.

I would love to hear your views and feedback. Feel free to hit me up on Twitter.
