Have you ever wondered what your most influential tweet is since you created your Twitter profile? Or how your tweets performed in the last 30 days? With the open-source tool twint (MIT-licensed), you can scrape all of your tweets (or someone else's 😬) and analyze them, without using Twitter's API. I'll show you how to scrape them within minutes.

What you need

- the Twitter username you want to analyze (e.g. mine, @natterstefan)

- Terminal (I prefer iTerm2 on macOS)

- Python 3.6

- the other dependencies listed on twint's GitHub page.

- Optional: Docker (it also works without Docker)

Installation

As you'll see later, I run twint within Docker. If you'd like to install it directly on your system instead, these are your options:

Git:

git clone https://github.com/twintproject/twint.git

cd twint

pip3 install . -r requirements.txt

Pip:

pip3 install twint

or

pip3 install --user --upgrade git+https://github.com/twintproject/twint.git@origin/master#egg=twint

Pipenv:

pipenv install git+https://github.com/twintproject/twint.git#egg=twint

Usage

Once you have installed twint, you can start scraping your tweets and save the result to a .csv file with the following command:

twint -u username -o file.csv --csv

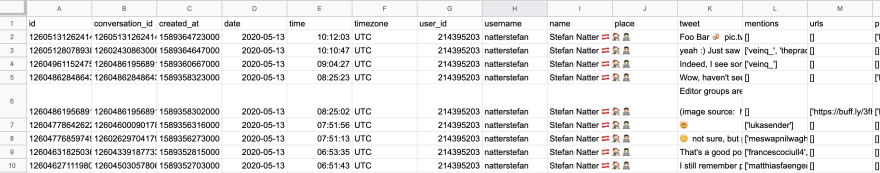

The result will look like this:

You can do even more with twint!

# Display Tweets by verified users that Tweeted about Trevor Noah.

twint -s "Trevor Noah" --verified

# Scrape Tweets from a radius of 1 km around the Hofburg in Vienna and export them to a CSV file.

twint -g="48.2045507,16.3577661,1km" -o file.csv --csv
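The -g value packs latitude, longitude and radius into a single string. You might want to generate it for other places, or check locally whether a scraped coordinate really lies inside the circle. Here is a minimal Python sketch for both. The helper names (geo_filter, haversine_km, within_radius) are my own and not part of twint. The distance check uses the haversine formula on a spherical Earth, which may not match exactly how Twitter evaluates the radius.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; spherical approximation


def geo_filter(lat: float, lon: float, radius_km: float) -> str:
    """Build a twint -g value in the "lat,lon,<radius>km" form used above (hypothetical helper)."""
    if not -90 <= lat <= 90 or not -180 <= lon <= 180:
        raise ValueError("latitude/longitude out of range")
    # :g drops a trailing ".0", so 1 becomes "1km" and 1.5 becomes "1.5km"
    return f"{lat},{lon},{radius_km:g}km"


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres (haversine formula)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def within_radius(center, point, radius_km: float) -> bool:
    """True if `point` (lat, lon) lies within `radius_km` of `center` (lat, lon)."""
    return haversine_km(*center, *point) <= radius_km
```

For example, geo_filter(48.2045507, 16.3577661, 1) produces exactly the value used in the command above.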

# Collect Tweets published since 2019-10-11 21:30:15.

twint -u username --since "2019-10-11 21:30:15"
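--since takes a timestamp in the YYYY-MM-DD HH:MM:SS form shown above. To answer the "last 30 days" question from the intro, you can compute that timestamp instead of typing it. Below is a small sketch. since_days_ago is a hypothetical helper. I'm assuming twint interprets the value in the same timezone as your machine's clock, which I haven't verified.

```python
from datetime import datetime, timedelta
from typing import Optional

# Same layout as "2019-10-11 21:30:15" in the command above
SINCE_FORMAT = "%Y-%m-%d %H:%M:%S"


def since_days_ago(days: int, now: Optional[datetime] = None) -> str:
    """Return a --since value pointing `days` days before `now` (hypothetical helper)."""
    now = now or datetime.now()  # local wall-clock time; timezone handling is an assumption
    return (now - timedelta(days=days)).strftime(SINCE_FORMAT)


if __name__ == "__main__":
    print(since_days_ago(30))
```

Pass the printed value to twint, e.g. twint -u username --since "2019-10-11 21:30:15".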

# Resume a search starting from the last saved tweet in the provided file

twint -u username --resume file.csv
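If you run these commands regularly, it can help to assemble them in a script. The sketch below builds an argument list from the flags shown in this post and nothing else. twint_args and to_shell are hypothetical helpers. The flag order is my own choice, and I haven't checked whether twint cares about it. Passing a list to subprocess.run avoids shell-quoting problems with searches such as "Trevor Noah".

```python
import shlex
from typing import List, Optional


def twint_args(username: Optional[str] = None, search: Optional[str] = None,
               verified: bool = False, geo: Optional[str] = None,
               since: Optional[str] = None, resume: Optional[str] = None,
               output: Optional[str] = None, as_csv: bool = False) -> List[str]:
    """Assemble a twint command from the flags used in this post (hypothetical helper)."""
    args = ["twint"]
    if username:
        args += ["-u", username]
    if search:
        args += ["-s", search]
    if verified:
        args.append("--verified")
    if geo:
        args.append(f"-g={geo}")  # same "-g=lat,lon,radius" spelling as above
    if since:
        args += ["--since", since]
    if resume:
        args += ["--resume", resume]
    if output:
        args += ["-o", output]
    if as_csv:
        args.append("--csv")
    return args


def to_shell(args: List[str]) -> str:
    """Render the list as a copy-pasteable shell line (shlex.join needs Python 3.8+)."""
    return " ".join(shlex.quote(a) for a in args)
```

With twint installed, subprocess.run(twint_args(username="natterstefan", output="file.csv", as_csv=True), check=True) runs the first command from the Usage section.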

Take a look at the list of all commands on GitHub for more inspiration.

You can then start analyzing your tweets by sorting them by likes, retweets, or any other KPI you are focusing on. It is up to you what you do with the data.
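As a starting point for that analysis, here is a minimal Python sketch that loads the CSV and ranks tweets by a KPI. It assumes the file has columns named likes_count, retweets_count, tweet and link. That is an assumption on my part, so check the header of your own file.csv and adjust the names if they differ. load_tweets and top_tweets are hypothetical helpers, not part of twint.

```python
import csv
from typing import Dict, Iterable, List


def load_tweets(path: str) -> List[Dict[str, str]]:
    """Read the twint CSV into a list of dicts keyed by the header row."""
    with open(path, newline="", encoding="utf-8") as f:
        return list(csv.DictReader(f))


def top_tweets(rows: Iterable[Dict[str, str]], kpi: str = "likes_count",
               n: int = 5) -> List[Dict[str, str]]:
    """Return the n rows with the highest integer value in the `kpi` column.

    Column names are assumptions; empty or missing values count as 0.
    """
    return sorted(rows, key=lambda r: int(r.get(kpi) or 0), reverse=True)[:n]
```

For example, printing row["retweets_count"] and row["link"] for each row in top_tweets(load_tweets("file.csv"), kpi="retweets_count") lists your five most retweeted tweets.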

Use twint and twint-search with Docker

twint also provides a nice UI called twint-search for searching your tweets (e.g. by hashtag). In the next step, I'll show you how to scrape tweets with Docker, save them into Elasticsearch, and explore the results with twint-search.

First of all, you need to clone the twint-docker repository:

git clone https://github.com/twintproject/twint-docker

cd twint-docker/dockerfiles/latest

Then pull the image and spin up the Docker containers:

docker pull x0rzkov/twint:latest

docker-compose up -d twint-search elasticsearch

Once everything has started, you can run the "scrape a user's tweets and save them to a .csv file" command like this:

docker-compose run -v $PWD/twint:/opt/app/data twint -u natterstefan -o file.csv --csv

Let's take a closer look at what's going on here. docker-compose run starts the x0rzkov/twint Docker image (the twint service) with one mounted volume, -v $PWD/twint:/opt/app/data. Inside this container, we execute twint -u natterstefan -o file.csv --csv.

The result is saved in the mounted directory $PWD/twint, i.e. the twint subfolder of your current working directory, which the container sees as /opt/app/data.

How long the command takes depends on how many tweets the selected account has. Once it has finished, you should see the result with ls -lha ./twint/file.csv.
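Besides ls, a quick way to check the result is to count the rows and look at the date range. That also tells you how far back the scrape reached. The sketch assumes the CSV has a date column whose values start with an ISO date (YYYY-MM-DD), so plain string comparison orders them chronologically. summarize is a hypothetical helper.

```python
import csv
from typing import Iterable, Optional, Tuple


def summarize(lines: Iterable[str], date_column: str = "date") -> Tuple[int, Optional[str], Optional[str]]:
    """Count CSV rows and return (count, earliest, latest) values of `date_column`.

    Assumes ISO-formatted dates, which sort correctly as plain strings.
    """
    count, first, last = 0, None, None
    for row in csv.DictReader(lines):
        count += 1
        value = row.get(date_column)
        if value:
            if first is None or value < first:
                first = value
            if last is None or value > last:
                last = value
    return count, first, last
```

Usage: open twint/file.csv with newline="" and encoding="utf-8", pass the file object to summarize, and print the returned tuple.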

Now you can execute any supported twint command with docker-compose run -v $PWD/twint:/opt/app/data twint.
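Since that prefix never changes, you can wrap it once. Below is a hedged sketch. dockerized is a hypothetical helper that rebuilds the docker-compose run call from this post around any list of twint arguments. It uses the current directory the same way $PWD does, so run it from twint-docker/dockerfiles/latest, where docker-compose.yml lives.

```python
import os
from typing import List, Optional, Sequence

# Mount target used in the docker-compose run commands above
CONTAINER_DATA_DIR = "/opt/app/data"


def dockerized(twint_args: Sequence[str], cwd: Optional[str] = None) -> List[str]:
    """Prefix twint arguments with `docker-compose run -v <cwd>/twint:/opt/app/data twint`."""
    host_dir = os.path.join(cwd or os.getcwd(), "twint")  # equivalent of $PWD/twint
    return ["docker-compose", "run", "-v", f"{host_dir}:{CONTAINER_DATA_DIR}", "twint", *twint_args]
```

For example, subprocess.run(dockerized(["-u", "natterstefan", "-o", "file.csv", "--csv"]), check=True) reproduces the command from the previous section.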

Explore tweets with twint-search

In the previous example, we saved the results into a .csv file. But it is also possible to store the results in Elasticsearch.

First, open docker-compose.yml in your favorite editor (mine is VS Code, by the way) and fix the existing CORS issue until my pull request is merged: add the two lines marked with + to the elasticsearch service.

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:${ELASTIC_VERSION}
    container_name: twint-elastic
    environment:
      - node.name=elasticsearch
      - cluster.initial_master_nodes=elasticsearch
      - cluster.name=docker-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=${ELASTIC_JAVA_OPTS}"
+     - http.cors.enabled=true
+     - http.cors.allow-origin=*

Now you are ready to start the apps.

# start twint-search and elasticsearch

docker-compose up -d twint-search elasticsearch

Then scrape the tweets and save the results into Elasticsearch:

docker-compose run -v $PWD/twint:/opt/app/data twint -u natterstefan -es twint-elastic:9200
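Note that twint-elastic:9200 uses the container_name from docker-compose.yml, so that address only resolves inside the Compose network. To check from your own machine that tweets actually arrived, you can query Elasticsearch's _count endpoint. This sketch makes two assumptions. First, that port 9200 is published to localhost. Second, that twint writes tweets to an index named twinttweets, which is its default as far as I know. If the index name doesn't match, list the indices with http://localhost:9200/_cat/indices.

```python
import json
import urllib.request

ES_URL = "http://localhost:9200"  # assumption: port 9200 is published to the host
INDEX = "twinttweets"             # assumption: twint's default tweet index name


def parse_count(body: bytes) -> int:
    """Extract the document count from an Elasticsearch _count response body."""
    return int(json.loads(body.decode("utf-8"))["count"])


def count_indexed_tweets(es_url: str = ES_URL, index: str = INDEX) -> int:
    """Ask Elasticsearch how many documents the tweet index holds."""
    with urllib.request.urlopen(f"{es_url}/{index}/_count", timeout=10) as resp:
        return parse_count(resp.read())


if __name__ == "__main__":
    print(count_indexed_tweets())
```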

Finally, open http://localhost:3000 and voilà, you should see something similar to my example.

Play with the data you scraped as you like. You can even add more data into Elasticsearch and explore more tweets. It is that easy.

Have fun.

Special thanks go to Cyris (@sudo_overflow). He shared the tool with me.

>> Let's connect on Twitter 🐦: https://twitter.com/natterstefan <<
