In Dapper .NET, multiple execution is a convenient (or maybe not? Make sure you read the article until the end!) way to execute the same query multiple times with different parameter values.

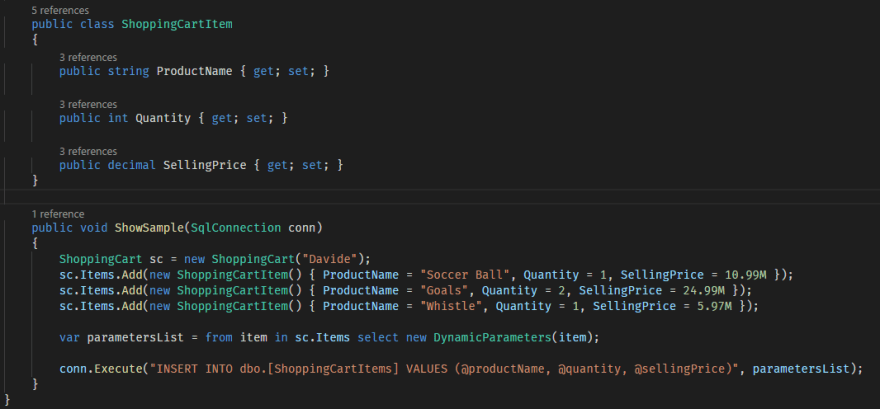

Let’s say, for example, that you have a shopping cart full of items and you want to save it to your database. The following code snippet shows how you can do it using the multiple execution feature:

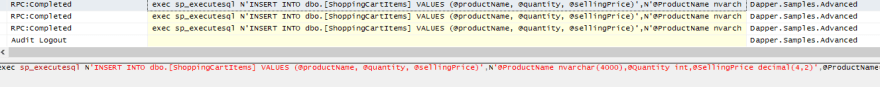
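Since the snippet above is an image, here is a minimal text sketch of the same idea. The `dbo.CartItems` table, its columns, the connection string, and the use of the `Microsoft.Data.SqlClient` package are assumptions made for illustration; they are not taken from the original sample.

```csharp
using System;
using Dapper;
using Microsoft.Data.SqlClient;

// Hypothetical connection string and table; adjust to your environment.
const string connectionString =
    "Server=.;Database=Shop;Integrated Security=true;TrustServerCertificate=true";

// The shopping cart: an array of anonymous objects, one per row to insert.
var cartItems = new[]
{
    new { CartId = 1, ProductId = 10, Quantity = 2 },
    new { CartId = 1, ProductId = 11, Quantity = 1 },
    new { CartId = 1, ProductId = 12, Quantity = 5 }
};

using var connection = new SqlConnection(connectionString);

// Passing a collection instead of a single parameter object triggers
// multiple execution: Dapper runs the statement once per element.
int affectedRows = connection.Execute(
    "INSERT INTO dbo.CartItems (CartId, ProductId, Quantity) " +
    "VALUES (@CartId, @ProductId, @Quantity)",
    cartItems);

Console.WriteLine($"Rows inserted: {affectedRows}");
```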

As you can see, you just have to pass an IEnumerable of parameters, built either with DynamicParameters or as an array of anonymous objects, and you’re done. This is what gets executed on the database server (here I’m using the SQL Profiler to see what gets executed on SQL Server):

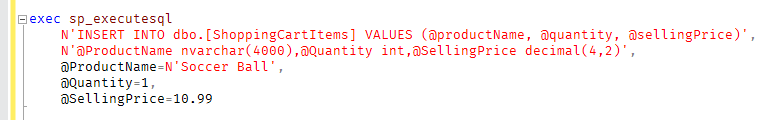

Each statement looks like the following:

While this may seem pretty cool, since it saves you from manually sending an array of values to the database one by one, in reality it is not. If your database supports smarter ways of passing sets of values, you should use one of those instead of this feature.

Samples of Multiple Execution usage can be found here:

Avoid using it, if you can

With SQL Server/Azure SQL you have better options for sending an array of values to the database. Using Table-Valued Parameters or JSON, or even XML if you are a good old-fashioned lover boy, is the way to go if you have a collection of up to a thousand values (as a general rule) that need to be sent to the database. If you have more, you should go for the BULK INSERT command instead.

What multiple execution does behind the scenes, in fact, is just iterate over the provided values. Yes, just a simple loop. It could not be otherwise, since it has to work with any supported database connection.
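To make the "simple loop" concrete, the following sketch shows roughly what multiple execution amounts to. `ExecuteOneByOne` is a hypothetical helper written for this article; it is not Dapper's actual source code, only an approximation of its observable behavior.

```csharp
using System.Collections.Generic;
using System.Data;
using Dapper;

public static class MultipleExecutionSketch
{
    // Illustrative only: one command per element, results summed.
    public static int ExecuteOneByOne<T>(
        IDbConnection connection, string sql, IEnumerable<T> items)
    {
        int totalAffectedRows = 0;

        foreach (var item in items)
        {
            // Each call is a separate command and a separate round trip.
            totalAffectedRows += connection.Execute(sql, item);
        }

        return totalAffectedRows;
    }
}
```

With a cart of 500 items this means 500 commands sent to the server, which is where the latency cost comes from.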

But this approach sends every command as a single, stand-alone transaction, which may leave the data inconsistent if one or more statements fail. The workaround is to use an IDbTransaction object to create an explicit transaction that covers all the executions. Performance and scalability will still be worse than executing a single command that receives an array of objects (because of network latency and therefore longer transactions), but at least consistency will be guaranteed.
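A minimal sketch of that workaround follows. The `CartItem` record, the `CartRepository` class, and the `dbo.CartItems` table are hypothetical names used only for illustration, and `Microsoft.Data.SqlClient` is assumed as the provider.

```csharp
using System.Collections.Generic;
using Dapper;
using Microsoft.Data.SqlClient;

// Hypothetical shape of a cart row.
public record CartItem(int CartId, int ProductId, int Quantity);

public static class CartRepository
{
    public static void SaveCart(string connectionString, IEnumerable<CartItem> items)
    {
        using var connection = new SqlConnection(connectionString);
        connection.Open(); // BeginTransaction requires an open connection

        using var transaction = connection.BeginTransaction();
        try
        {
            // Still one command per item, but all inside the same transaction.
            connection.Execute(
                "INSERT INTO dbo.CartItems (CartId, ProductId, Quantity) " +
                "VALUES (@CartId, @ProductId, @Quantity)",
                items,
                transaction: transaction);

            transaction.Commit();
        }
        catch
        {
            // Any failure undoes every insert performed so far.
            transaction.Rollback();
            throw;
        }
    }
}
```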

But since Dapper supports SQL Server/Azure SQL’s Table-Valued Parameters and also JSON, my recommendation is to use one of those if you need to pass an array of values to a parameter. I’ll discuss them in future articles, so stay tuned.
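As a rough preview of the JSON option, the sketch below serializes the whole cart into one string and lets SQL Server unpack it with `OPENJSON`, so only a single command is sent. It assumes SQL Server 2016 or later (database compatibility level 130+), the `System.Text.Json` serializer, and the same hypothetical `dbo.CartItems` table and connection string as before.

```csharp
using System.Text.Json;
using Dapper;
using Microsoft.Data.SqlClient;

// Hypothetical connection string; adjust to your environment.
const string connectionString =
    "Server=.;Database=Shop;Integrated Security=true;TrustServerCertificate=true";

var cartItems = new[]
{
    new { CartId = 1, ProductId = 10, Quantity = 2 },
    new { CartId = 1, ProductId = 11, Quantity = 1 }
};

// One JSON array containing every row.
string json = JsonSerializer.Serialize(cartItems);

// OPENJSON turns the array back into a rowset on the server side.
const string sql = @"
INSERT INTO dbo.CartItems (CartId, ProductId, Quantity)
SELECT CartId, ProductId, Quantity
FROM OPENJSON(@json)
WITH (
    CartId    int '$.CartId',
    ProductId int '$.ProductId',
    Quantity  int '$.Quantity'
);";

using var connection = new SqlConnection(connectionString);

// A single parameter object, so this is a single execution.
connection.Execute(sql, new { json });
```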

Now, what if you have to pass an array of, say, 10,000 values or more? The right choice here is a bulk load, and more specifically with SQL Server/Azure SQL the BULK INSERT command, which is, unfortunately, not supported by Dapper natively. The workaround is to just use the regular SqlBulkCopy class and you’re done.
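A minimal sketch of the `SqlBulkCopy` route could look like this. The destination table, its columns, the generated sample rows, and the batch size are all assumptions chosen for illustration.

```csharp
using System.Data;
using Microsoft.Data.SqlClient;

// Hypothetical connection string; adjust to your environment.
const string connectionString =
    "Server=.;Database=Shop;Integrated Security=true;TrustServerCertificate=true";

// Stage the rows in a DataTable whose columns mirror the destination table.
var table = new DataTable();
table.Columns.Add("CartId", typeof(int));
table.Columns.Add("ProductId", typeof(int));
table.Columns.Add("Quantity", typeof(int));

for (int productId = 0; productId < 10_000; productId++)
{
    table.Rows.Add(1, productId, 1);
}

using var connection = new SqlConnection(connectionString);
connection.Open(); // SqlBulkCopy needs an open connection

using var bulkCopy = new SqlBulkCopy(connection)
{
    DestinationTableName = "dbo.CartItems",
    BatchSize = 5_000 // rows sent per batch; tune for your workload
};

// Map columns by name so the destination column order does not matter.
foreach (DataColumn column in table.Columns)
{
    bulkCopy.ColumnMappings.Add(column.ColumnName, column.ColumnName);
}

bulkCopy.WriteToServer(table);
```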

Conclusions

This feature can be nice if your database doesn’t offer any specific way to handle arrays of data as parameter values. Keep in mind that all values are sent as separate commands, and if you want to group them all in a single transaction you have to do it explicitly. As a general rule, if the database you’re using has specific support for dealing with arrays, use that feature instead of the multiple execution option.

What’s Next?

The next topic will be handling “Multiple Resultsets”.
